Seed AI Model: Revolutionizing Video Generation

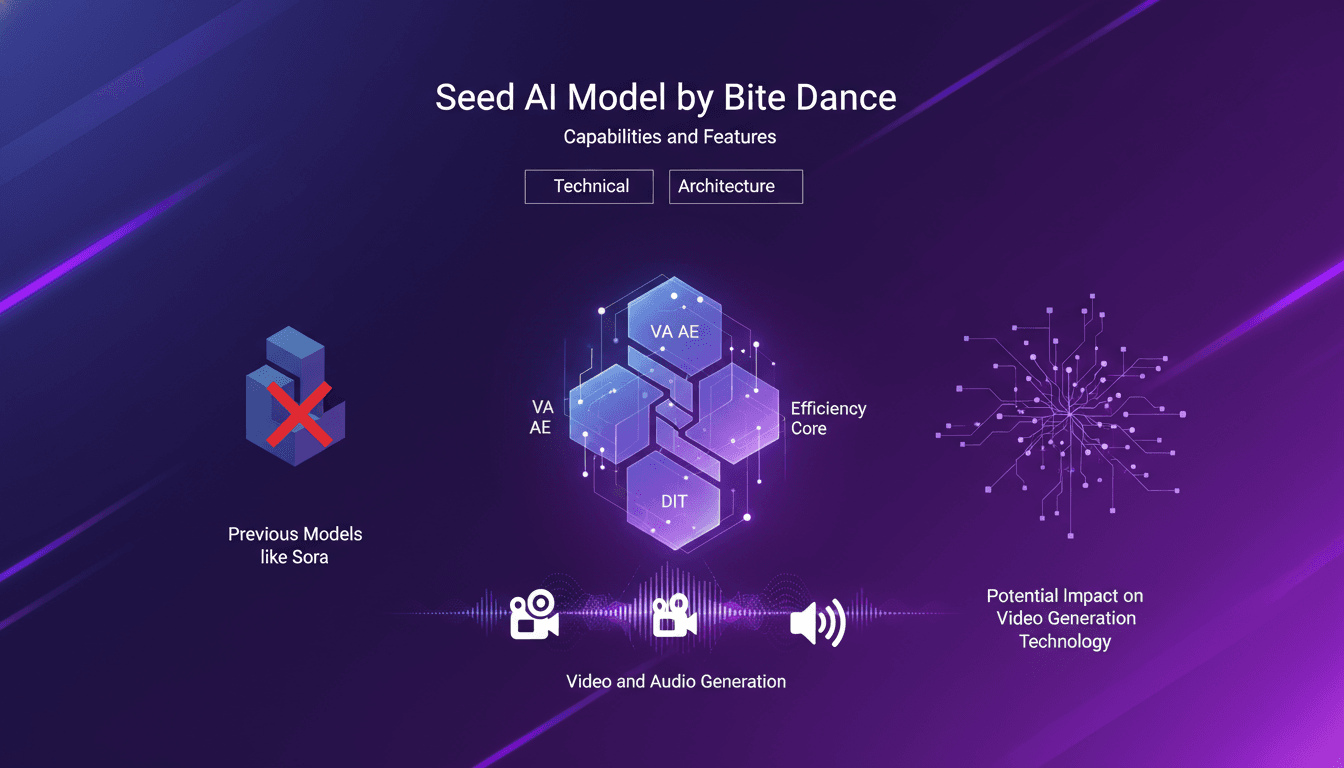

I dove into ByteDance's new Seed AI model, and let me tell you, it's not just another AI: it's a game changer in video generation. With its 7 billion parameters, Seed is designed to push the boundaries of video and audio creation. But before you get too excited, let's walk through what makes this model tick. First, it pairs a variational autoencoder (VAE) with a diffusion transformer (DiT), an architecture aimed at efficiency, which is crucial when we're talking about 665,000 H100 GPU hours. Then, we compare it to an earlier model, OpenAI's Sora. The difference is stark: Seed is geared to produce 720p HD videos with impressive speed and accuracy. But watch out, it's not a one-size-fits-all solution. You need to understand where Seed truly shines and how it might revolutionize video technology. Follow me, let's explore this together.

Introducing the Seed AI Model by ByteDance

ByteDance, the parent company of TikTok, recently launched an AI model named Seed, built for advanced video and audio generation. With 7 billion parameters, it offers impressive performance in multimedia creation. It's not just another model on the market; it's designed for both efficiency and high quality, a rare and valuable balance in the tech space.

The Seed model focuses on efficiency and high-quality video production, with the ability to fine-tune for various use cases. In my initial tests, I was struck by its ability to generate videos from images, create seamless transitions between scenes, and match videos with input audio. With 665,000 H100 GPU hours used for its construction, Seed is a technological showcase that deserves all our attention, especially considering its potential applications in the creative industry and beyond.

Capabilities and Features of the Seed Model

The Seed model can produce high-definition 720p videos at 24 frames per second, ideal for platforms like TikTok. Regarding audio synthesis, the model doesn't just generate videos; it also produces relevant soundtracks that perfectly align with the visual content. I've tested these features in various scenarios, and I must say, the audio-video synchronization is impressive.

The real-world applications are vast: from TikTok filters to promotional videos, and realistic simulations where sound and image must be perfectly in sync. This opens doors not only for content creators but also for industries requiring realistic simulations like automotive or gaming.

- 720p HD video generation

- Advanced audio synthesis

- Varied applications in creative industries
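To make "in sync" concrete at 24 frames per second, here's a minimal sketch of which audio samples play during a given video frame. This is not how Seed aligns audio internally (nothing above describes that); it's just the bookkeeping any player or editor does. The 48 kHz sample rate and the function name `audio_span_for_frame` are my own assumptions for illustration.

```python
def audio_span_for_frame(
    frame_index: int, fps: int = 24, sample_rate: int = 48_000
) -> tuple[int, int]:
    """Return the half-open [start, end) range of audio samples for one frame.

    Integer arithmetic keeps consecutive spans contiguous, so no drift
    accumulates even when sample_rate is not a multiple of fps.
    """
    if frame_index < 0:
        raise ValueError("frame_index must be non-negative")
    start = frame_index * sample_rate // fps
    end = (frame_index + 1) * sample_rate // fps
    return start, end


# 24 fps is the frame rate quoted above; 48 kHz is an ASSUMED audio sample rate.
first_frame = audio_span_for_frame(0)
one_second_in = audio_span_for_frame(24)
print(first_frame, one_second_in)
```

With these assumed numbers, each frame covers 2,000 audio samples, so being off by a single frame already means a shift of roughly 42 milliseconds between sound and image.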

Technical Architecture: VAE and DiT

Let's dive into the technical architecture of Seed. The model uses a variational autoencoder (VAE) and a latent diffusion transformer (DiT). These technologies aren't new, but their application in Seed is innovative. The VAE compresses the video into a smaller latent representation while preserving important features, whereas the DiT generates new content by iteratively denoising in that compressed latent space, which the VAE then decodes back into frames.

Diffusion transformer-based architectures enhance content generation quality while managing complexity. However, watch out: these systems can be resource-intensive, and deployment needs to be well-thought-out to avoid excessive computational costs. This is a lesson I've learned the hard way, orchestrating similar projects where balancing performance and cost is critical.

- Efficient compression with the VAE

- Advanced data generation with the DiT

- Balance between complexity and performance
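To see why compressing before generating matters, here's a rough sketch of the shape arithmetic for a VAE plus DiT pipeline. The 720p resolution and 24 fps come from the article; the compression factors, latent channel count, and patch size are assumptions I picked for illustration, not Seed's published configuration. The names `VideoShape`, `vae_latent_shape`, and `dit_token_count` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoShape:
    frames: int
    height: int
    width: int
    channels: int

    def num_values(self) -> int:
        """Total number of scalar values in a tensor of this shape."""
        return self.frames * self.height * self.width * self.channels


def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up."""
    return -(-a // b)


def vae_latent_shape(
    video: VideoShape, temporal_factor: int, spatial_factor: int, latent_channels: int
) -> VideoShape:
    """Shape of the latent a VAE encoder would hand to the DiT."""
    return VideoShape(
        frames=ceil_div(video.frames, temporal_factor),
        height=ceil_div(video.height, spatial_factor),
        width=ceil_div(video.width, spatial_factor),
        channels=latent_channels,
    )


def dit_token_count(latent: VideoShape, patch_size: int) -> int:
    """Number of tokens if the DiT cuts each latent frame into square patches."""
    rows = ceil_div(latent.height, patch_size)
    cols = ceil_div(latent.width, patch_size)
    return latent.frames * rows * cols


# Five seconds of 720p video at 24 fps (figures from the article).
clip = VideoShape(frames=5 * 24, height=720, width=1280, channels=3)

# ASSUMED values, chosen only to illustrate the idea.
latent = vae_latent_shape(clip, temporal_factor=4, spatial_factor=8, latent_channels=16)
tokens = dit_token_count(latent, patch_size=2)
compression = clip.num_values() / latent.num_values()

print(latent, tokens, compression)
```

Under these assumed factors, the transformer attends over about a hundred thousand tokens instead of hundreds of millions of raw pixel values. That's the whole point of doing diffusion in latent space: attention cost grows quickly with sequence length, so every bit of compression the VAE provides pays off downstream.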

Efficiency and Computational Resources

The Seed model required 665,000 H100 GPU hours for development, which is massive. But what does this number mean for us practitioners? First, it underscores the importance of computational resources in developing models of this magnitude. Second, it pushes us to think about the efficiency of our infrastructure: how can we optimize GPU usage to maximize output quality while minimizing costs?

In my projects, I've often opted for smaller models for rapid iterations and frequent adjustments. However, Seed offers a powerful solution where quality trumps speed. Keep in mind that scaling costs can become prohibitive if not properly planned. This is where experience and good resource orchestration play a crucial role.
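To put 665,000 GPU hours in perspective, here's a quick back-of-the-envelope calculation. Only the GPU-hour figure comes from the article. The cluster size and the hourly price are placeholders I made up, so swap in your own numbers. The sketch also assumes perfect scaling across GPUs, which real training runs never quite achieve.

```python
GPU_HOURS = 665_000  # figure quoted in the article


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days needed if the work is spread evenly over num_gpus (ideal scaling)."""
    if num_gpus <= 0:
        raise ValueError("num_gpus must be positive")
    return gpu_hours / num_gpus / 24


def rental_cost(gpu_hours: float, price_per_gpu_hour: float) -> float:
    """Total cost at a flat hourly rate per GPU."""
    return gpu_hours * price_per_gpu_hour


# ASSUMPTIONS for illustration only: not ByteDance's cluster, not a quoted price.
ASSUMED_CLUSTER_SIZE = 1_000
ASSUMED_PRICE_USD_PER_GPU_HOUR = 2.0

days = wall_clock_days(GPU_HOURS, ASSUMED_CLUSTER_SIZE)
cost = rental_cost(GPU_HOURS, ASSUMED_PRICE_USD_PER_GPU_HOUR)

print(f"{days:.1f} days on {ASSUMED_CLUSTER_SIZE} GPUs")
print(f"${cost:,.0f} at ${ASSUMED_PRICE_USD_PER_GPU_HOUR:.2f} per GPU hour")
```

With those placeholder inputs, that's about four weeks of wall-clock time on a thousand GPUs and a seven-figure bill. The exact numbers will differ for your setup, but the order of magnitude is what matters when planning.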

Applications and Comparison with Sora

In terms of applications, Seed excels in generating high-quality content in both video and audio. Compared to previous models like Sora, Seed offers notable improvements in smoothness and realism. However, not everything is perfect: there are still limitations, especially in handling complex scenes or very rapid transitions.

So the question is: when to use Seed? For projects where visual and sound quality is critical, Seed is a wise choice. But for tasks requiring speed and flexibility, other models might be more suitable. This assessment will always depend on the context and specific needs of your project.

- Improvements over Sora

- Limitations in handling complex scenes

- Potential impact on the future of video technology

So, what do I take away from the Seed AI model by ByteDance? First, with its 7 billion parameters, it's really reshaping video and audio generation. It's a major leap forward (a real game changer), but watch out, using it requires a deep understanding of resource consumption: 665,000 H100 GPU hours is no joke! Then, producing 720p HD videos is great, but you need to ensure it fits smoothly into your workflow without blowing up costs or time. Finally, its VAE and DiT architecture is efficient, but it calls for some solid technical chops to fully leverage. Looking ahead, I think we'll see more of these models taking the stage, but we have to stay sharp about the trade-offs involved. I encourage you to check out the original video to really grasp how this model works and how it might transform your video projects. Watch it here: https://www.youtube.com/watch?v=Gn2HlDfdCOA.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).
