StepFun AI Models: Efficiency and Future Impact

I dove headfirst into StepFun AI's ecosystem, curious about its text-to-video capabilities. Right off the bat, two numbers stood out: 30 billion parameters, and the ability to generate videos up to about 200 frames long. But there's a catch: you'll need 80 GB of GPU memory to run the Step-Video-T2V model. Comparing it with other models, I found real trade-offs, but also potential that could redefine the sector. So what makes StepFun AI special? I'll take you behind the scenes to dissect its performance and its ambitions. Its relatively low profile and recent hiring push make it a player to watch closely. Let's see whether StepFun could become the next DeepSeek.

Understanding StepFun AI and Its Models

StepFun AI, hailing from China, has taken the AI world by storm, seemingly appearing out of nowhere with state-of-the-art models. Among these, the Step-Video-T2V model stands out with its 30 billion parameters. This isn't luck but a deliberate strategy: StepFun AI is positioning itself aggressively against established models such as GPT and Moshi, and it leverages open source to maximize its reach.

With a 30-billion-parameter video model, StepFun AI showcases impressive video generation capabilities and aims to redefine industry standards. Its commercial approach relies on open-source licensing (MIT for video, Apache 2.0 for audio). That may look risky, but it encourages rapid adoption. In short, StepFun is betting on quality and accessibility.

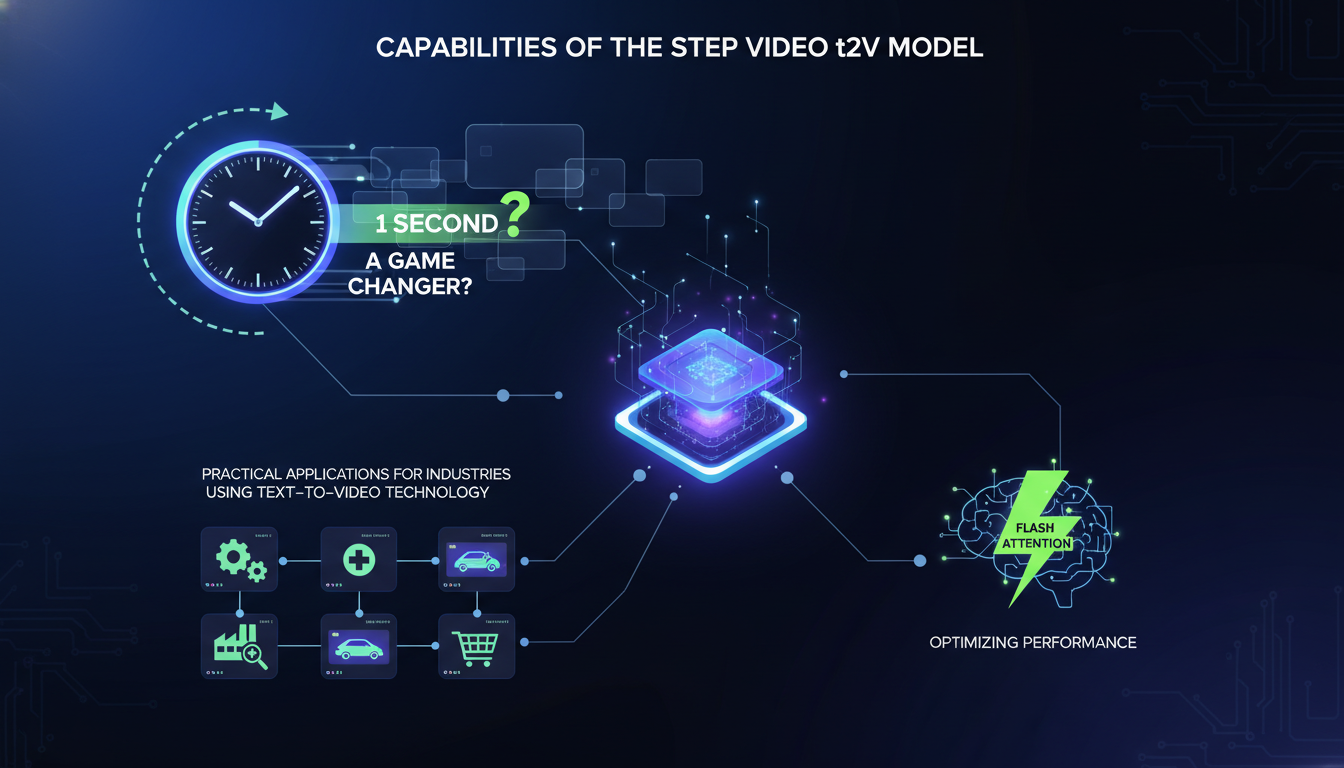

Capabilities of the Step-Video-T2V Model

Imagine generating a video clip of up to about 200 frames from a text prompt. That's what the Step-Video-T2V model offers, and for creative sectors like film and advertising, it's a game changer. I've seen plenty of promising solutions crumble under high expectations, but this one holds up, helped by FlashAttention. FlashAttention computes exact attention in tiles that fit in fast on-chip GPU memory, so it cuts memory traffic and speeds up generation without approximating the result.
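FlashAttention's core idea can be shown in plain Python. This is a minimal sketch, not the actual fused GPU kernel and not StepFun's code. Attention is computed block by block with an "online" softmax that keeps only a running maximum, a running sum and a running output, so the full row of attention scores is never stored at once. The function names and the `block_size` parameter are hypothetical and chosen for illustration. The point is that the tiled result matches standard attention.

```python
import math


def naive_attention(q, keys, values):
    """Standard attention for a single query: softmax(q.k / sqrt(d)) applied to values."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def tiled_attention(q, keys, values, block_size=2):
    """Same result, but processes keys/values in blocks with an online softmax.

    Only a running max, a running sum of exponentials and a running weighted
    output are kept. This mirrors, in miniature, how FlashAttention avoids
    materializing the full attention matrix.
    """
    d = len(q)
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated so far to the new maximum
        # (math.exp(-inf) == 0.0, so the first block starts from zero).
        scale = math.exp(running_max - new_max)
        running_sum *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


if __name__ == "__main__":
    q = [0.5, -1.0]
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5], [0.3, 0.3]]
    values = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0], [2.0, 2.0], [-1.0, 0.0]]
    print(naive_attention(q, keys, values))
    print(tiled_attention(q, keys, values, block_size=2))
```

Both calls print the same vector up to floating-point rounding. A real kernel applies this trick to whole matrices of queries on the GPU, and that is where the memory and speed savings come from.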

The applications are vast: dynamic content creation, realistic simulations, and richer experiences in video games. However, beware of over-relying on this power without assessing whether your project actually needs it. Sometimes it's wiser to keep things simple than to bet everything on the latest tech.

- Generates videos up to about 200 frames long

- Uses FlashAttention to speed up inference

- Applications in film, advertising, and gaming

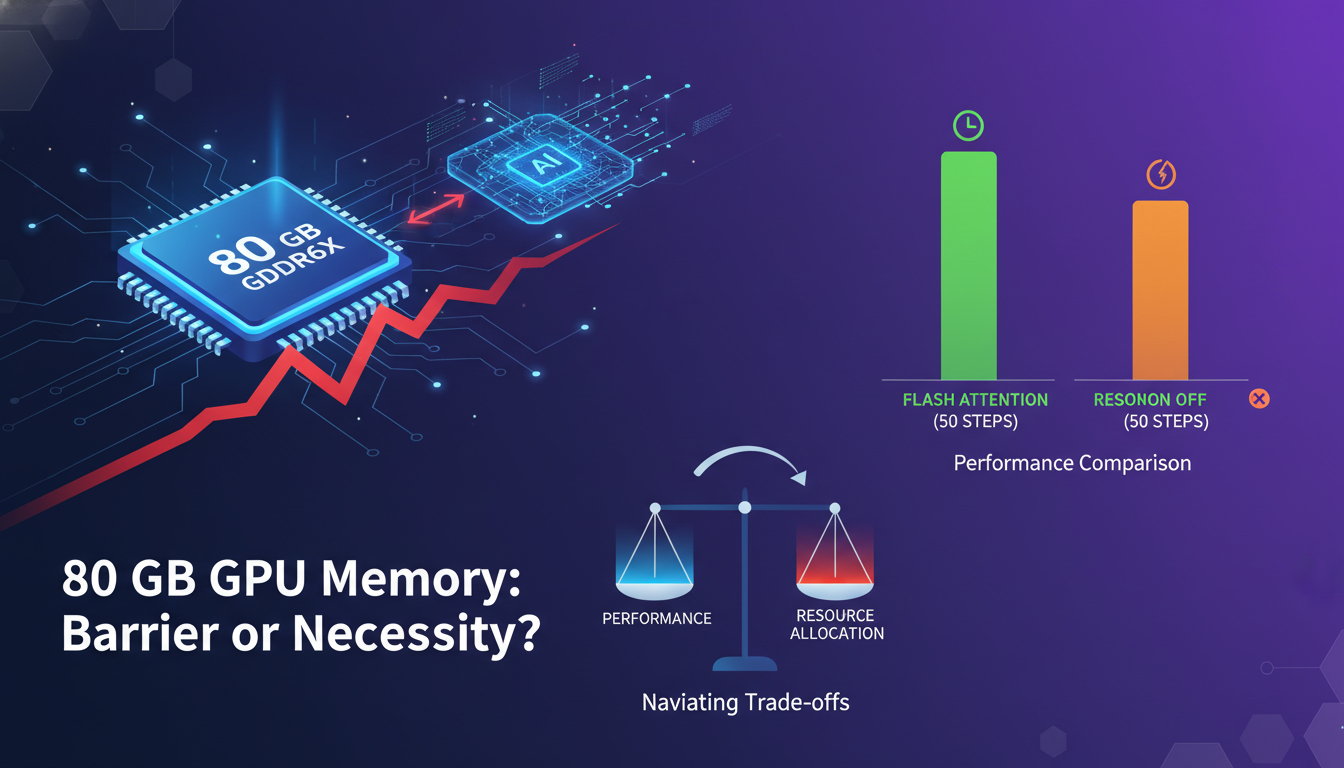

Technical Requirements and Performance Metrics

Let's get technical. Running the Step-Video-T2V model requires 80 GB of GPU memory. That's a lot: for many teams it's a real barrier, and it's the price of this level of output. FlashAttention helps on the speed side, cutting generation time by roughly 40%, from 1,232 seconds to 743 seconds for 50 sampling steps.
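A quick back-of-envelope check puts these numbers in context. This minimal sketch assumes the weights are stored in bf16 (2 bytes per parameter), which is an assumption and not a figure from StepFun. It counts only the 30 billion parameters and ignores text encoders, the VAE and activations, which is why the real requirement is higher. It then turns the 1,232 s and 743 s timings into a speedup and a per-step time. The helper names are hypothetical.

```python
GIB = 1024 ** 3  # bytes per GiB


def weight_memory_gib(num_params, bytes_per_param):
    """Memory needed just to hold the model weights, in GiB."""
    return num_params * bytes_per_param / GIB


def speedup_stats(baseline_s, optimized_s, steps):
    """Speedup factor, percent of time saved, and seconds per step before/after."""
    speedup = baseline_s / optimized_s
    saved_pct = 100 * (baseline_s - optimized_s) / baseline_s
    return speedup, saved_pct, baseline_s / steps, optimized_s / steps


if __name__ == "__main__":
    params = 30e9  # 30 billion parameters, as quoted in the article
    print(f"bf16 weights: {weight_memory_gib(params, 2):.1f} GiB")  # 55.9 GiB
    print(f"fp32 weights: {weight_memory_gib(params, 4):.1f} GiB")  # 111.8 GiB
    speedup, saved, before, after = speedup_stats(1232, 743, steps=50)
    print(f"{speedup:.2f}x faster, {saved:.1f}% less time")  # 1.66x faster, 39.7% less time
    print(f"{before:.2f} s/step -> {after:.2f} s/step")  # 24.64 s/step -> 14.86 s/step
```

Under these assumptions, the bf16 weights alone fill most of an 80 GB card, and fp32 weights would not fit at all. That is consistent with the memory figure quoted in the article.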

That said, every project has its own requirements, and a lighter solution may be enough for yours. Evaluate cost-effectiveness before investing in such powerful hardware, and consider alternatives like the Turbo model, which is faster but may produce lower-quality output.
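One way to run this cost-effectiveness check is to filter candidate GPUs by memory and rank them by cost per clip. This is a minimal sketch. The GPU names and hourly prices are hypothetical placeholders, not real quotes. The 80 GB requirement and the 743 s generation time come from the figures quoted in this article. Swap in your own provider's prices and your own measured timings.

```python
from dataclasses import dataclass

REQUIRED_MEMORY_GB = 80  # GPU memory quoted for the Step-Video-T2V model
SECONDS_PER_CLIP = 743   # 50 steps with FlashAttention, as quoted in the article


@dataclass
class GpuOption:
    name: str               # hypothetical label
    memory_gb: float
    hourly_rate_usd: float  # hypothetical price; replace with a real quote


def cost_per_clip(option, seconds=SECONDS_PER_CLIP):
    """Rental cost of generating one clip on this GPU, in USD."""
    return option.hourly_rate_usd * seconds / 3600


def viable_options(options, required_gb=REQUIRED_MEMORY_GB):
    """Keep GPUs with enough memory, cheapest per clip first."""
    fits = [o for o in options if o.memory_gb >= required_gb]
    return sorted(fits, key=cost_per_clip)


if __name__ == "__main__":
    candidates = [
        GpuOption("gpu-a", 80, 2.50),
        GpuOption("gpu-b", 48, 1.00),
        GpuOption("gpu-c", 96, 3.20),
    ]
    for gpu in viable_options(candidates):
        print(f"{gpu.name}: ${cost_per_clip(gpu):.2f} per clip")
```

With these placeholder numbers, `gpu-b` is filtered out for lack of memory, and `gpu-a` comes out cheapest per clip. The sketch also assumes the same generation time on every card, which is a simplification, so measure on your own hardware.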

StepFun AI vs Other AI Models

Let's compare StepFun AI with other heavyweights like GPT, Moshi, and P Labs. Each has its strengths and weaknesses. StepFun AI's open-source approach, with the MIT license for video and Apache 2.0 for audio, can be a significant advantage for rapid adoption and collaboration.

StepFun AI's models are praised for their quality, although they are not yet on par with leaders like P Labs. Their open-source strategy could close that gap quickly, though. The key will be to watch how they improve their models while staying accessible and collaborative.

- Open-source licenses: MIT and Apache 2.0

- Comparison with GPT, Moshi, and P Labs

- Potential for rapid growth due to open-source

Future Outlook and Industry Impact

StepFun AI isn't stopping there. Its recent recruitment campaigns clearly signal ambitions for growth. As a practitioner, I'm reminded of my early days in the industry, when innovation was everything. StepFun is poised to disrupt the AI landscape, and businesses that adopt its models could gain a significant competitive edge.

StepFun's advancements could push other players to rethink their strategies. For businesses, these developments belong in strategic planning: adopting these technologies can improve efficiency and also open new business opportunities.

- Visible growth ambitions through recruitment

- Possible disruptions in the AI market

- Strategic considerations for adopting StepFun models

In conclusion, StepFun AI is a player to watch closely. Its bold approach and cutting-edge technology could well redefine the rules of the game. But as always, don't be dazzled by novelty: assess your needs carefully before diving into StepFun's universe.

I dove into StepFun AI, and honestly, it's not just another player in the market; it's a potential disruptor. Its 30-billion-parameter models are powerful, but they come with hefty resource demands: 80 GB of GPU memory is not trivial. I manage workflows daily, and the concrete payoff is video clips of up to about 200 frames. That's impressive, but keep an eye on the resource/performance trade-off.

- StepFun AI is incredibly powerful but demands significant resource investment.

- Generating clips of up to about 200 frames is impressive, but it is a memory hog.

- Compared to other models, StepFun AI stands out but requires careful cost/benefit analysis.

Looking forward, I'm optimistic but cautious: this could be a game changer, with caveats around scalability. Stay tuned to see how StepFun AI evolves and how you can use it in your projects.

I recommend watching the full video for deeper insights: YouTube link.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture, which he now applies for large organizations (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).