Exploring DeepSeek R1: Reasoning AI in Action

I dove into DeepSeek's R1-Lite-Preview model, eager to see how it stacks up against OpenAI's o1-preview. Spoiler: there are some surprises! I didn't expect R1 to do as well as it did at math problem-solving and coding. Its reasoning capabilities raise the bar for what we can expect from AI. But there are limits. Base64 decoding, for instance, exposed unexpected problems with model hallucinations. Then there's test-time compute scaling, which can quickly become a resource drain. Still, if you want to explore what reasoning models can do, R1 is a must-try. Don't underestimate it, but be aware of its constraints.

I dove headfirst into DeepSeek's R1-Lite-Preview model, eager to see if it could rival OpenAI's o1-preview. Spoiler alert: I was in for some surprises. DeepSeek promises a lot in the realm of reasoning models, but how does R1 actually perform on real-world tasks like math and coding? I ran a series of tests, notably Base64 encoding and decoding, and that's where R1 showed both its strengths and its weaknesses. Model hallucinations, for instance, were a real headache. Then there's compute scaling at test time, which can quickly become a black hole (trust me, I've been burned before). Despite all this, R1 is far from a gimmick. If you want to push reasoning models to their limits, it's a must-try, but keep an eye on its constraints.

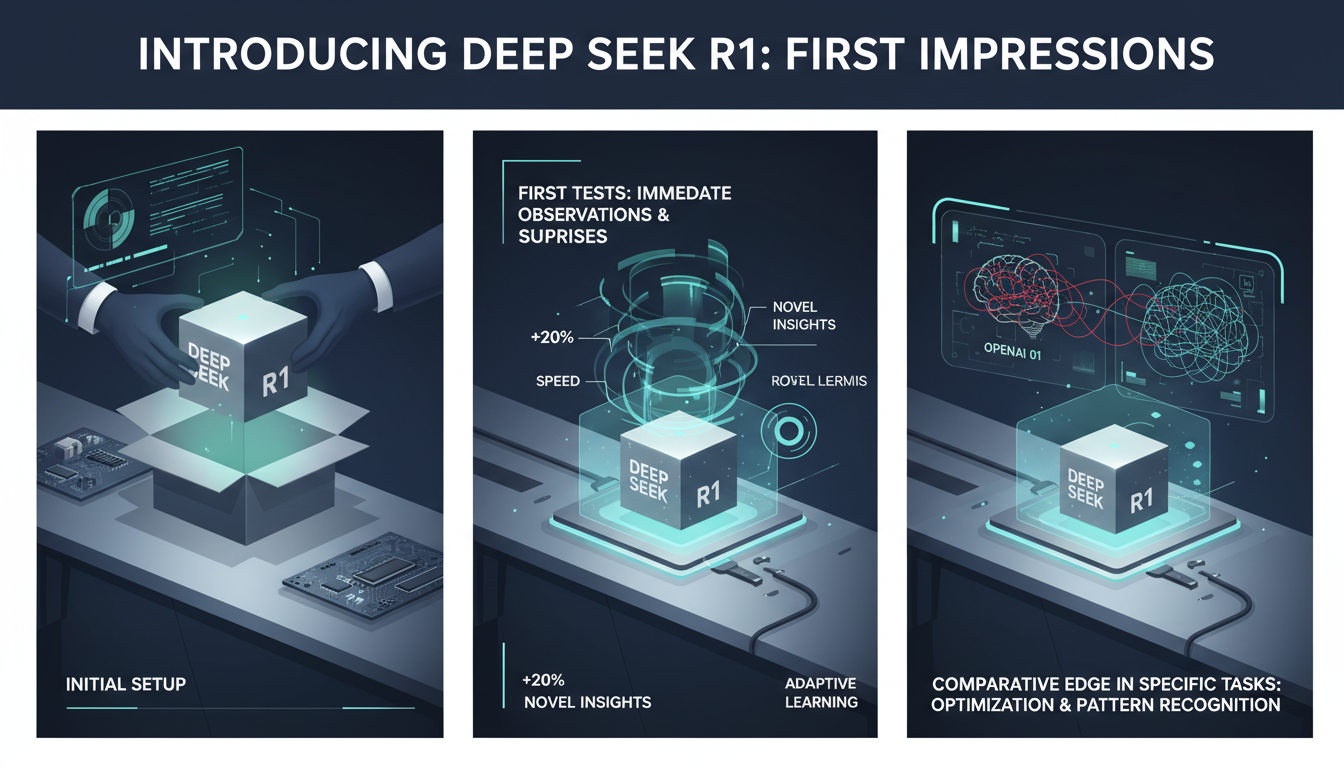

Introducing DeepSeek R1: First Impressions

Unboxing DeepSeek R1 took me back to my early AI days. It's intuitive and quick to get started with, and the surprises began with the very first tests. Seeing its thought process laid out transparently, in real time, is a real advantage. Compared to OpenAI o1, R1 shines on specific tasks. But beware, it's not perfect. Its comparison score of 3.5 against OpenAI o1 points to real potential, but also to limitations. For developers, the practical takeaway is immediate: a powerful tool, but one to handle with care.

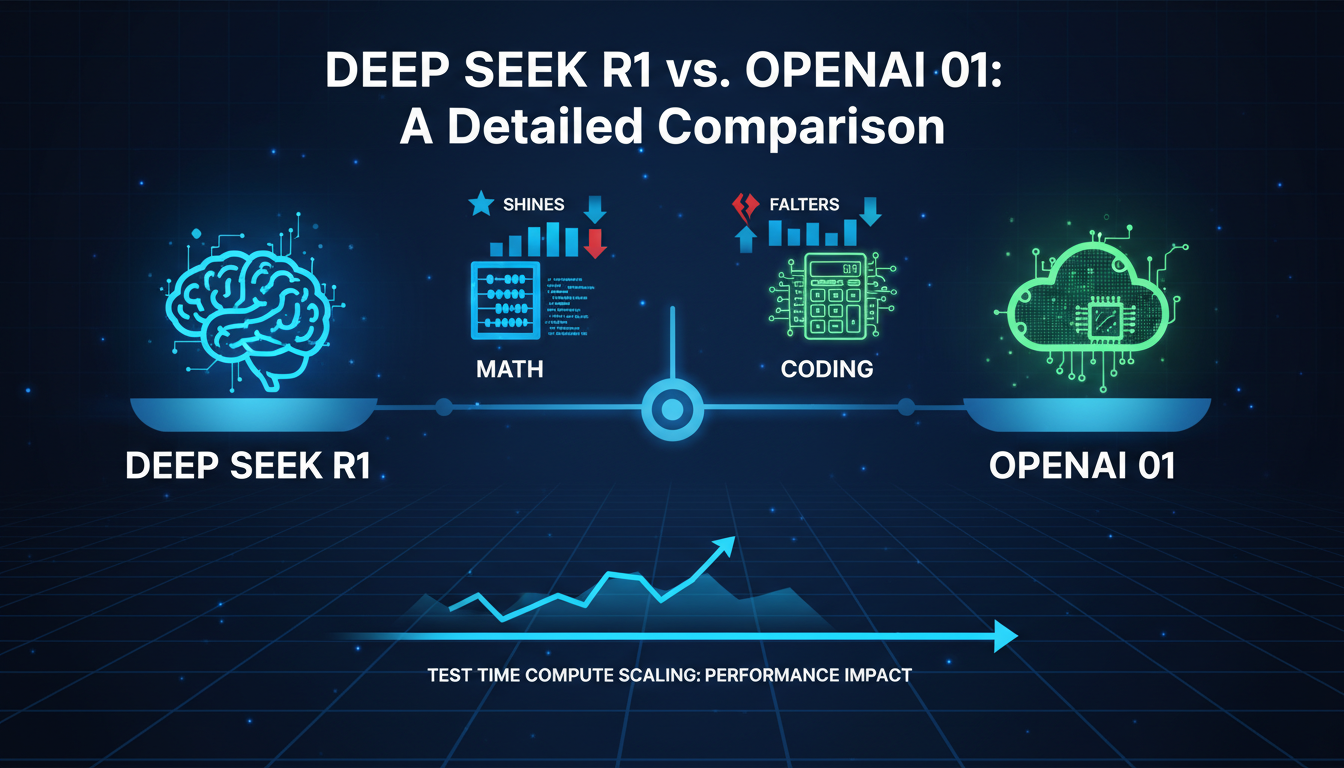

DeepSeek R1 vs. OpenAI o1: A Detailed Comparison

Pitting DeepSeek R1 against OpenAI o1 reveals significant differences, particularly in math and coding. R1 excels where OpenAI o1 stumbles, but it has its own weaknesses. Understanding test-time compute scaling is key here: the model spends more compute at inference time (longer reasoning chains, or more sampled candidate answers) in exchange for better answers, so each response takes longer. It's a trade-off between speed and precision. For workflows, this means more accurate results at a potential cost in time or resources.

- Mathematics: DeepSeek R1 often outperforms OpenAI o1.

- Coding: A slight edge for R1, by a margin of 0.3%.

- Compute Scaling: More efficient but watch resource consumption.
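The trade-off behind test-time compute scaling can be made concrete with a small calculation. The sketch below is an illustration under simplifying assumptions, not a model of how R1 or o1 work internally: it assumes each sampled answer is independently correct with probability `p`, treats the vote as binary (right vs. wrong), and uses made-up values for `p` and tokens per sample. The helper names `majority_vote_accuracy` and `sampling_cost` are hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Assumption: each sample is correct with probability p, independently,
    and the vote is binary right/wrong. Real plurality voting over many
    distinct wrong answers behaves differently.
    """
    need = n // 2 + 1  # smallest strict majority of n votes
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


def sampling_cost(n: int, tokens_per_sample: int) -> int:
    """Test-time compute grows linearly: n samples cost n times the tokens."""
    return n * tokens_per_sample


if __name__ == "__main__":
    # Hypothetical numbers for illustration, not measurements of R1 or o1.
    p, tokens = 0.6, 2_000
    for n in (1, 3, 5, 9):
        acc = majority_vote_accuracy(p, n)
        print(f"n={n}: accuracy~{acc:.3f}, tokens={sampling_cost(n, tokens)}")
```

Under these assumptions, going from 1 to 5 samples with `p = 0.6` lifts accuracy from 0.6 to about 0.683 while multiplying the token bill by five, which is the speed-versus-precision balance described above.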

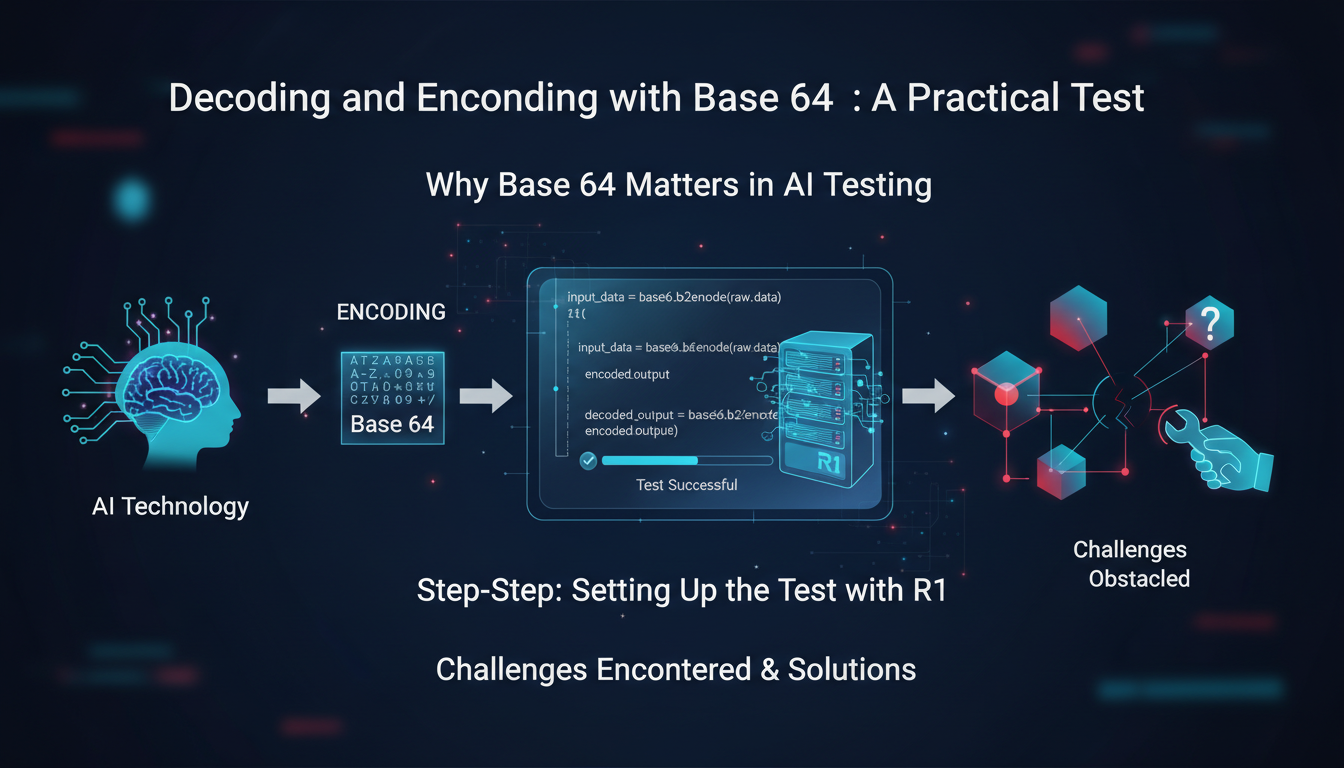

Decoding and Encoding with Base64: A Practical Test

Base64 decoding is a useful stress test for reasoning models, because every character of the output must be reproduced exactly, so I set up a concrete test with R1. The process wasn't without challenges: I had to deal with decoding errors and model hallucinations, and accuracy was sometimes questionable. By tweaking parameters, though, I improved the results and reduced errors. In this test, the model took 5 seconds to decode and 7 seconds to process.

- Accuracy: A mix of hits and misses.

- Processing Time: 5 seconds to decode, 7 to process.
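Because Base64 has an exact, deterministic answer, hallucinations are easy to catch by checking the model's output against Python's standard `base64` module. A minimal sketch of such a harness follows; `encode` and `check_decoding` are hypothetical helpers, and the model answers are invented placeholders rather than actual R1 outputs.

```python
import base64


def encode(text: str) -> str:
    """Produce the Base64 payload to put in the prompt."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def check_decoding(encoded: str, model_answer: str) -> bool:
    """Compare a model's claimed decoding against the exact ground truth."""
    # validate=True rejects characters outside the Base64 alphabet.
    truth = base64.b64decode(encoded, validate=True).decode("utf-8")
    return model_answer.strip() == truth


if __name__ == "__main__":
    secret = "Reasoning models still hallucinate."
    payload = encode(secret)
    print(payload)
    # Hypothetical model outputs: one exact, one with a hallucinated word.
    print(check_decoding(payload, "Reasoning models still hallucinate."))  # True
    print(check_decoding(payload, "Reasoning models rarely hallucinate."))  # False
```

An exact string comparison is deliberately strict: a single substituted word counts as a failure, which is how you want to score a decoding task.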

Majority Voting and Chain of Thought: Enhancing AI Responses

Chain of Thought is a fascinating concept. To build on it, I used majority voting over five candidate responses: several reasoning chains are generated, and the most common final answer wins. It's a trade-off between accuracy and processing time. Too much computation hurts efficiency, and sometimes less is more when speed matters. To optimize response quality, fine-tune the number of candidates and the time allocated to them.

- Accuracy: Improved with majority voting.

- Time: A compromise is necessary.
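Majority voting itself is simple once you have each candidate's final answer. The sketch below assumes those final answers have already been extracted from the five reasoning chains as strings (the extraction step, and the `majority_vote` name, are assumptions for illustration); it normalizes whitespace and case, then picks the most frequent answer and reports its share of the votes.

```python
from collections import Counter


def majority_vote(candidates: list[str]) -> tuple[str, float]:
    """Return the most common final answer and the fraction of votes it got."""
    if not candidates:
        raise ValueError("need at least one candidate")
    # Normalize so that "42" and " 42 " count as the same answer.
    normalized = [c.strip().lower() for c in candidates]
    answer, votes = Counter(normalized).most_common(1)[0]
    return answer, votes / len(normalized)


if __name__ == "__main__":
    # Five hypothetical final answers, standing in for five sampled chains of thought.
    answers = ["42", "42", "41", "42", " 42 "]
    print(majority_vote(answers))  # ('42', 0.8)
```

`Counter.most_common` breaks ties in favor of the answer encountered first, so with a split such as 2-2-1 the earlier candidate wins; you may prefer to flag such low-agreement cases rather than resolve them silently.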

Applications and Limitations of Reasoning Models

Reasoning models like R1 have enormous potential in real-world scenarios, but they have limits. Deployment costs can be high, and sometimes they fail on tasks where a simpler solution would suffice. These models will keep evolving, but watch out for costs and technical limits. To integrate them effectively into your workflows, you need to understand both their capabilities and their restrictions.

- Applications: Numerous but require fine-tuning.

- Limits: Cost and technical complexity.

- Future: Promising with constant updates.

When I dove into DeepSeek R1, I found some compelling advantages, but also some challenges. Here's what stood out:

- First, generating 5 candidate responses for majority voting is powerful, but watch out for test-time compute scaling: it can get tricky.

- Next, with a comparison score of 3.5 against OpenAI's o1 model, R1 shows strong potential, especially in math and coding.

- However, the preview caps daily usage (I had 48 messages left for the day), so manage your usage carefully to avoid hitting the limit.

I'm convinced DeepSeek R1 can be a game changer in your AI toolkit, but you've got to know its boundaries. Ready to dive into reasoning AI? Start experimenting with DeepSeek R1 and see how it can transform your projects. For a deeper dive, check out the full video: The NEW REASONING AI you shouldn't ignore!!


Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Kokoro TTS: The New King of Text-to-Speech

I stumbled upon Kokoro TTS while searching for a robust, cost-effective Text-to-Speech solution. Unlike the overhyped options that drain your budget, Kokoro offers a refreshing alternative with its Apache 2.0 license. In this comparison with 11 Labs, I explain why Kokoro might be your next go-to tool. With 10 unique voice packs and an impressive ranking on the Hugging Face TTS Arena leaderboard, Kokoro doesn't just promise—it delivers. I dive into its technical specs, use cases, and implementation ease to show you how to integrate it effectively into your projects.

Kokoro TTS: Leading Open Source Text-to-Speech

I stumbled upon Kokoro TTS while hunting for a free alternative to pricey text-to-speech solutions like ElevenLabs. This open-source model isn't just a knockoff; it’s a genuine game changer in the TTS landscape. Packed with 82 billion parameters and an Apache 2.0 license, it's ideal for commercial applications. I compare its performance with ElevenLabs, especially in emotional expressiveness and pronunciation accuracy. You can easily integrate it into your projects thanks to its user-friendly nature and unique voice packs. Join me as we explore how this model can transform your audio applications.

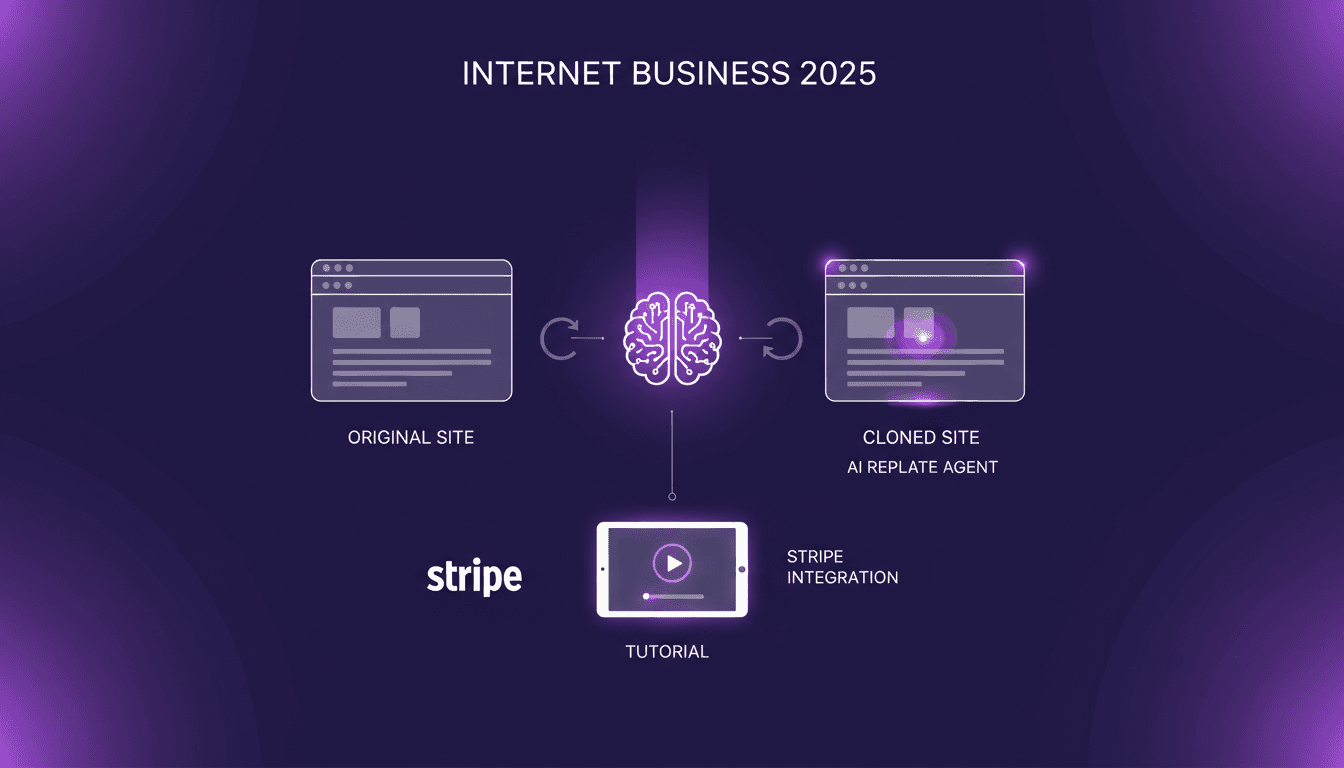

Cloning Websites with AI: A 2025 Practical Guide

I still remember the first time I cloned a website using AI. Ten minutes—that's all it took for me to be blown away by the efficiency. In 2025, cloning websites isn't just a developer's game anymore. With tools like Replate Agent, even non-technical founders can quickly and cost-effectively build robust websites. From Stripe integration for payment processing to self-hosting, it feels like you're wielding pro-level tools. In this guide, I walk you through how to leverage these technologies to start your internet business. Watch out, the efficiency is real, but avoid the traps that can cost you in performance.

Launch Your SaaS Without Code Using Data Button

Ever been stuck paying for tools that promise the world but deliver little? I was there too, until I discovered how to launch a SaaS product without writing a single line of code, using Data Button and Firebase. First, I set up Firebase authentication for social logins. Then, I connected my app to Data Button, and it changed everything. Building your startup is about orchestration. Launch without breaking the bank, and let me show you how I turned this idea into reality.

Clone Websites with AI in 2025: A Practical Guide

I remember the first time I cloned a website in under ten minutes. Yes, it was 2025, and I was using Replet Agent, an AI tool that made the process incredibly smooth. Gone were the days of relying on expensive development agencies. In this article, I'll show you how I did it. I'll guide you through integrating essential features like Stripe and encourage you to focus your energy on creation rather than technical complexities.