AI Exploration: 10 Years of Progress and Limits

Ten years ago, when I dove into AI, things were quite different: we were barely scratching the surface of what deep learning could achieve. Today, I'm orchestrating AI projects that seemed like science fiction back then. The decade has brought staggering advances, from the AI capabilities of ten years ago to recent breakthroughs in text prediction. Despite these strides, though, challenges remain and technical limits persist. In this exploration, I'll take you through the experiments, trials, and errors that paved the way, and look ahead at where AI is going.

A decade ago, the idea that deep learning could one day transform our workflows still felt like science fiction. Today, I'm steering AI projects that push the boundaries of what we thought possible. First observation: ten years ago, AI was in its adolescence, full of potential but held back by the technology of the time. Fast forward through the last three years of experimenting (and getting burned), and text prediction has become a fascinating playground, with breakthroughs that are redefining our digital interactions. Each advance, however, brings its own technical challenges, and they need to be tackled with discernment. Here, I'll walk you through this evolution, from our early deep learning stumbles to future prospects, without forgetting the lessons learned along the way. Get ready to revisit a decade of innovation and imagine what the next step might be.

Historical AI Capabilities: A Decade in Review

Ten years ago, getting an AI system to reliably tell a dog from a cat outside a research lab was still hard work. The limits in computing power and algorithm efficiency were glaring. For those of us in the trenches, every project felt like climbing a mountain with primitive tools. I recall working on an image classification system that, despite all our efforts, struggled to reach acceptable accuracy. The challenges were numerous: scarce data, basic algorithms, and machines that overheated more than they computed.

It was during those years, though, that key advances started to emerge, such as the first deep learning models, which carried immense promise. The road was long, however, and failures were frequent. I often got burned by promises of quick results and ended up spending sleepless nights debugging Python scripts that stubbornly refused to work.

- AI capabilities were limited by computing power.

- Basic algorithms were ineffective for complex tasks.

- Early advances in deep learning paved the way for future developments.

Deep Learning: Unlocking Potential and Facing Limits

Deep learning truly transformed AI development. Instead of spending hours hand-engineering features, we could finally let models learn them from the data. It was a time of great excitement, but also of caution: I quickly realized that, despite its potential, deep learning had its own constraints. One of the biggest traps was the overblown enthusiasm around the technology. Many thought it would solve every AI problem, but in practice it delivered only in a limited range of application contexts.

In my own experiments, I was often surprised by how much input data quality mattered: even a handful of mislabeled examples could badly degrade results. Once the data pipeline was under control and the models were properly tuned, however, some unexpected results emerged. For example, while playing with recurrent neural networks, I discovered text prediction capabilities I hadn't anticipated.

- Deep learning replaced manual feature extraction with automated learning.

- There are still limits in real-world application contexts.

- Data quality is crucial for accurate results.
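The data-quality lesson above lends itself to a cheap sanity check. The sketch below is a hypothetical helper, not something taken from the projects described here: it assumes a text dataset stored as (input, label) pairs and flags any input that appears more than once with different labels, which is one simple symptom of mislabeling. The function name `find_conflicting_labels` and the normalization rule (lowercasing and collapsing whitespace) are illustrative assumptions.

```python
from collections import defaultdict


def find_conflicting_labels(examples):
    """Return inputs that appear more than once with different labels.

    Hypothetical helper. `examples` is an iterable of (input_text, label)
    pairs. Inputs are normalized (lowercased, whitespace collapsed) so that
    trivially different copies of the same input are compared together.
    """
    labels_by_input = defaultdict(set)
    for text, label in examples:
        key = " ".join(text.lower().split())
        labels_by_input[key].add(label)
    # Keep only inputs that received more than one distinct label.
    return {
        key: sorted(labels)
        for key, labels in labels_by_input.items()
        if len(labels) > 1
    }


if __name__ == "__main__":
    data = [
        ("great product", "positive"),
        ("Great  product", "negative"),  # same input after normalization, conflicting label
        ("terrible service", "negative"),
    ]
    print(find_conflicting_labels(data))
```

A check like this only catches exact duplicates after normalization; near-duplicates and systematically wrong labels still call for other approaches, such as manual review of a sample.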

Experimentation in AI: Trial, Error, and Breakthroughs

Experimentation is at the heart of progress in AI. Every trial, successful or not, brings its own set of lessons. Some of my many attempts led to breakthroughs, particularly in text prediction. One project in particular taught me the importance of balancing innovation with practical application: while testing different text generation algorithms, I found that the most innovative solutions weren't always the most efficient in terms of cost and time.

I also learned from my mistakes, particularly in underestimating the complexity of integrating new models into existing systems. It's a constant challenge to adapt theoretical advances to operational constraints. But every failure is an opportunity to learn and refine one's strategy. And when an experiment works, the business impact is direct and palpable.

- Experimentation is essential for advancing AI.

- Breakthroughs can occur unexpectedly.

- Balancing innovation and practical application is crucial.

Recent Progress in AI: What Changed in the Last 3 Years?

The last three years have brought significant advances in AI and deep learning. Growth in computing power and data availability has played a key role in these developments. Personally, I've noticed a marked improvement in the speed and efficiency of the new AI tools and frameworks I've used. However, trade-offs still exist. For instance, some ultra-fast solutions consume enormous resources, which can be a hindrance for smaller setups.

Working with text generation systems, I've observed that prediction quality has improved greatly with newer models, but taking advantage of them required a complete overhaul of the underlying infrastructure. I had to orchestrate entire system updates to adopt these new tools, which is not without risks or costs.

- Increase in computing power and available data.

- Improved speed and efficiency of AI tools.

- Trade-offs exist between performance and resources.

Future of AI Exploration: What's Next?

Looking to the future, I believe AI will continue to evolve towards more integrated and efficient solutions. The areas of orchestration and energy efficiency are particularly promising for new breakthroughs. Personally, I plan to explore further how AI can be optimized for real-time applications, while keeping a close eye on growing ethical considerations.

However, challenges remain. One of the biggest is AI ethics. With the growing power of these technologies, it's crucial to ensure they are used responsibly. Transparency, privacy protection, and combating bias are issues we need to address now to avoid unforeseen consequences in the future.

- AI will become further integrated into our daily lives.

- Advancements in orchestration and efficiency are expected.

- Ethical issues need to be addressed now.

Honestly, looking back at the past ten years, it's wild to see how far AI has come. But let's not get ahead of ourselves. First, today's practical applications are our compass: every tech breakthrough needs a concrete, measurable impact. Second, experimentation is our best friend. We need to test, iterate, fail, and try again. Deep learning is a real game changer, but let's not overlook its trade-offs in compute and resources. Finally, text prediction has taken a leap forward, but watch out for context limits once you go beyond 100K tokens.
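To make the context-limit warning concrete, here is a minimal sketch of one common workaround: splitting a long input into chunks that each fit a token budget, with a small overlap between consecutive chunks. It is illustrative only. Counting whitespace-separated words is a rough stand-in for real model tokens (actual tokenizers usually produce more tokens than words), and the function name `chunk_by_token_budget` and the 100K budget in the usage example are assumptions, not a specific model's API or limit.

```python
def chunk_by_token_budget(text, max_tokens, overlap=0):
    """Split text into chunks of at most `max_tokens` whitespace-separated words.

    Hypothetical helper. Words are only a rough proxy for model tokens; a real
    pipeline would count tokens with the target model's own tokenizer.
    The last `overlap` words of each chunk are repeated at the start of the
    next one so that context is not cut abruptly at a boundary.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")

    words = text.split()
    chunks = []
    step = max_tokens - overlap  # how far the window advances each time
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # this window already reached the end of the text
    return chunks


if __name__ == "__main__":
    long_text = " ".join(["word"] * 250_000)
    chunks = chunk_by_token_budget(long_text, max_tokens=100_000, overlap=1_000)
    print(len(chunks), [len(chunk.split()) for chunk in chunks])
```

The overlap trades a little redundancy for continuity across chunk boundaries, and setting the budget below a model's hard limit leaves room for instructions and for the model's response.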

The journey continues, and it's exciting. Let's stay curious and keep building the future of AI together. Check out the full video for a deeper dive into these topics. It's a must-watch for anyone serious about this field. [Link: https://www.youtube.com/watch?v=8JXwrVQQ4jw]


Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture, which he now applies for large organizations (Atos, BNP Paribas, beta.gouv). He works on two fronts: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

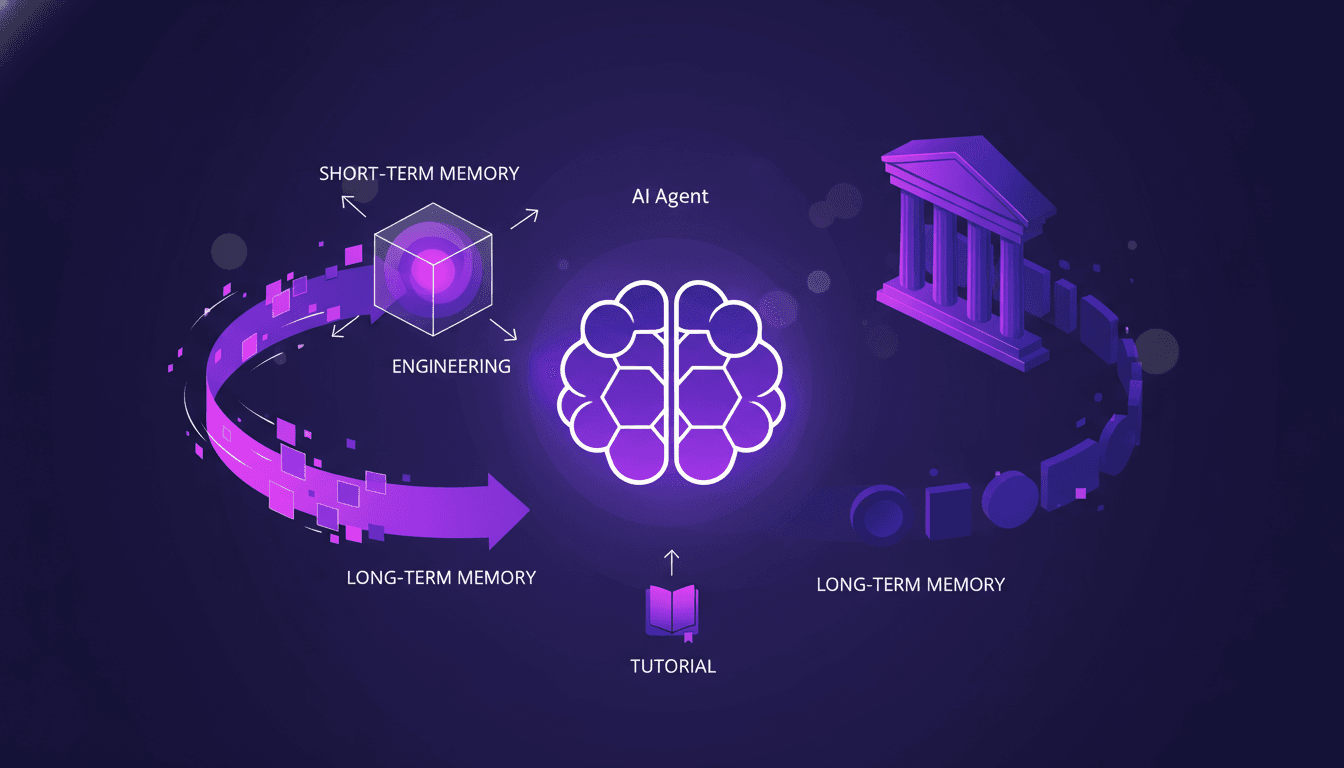

Optimizing AI Agent Memory: Advanced Techniques

I've been in the trenches with AI agents, wrestling with memory patterns that can literally make or break your setup. First, let's dive into what Agent Memory Patterns really mean and why they're crucial. In advanced AI systems, managing memory and context is not just about storing data—it's about optimizing how that data is used. This article explores the techniques and challenges in context management, drawing from real-world applications. We delve into the differences between short-term and long-term memory, potential pitfalls, and techniques for efficient context management. You'll see, two members of our solution architecture team have really dug into this, and their insights could be a game changer for your next project.

AI's Impact on Consumer Startups Today

Imagine a world where creating music is as easy as listening to it. Thanks to AI, that future is within reach. Consumer startups are breaking down traditional barriers. With insights from industry expert Mike McNano, we explore how AI is revolutionizing content creation and personalizing education. Platforms are evolving, distribution challenges are intensifying, but opportunities abound. Let's uncover how AI is redefining taste and craftsmanship in product development. Don't miss the untapped opportunities AI offers today.

LLM Memory: Weights, Activations, and Solutions

Imagine a library where books are constantly shuffled and some get misplaced. That's the memory challenge for Large Language Models (LLMs) today. As AI evolves, understanding LLMs' limitations and potentials becomes vital. This article delves into the intricacies of contextual memory in LLMs, highlighting recent advancements and ongoing challenges. We explore retrieval-augmented generation, embedding training data into model weights, and parameter-efficient fine-tuning. Discover how model personalization and synthetic data generation are shaping AI's future.

Poolside: Revolutionizing AI with Jason Warner

Imagine a world where AI seamlessly converts code across languages. Poolside is making this a reality. In a recent talk, Jason Warner and Eiso Kant unveiled their daring mission. Their Malibu agent aims to optimize efficiency and innovation. Discover how Poolside is redefining AI's future. A stunning code conversion demonstration captivated the audience. What are the challenges of AI in high-stakes environments? What are Poolside's future deployment plans? Jason Warner and Eiso Kant share their journey and the collaboration driving this revolution. Reinforcement learning is pushing AI capabilities to new heights. Poolside is set to transform the infrastructure and scale of AI model development. Don't miss this captivating exploration of revolutionary AI.

AI Evaluation Framework: A Guide for PMs

Imagine launching an AI product that surpasses all expectations. How do you ensure its success? Enter the AI Evaluation Framework. In the rapidly evolving world of artificial intelligence, product managers face unique challenges in effectively evaluating and integrating AI solutions. This article delves into a comprehensive framework designed to help PMs navigate these complexities. Dive into building AI applications, evaluating models, and integrating AI systems. The crucial role of PMs in development, iterative testing, and human-in-the-loop systems are central to this approach. Ready to revolutionize your product management with AI?