Optimizing AI Agent Memory: Advanced Techniques

I've been in the trenches with AI agents, and I've been burned more than once by memory patterns that can make or break a setup. So what does "agent memory pattern" actually mean, and why does it matter so much? In advanced AI systems, managing memory and context is not just about storing data; it's about optimizing how that data is used. This article explores the techniques and challenges of context management, drawing on real-world applications: the differences between short-term and long-term memory, the failure modes of context management, and techniques for managing context efficiently. Two members of our solution architecture team dug deep into this topic. For instance, after three turns we keep the information for summarization, but watch out: by the fourth turn, the context gets compacted. These insights could change how you approach your next AI project.

Understanding Agent Memory Patterns

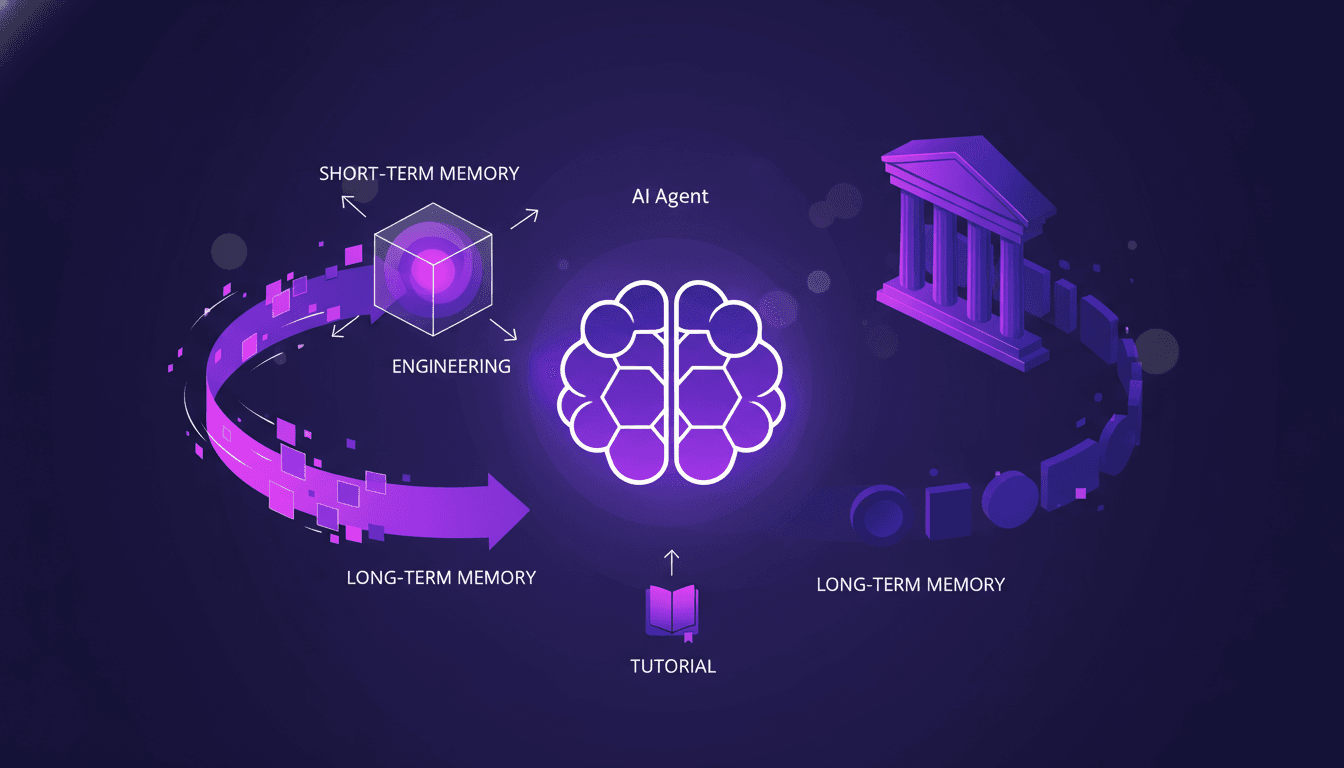

Agent memory patterns play a crucial role in AI systems. It's a topic I've explored many times, and each time I uncover something new. Short-term memory handles the immediate demands of a conversation, while long-term memory, which spans semantic (facts), episodic (past experiences), and procedural (how to perform tasks) memory, builds a deeper foundation. But managing these types of memory isn't child's play.

First, there's short-term memory, which keeps interactions smooth and coherent in the moment. Long-term memory, on the other hand, provides continuity and personalization across sessions. Here's where things get tricky: how do you balance the two? I've often found that overloading short-term memory degrades performance. The key lies in context engineering, a field I consider both an art and a science.
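To make the split concrete, here is a minimal Python sketch built around a hypothetical `AgentMemory` class (not taken from any specific framework). Short-term memory is modeled as a bounded buffer of recent turns, and long-term memory as a store organized into the semantic, episodic, and procedural categories. The capacity of two turns in the usage example is arbitrary.

```python
from collections import deque


class AgentMemory:
    """Hypothetical container separating short-term and long-term memory."""

    def __init__(self, max_turns: int = 6):
        # Short-term: a bounded buffer of recent turns. Once it is full,
        # appending a new turn evicts the oldest one automatically.
        self.short_term = deque(maxlen=max_turns)
        # Long-term: persists across sessions, organized by memory type.
        self.long_term = {
            "semantic": {},    # stable facts, e.g. user preferences
            "episodic": [],    # records of past interactions
            "procedural": [],  # learned rules for how to do things
        }

    def add_turn(self, role: str, content: str) -> None:
        self.short_term.append({"role": role, "content": content})

    def remember_fact(self, key: str, value: str) -> None:
        self.long_term["semantic"][key] = value

    def log_episode(self, summary: str) -> None:
        self.long_term["episodic"].append(summary)


memory = AgentMemory(max_turns=2)
memory.add_turn("user", "Hi, I'm Ana.")
memory.add_turn("assistant", "Hello Ana!")
memory.add_turn("user", "I prefer metric units.")  # evicts "Hi, I'm Ana."
memory.remember_fact("units", "metric")
```

Bounding the short-term buffer is one simple way to keep it from being overloaded; deciding what gets promoted into long-term memory is a separate design choice.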

Context Engineering: The Art and Science

Context engineering requires both judgment and concrete methods. I often use techniques such as "reshape and fit" and "isolate and route" to optimize context. For instance, in a recent project where agents tended to "forget" earlier information, I applied trimming and compaction to lighten the load on the context window. The result was a significant reduction in token usage.
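Trimming and compaction can each be sketched in a few lines of Python. This is an illustration under stated assumptions, not the exact method used in that project: messages are dicts with `role` and `content`, a "turn" starts at each user message, and compaction here means replacing older tool outputs with a short placeholder. The function names are hypothetical.

```python
def trim_to_last_turns(messages, keep_turns):
    """Trimming: keep only the last `keep_turns` turns.

    A turn is assumed to start at a user message and include everything
    up to the next user message.
    """
    if keep_turns <= 0:
        return []
    user_positions = [i for i, m in enumerate(messages) if m["role"] == "user"]
    if len(user_positions) <= keep_turns:
        return list(messages)
    return messages[user_positions[-keep_turns]:]


def compact(messages, keep_recent):
    """Compaction: replace tool outputs older than the last `keep_recent`
    messages with a placeholder; all other messages are kept unchanged."""
    cutoff = max(len(messages) - keep_recent, 0)
    compacted = []
    for i, m in enumerate(messages):
        if i < cutoff and m["role"] == "tool":
            # Build a new dict so the original history is not mutated.
            m = {**m, "content": "[tool output removed]"}
        compacted.append(m)
    return compacted


history = [
    {"role": "user", "content": "Find flights to Lisbon"},
    {"role": "tool", "content": "<long flight search results>"},
    {"role": "assistant", "content": "Here are the cheapest options."},
    {"role": "user", "content": "And hotels?"},
    {"role": "tool", "content": "<long hotel search results>"},
    {"role": "assistant", "content": "Here are three hotels nearby."},
    {"role": "user", "content": "Book both."},
]

trimmed = trim_to_last_turns(history, keep_turns=2)  # starts at "And hotels?"
compacted = compact(history, keep_recent=3)          # only the first tool output is replaced
```

Trimming drops whole turns, while compaction keeps the conversational skeleton and removes only the bulky parts, which is why the two combine well.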

Context engineering isn't limited to a single technique; it's a set of complementary optimizations. I've learned that combining different approaches according to the available context budget is often the winning strategy.

- Reshape and fit: Adjust context for better performance.

- Isolate and route: Distribute contexts to target the right agents.

- Compaction and trimming: Remove unnecessary data to keep the context window lean.
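The "isolate and route" idea, combined with fitting each agent's slice into a budget, might look like the sketch below. It assumes that context items carry a topic `tag`, that each agent declares the tags it needs and a token budget, and that tokens can be approximated by word count; a real system would use the model's tokenizer. All names are hypothetical.

```python
def approx_tokens(text: str) -> int:
    # Rough heuristic (assumption): one token per whitespace-separated word.
    return len(text.split())


def route_context(items, agents):
    """Isolate and route: give each agent only the items tagged for it,
    then fit them into that agent's token budget, newest items first."""
    routed = {}
    for name, spec in agents.items():
        # Isolate: keep only the tags this agent cares about.
        relevant = [it for it in items if it["tag"] in spec["tags"]]
        # Fit: walk backwards from the newest item until the budget is full.
        kept, used = [], 0
        for it in reversed(relevant):
            cost = approx_tokens(it["text"])
            if used + cost > spec["budget"]:
                break
            kept.append(it)
            used += cost
        routed[name] = list(reversed(kept))  # restore chronological order
    return routed


items = [
    {"tag": "billing", "text": "Invoice 42 is overdue by ten days"},
    {"tag": "shipping", "text": "Parcel left the Lyon warehouse"},
    {"tag": "billing", "text": "Customer asked for a refund"},
]
agents = {
    "billing_agent": {"tags": {"billing"}, "budget": 6},
    "shipping_agent": {"tags": {"shipping"}, "budget": 50},
}
routed = route_context(items, agents)
# billing_agent receives only the refund note (adding the invoice note would
# exceed its 6-token budget); shipping_agent receives only the parcel note.
```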

Techniques for Efficient Context Management

Let me walk you through some techniques I use daily, such as extract and retrieve, and memory injection. These methods are essential for managing context efficiently. For example, extracting and retrieving memories lets the agent recall key information at the right time without overloading short-term memory.
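Here is a minimal sketch of extract and retrieve. It is deliberately simplistic: a hypothetical rule-based extractor stands in for the LLM call most systems would use, and retrieval scores stored facts by word overlap with the query rather than by embedding similarity.

```python
import re


def extract_memories(turn: str) -> dict:
    """Hypothetical rule-based extractor; real systems usually ask an LLM."""
    facts = {}
    name = re.search(r"\bmy name is (\w+)", turn, re.IGNORECASE)
    if name:
        facts["name"] = name.group(1)
    pref = re.search(r"\bI prefer ([\w ]+)", turn, re.IGNORECASE)
    if pref:
        facts["preference"] = pref.group(1).strip()
    return facts


def retrieve(store: dict, query: str, k: int = 2) -> list:
    """Return up to k stored facts whose key or value shares a word with the query."""
    words = set(query.lower().split())
    scored = []
    for key, value in store.items():
        overlap = len(words & set(f"{key} {value}".lower().split()))
        if overlap:
            scored.append((overlap, key, value))
    scored.sort(reverse=True)  # highest word overlap first
    return [(key, value) for _, key, value in scored[:k]]


store = {}
store.update(extract_memories("Hi, my name is Ana and I prefer window seats"))
relevant = retrieve(store, "book me seats")  # only the seat preference is recalled
```

Only the retrieved facts enter the prompt, which is what keeps short-term memory from filling up with everything the agent has ever learned.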

But beware of the trade-offs. Compacting information is sometimes faster than summarizing it, especially when you keep only the three most recent turns for summarization.
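The three-turn pattern can be sketched as follows, assuming the last three turns stay verbatim and, from the fourth turn on, older turns are folded into a running summary. `naive_summarize` is a hypothetical placeholder for an LLM summarization call, and the class name is illustrative.

```python
KEEP_LAST_TURNS = 3  # turns kept verbatim; older ones are summarized


def naive_summarize(turns):
    """Hypothetical stand-in for an LLM summarization call."""
    return " | ".join(t["user"] for t in turns)


class SummarizingSession:
    def __init__(self, keep_last=KEEP_LAST_TURNS, summarize=naive_summarize):
        if keep_last < 1:
            raise ValueError("keep_last must be at least 1")
        self.keep_last = keep_last
        self.summarize = summarize
        self.summary = ""
        self.turns = []  # each turn: {"user": ..., "assistant": ...}

    def add_turn(self, user, assistant):
        self.turns.append({"user": user, "assistant": assistant})
        if len(self.turns) > self.keep_last:
            # From turn keep_last + 1 onward (the fourth turn by default),
            # fold the oldest turns into the running summary.
            overflow = self.turns[:-self.keep_last]
            self.turns = self.turns[-self.keep_last:]
            new_part = self.summarize(overflow)
            self.summary = f"{self.summary} | {new_part}" if self.summary else new_part

    def context(self):
        """Build the message list: summary first, then the verbatim recent turns."""
        messages = []
        if self.summary:
            messages.append({"role": "system", "content": f"Summary so far: {self.summary}"})
        for t in self.turns:
            messages.append({"role": "user", "content": t["user"]})
            messages.append({"role": "assistant", "content": t["assistant"]})
        return messages


session = SummarizingSession()
for i in range(1, 5):
    session.add_turn(f"question {i}", f"answer {i}")
# After the fourth turn, "question 1" lives only in the summary.
```

In a real system each `summarize` call is a model request, which is exactly why compaction can be the faster option.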

- Extract and retrieve: Store key facts and recall them only when they are relevant.

- Memory injection: For personalized responses.

- Summarization: Condenses older turns, which directly affects efficiency.
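Memory injection, in its simplest form, means adding what the agent already knows about the user to the system prompt before each call. The sketch below assumes memories are a flat dict of facts and caps how many are injected; the function name and prompt wording are illustrative, not a specific library's API.

```python
def inject_memories(base_prompt: str, memories: dict, max_items: int = 5) -> str:
    """Memory injection: append up to `max_items` known user facts to the
    system prompt so the model can personalize its answer."""
    if not memories:
        return base_prompt
    lines = [f"- {key}: {value}" for key, value in list(memories.items())[:max_items]]
    return base_prompt + "\n\nKnown facts about this user:\n" + "\n".join(lines)


system_prompt = inject_memories(
    "You are a travel assistant.",
    {"name": "Ana", "seat preference": "window"},
)
print(system_prompt)
```

Capping `max_items` keeps injected memories from crowding out the rest of the context.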

Navigating Failure Modes in Context Management

Failure modes such as context burst (a sudden spike in context size, for example from a large tool output), conflict (contradictory information), poisoning (incorrect information that enters the context and keeps being reused), and noise (irrelevant information that distracts the model) can ruin a project. I've had some spectacular failures, but they taught me to anticipate and mitigate these problems.
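Context bursts are the easiest of these failure modes to catch mechanically. The sketch below flags a turn when it alone consumes a large share of the token budget or dwarfs the average of earlier turns. The function name and both thresholds (25% of the budget, three times the running average) are illustrative assumptions, not tuned values.

```python
def detect_bursts(turn_tokens, budget, max_share=0.25, growth_factor=3.0):
    """Return the indices of turns that look like context bursts.

    A turn is flagged if it alone uses more than `max_share` of the budget,
    or if it is more than `growth_factor` times the average of earlier turns.
    """
    flagged = []
    for i, tokens in enumerate(turn_tokens):
        too_big = tokens > max_share * budget
        avg_before = sum(turn_tokens[:i]) / i if i else None
        spike = avg_before is not None and tokens > growth_factor * avg_before
        if too_big or spike:
            flagged.append(i)
    return flagged


# Per-turn token counts; the fourth turn (index 3) is a burst.
flags = detect_bursts([120, 150, 130, 2600, 140], budget=8000)
```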

Here's a tip: don't take these failures lightly. Establishing context profiles, which categorize agents by their context needs, let me manage each agent according to its specific requirements.
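A context profile can be as simple as a named bundle of limits that each agent is assigned to. The profile names, fields, agent names, and numbers below are hypothetical examples chosen for illustration, not recommended settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextProfile:
    """Hypothetical profile describing how much context a class of agents gets."""
    name: str
    max_tokens: int
    keep_last_turns: int
    long_term_memory: bool


PROFILES = {
    "lean": ContextProfile("lean", max_tokens=2_000, keep_last_turns=2, long_term_memory=False),
    "conversational": ContextProfile("conversational", max_tokens=8_000, keep_last_turns=6, long_term_memory=True),
    "research": ContextProfile("research", max_tokens=32_000, keep_last_turns=10, long_term_memory=True),
}

# Each agent is mapped to a profile instead of getting bespoke settings.
AGENT_PROFILES = {
    "router": "lean",
    "support_assistant": "conversational",
    "report_writer": "research",
}


def profile_for(agent: str) -> ContextProfile:
    # Unknown agents fall back to the most conservative profile.
    return PROFILES[AGENT_PROFILES.get(agent, "lean")]
```

Grouping agents this way means a change to one profile applies to every agent that uses it.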

"I realized early detection of context bursts is crucial to avoid chaos."

Best Practices and Future Directions

Ultimately, context management is a delicate balance between efficiency and complexity. I strongly recommend adopting the practices described above. Emerging trends also show that balancing the static components of context (such as system instructions) with its dynamic components (such as retrieved memories and recent turns) is becoming increasingly important.
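One way to balance static and dynamic context is to always include the static part (instructions, tool descriptions) and fill the remaining budget with dynamic items in priority order. This sketch assumes the dynamic items are already sorted by priority and approximates tokens by word count; both are simplifications, and the function names are hypothetical.

```python
def approx_tokens(text: str) -> int:
    # Rough heuristic (assumption): one token per whitespace-separated word.
    return len(text.split())


def assemble_context(static_parts, dynamic_parts, budget):
    """Always include static parts, then greedily add dynamic parts in
    priority order, skipping any that would exceed the budget.
    Returns (parts, tokens_used)."""
    parts = list(static_parts)
    used = sum(approx_tokens(p) for p in static_parts)
    if used > budget:
        raise ValueError("static context alone exceeds the budget")
    for part in dynamic_parts:  # assumed already sorted by priority
        cost = approx_tokens(part)
        if used + cost <= budget:
            parts.append(part)
            used += cost
    return parts, used


static = ["You are a support agent.", "Tools: lookup_order, refund"]
dynamic = [
    "User prefers email contact",
    "Order 42 shipped Monday",
    "Older chat summary with many extra details here",
]
parts, used = assemble_context(static, dynamic, budget=17)
# The two short dynamic items fit (16 tokens used); the summary is skipped.
```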

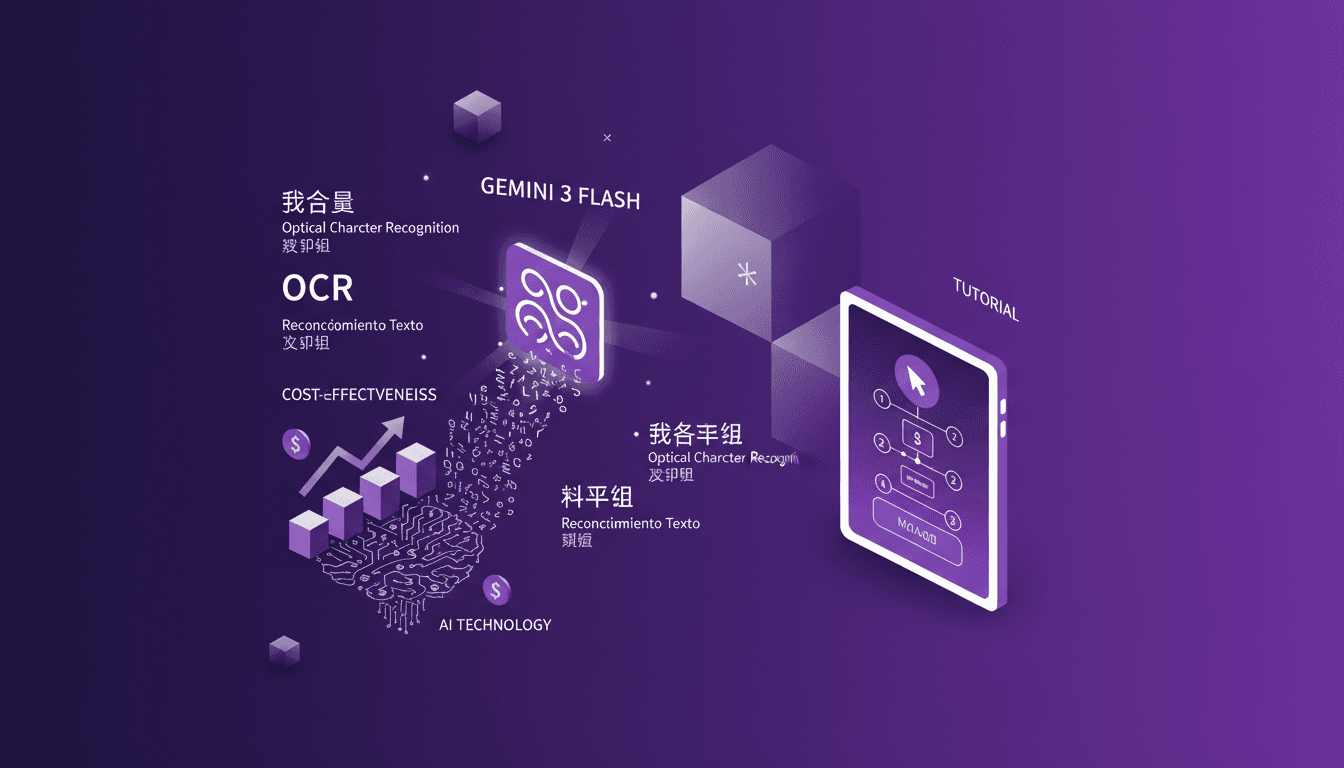

I encourage you to experiment with new tools and techniques. The outlook is promising, especially with innovations like the OCR capabilities of Gemini 3 Flash, which can make your daily work more efficient.

- Experiment with various tools to find what works best.

- Follow trends to stay updated.

- Balance efficiency and complexity for optimal results.

Managing AI agent memory is like juggling flaming torches: complex, but incredibly rewarding once you get the hang of it. Here's what I've learned from the field:

- Mastering context engineering is the key to boosting AI performance. I focus on keeping the last three turns for summarization.

- Differentiating between short-term and long-term memory is crucial. From the fourth turn on, I compact the context to maintain efficiency.

- Accounting for failure modes saves you from unpleasant surprises. In our solution architecture team, two members make sure of this.

Experimenting with these techniques can genuinely push AI context management forward. Every experiment counts, so share your experiences! For a deeper dive, I recommend watching the 'Build Hour: Agent Memory Patterns' video. You'll see it's a real game changer, but watch out for its limits.


Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture, which he now puts at the service of large organizations (Atos, BNP Paribas, beta.gouv). He focuses on two areas: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

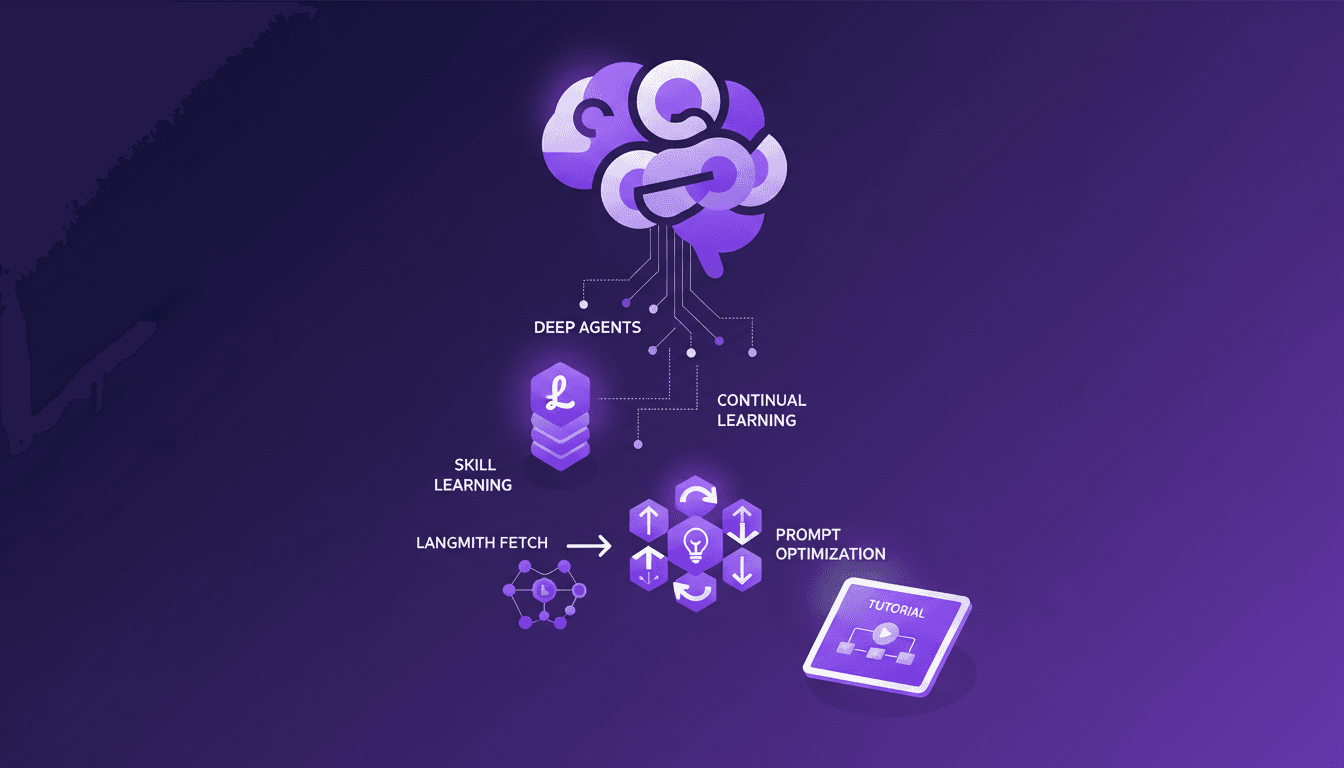

Continual Learning with Deep Agents: My Workflow

I jumped into continual learning with deep agents, and let me tell you, it’s a game changer for skill creation. But watch out, it's not without its quirks. I navigated the process using weight updates, reflections, and the Deep Agent CLI. These tools allowed me to optimize skill learning efficiently. In this article, I share how I orchestrated the use of deep agents to create persistent skills while avoiding common pitfalls. If you're ready to dive into continual learning, follow my detailed workflow so you don't get burned like I did initially.

Continual Learning with Deepagents: A Complete Guide

Imagine an AI that learns like a human, continuously refining its skills. Welcome to the world of Deepagents. In the rapidly evolving AI landscape, continual learning is a game-changer. Deepagents harness this power by optimizing skills with advanced techniques. Discover how these intelligent agents use weight updates to adapt and improve. They reflect on their trajectories, creating new skills while always seeking optimization. Dive into the Langmith Fetch Utility and Deep Agent CLI. This complete guide will take you through mastering these powerful tools for an unparalleled learning experience.

Cut Costs with Gemini 3 Flash OCR

I've been diving into OCR tasks for years, and when Gemini 3 Flash hit the scene, I had to test its promise of cost savings and performance. Imagine a model that's four times cheaper than Gemini 3 Pro, at just $0.50 per million token input and $3 for output tokens. I'll walk you through how this model stacks up against the big players and why it's a game changer for multilingual OCR. From cost-effectiveness to multilingual capabilities and technical benchmarks, I'll share my practical findings. Don't get caught up in the hype, discover how Gemini 3 Flash is genuinely transforming the game for OCR tasks.

Gemini 3 Flash: Upgrade Your Daily Workflow

I was knee-deep in token usage issues when I first got my hands on Gemini 3 Flash. Honestly, it was like switching from a bicycle to a sports car. I integrated it into my daily workflow, and it's become my go-to tool. With its multimodal capabilities and improved spatial understanding, it redefines efficiency. But watch out, there are limits. Beyond 100K tokens, it gets tricky. Let me walk you through how I optimized my operations and the pitfalls to avoid.

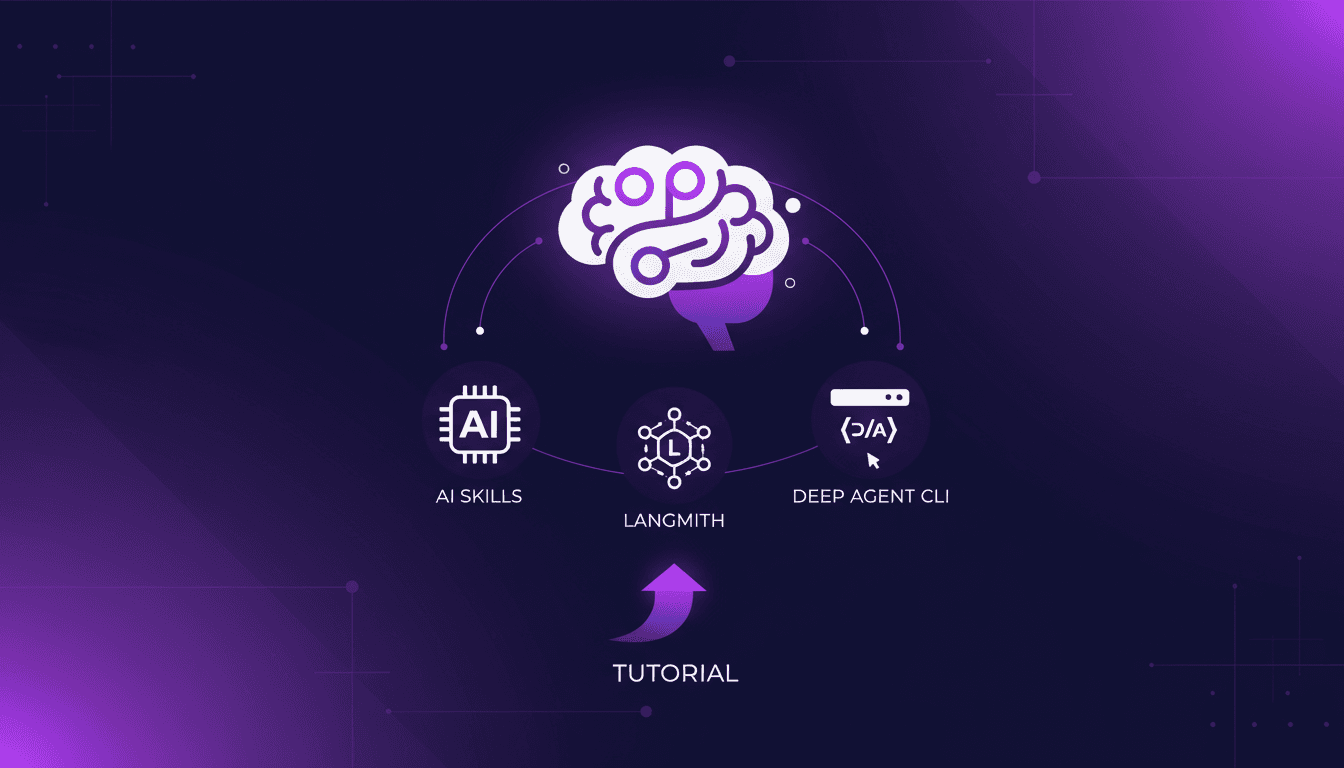

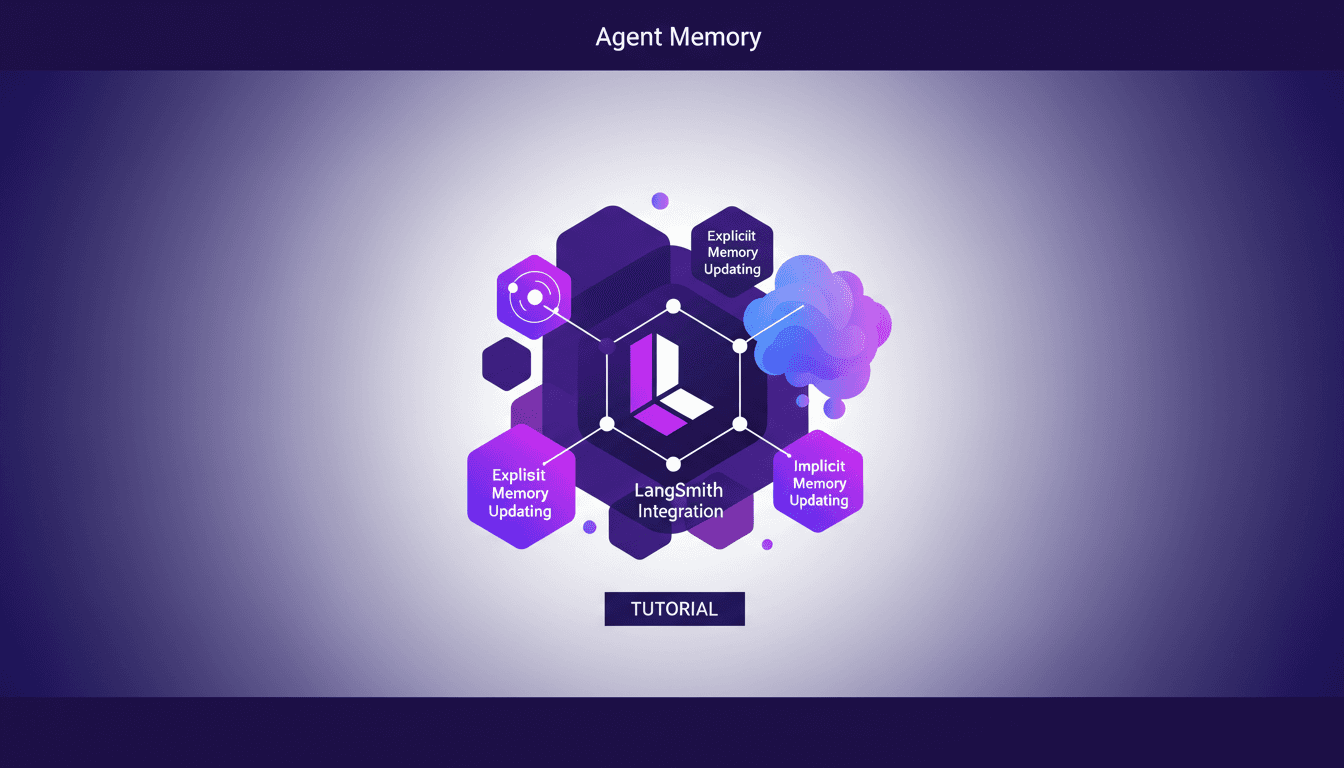

Managing Agent Memory: Practical Approaches

I remember the first time I had to manage an AI agent’s memory. It was like trying to teach a goldfish to remember its way around a pond. That's when I realized: memory management isn't just an add-on, it's the backbone of smart AI interaction. Let me walk you through how I tackled this with some hands-on approaches. First, we need to get a handle on explicit and implicit memory updates. Then, integrating tools like Langmith becomes crucial. We also dive into using session logs to optimize memory updates. If you've ever struggled with deep agent management and configuration, I'll share my tips to avoid pitfalls. This video is an advanced tutorial, so buckle up, it'll be worth it.