Managing Agent Memory: Practical Approaches

I remember the first time I had to manage an AI agent's memory. It was like trying to teach a goldfish to remember its way around a pond. That's when I realized that memory management isn't an add-on; it's the backbone of useful AI interaction. Let me walk you through how I tackled it hands-on. I started with explicit memory updates, but without understanding implicit updates you hit a wall fast. I connected tools like LangSmith to amplify these capabilities, then used session logs to fine-tune memory updates. If you've ever wrestled with deep agent management and configuration, I'll share my tips for avoiding the common pitfalls. The video is an advanced tutorial, so buckle up; it's worth the ride.

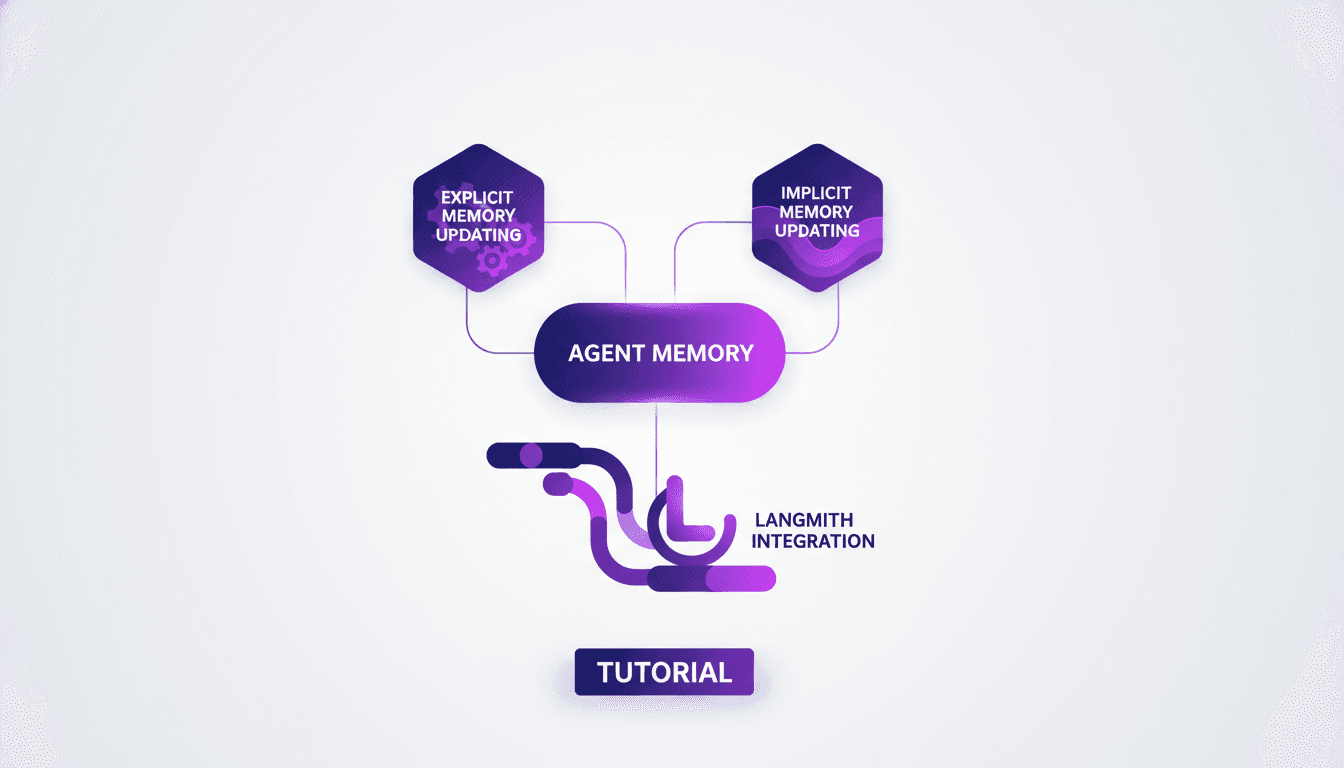

Explicit Memory Updating: Claude Code and Deep Agents

First, I set up Claude Code to handle explicit memory updates. This was my initial step in managing memory in my projects. Deep agents provide a robust framework for these updates, and the deep agent CLI gives a concrete example: each agent has its own configuration home directory, and that is where these updates take place. Watch out for configuration pitfalls, and make sure your settings match what you actually want the agent to remember. I found that explicit updates give clear control, but they take more setup time. It's an investment worth making if precision is your endgame.

- Explicit updates ensure precise control but need careful setup.

- Deep agents facilitate managing multiple configurations.

- Be wary of configuration errors that may undermine your goals.
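To make the idea of an explicit update concrete, here is a minimal sketch. It assumes the agent's memory is a plain Markdown file inside its configuration home directory. The `remember` helper, the `memory.md` file name, and the directory layout are all hypothetical, chosen for illustration; they are not the actual layout used by Claude Code or the deep agent CLI.

```python
from pathlib import Path
import tempfile


def remember(agent_home: Path, note: str, memory_file: str = "memory.md") -> Path:
    """Append one explicit memory note to the agent's memory file.

    Exact duplicates are skipped so repeated instructions do not bloat memory.
    The file name and layout are assumptions made for this sketch.
    """
    agent_home.mkdir(parents=True, exist_ok=True)
    path = agent_home / memory_file
    existing = path.read_text(encoding="utf-8").splitlines() if path.exists() else []
    entry = f"- {note.strip()}"
    if entry not in existing:
        existing.append(entry)
        path.write_text("\n".join(existing) + "\n", encoding="utf-8")
    return path


# Usage: a temporary directory stands in for the agent's configuration home.
demo_home = Path(tempfile.mkdtemp()) / "my-agent"
remember(demo_home, "Always answer in British English.")
remember(demo_home, "Always answer in British English.")  # duplicate, ignored
memory_path = remember(demo_home, "Prefer pytest over unittest.")
```

Keeping one memory file per agent directory is what makes multiple configurations manageable: each agent only ever reads and writes its own notes.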

Implicit Memory Updating: User-Agent Interaction

Implicit updates happen seamlessly through interaction. I configured my agents to learn from user behavior naturally. This method saves time but can lead to unexpected memory patterns if not monitored carefully. Balancing explicit and implicit updates is crucial for consistent performance. It's like allowing an agent to absorb information without direct intervention, which is both a blessing and a risk.

- Implicit updates save time but can create unexpected memory patterns.

- A balance between explicit and implicit ensures optimal performance.

- Monitor patterns to avoid unwanted biases.
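As a rough illustration of implicit updating with monitoring built in, the sketch below stores a user message as memory whenever it looks like a lasting preference, and records every implicit write in an audit log for later review. The cue phrases, the `ImplicitMemory` class, and the audit log structure are assumptions of mine; a real agent would more likely use a model call than a regular expression to decide what is worth remembering.

```python
import re
from dataclasses import dataclass, field

# Hypothetical cue phrases that signal a lasting preference in a user message.
PREFERENCE_CUES = re.compile(r"\b(always|never|i prefer|from now on)\b", re.IGNORECASE)


@dataclass
class ImplicitMemory:
    notes: list[str] = field(default_factory=list)
    # (turn index, note) pairs, kept so a human can review what was learned and when.
    audit_log: list[tuple[int, str]] = field(default_factory=list)

    def observe(self, turn: int, user_message: str) -> bool:
        """Store the message as a memory note if it looks like a lasting preference."""
        if not PREFERENCE_CUES.search(user_message):
            return False
        note = user_message.strip()
        if note not in self.notes:
            self.notes.append(note)
            self.audit_log.append((turn, note))
        return True


memory = ImplicitMemory()
conversation = [
    "Can you summarise this report?",
    "From now on, keep summaries under five bullet points.",
    "Thanks, that works.",
    "I prefer metric units.",
]
for turn, message in enumerate(conversation):
    memory.observe(turn, message)
```

The audit log is the monitoring hook: nothing enters memory implicitly without leaving a trace you can inspect and, if needed, revert with an explicit update.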

Reflection and Context Evolution in AI

Reflection allows agents to adapt to new contexts over time. I used reflection to enhance agent adaptability in dynamic environments. However, too much reflection can lead to resource drain. Context evolution is key for long-term AI system sustainability. It's like a brain that learns and constantly reevaluates its environment to improve.

- Reflection enhances adaptability but can be resource-intensive.

- Context evolution is crucial for AI system longevity.

- Avoid excessive reflection that might slow down the system.
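One simple way to keep reflection from draining resources is to put it on a schedule with a hard budget. The sketch below is an assumption about how that could look, not the mechanism from the video: the `ReflectionScheduler` class, the cadence of every five turns, and the cap of three passes per session are all illustrative values.

```python
from dataclasses import dataclass


@dataclass
class ReflectionScheduler:
    every_n_turns: int = 5    # assumed cadence: reflect on every 5th turn
    max_reflections: int = 3  # assumed budget per session
    turns_seen: int = 0
    reflections_done: int = 0

    def should_reflect(self) -> bool:
        """Count one turn and report whether a reflection pass should run now."""
        self.turns_seen += 1
        due = self.turns_seen % self.every_n_turns == 0
        if due and self.reflections_done < self.max_reflections:
            self.reflections_done += 1
            return True
        return False


# Simulate a 30-turn session and record the turns on which reflection would run.
scheduler = ReflectionScheduler(every_n_turns=5, max_reflections=3)
reflect_turns = [turn for turn in range(1, 31) if scheduler.should_reflect()]
```

With these settings, reflection runs on turns 5, 10, and 15 and then stops, so the cost per session is bounded no matter how long the conversation gets.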

Session Logs and LangSmith for Memory Updates

Session logs are a goldmine for memory insights. Integrating LangSmith streamlined my memory update process, and I automated the log analysis to save time and improve accuracy. Make sure the logs are comprehensive, because gaps in the logs become gaps in what the agent can learn. It's like having a historical record of every interaction, ready to be tapped for refining the agent's capabilities.

- Session logs provide valuable insights for memory updates.

- LangSmith integration enhances update efficiency.

- Automating log analysis saves time and improves accuracy.
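Here is a minimal sketch of what automated log analysis can look like. It assumes the session logs have been exported as JSON Lines with `session`, `role`, and `content` fields. That format, the sample data, and the correction prefixes are hypothetical and do not reflect the LangSmith export schema. The idea is to surface user corrections, since those are strong candidates for memory updates.

```python
import json
from collections import Counter

# Hypothetical log format: one JSON object per line with "session", "role", "content".
SAMPLE_LOG = """\
{"session": "a1", "role": "user", "content": "Write the tests with pytest"}
{"session": "a1", "role": "assistant", "content": "Done, using unittest."}
{"session": "a1", "role": "user", "content": "No, use pytest please"}
{"session": "b2", "role": "user", "content": "Actually, use pytest here too"}
{"session": "b2", "role": "assistant", "content": "Switched to pytest."}
"""

# Assumed heuristics for spotting a user correcting the agent.
CORRECTION_PREFIXES = ("no,", "actually,", "that's wrong")


def find_corrections(log_text: str) -> list[dict]:
    """Return user messages that start with a correction-like prefix."""
    corrections = []
    for line in log_text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        content = record["content"].strip().lower()
        if record["role"] == "user" and content.startswith(CORRECTION_PREFIXES):
            corrections.append(record)
    return corrections


corrections = find_corrections(SAMPLE_LOG)
sessions_with_corrections = Counter(record["session"] for record in corrections)
```

In this sample the same correction shows up in two separate sessions, which is exactly the kind of signal worth turning into a stored memory ("use pytest") rather than relearning every time.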

Practical Demonstration: Implicit Memory Patterns

I demonstrated implicit memory patterns with real-world examples. Understanding user preferences helps in designing better agent interactions, so I adjusted my system based on the patterns I observed. Beware of overfitting to specific patterns, and stay flexible. It's like fine-tuning based on field feedback to improve effectiveness.

- Understanding user preferences enhances agent interaction.

- Adjustments based on observed patterns optimize results.

- Avoid overfitting to specific patterns; remain adaptable.
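One guard against overfitting is to promote an observed preference to memory only after it has appeared in several distinct sessions, so a single unusual conversation cannot reshape the agent. The sketch below assumes observations arrive as `(session_id, preference)` pairs. The `promote_patterns` helper and the threshold of three sessions are illustrative choices, not values from the video.

```python
from collections import defaultdict


def promote_patterns(observations, min_sessions=3):
    """Return preferences seen in at least `min_sessions` distinct sessions.

    Counting distinct sessions (not raw occurrences) prevents one chatty
    session from dominating what the agent learns.
    """
    sessions_by_preference = defaultdict(set)
    for session_id, preference in observations:
        sessions_by_preference[preference].add(session_id)
    return sorted(
        preference
        for preference, sessions in sessions_by_preference.items()
        if len(sessions) >= min_sessions
    )


observations = [
    ("s1", "short answers"), ("s1", "short answers"), ("s1", "short answers"),
    ("s2", "short answers"), ("s3", "short answers"),
    ("s1", "dark humour"), ("s1", "dark humour"), ("s1", "dark humour"),
]
promoted = promote_patterns(observations, min_sessions=3)
```

Here "dark humour" appears three times but only in one session, so it is not promoted, while "short answers" is confirmed across three sessions and is.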

Managing agent memory is both art and science. I've found that balancing explicit updates with implicit adaptation is crucial. By integrating tools like LangSmith and leveraging session logs, I've pushed my AI systems towards better performance and adaptability.

- Explicit updating: I take direct control of agent memory when immediate accuracy is critical.

- Implicit adaptation: Sometimes it's more efficient to let the AI naturally evolve, but watch out for drift.

- Configuration management: With deep agents, getting the setup right is key to avoiding poor performance.

- Context evolution: I orchestrate continuous adaptation so the agent stays relevant in shifting contexts.

These techniques are game changers, but don't overlook the limits of each approach. Try them on your own agents and see the difference in your systems. For a deeper understanding, I recommend watching the full video, which walks through each step in practice.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Agent Memory Management: Key Approaches

Imagine if your digital assistant could remember your preferences like a human. Welcome to the future of AI, where managing agent memory is key. This article delves into the intricacies of explicit and implicit memory updating, and how these concepts are woven into advanced AI systems. Explore how Cloud Code and deep agent memory management are revolutionizing digital assistant capabilities. From CLI configuration to context evolution through user interaction, dive into cutting-edge memory management techniques. How does Langmith fit into this picture? A practical example will illuminate the fascinating process of memory updating.

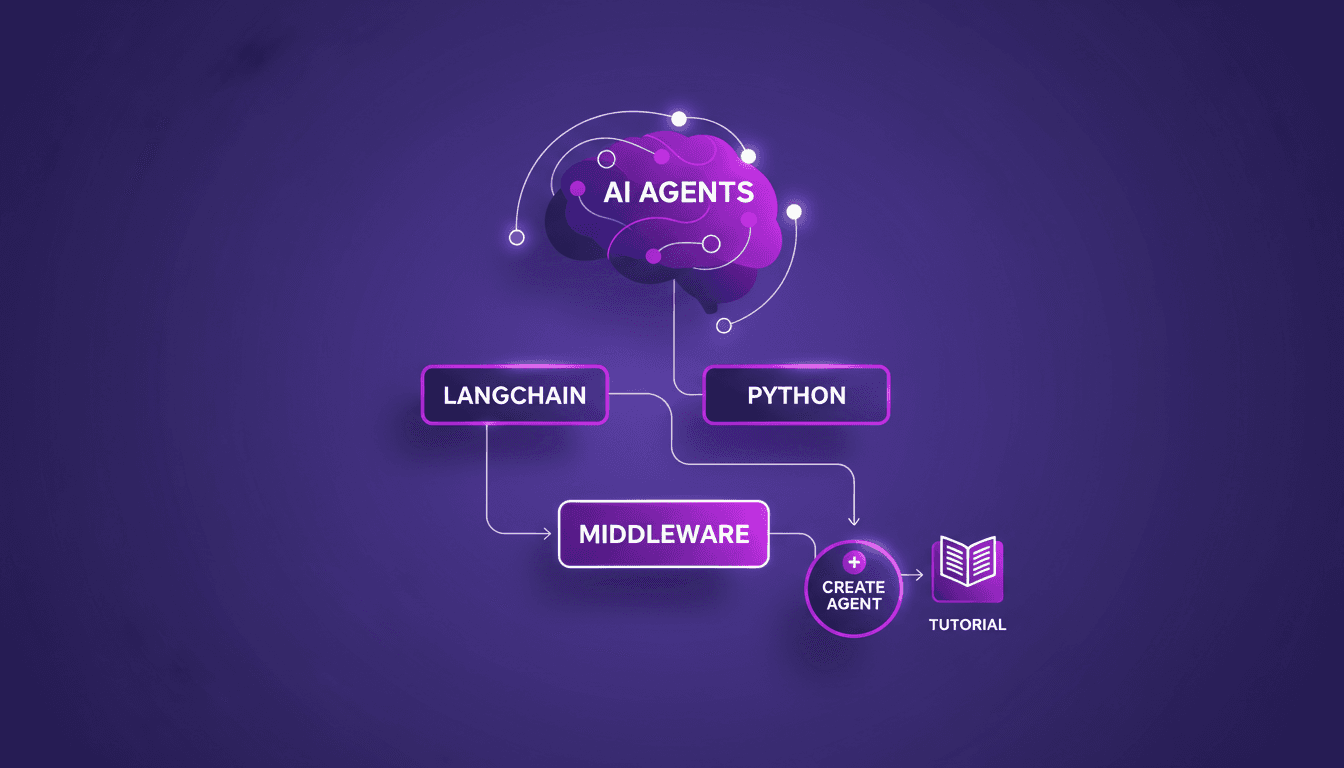

LangChain Academy: Start with LangChain

I dove into LangChain Academy's new course to see if it could really streamline my AI agent projects. Spoiler: it did, but not without some head-scratching moments. LangChain is all about building autonomous agents efficiently. This course promises to take you from zero to hero with practical projects and real-world applications. You'll learn to create agents, customize them with middleware, and explore real-world applications. For anyone looking to automate intelligently, it's a game changer, but watch out for context limits and avoid getting lost in module configurations.

Introduction to LangChain: AI Agents in Python

Imagine a world where AI agents handle your daily tasks effortlessly. Welcome to LangChain, a powerful tool in Python that makes this a reality. LangChain is changing how developers craft AI agents. Explore the new LangChain Academy course, designed to arm you with the skills to build sophisticated AI systems. Learn to create customized agents with the 'create agent' abstraction and refine their behavior with middleware. This course guides you through practical modules and projects to master this groundbreaking technology. Don't miss the chance to revolutionize how you interact with AI!

Build MCP Agent with Claude: Dynamic Tool Discovery

I dove headfirst into building an MCP agent with LangChain, and trust me, it’s a game changer for dynamic tool discovery across Cloudflare MCP servers. First, I had to get my hands dirty with OpenAI and Entropic's native tools. The goal? To streamline access and orchestration of tools in real-world applications. In the rapidly evolving AI landscape, leveraging native provider tools can save time and money while boosting efficiency. This article walks you through the practical steps of setting up an MCP agent, the challenges I faced, and the lessons learned along the way.

Native Tools: Build a Cloudflare MCP Agent

Imagine harnessing the full power of cloud platforms with just a few clicks. Welcome to the world of MCP agents and native provider tools. As AI technology evolves, integrating tools across multiple cloud platforms becomes essential. This tutorial explores building a Cloudflare MCP agent using Langchain, making complex integrations seamless and efficient. Discover how native tools from OpenAI and Entropic transform your cloud experience. Dive into dynamic tool discovery, integration, MCP server connections, and the advantages of using native tools. Let's explore practical scenarios and envision how these tools shape the future of AI applications.

Continual Learning with Deep Agents: My Workflow

I jumped into continual learning with deep agents, and let me tell you, it’s a game changer for skill creation. But watch out, it's not without its quirks. I navigated the process using weight updates, reflections, and the Deep Agent CLI. These tools allowed me to optimize skill learning efficiently. In this article, I share how I orchestrated the use of deep agents to create persistent skills while avoiding common pitfalls. If you're ready to dive into continual learning, follow my detailed workflow so you don't get burned like I did initially.