OpenAI and Nuclear Security: Deployment and Impact

I remember the first time I read about OpenAI teaming up with the US government: it came out of the blue. It's a real game-changer in the AI landscape, but it's not without its complications. This partnership with the US National Labs is much more than a tech leap. It's about leadership, innovation, and the balance of power in a fiercely competitive world. With Elon Musk and Donald Trump in the mix, and Nvidia and Microsoft's Azure backing the initiative, the stakes in nuclear and cyber security have never been higher. Then there's the ever-present US-China rivalry looming over it all. I was personally struck by the potential impact on job creation and medical research. So let me walk you through this intricate partnership and its implications for our future.

Navigating the OpenAI-Government Collaboration

Collaborating with the government is like steering a ship through choppy waters. OpenAI's bold partnership with the US National Labs brings 15,000 scientists into AI and security projects. That scale is massive, and I've seen partnerships like this transform entire sectors. Elon Musk, with his often controversial vision, also shapes the dynamics around this collaboration, which matters. Public-private partnerships play a crucial role in advancing AI, but watch out: aligning the goals of government and tech companies is never a walk in the park.

- 15,000 scientists involved, which speaks volumes about the project's scale.

- OpenAI mentions 'nuclear' four times in its announcement, a strong signal.

- Elon Musk and other influential figures add complexity to the dynamics.

These collaborations are essential, but they come with challenges. I've seen projects hit bureaucratic walls, and that's a point to watch.

Deploying the o-series Model: What's Under the Hood?

Deploying OpenAI's unannounced o-series model is like opening a black box. With an Nvidia supercomputer and Microsoft's Azure behind it, the possibilities are enormous, but orchestration challenges lurk. I've faced similar technological limits, and I can tell you every technical choice is a compromise. Here, AI safety and nuclear security protocols are at the core. The deployment could also create jobs and advance medical research, but let's not get too excited just yet.

- Nvidia hardware on Microsoft's Azure is a powerful lever, but it comes with integration challenges.

- Potential impact on national security and medical research.

AI Safety and Cyber Security: A Delicate Balance

When you're juggling AI safety, especially in a nuclear context, cybersecurity becomes paramount. OpenAI has implemented robust measures to mitigate risks, but nothing is foolproof. I've often seen models fail in real-world security tests. Protocols must be rigorous, but they have their limits, and identifying them is crucial.

- Real-world scenarios to test security protocols.

- Limitations of current models, with clear areas for improvement.

US-China AI Competition: The Global Stakes

The US-China dynamic shapes AI development. The political implications are huge, with figures like Donald Trump and Microsoft CEO Satya Nadella playing key roles. The strategy to maintain US leadership in AI is complex and shaped by tense international relations.

- Political influence on AI development.

- Maintaining AI leadership against international competition.

The Bigger Picture: AI, Politics, and Innovation

AI at the intersection of technology and politics is like walking a tightrope. Public-private partnerships are catalysts for innovation and could reshape global tech ecosystems, but ethics must not be sacrificed on the altar of progress. The future of AI depends on getting that balance right.

- Potential impacts on global tech ecosystems.

- Balancing innovation and ethics is crucial for the future.

So here's the deal: OpenAI teaming up with the US government is a bold move, an intricate dance of tech, national security, and politics. Here's what I've distilled:

- Innovation meets security: with 15,000 scientists from the National Labs working alongside new AI models, the partnership could redefine nuclear security.

- Technical leadership: that Nvidia NVL72 supercomputer running on Azure? It's pushing AI models to new heights and boosting US tech leadership.

- Key figures involved: Elon Musk and Donald Trump aren't just there for show. Their involvement signals the seriousness of this endeavor.

- Risks to watch: Rapid innovation is exciting, but keep an eye on cybersecurity and nuclear safety. Too much enthusiasm without vigilance can be risky.

The potential is massive, but we need to stay alert. It's a real game-changer for AI, but let's not forget the trade-offs. I recommend watching the video "☢️ OpenAI goes Nuclear" to grasp the nuances of this collaboration. It's worth your time, especially if you work in AI.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture, which he now applies for large organizations (Atos, BNP Paribas, beta.gouv). He focuses on two areas: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

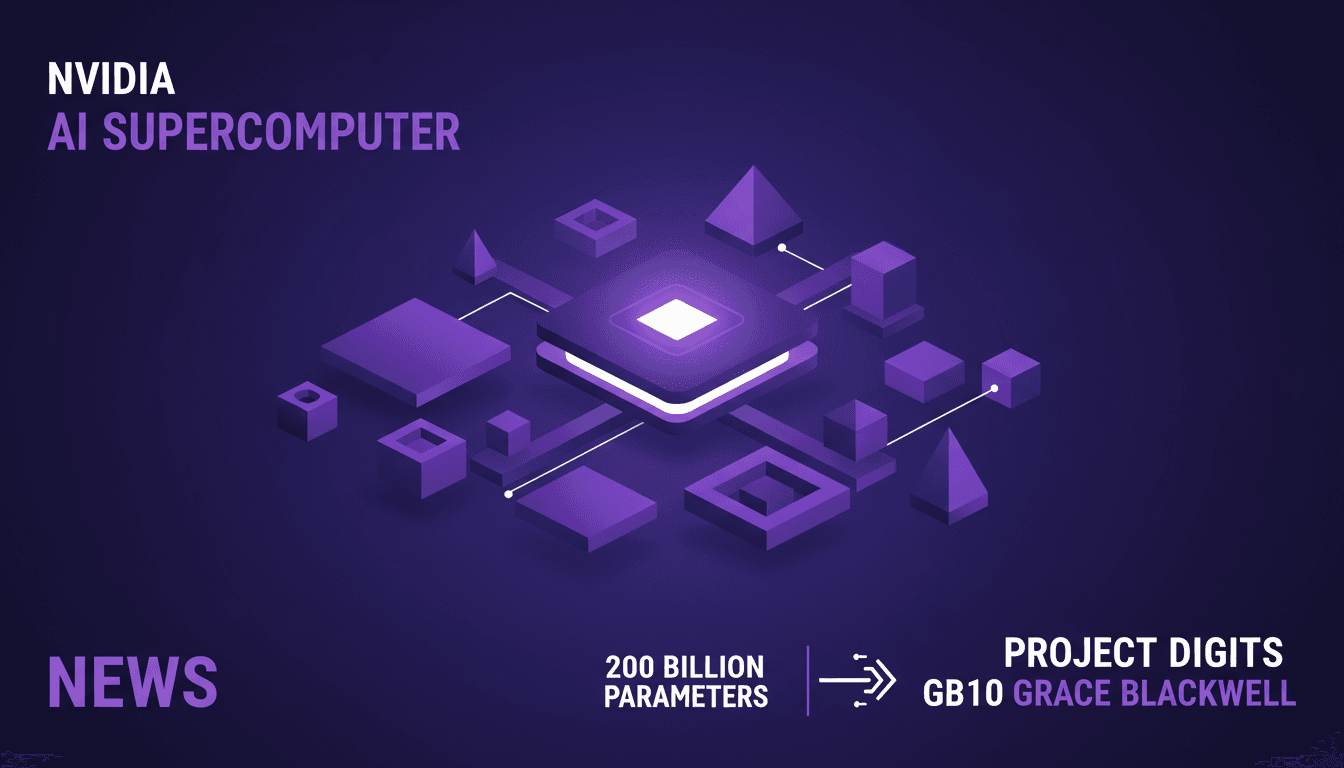

Nvidia AI Supercomputer: Power and Applications

I plugged in Nvidia's new personal AI supercomputer, and let me tell you, it's like trading in your bicycle for a jet. We're talking about a device that outpaces your average laptop by a thousand times. This gem can handle 200 billion parameter models, thanks to the gb10 Grace Blackwell chip. But watch out, this colossal power brings its own challenges. It's a real game changer for individual users, but you need to know where to set the limits to avoid getting burned. Let's dive into what makes this tool exceptional and where you might hit a wall.

Nvidia's Personal AI Supercomputer: Project DIGITS

I’ve been knee-deep in AI projects for years, but when Nvidia announced their personal AI supercomputer, I knew this was a game changer. Powered by the gb10 Grace Blackwell chip, this beast promises to handle models with up to 200 billion parameters. This isn't just tech hype; it's a shift in how we can build and deploy AI solutions. With power a thousand times that of an average laptop, this isn't for amateurs. Watch out for the pitfalls: before diving in, you need to understand the specs and the costs. I'll walk you through what's changing in our workflows and what you need to know to avoid getting burned.

Build AI Fashion Influencer: Step-by-Step

I dove headfirst into the world of virtual fashion influencers, and let me tell you, the potential for virtual try-ons is massive. Imagine crafting a model that showcases your designs without a single photoshoot. That's exactly what I did using straightforward AI tools, and it's a real game changer for cutting costs and sparking creativity. With less than a dollar per try-on and just 40 seconds per generation, this isn't just hype. In this article, I'll walk you through how to leverage this technology to revolutionize your fashion marketing approach. From AI-generated models to monetization opportunities, here’s how to orchestrate this tech effectively.

Claude: Philosophy and Ethics in AI

I joined Anthropic not just as a philosopher, but as a builder of ethical AI. First, I had to grasp the character of Claude, the AI model I’d be shaping. This journey isn't just about coding—it's about embedding ethical nuance into AI decision-making. In the rapidly evolving world of AI, ensuring that models like Claude make ethically sound decisions is crucial. This isn't theoretical; it's about practical applications of philosophy in AI. We tackle the character of Claude, nuanced questions about AI behavior, and how to teach AI ethical behavior. As practitioners, I share with you the challenges and aspirations of AI ethics.

Treating AI Models: Why It Really Matters

I've been in the trenches with AI models, and here's the thing: how we treat these models isn't just a tech issue. It's a reflection of our values. First, understand that treating AI models well isn't just about ethics—it's about real-world impact and cost. In AI development, every choice carries ethical and practical weight. Whether it's maintaining models or how we interact with them, these decisions shape both our technology and society. We're talking about the impact of AI interactions on human behavior, cost considerations, and ethical questions surrounding humanlike entities. Essentially, our AI models are a mirror of ourselves.