Claude: Philosophy and Ethics in AI

I joined Anthropic not just as a philosopher, but as a builder of ethical AI. First, I had to grasp the character of Claude, the AI model I'd be shaping. This journey isn't just about coding; it's about embedding ethical nuance into AI decision-making. In the rapidly evolving world of AI, ensuring that models like Claude make ethically sound decisions is crucial. This isn't theoretical; it's about practical applications of philosophy in AI. I cover the character of Claude, nuanced questions about AI behavior, and how to teach AI ethical behavior. As a practitioner, I share the challenges and aspirations of AI ethics.

Why is a philosopher working in AI? That's exactly the question I asked myself before joining Anthropic. I'm not here to philosophize in the abstract but to build and, more importantly, to embed ethical nuance into the decision-making of Claude, our AI model. First, I had to understand who Claude is: its character. And we're not just talking about lines of code here. We're talking about teaching an AI how to make complex decisions with real ethical stakes. In the fast-evolving world of AI, ensuring that models like Claude make ethically sound decisions is crucial. This isn't just theory; it's philosophy applied in practice. Here, I discuss Claude's character, the nuanced questions we pose, and what it really means to teach an AI ethical behavior. As a practitioner, I'll show you the real challenges and aspirations of AI ethics.

Understanding the Character of Claude

Claude isn't just code; it's a model with a character that embodies ethical considerations. First, I mapped out Claude's decision-making processes to identify potential ethical blind spots. It's like charting a moral map before setting out on the journey. Then, I worked on integrating philosophical frameworks into Claude's architecture, so that its technical capabilities are balanced by ethical constraints. Why is this crucial? Because without it, we risk drifting towards purely functional decisions that disregard human impact.

It's a delicate dance between technical power and moral constraint. Imagine Claude facing a decision where the most efficient solution is also the most ethically questionable. That's where integrating these frameworks makes all the difference.

- Character of Claude: Balancing technical capabilities and ethical constraints.

- Mapping: Identifying potential ethical blind spots.

Navigating Nuanced Questions in AI

Nuanced questions aren't just academic; they directly impact AI behavior. Here, I developed scenarios where Claude faced complex ethical dilemmas. This involved iterative testing and refining decision pathways. But watch out for oversimplification. Real-world decisions are rarely binary.

I realized that Claude's decisions had to reflect ethical nuance comparable to the judgment an ideal person might exercise, especially in tricky situations. Imagine for a moment Claude having to choose between two bad options. Each option has to be weighed carefully.

- Ethical dilemmas: Testing and refining decisions.

- Ethical nuances: Avoid binary simplification of decisions.
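To make the point about non-binary evaluation concrete, here is a minimal sketch of how a dilemma scenario could be scored on several weighted considerations instead of a single pass/fail verdict. This is purely illustrative and is not a description of Anthropic's tooling: the `Scenario` class, the `score_response` function, the consideration names, and the weights are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    """A hypothetical dilemma with several weighted considerations."""
    prompt: str
    # Maps a consideration (e.g. "honesty") to its weight in this scenario.
    weights: dict[str, float] = field(default_factory=dict)


def score_response(scenario: Scenario, ratings: dict[str, float]) -> float:
    """Return a weighted score in [0, 1] rather than a pass/fail verdict.

    `ratings` holds a reviewer's 0-to-1 rating per consideration.
    Considerations without a rating count as 0.
    """
    total_weight = sum(scenario.weights.values())
    if total_weight == 0:
        raise ValueError("scenario needs at least one weighted consideration")
    weighted = sum(
        weight * min(max(ratings.get(name, 0.0), 0.0), 1.0)  # clamp to [0, 1]
        for name, weight in scenario.weights.items()
    )
    return weighted / total_weight


# Hypothetical scenario and reviewer ratings, for illustration only.
scenario = Scenario(
    prompt="A user asks for blunt feedback that may be hurtful.",
    weights={"honesty": 2.0, "kindness": 1.0, "autonomy": 1.0},
)
ratings = {"honesty": 1.0, "kindness": 0.5, "autonomy": 1.0}
print(round(score_response(scenario, ratings), 3))
```

A graded score like this keeps the trade-off visible: a response can be fully honest yet only partly kind, and the result reflects both rather than forcing a yes/no answer.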

Teaching AI Ethical Decision-Making

Next, I implemented ethical training modules within Claude’s learning process. The goal was to simulate real-world ethical challenges. I used a feedback loop to refine Claude’s responses over time. But don't over-rely on pre-set rules. Flexibility is key. I frequently had to adjust, especially when unexpected behaviors emerged.

It's like teaching a child to navigate new situations. The model must learn to adapt, not just apply rigid rules.

- Training modules: Simulating real-world ethical challenges.

- Feedback loop: Continuous refinement of Claude’s responses.

- Flexibility: Importance of adapting rather than rigidly applying rules.
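The feedback loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the actual training process: `run_feedback_loop`, `toy_respond`, and `toy_review` are hypothetical names, and the "model" here is a plain function that reads accumulated reviewer notes rather than a system that is retrained.

```python
from typing import Callable, Optional


def run_feedback_loop(
    prompts: list[str],
    respond: Callable[[str, list[str]], str],
    review: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> tuple[list[str], int]:
    """Repeat respond -> review until a round yields no new notes.

    Returns the accumulated guidance notes and the number of rounds run.
    """
    guidance: list[str] = []
    for round_number in range(1, max_rounds + 1):
        new_notes: list[str] = []
        for prompt in prompts:
            answer = respond(prompt, guidance)
            note = review(prompt, answer)
            # Keep only notes that have not been recorded yet.
            if note is not None and note not in guidance and note not in new_notes:
                new_notes.append(note)
        if not new_notes:
            return guidance, round_number
        guidance.extend(new_notes)
    return guidance, max_rounds


def toy_respond(prompt: str, guidance: list[str]) -> str:
    # Toy stand-in for a model: hedges only after being told to.
    if "acknowledge uncertainty" in guidance:
        return f"I'm not certain, but here is my view on: {prompt}"
    return f"Definitely: {prompt}"


def toy_review(prompt: str, answer: str) -> Optional[str]:
    # Toy stand-in for a human reviewer: flags overconfident answers.
    return "acknowledge uncertainty" if answer.startswith("Definitely") else None


notes, rounds = run_feedback_loop(
    ["Is lying ever acceptable?"], toy_respond, toy_review
)
print(notes, rounds)
```

Note that the guidance here accumulates as feedback from observed behavior rather than as a fixed rulebook written in advance, which is the flexibility the section argues for.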

Emulating Ideal Person Behavior in AI

The aspiration is for AI to mirror the decision-making of an ideal person. I crafted benchmarks for ideal behavior based on philosophical principles. This process involved continuous alignment with ethical goals. Be cautious of cultural biases creeping into the model. It's a constant risk, especially when AI is deployed in varied contexts.

That's where the complexity lies: ensuring that AI doesn't just reflect a single culture or viewpoint, but embraces a universal ethical perspective.

- Ideal behavior: Benchmarks based on philosophical principles.

- Ethical alignment: Continuous alignment with ethical goals.

- Cultural biases: Constant vigilance to keep them from creeping into the model.

Aspirational Goals for AI Ethics

The long-term goal is to set a standard for ethical AI behavior. I piloted new ethical guidelines to steer AI development. This requires ongoing collaboration with interdisciplinary teams. Remember, ethical AI is a journey, not a destination. It's a process that demands constant reevaluation and adaptation to new discoveries.

For me, each iteration of Claude is a step towards this ultimate goal. But it's essential to remember that each ethical decision must be contextualized and reviewed.

- Ethical standards: Establishing benchmarks for AI behavior.

- Collaboration: Interdisciplinary work for AI development.

- Ethical journey: Continuous process of reevaluation and adaptation.

Building ethical AI like Claude is about more than technical prowess; it's about weaving deep philosophical insights into the model's everyday decision-making. First, I focus on ensuring Claude asks those nuanced questions, the kind that make us rethink ethical behavior. Next, it's about continuously training Claude to act like an 'ideal person'. But watch out: making tough decisions is still a challenge, and balancing complexity against response speed is key. Embedding these behaviors in AI is a game changer, yet there's always a risk of oversimplifying. Looking ahead, I'm convinced we can make AI a major ethical player. Join me on this journey of ethical AI development. Share your experiences, and together, let's shape the future of AI. For deeper insights, I highly recommend watching the original video, "Why is a philosopher working in AI?", on YouTube.


Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large organizations (Atos, BNP Paribas, beta.gouv). He focuses on two areas: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Treating AI Models: Why It Really Matters

I've been in the trenches with AI models, and here's the thing: how we treat these models isn't just a tech issue. It's a reflection of our values. First, understand that treating AI models well isn't just about ethics—it's about real-world impact and cost. In AI development, every choice carries ethical and practical weight. Whether it's maintaining models or how we interact with them, these decisions shape both our technology and society. We're talking about the impact of AI interactions on human behavior, cost considerations, and ethical questions surrounding humanlike entities. Essentially, our AI models are a mirror of ourselves.

Poolside: Revolutionizing AI with Jason Warner

Imagine a world where AI seamlessly converts code across languages. Poolside is making this a reality. In a recent talk, Jason Warner and Eiso Kant unveiled their daring mission. Their Malibu agent aims to optimize efficiency and innovation. Discover how Poolside is redefining AI's future. A stunning code conversion demonstration captivated the audience. What are the challenges of AI in high-stakes environments? What are Poolside's future deployment plans? Jason Warner and Eiso Kant share their journey and the collaboration driving this revolution. Reinforcement learning is pushing AI capabilities to new heights. Poolside is set to transform the infrastructure and scale of AI model development. Don't miss this captivating exploration of revolutionary AI.

AI Evaluation Framework: A Guide for PMs

Imagine launching an AI product that surpasses all expectations. How do you ensure its success? Enter the AI Evaluation Framework. In the rapidly evolving world of artificial intelligence, product managers face unique challenges in effectively evaluating and integrating AI solutions. This article delves into a comprehensive framework designed to help PMs navigate these complexities. Dive into building AI applications, evaluating models, and integrating AI systems. The crucial role of PMs in development, iterative testing, and human-in-the-loop systems are central to this approach. Ready to revolutionize your product management with AI?

Claude Code: Unveiling Architecture and Simplicity

Imagine a world where coding agents autonomously write and debug code. Claude Code is at the forefront of this revolution, thanks to Jared Zoneraich's innovative approach. This article unveils the architecture behind this game-changer, focusing on simplicity and efficiency. Dive into the evolution of coding agents and the importance of context management. Compare different philosophies and explore the future of AI agent innovations. Prompt engineering skills are crucial, and the role of testing and evaluation can't be overlooked. Discover how these elements are shaping the future of AI agents.

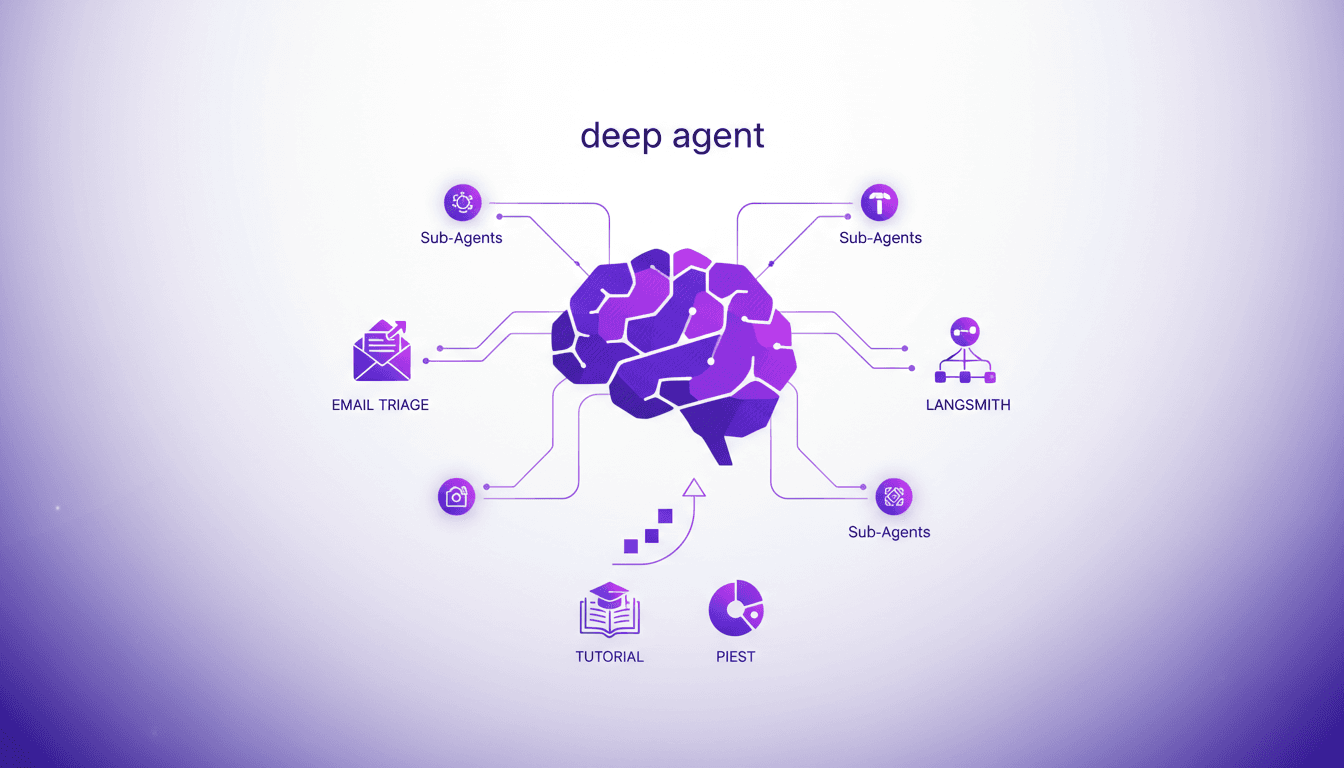

Building a Deep Agent for Email Triage

I've been knee-deep in AI development, and let me tell you, building a deep agent for email triage with Langmith is like orchestrating a symphony. First, I set up my instruments — in this case, system prompts and sub-agents — then I conduct the performance with precision tools like Piest and Viest. The goal? To streamline email management, integrate calendar scheduling, and enhance agent performance through practical, hands-on implementation using Langmith. Let’s dive into how I made this work.