Mistral 3: Europe's Breakthrough or Too Late?

Ever since I got my hands on Mistral 3, I've been diving into the nitty-gritty to understand what really makes it tick. This model isn't just another iteration; it's Europe's bold answer in the global AI race. With 675 billion parameters, it's playing in the big leagues, but is it enough against giants like DeepSeek? As a practitioner, I've been scrutinizing its performance (and with that many parameters, there's a lot to chew on) and comparing its results with other models. But what really catches my interest is Mistral's strategy: releasing base models for fine-tuning. Does this reposition Europe in the open-source space? We'll explore that and, of course, take a close look at how the smaller dense models might change the game. Basically, we're going to see if Mistral 3 is the breakthrough we've been hoping for or just another step in an already well-established race.

Understanding Mistral 3: The Nuts and Bolts

When I first got my hands on Mistral 3, I knew we were looking at a game changer. With 675 billion total parameters, Mistral Large 3 is pushing the boundaries. What's fascinating here is the Mixture of Experts architecture: a router activates only about 41 billion parameters for each token, roughly 6% of the total. That's optimization at its finest! This selective activation preserves performance while cutting the compute spent on each token.
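To make the selective activation concrete, here is a minimal sketch of top-k expert routing in plain Python. The expert count, the value of `k`, and the router scores are hypothetical toy values chosen for illustration, not Mistral's actual configuration; only the 675 billion and 41 billion figures come from this article.

```python
import math

TOTAL_PARAMS_B = 675   # total parameters (billions), from the article
ACTIVE_PARAMS_B = 41   # active parameters (billions), from the article


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters that is used for one token."""
    return active_b / total_b


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns a list of (expert_index, weight) pairs; experts outside the
    top k are simply not run for this token.
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return [(i, probs[i] / kept) for i in top]


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"active share: {share:.1%}")
    # Hypothetical router scores for one token over 8 toy experts.
    scores = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]
    for expert, weight in route_top_k(scores, k=2):
        print(f"expert {expert} weight {weight:.2f}")
```

In this toy setup only two of the eight experts do any work for the token, which is the same idea, at a much smaller scale, as running 41 billion of 675 billion parameters.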

Another highlight of Mistral 3 is its Apache 2.0 license, which opens the door to open-source collaboration. As a developer, I appreciate the flexibility for tweaks and experiments. Unlike one-size-fits-all releases, Mistral focuses on releasing base models for fine-tuning, which makes it easier to adapt a model to a specific domain. But watch out, each approach has its limits: the challenge is to keep the balance between customization and overall performance.

Mistral vs. The Giants: A Comparative Analysis

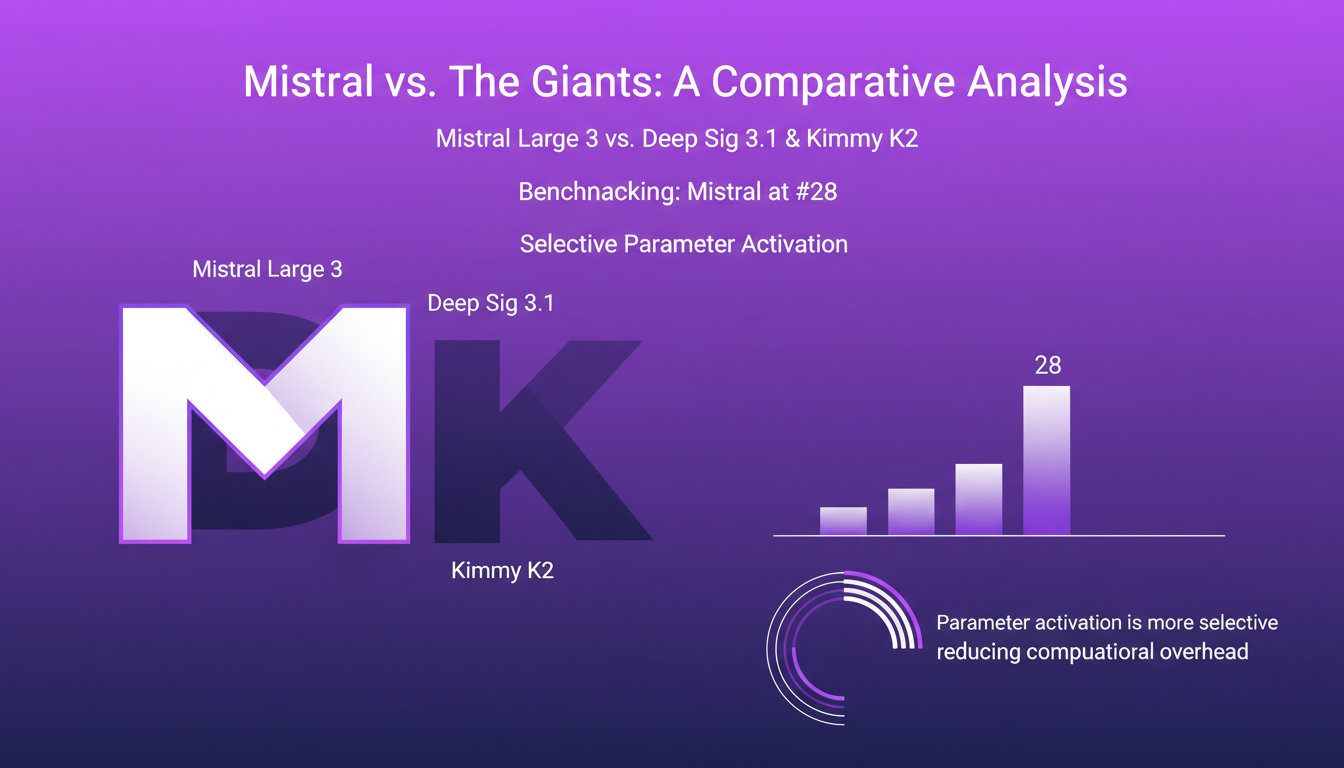

Comparing Mistral Large 3 with DeepSeek V3.1 and Kimi K2, Mistral ranks 28th in the benchmark ranking, just behind Kimi K2 (27th) and DeepSeek V3.1 (25th). Not bad, but there's room for improvement. Mistral's efficiency argument rests on selective activation: only 41 billion parameters are active at a time, which reduces computational overhead.

| Model | Benchmark rank | Active parameters |
|---|---|---|
| Mistral Large 3 | 28th | 41 billion |
| DeepSeek V3.1 | 25th | Not stated |
| Kimi K2 | 27th | Not stated |

The real-world implications for developers lie in choosing the right model based on specific needs. The trade-offs, like managing complexity versus performance gains, are unavoidable. Sometimes, opting for a simpler solution that's quicker to deploy is wiser.
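One way to weigh that trade-off is a back-of-the-envelope estimate of memory versus compute. The sketch below assumes 2 bytes per parameter (16-bit weights) and the common rule of thumb of about 2 FLOPs per active parameter per generated token. Both are simplifying assumptions rather than measured figures, and the 14-billion-parameter dense model is a hypothetical point of comparison. The estimate ignores the KV cache, activations, and quantization.

```python
from dataclasses import dataclass

BYTES_PER_PARAM = 2          # assumption: 16-bit weights
FLOPS_PER_ACTIVE_PARAM = 2   # assumption: rule-of-thumb forward-pass cost


@dataclass(frozen=True)
class ModelSpec:
    name: str
    total_params_b: float    # total parameters, in billions
    active_params_b: float   # parameters used per token, in billions

    def weight_memory_gb(self) -> float:
        """Weights that must be resident, including experts a token skips."""
        # billions of params * bytes per param = gigabytes (decimal)
        return self.total_params_b * BYTES_PER_PARAM

    def gflops_per_token(self) -> float:
        """Approximate forward-pass compute, driven by active parameters."""
        return self.active_params_b * FLOPS_PER_ACTIVE_PARAM


MODELS = [
    ModelSpec("Mistral Large 3 (MoE)", 675, 41),       # figures from the article
    ModelSpec("hypothetical 14B dense model", 14, 14),  # illustrative only
]

if __name__ == "__main__":
    for m in MODELS:
        print(f"{m.name}: ~{m.weight_memory_gb():,.0f} GB of weights, "
              f"~{m.gflops_per_token():,.0f} GFLOPs per token")
```

Under these assumptions, sparse activation lowers the compute per token, but all 675 billion parameters still have to be stored somewhere, which is exactly the kind of deployment cost that can make a simpler model the wiser choice.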

Smaller Models, Bigger Impact: Mistral's Strategic Release

Mistral isn't just about big models. They've also introduced smaller dense models, like Ministral 3, to meet the growing demand for efficiency. These compact models suit targeted AI applications where a very large model would be overkill. But remember, there's always a trade-off between model size and capability.

- Cost-effective

- Performance scaled to the task

- Task-specific adaptability

These models cater to the demand for more efficient solutions, but we shouldn't overlook the extra capacity and features that larger models offer.

Mistral's Role in European and Open-Source AI

With Mistral, European AI has a leader championing innovation and adaptability. Their open-source approach under the Apache 2.0 license encourages community-driven development. However, competing with US giants remains a challenge, especially when it comes to capturing market share.

For me, open-source is a major asset as it allows for model enhancement through collaboration. But don't underestimate the challenges of this approach, particularly the need to stand out against better-funded players like OpenAI or Google.

Looking Ahead: Expectations for Mistral 3

There's a lot of anticipation for the Mistral 3 reasoning model, which could redefine AI standards in Europe. Future updates might address current limitations and enhance performance.

To stay competitive, Mistral must balance innovation with practical application. In my view, the future lies in their ability to adapt models to market needs while maintaining a continuous innovation strategy.

- Expected improvements in future updates

- Competition with the current benchmark leaders

- The balance between innovation and practical application

Mistral 3 is definitely a bold move for European AI. Here's the deal:

- First, with its 675 billion parameters, the Mistral Large 3 model is a beast. But watch out: only 41 billion of those parameters are active at a time, so don't get blinded by the big numbers.

- Second, Mistral's strategy of releasing base models for fine-tuning is a bold one. It allows for real customization, but it also demands a good level of expertise to get the most out of it.

- Lastly, Mistral is aiming to make a mark in the European tech scene. It's promising, but they need to keep innovating and strategically positioning themselves to really compete with the big players.

Looking ahead, I'd say Mistral 3 could be a game changer in Europe, but keep an eye on future developments. If you're in the AI field, keep tabs on Mistral's progress. It might just be the model to consider for your next project. For a deeper dive, I'd recommend checking out the full video; it's packed with insights!

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).
