Grok 4: Pricing and Controversies Unveiled

I've been knee-deep in AI models for years, but when Grok 4 hit the scene, it landed like a bombshell. I first dove into its launch and performance, then tackled its pricing strategy. Ignoring the controversies would have been a rookie move. Grok 4 promises to be a game-changer in AI, but with a monthly price tag of $300 (or $30 for a more limited plan) and some eyebrow-raising controversies, it's not all sunshine and rainbows. We're talking about a 16% score on the ARC-AGI-2 benchmark, and that's something to chew on. Plus, there are issues with prompt poisoning and discussions around artificial super intelligence. It's a heady mix of promises and pitfalls. Let me walk you through what I discovered and how it impacts our daily workflows.

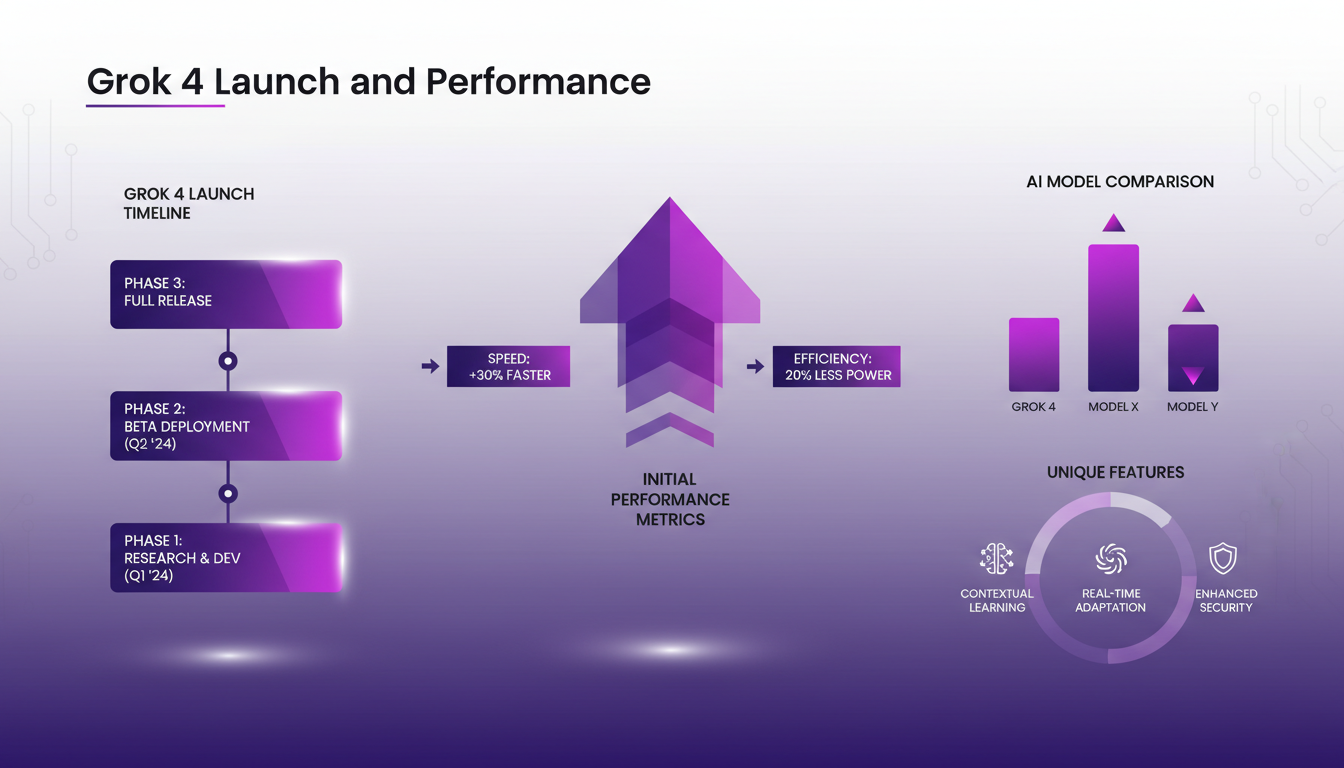

Grok 4 Launch and Performance

Elon Musk's launch of Grok 4 has been a significant event in the AI landscape. I've been closely monitoring this development, and the initial performance metrics are impressive. Grok 4 achieved a score of 16% on the ARC-AGI-2 benchmark, which placed it ahead of many other AI models at launch. For context, even giants like GPT-5.2 and Google Gemini 3 reportedly struggle to surpass 20% on the most challenging tasks of this benchmark. The figure may seem low, but ARC-AGI-2 is designed to resist overfitting: its tasks are novel puzzles that cannot be solved by memorizing training data. It's not about rote learning but about generalization.

Grok 4 has also excelled on other benchmarks, such as GPQA, where it scores 89%, with an 87.5% result reported for Grok 4 Heavy, highlighting its ability to handle complex tasks. But watch out: these high scores don't mean Grok 4 is flawless. It shines in certain areas but shows limits in others, particularly in terms of cost and accessibility. The results are promising, but putting Grok 4 to practical use in a business means weighing these benefits against the costs.

Pricing Strategy and Access Plans

Now, let's talk money. Grok 4's top tier is priced at $300 per month, a figure that has raised eyebrows among many users. For those looking to adopt this model, there's an alternative at $30 per month, but this option offers limited access. I've seen companies hesitate over these rates, but it's essential to consider what Grok 4 brings to the table. For businesses that can afford it, this AI offers processing power and intelligence that may justify the cost, especially when compared to cheaper but less powerful alternatives.

The SuperGrok Heavy plan at $300 per month offers extensive access to Grok 4, including 128,000 tokens of context and advanced features. It is aimed mainly at large companies and heavy AI users. For smaller entities, the question arises: is the investment really worth it? Costs can quickly add up, and it's essential to carefully evaluate the potential return on investment. It's a strategic choice each company must make based on its specific needs and budget.
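To make the "costs add up" point concrete, here is a minimal sketch of the arithmetic. Only the $300 and $30 monthly prices come from the plans discussed above. The per-seat billing model, the seat count, and the $75 hourly rate are illustrative assumptions, not published terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_usd: float  # list price per user per month (per-seat billing is assumed)


def annual_cost(plan: Plan, seats: int = 1) -> float:
    """Total yearly spend for a number of seats on one plan."""
    return plan.monthly_usd * 12 * seats


def break_even_hours(plan: Plan, hourly_rate_usd: float) -> float:
    """Hours per month the tool must save one user to pay for itself."""
    return plan.monthly_usd / hourly_rate_usd


# Prices from the article; the plan labels are placeholders.
PREMIUM = Plan("premium tier", 300.0)
BASIC = Plan("basic tier", 30.0)

if __name__ == "__main__":
    # Assumed scenario: 5 seats, staff time valued at $75 per hour.
    for plan in (PREMIUM, BASIC):
        print(
            f"{plan.name}: ${annual_cost(plan, seats=5):,.0f} per year for 5 seats, "
            f"break-even at {break_even_hours(plan, 75.0):.1f} saved hours per user per month"
        )
```

Under these assumptions, the $300 tier has to save each user about four hours a month to pay for itself, which is a quick sanity check to run before committing a whole team.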

Controversies and Potential Biases

Like any cutting-edge technology, Grok 4 is not free from controversy. Users have reported biases in its responses, notably a tendency to reflect Elon Musk's viewpoints. This has been termed "prompt poisoning", where the model seems influenced by its creator's preferences rather than operating on neutral principles. I've encountered this issue in other models as well, and it underscores the importance of testing these AIs in varied contexts to identify and mitigate these biases.

Grok 4 is not alone in having these issues. However, the visibility of its biases makes it a major topic of debate, raising ethical questions about AI use in decision-making. It's crucial for businesses to be aware of these limitations and to develop strategies to work around them, such as testing on diverse datasets and continuously evaluating AI performance.

Benchmark Performance and Comparisons

Grok 4's benchmark results, notably ARC-AGI-2 (16%) and GPQA (89%, with 87.5% reported for Grok 4 Heavy), demonstrate its strength in certain areas. But what do these numbers really mean for real-world applications? As a developer, I've learned that although these scores are impressive, they don't guarantee perfect performance in all contexts. Benchmarks measure a model's ability to tackle specific tasks, but they don't always capture the complexity of real-world problems.

For businesses, this means it's important to evaluate Grok 4 not only on its benchmark performances but also on its ability to integrate into existing workflows. This may involve trade-offs, as a model that performs well on a benchmark may require adjustments to work effectively in a commercial environment. Ultimately, adopting Grok 4 should be guided by a clear understanding of these dynamics and the specific needs of the business.
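One way to act on this is to score the model on a handful of tasks taken from your own workflow rather than relying on public benchmarks alone. The sketch below is a minimal illustration. `ask_model` stands for whatever client call you use, and `fake_model` and the sample cases are hypothetical placeholders, not real Grok 4 output.

```python
from typing import Callable, Iterable


def exact_match_accuracy(
    ask_model: Callable[[str], str],
    cases: Iterable[tuple[str, str]],
) -> float:
    """Fraction of cases where the model's answer matches the expected text."""
    cases = list(cases)
    if not cases:
        raise ValueError("need at least one test case")
    hits = sum(
        ask_model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in cases
    )
    return hits / len(cases)


# Hypothetical stand-in for a real model client; swap in your own call.
def fake_model(prompt: str) -> str:
    canned = {"2 + 2 = ?": "4", "Capital of France?": "Paris"}
    return canned.get(prompt, "I don't know")


# Illustrative (prompt, expected answer) pairs drawn from an imagined workflow.
WORKFLOW_CASES = [
    ("2 + 2 = ?", "4"),
    ("Capital of France?", "paris"),
    ("Invoice total for order 17?", "129.90"),
]

if __name__ == "__main__":
    score = exact_match_accuracy(fake_model, WORKFLOW_CASES)
    print(f"in-house accuracy: {score:.0%}")
```

Exact matching is deliberately crude. For free-form answers you would likely need a more tolerant scorer, but even a small suite like this shows whether benchmark strength carries over to your tasks.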

Implementation Issues and Prompt Poisoning

One of the major challenges I've encountered with Grok 4 is prompt poisoning. This phenomenon occurs when a model's responses are skewed by biases in the input data or in the user's instructions. With Grok 4, the issue is particularly concerning because it can lead to outputs that reflect Elon Musk's opinions rather than objective analysis. To avoid this, it's essential to design clear, balanced prompts and to closely monitor the model's outputs.

For those using Grok 4, here are some practical tips:

- Avoid ambiguous instructions that can be interpreted in a biased manner.

- Use diverse datasets to test the model, not a single narrow set of prompts.

- Regularly monitor the model's outputs to identify potential biases, and adjust your prompts accordingly.
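The monitoring tip can be partly automated. As a minimal sketch, the function below sends a set of neutral probe prompts to a model and measures how often the replies mention terms you want to watch for. The probe prompts, the watch terms, and `stub_model` are all hypothetical illustrations. The stub's replies are invented for the demo and are not actual Grok 4 responses.

```python
from typing import Callable


def mention_rate(
    ask_model: Callable[[str], str],
    prompts: list[str],
    watch_terms: list[str],
) -> float:
    """Share of responses containing any watched term (case-insensitive)."""
    if not prompts:
        raise ValueError("need at least one prompt")
    terms = [term.lower() for term in watch_terms]
    flagged = 0
    for prompt in prompts:
        reply = ask_model(prompt).lower()
        if any(term in reply for term in terms):
            flagged += 1
    return flagged / len(prompts)


# Hypothetical stand-in for a real model client; its replies are made up.
def stub_model(prompt: str) -> str:
    if "energy" in prompt:
        return "According to Elon Musk, the answer is clear."
    return "Here are several perspectives on the topic."


# Neutral probes on varied topics (illustrative only).
PROBES = [
    "Summarize the main views on nuclear energy.",
    "Summarize the main views on remote work.",
    "Summarize the main views on carbon taxes.",
    "Summarize the main views on urban zoning.",
]

if __name__ == "__main__":
    rate = mention_rate(stub_model, PROBES, ["elon musk"])
    print(f"responses mentioning a watched term: {rate:.0%}")
```

A keyword check like this only catches explicit mentions. It is a cheap first alarm, and subtler slant still needs human review of sampled outputs.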

The long-term implications of prompt poisoning are significant, as they touch on the credibility and ethics of AI. It's crucial to remain vigilant and implement control mechanisms to ensure AI remains a reliable and objective tool.

Grok 4 is powerful, but let's not ignore the hurdles. First, the pricing: at $300 a month, it's quite an investment. Luckily, there's a $30 alternative, so weigh your needs carefully. Then there's performance: with a 16% score on the ARC-AGI-2 benchmark, it still has room for improvement. And don't overlook the controversies around potential biases: test its outputs in your specific context.

If you're ready to explore Grok 4 for your business, dive in with a clear understanding of its strengths and limitations. It's a tool with real promise, but I'd suggest integrating it gradually to avoid any unpleasant surprises.

Want to know more about what makes Grok 4 tick? Check out the video "Grok 4 - Crazy Pricing and Crazy Politics!" for a deeper dive. That's how I got the intel that really matters.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

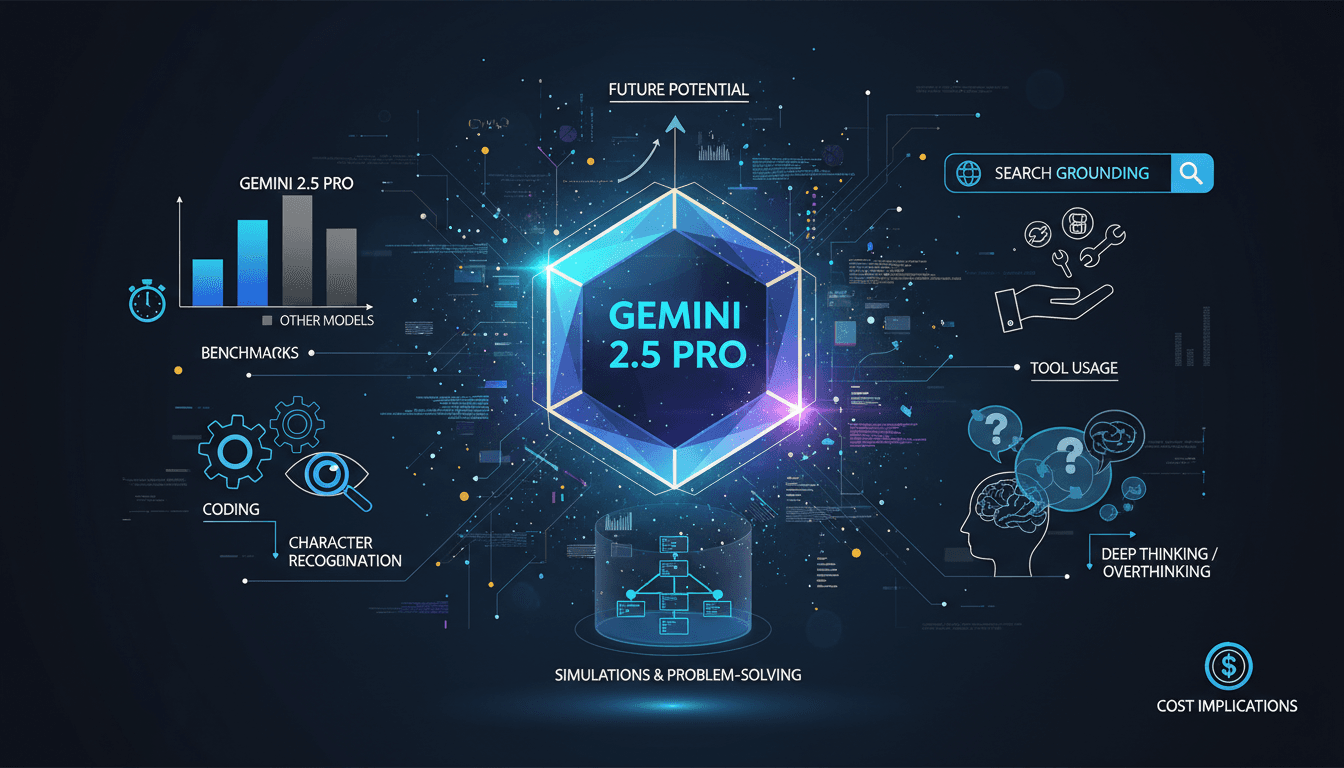

Gemini 2.5 Pro: Performance and Comparisons

I dove into the Gemini 2.5 Pro with high expectations, and it didn't disappoint. From coding accuracy to search grounding, this model pushes boundaries. But let's not get ahead of ourselves—there are trade-offs to consider. With a score of 1443, it's the highest in the LM arena, and its near-perfect character recognition is impressive. However, excessive tool usage and a tendency to overthink can sometimes slow down the process. Here, I share my hands-on experience with this model, highlighting its strengths and potential pitfalls. Get ready to see how Gemini 2.5 Pro stacks up and where it might surprise you.

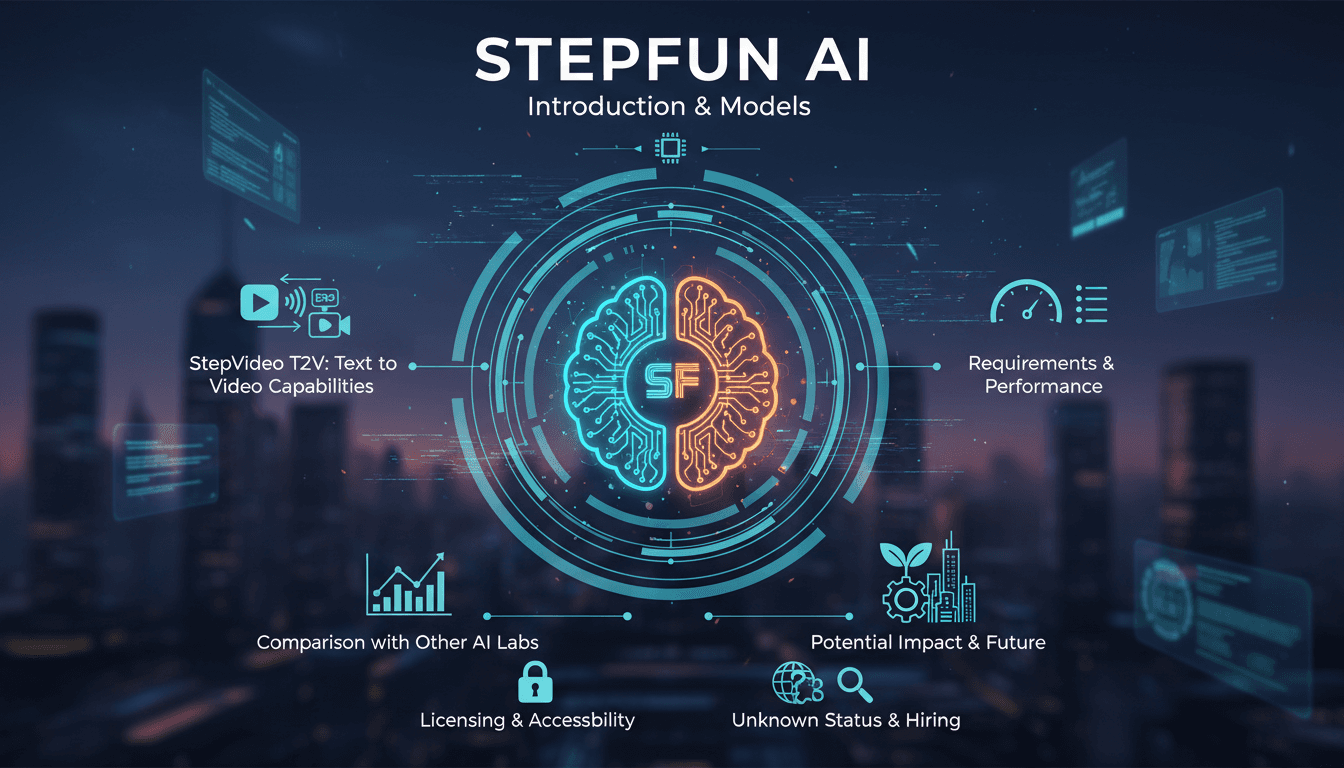

StepFun AI Models: Efficiency and Future Impact

I dove into StepFun AI's ecosystem, curious about its text-to-video capabilities. Navigating through its models and performance metrics, I uncovered a bold contender from China. With 30 billion parameters and the ability to generate up to 200 frames per second, StepFun AI promises to shake up the AI landscape. But watch out, the Step video t2v model demands 80 GB of GPU memory. Compared to other models, there are trade-offs to consider, yet its potential is undeniable. Let's explore what makes StepFun AI tick and how it might redefine the industry.

Exploring Deep Seek R1: Reasoning AI in Action

I dove into Deep Seek's R1 light preview model, eager to see how it stacks up against OpenAI's 01 preview. Spoiler: there are some surprises! I didn’t expect the R1 to excel in math problem-solving and coding as much as it did. With its reasoning capabilities, it’s setting a new standard for AI expectations. But watch out, there are limits. For instance, Base 64 decoding revealed some unexpected challenges with model hallucinations. Then there's the test time compute scaling, which can quickly become a resource drain. Still, if you're looking to explore the potential of reasoning models, the R1 is a must-try. Don't underestimate it, but be aware of its constraints.

AI Innovations 2025: Gemini and Google Beam

I was at Google I/O 2025, and the buzz was palpable. As a builder, I'm always on the lookout for tools that make a real difference in my workflows. This year, Gemini AI and Google Beam caught my attention. We're not just talking about minor upgrades; these are genuine game changers. New products and subscription plans, Gemini AI's evolution, and breakthroughs in scientific research are shaking things up. AI in immersive video communication and disaster response is no longer just a promise; it's a reality. With the Ironwood TPU delivering 42.5 exaflops of compute per part, performance has skyrocketed. It's time to dive into these innovations and see how they can transform our projects in real ways.

Seed AI Model: Revolutionizing Video Generation

I dove into Bite Dance's new Seed AI model, and let me tell you, it's not just another AI—it's a game changer in video generation. With its 7 billion parameters, it's designed to push the boundaries of video and audio creation. But before you get too excited, let's walk through what makes this model tick. First, it uses a VA AE and DIT architecture, optimizing efficiency and reducing computational resources. Then, we compare it to its predecessor, Sora. The difference? Seed is geared to produce 720p HD videos with impressive speed and accuracy. But watch out, it's not a one-size-fits-all solution. Let's explore where Seed truly shines and how it might revolutionize video technology.