Deploying Kimmy K2: My Workflow Experience

I've been deep into AI models for years, and when I got my hands on the Kimmy K2 Thinking model, I knew I was diving into something truly potent. What struck me first was its ability to execute between 200 and 300 sequential tool calls, which is massive, and to interleave its chain of thought with those calls (23 interleaved tool calls on a single complex math problem). Kimmy K2 doesn't just perform; it excels in real-world applications. I'll walk you through how it stacks up against other models, its technical features (watch out for its limits; they can get tricky), and why its pricing and accessibility make it a serious contender. As a practitioner, I'll also share my experience and the pitfalls to avoid.

Introduction to Kimmy K2 Thinking Model

When I first encountered the Kimmy K2 Thinking model, I was skeptical. It's been two years since I last covered an LLM from a Chinese company, and many doubted that Chinese labs could compete with models from OpenAI or Anthropic. Kimmy K2 proves otherwise; it even outperforms the LLaMA models. Its architecture and origins intrigued me, so I dove into integrating it into my existing workflows.

Setting up Kimmy K2 was surprisingly straightforward. I connected Moonshot AI's API to our infrastructure, and within hours I was seeing results. Integration was smooth enough that the model slotted into daily tasks without major hiccups. My first tests were simple practical applications such as creative content writing, and the results were impressive: the model is particularly strong at creative writing and fiction, a domain where other models often fall short.
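To make the setup step concrete, here is a minimal sketch of building a chat-completion request in Python using only the standard library. It assumes (my assumptions, not details from this article) that Moonshot AI's API is OpenAI-compatible at `https://api.moonshot.ai/v1` and that the model id is `kimi-k2-thinking`; verify both in Moonshot's documentation before relying on them. The `MOONSHOT_API_KEY` variable name is likewise hypothetical.

```python
import json
import os
import urllib.request

# Assumptions for illustration only (not taken from the article): Moonshot AI
# exposes an OpenAI-compatible chat-completions endpoint at this base URL, and
# "kimi-k2-thinking" is the model id. Check both against the current API docs.
BASE_URL = "https://api.moonshot.ai/v1"
MODEL_ID = "kimi-k2-thinking"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for one chat completion."""
    body = {
        "model": MODEL_ID,
        "messages": [
            {"role": "system", "content": "You are a creative-writing assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # MOONSHOT_API_KEY is a hypothetical environment variable name.
    req = build_chat_request(
        "Write a 100-word opening for a mystery story.",
        os.environ.get("MOONSHOT_API_KEY", "sk-placeholder"),
    )
    print(req.get_method(), req.full_url)
    # Sending it would be: json.load(urllib.request.urlopen(req))
```

Keeping request construction separate from the network call makes it easy to unit-test the integration before spending any tokens.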

Comparison with Other Models

What sets Kimmy K2 apart is how it handles long, complex tasks: it can execute between 200 and 300 sequential tool calls, which lets it tackle open-ended or ambiguous problems by breaking them down into clear, actionable subtasks. In benchmarks, Kimmy K2 often outperforms models from Anthropic and OpenAI, which is no small feat.
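Sequential tool calling boils down to a loop: the model either requests a tool or returns an answer, each tool result is appended to the history, and the loop repeats. Below is a minimal, provider-agnostic sketch of that loop with a hard cap on tool calls. `Step`, `run_agent`, and the scripted stand-in model are hypothetical illustrations, not Moonshot's API; in a real integration, `model` would wrap an API call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Step:
    """One model turn: either a tool request or a final answer (hypothetical shape)."""
    tool: Optional[str] = None
    args: dict = field(default_factory=dict)
    answer: Optional[str] = None


def run_agent(
    model: Callable[[List[dict]], Step],
    tools: Dict[str, Callable[..., object]],
    task: str,
    max_tool_calls: int = 300,
) -> Tuple[str, int]:
    """Alternate model turns and tool executions until an answer or the budget runs out."""
    history: List[dict] = [{"role": "user", "content": task}]
    calls = 0
    while True:
        step = model(history)
        if step.answer is not None:
            return step.answer, calls
        if calls >= max_tool_calls:
            raise RuntimeError(f"tool-call budget of {max_tool_calls} exhausted")
        result = tools[step.tool](**step.args)  # execute the requested subtask
        calls += 1
        history.append({"role": "assistant", "tool": step.tool, "args": step.args})
        history.append({"role": "tool", "content": str(result)})


def scripted_model(history: List[dict]) -> Step:
    """Stand-in for the LLM: sums 1..5 by requesting one `add` call per subtask."""
    results = [int(m["content"]) for m in history if m["role"] == "tool"]
    running = results[-1] if results else 0
    if len(results) < 5:
        return Step(tool="add", args={"a": running, "b": len(results) + 1})
    return Step(answer=str(running))


if __name__ == "__main__":
    answer, n_calls = run_agent(scripted_model, {"add": lambda a, b: a + b}, "Sum 1..5")
    print(answer, n_calls)  # prints: 15 5
```

The cap is a deliberate design choice: a model that can chain hundreds of calls can also loop, and a budget turns a runaway session into an explicit error.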

What impressed me most was how Kimmy K2 interleaves chain-of-thought reasoning with tool calls. On one complex math problem, it alternated between reasoning and tool calls 23 times, showing a flexibility and adaptability I hadn't seen elsewhere. Kimmy K2 beats every LLaMA model and positions itself as a serious contender against proprietary models.

Technical Capabilities and Features

Now for the technical details. Kimmy K2 uses quantization-aware training, which makes the model more efficient to run while preserving accuracy. It is a trillion-parameter mixture-of-experts (MoE) model: only a small subset of experts is activated for each token, so it can handle large workloads without sacrificing speed. It also integrates chain-of-thought reasoning, which lets it work through complex tasks coherently over many steps.
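The two mechanisms in that paragraph can be illustrated with toy arithmetic. This sketch is not Moonshot's implementation: the 4-bit width, the single per-tensor scale, and top-2 routing are illustrative assumptions. `fake_quant_int4` shows the quantize-dequantize round trip that quantization-aware training inserts into the forward pass, and `top_k_route` shows how a mixture-of-experts router picks a few experts per token, which is why a trillion-parameter model does not compute with all of its parameters on every token.

```python
import math
from typing import Dict, List, Tuple


def fake_quant_int4(weights: List[float]) -> Tuple[List[float], float]:
    """Symmetric per-tensor INT4 quantize-then-dequantize.

    In quantization-aware training this round trip runs in the forward pass so
    the network learns weights that survive low-precision storage; gradients
    usually bypass the rounding (straight-through estimator), not shown here.
    """
    qmax = 7  # signed 4-bit is [-8, 7]; the symmetric scheme uses [-7, 7]
    scale = max(abs(w) for w in weights) / qmax
    if scale == 0.0:
        return list(weights), 0.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return [v * scale for v in q], scale


def top_k_route(logits: List[float], k: int = 2) -> Dict[int, float]:
    """Mixture-of-experts routing: keep the k best-scoring experts and
    renormalise their softmax weights. Only these experts run for the token."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - peak) for i in top]
    total = sum(exps)
    return {i: e / total for i, e in zip(top, exps)}


if __name__ == "__main__":
    deq, s = fake_quant_int4([0.7, -0.33, 0.12, 0.02])
    print(s, deq)
    print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
```

Each dequantized weight lands within half a quantization step of the original, which is the error budget QAT teaches the model to tolerate.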

In my own work, these capabilities have changed everyday tasks. Executing 200 to 300 tool calls without human intervention is a game changer: it has let me automate processes that previously required constant human oversight. Be careful not to overload the model, though, as this can lead to performance issues.
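A standard client-side safeguard against overload, not specific to Kimmy K2 and not something Moonshot prescribes in this article, is capped exponential backoff with jitter. In this sketch the `Overloaded` exception is hypothetical: map it to whatever error your HTTP client raises for an overload or rate-limit response (for example HTTP 429). The base delay and cap are assumptions to tune.

```python
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


class Overloaded(Exception):
    """Hypothetical: raise this from your client when the API reports overload (e.g. HTTP 429)."""


def backoff_delays(retries: int, base: float = 1.0, cap: float = 30.0,
                   rng: Callable[[], float] = random.random) -> List[float]:
    """Capped exponential backoff with full jitter: delay_n = U[0, 1) * min(cap, base * 2**n)."""
    return [rng() * min(cap, base * 2 ** attempt) for attempt in range(retries)]


def call_with_backoff(fn: Callable[[], T], retries: int = 5, base: float = 1.0,
                      cap: float = 30.0, sleep: Callable[[float], None] = time.sleep,
                      rng: Callable[[], float] = random.random) -> T:
    """Call fn, waiting progressively longer after each Overloaded error."""
    delays = backoff_delays(retries, base, cap, rng)
    for attempt in range(retries + 1):
        try:
            return fn()
        except Overloaded:
            if attempt == retries:
                raise  # out of retries: surface the error to the caller
            sleep(delays[attempt])
```

The jitter spreads retries from many parallel agent sessions over time, so they do not all hit the API again at the same instant.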

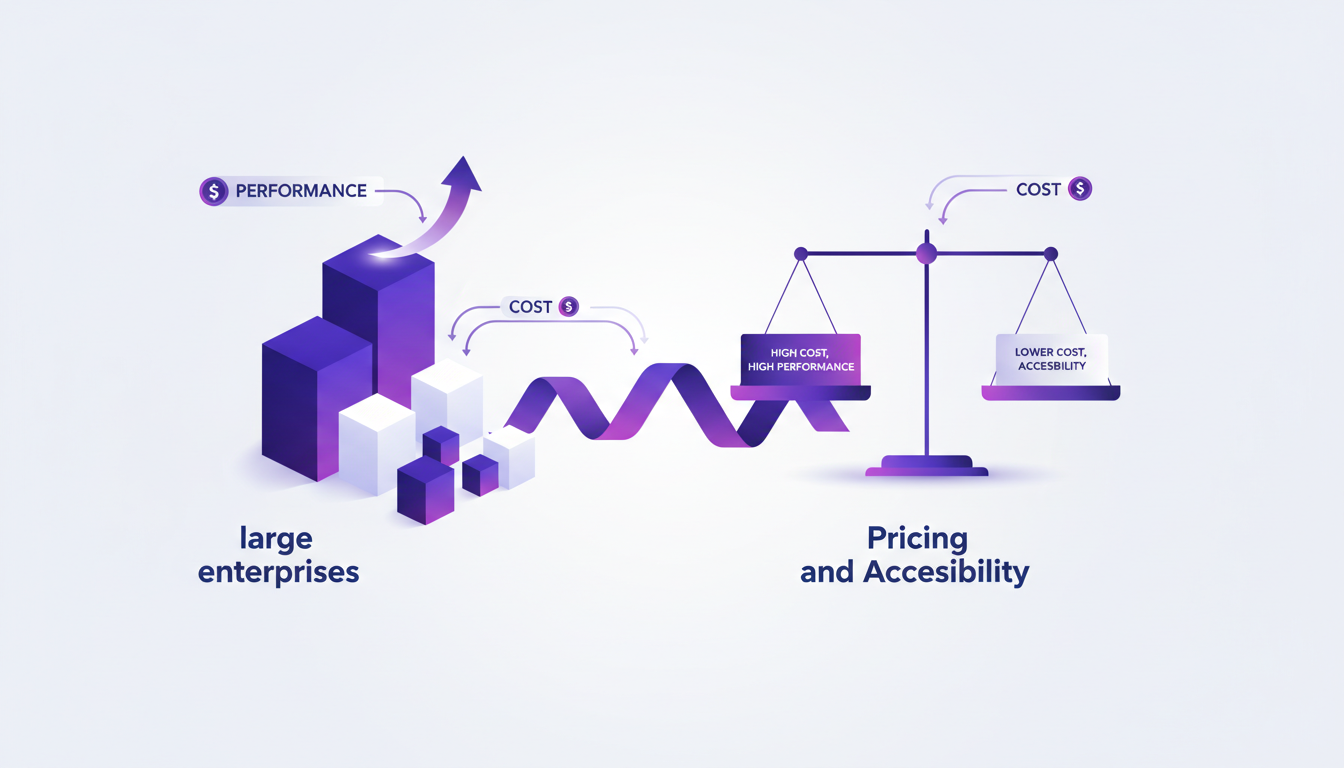

Pricing and Accessibility

The cost of using Kimmy K2 depends on your needs. Moonshot AI offers several pricing tiers through its API, making it accessible to both small and large enterprises. For medium-sized businesses, I've found the cost-performance ratio well balanced; for startups, however, the cost may be an initial barrier.

Moonshot AI's support is also noteworthy. Its vendor verifier system checks that the providers offering the Kimmy K2 model deliver consistent quality. This reassured me about the service's reliability, a crucial point when integrating a new tool into a workflow.
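I don't know the internals of Moonshot's verifier, so the following is only a hypothetical illustration of the kind of check such a tool can run: send the same prompts to each provider and score how often the returned tool-call arguments are well-formed. The provider names, the validity criterion (parses as a JSON object with all required keys), and the 0.95 threshold are all assumptions.

```python
import json
from typing import Dict, List, Set, Tuple


def tool_call_validity(raw_arguments: List[str], required_keys: Set[str]) -> float:
    """Share of tool-call argument strings that parse as a JSON object containing every required key."""
    if not raw_arguments:
        return 0.0
    valid = 0
    for raw in raw_arguments:
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed JSON counts as a failure
        if isinstance(parsed, dict) and all(k in parsed for k in required_keys):
            valid += 1
    return valid / len(raw_arguments)


def flag_providers(samples: Dict[str, List[str]], required_keys: Set[str],
                   threshold: float = 0.95) -> Tuple[Dict[str, float], List[str]]:
    """Score each provider on the same prompts and list those below the threshold."""
    scores = {name: tool_call_validity(args, required_keys) for name, args in samples.items()}
    return scores, sorted(name for name, score in scores.items() if score < threshold)
```

Running the same check yourself before switching providers is a cheap way to catch a deployment that silently degrades tool calling.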

Future Implications of AI Models

Looking ahead, Kimmy K2 paves the way for new AI applications. Capabilities like these could well redefine industry standards, but they also raise ethical questions, particularly around quality control and accountability for decisions made by AI.

I believe Kimmy K2 sets an important precedent for future models, especially in agentic tool integration and complex task automation. This could transform not just how we use AI, but also how we design technological solutions.

In conclusion, while Kimmy K2 is not without its flaws, its capabilities and potential impact make it a major player in the current AI landscape. I'm eager to see how this model will evolve and influence future developments in the field of artificial intelligence.

Kimmy K2 Thinking isn't just another model; it's a robust tool for builders like us. I've put it through the wringer, and here are my takeaways:

- Efficiency and innovation: Kimmy K2 is more than a buzzword. It interleaves chain-of-thought reasoning with tool calls (23 of them on a single complex math problem) and can execute 200 to 300 sequential tool calls.

- Comparison with other models: In two years, I haven't seen another LLM from a Chinese company that comes close to its capabilities. We've got a real contender here.

- Pricing and accessibility: It's affordable, but watch out for the hidden costs of workflows that make many calls. Test carefully.
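To see where the hidden costs of multiple calls come from, here is a back-of-the-envelope estimator. It assumes each sequential call resends the whole conversation as input and appends the model's output plus a tool result to the context; the token counts and per-million-token prices in the example are made-up placeholders, not Moonshot's rate card.

```python
from typing import Tuple


def agent_run_cost(n_calls: int, prompt_tokens: int, added_tokens_per_call: int,
                   output_tokens_per_call: int, price_in_per_m: float,
                   price_out_per_m: float) -> Tuple[int, int, float]:
    """Estimate tokens and cost for n sequential calls that resend the whole history.

    Each call bills the full context as input, then the model's output and the
    tool result are appended, so input tokens grow quadratically with n_calls.
    """
    total_in = 0
    context = prompt_tokens
    for _ in range(n_calls):
        total_in += context
        context += output_tokens_per_call + added_tokens_per_call
    total_out = n_calls * output_tokens_per_call
    cost = total_in / 1e6 * price_in_per_m + total_out / 1e6 * price_out_per_m
    return total_in, total_out, cost


if __name__ == "__main__":
    # Hypothetical prices in USD per million tokens; substitute the real rate card.
    for n in (10, 100, 300):
        tin, tout, usd = agent_run_cost(n, 1_000, 500, 200, 1.0, 4.0)
        print(f"{n:>3} calls: {tin:,} input tokens, {tout:,} output tokens, ${usd:.2f}")
```

With these placeholder numbers, a 300-call run bills 31,695,000 input tokens even though each step adds only 700 tokens to the context. If the provider discounts cached input tokens, the real bill will be lower, but the quadratic growth is why long agentic runs deserve a cost test before production.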

Looking ahead, if you master its nuances, Kimmy K2 could be a real game changer. But, as always, there's a learning curve to tackle.

If you're considering integrating Kimmy K2 into your workflow, start small and test thoroughly. I encourage you to watch the full video for a deeper dive into this intriguing model: New Kimmy K2 Thinking Video.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).
