Building Knowledge Graphs: A Practical Guide

I remember the first time I stumbled upon knowledge graphs. It felt like discovering a secret weapon for data organization. But then the complexity hit. Navigating the maze of graph structures isn't straightforward. Yet, when I connect the dots, the impact on my models' performance is undeniable. Knowledge graphs aren't just powerful tools; they're almost indispensable in a world where managing complex IT systems is the norm. But beware, don't underestimate the learning curve. In this article, I show you how I tamed these tools and how you can effectively integrate them into your projects.

The first time I encountered knowledge graphs, it was like opening a Pandora's box of possibilities for data organization. We're talking a real game changer, but with a catch. The complexity of these structures hit me hard. I found myself juggling ontologies, graph databases, and query languages, trying not to drown in a sea of data. Yet, once I figured out how to orchestrate them, I saw a significant boost in my models' performance. Knowledge graphs are powerful tools for managing the complexity of modern IT systems, but watch out—the learning curve is steep. In this article, I share my journey, the mistakes I made, and how I finally integrated these tools into my daily workflows.

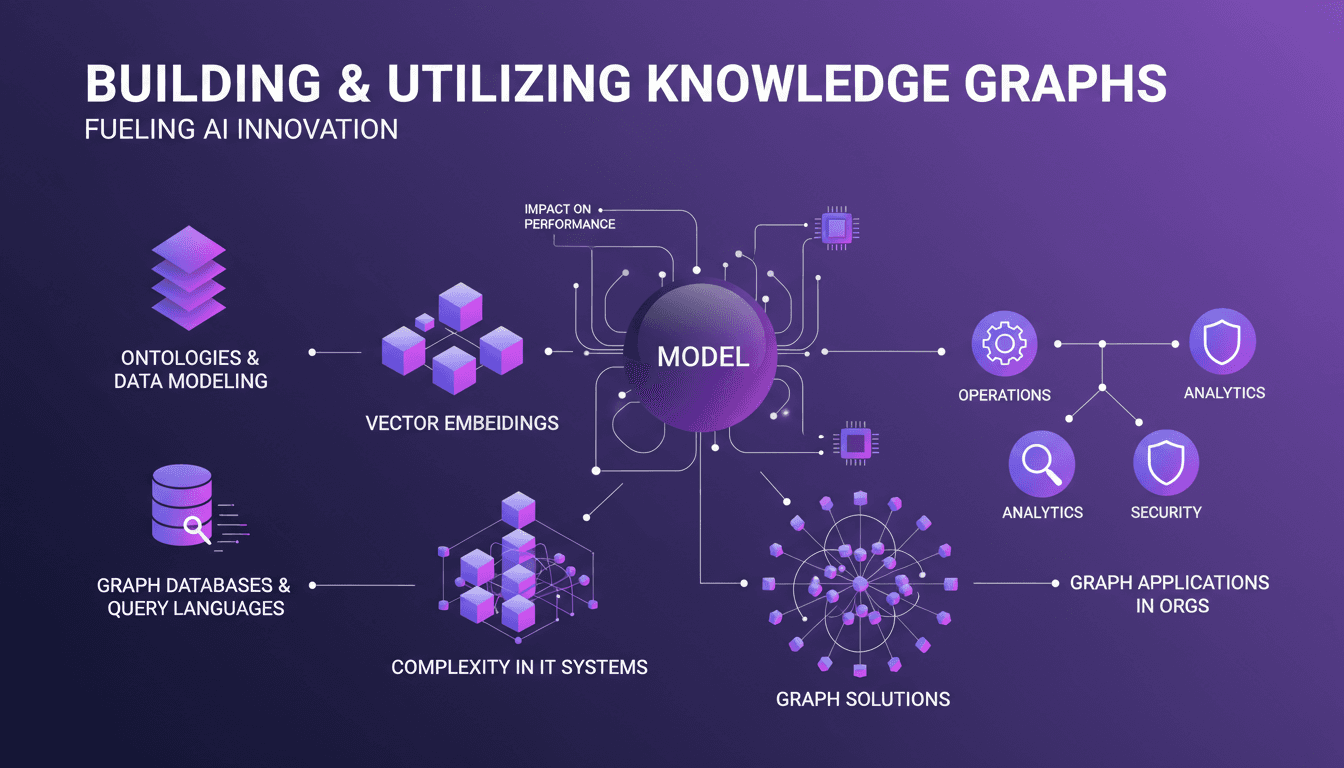

Building and Utilizing Knowledge Graphs

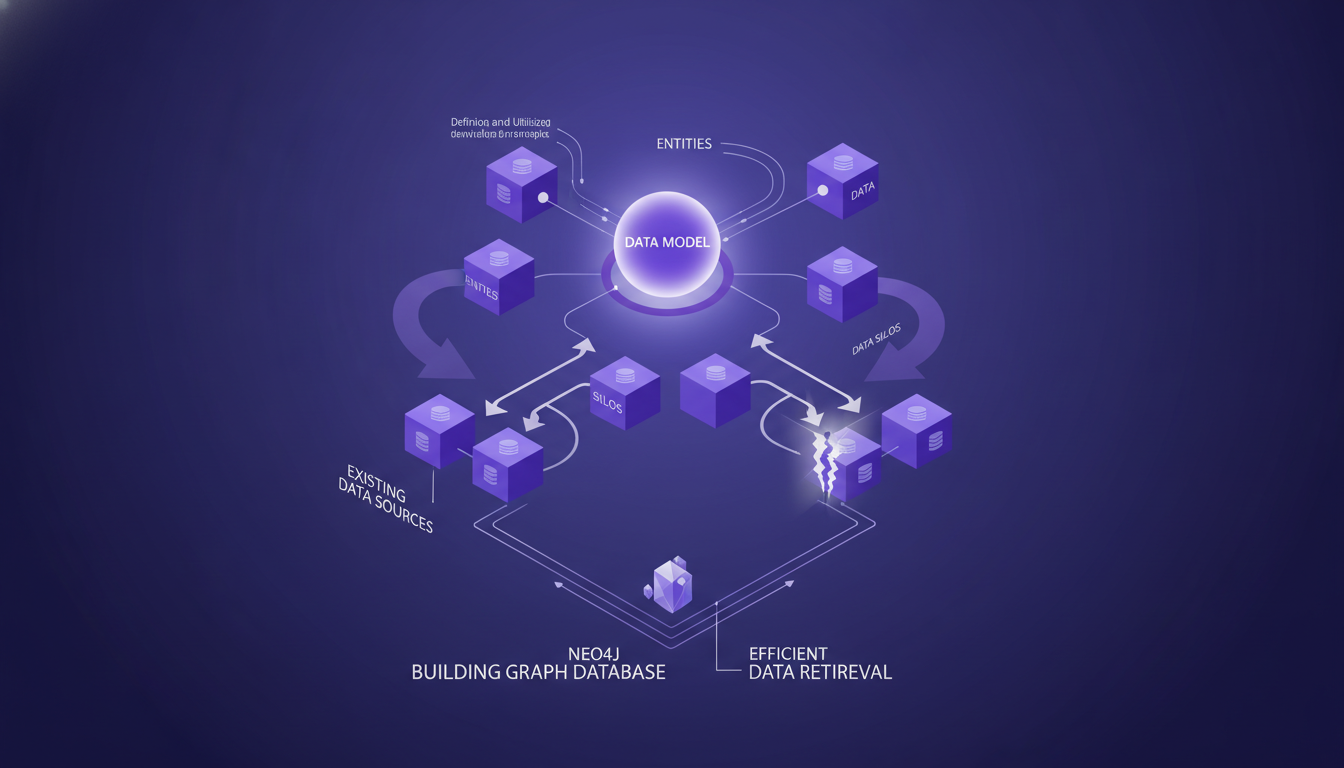

I dove into building knowledge graphs by first defining a solid data model. This is key: you start by identifying the key entities that will become the nodes of your graph. Take a bank, for example: entities would be the bank itself, a client, an account. Then, I integrate existing data sources. Watch out for data silos that can complicate integration.

I use graph databases like Neo4j for efficient data retrieval. But don't let complexity overwhelm you. It's essential to balance between detail and complexity. Don't over-engineer your model. Always keep scalability in mind: plan for tens of millions of nodes. It's a challenge but crucial if you want your system to be sustainable in the long run.

- Define a data model with entities as nodes.

- Integrate existing data sources while avoiding silos.

- Use Neo4j for efficient data retrieval.

- Balance detail and complexity; don't over-engineer.

- Plan for scalability with millions of nodes.

Impact of Graph Structures on Model Performance

Graph structures can significantly enhance data relationships. I incorporate vector embeddings to enrich node features. But watch out for performance bottlenecks that can slow down queries.

I optimize graph traversal algorithms for better efficiency. There's always a trade-off between precision and performance, and it's a balance you need to master to avoid wasting time.

- Enhance data relationships with graph structures.

- Incorporate vector embeddings to enrich features.

- Avoid performance bottlenecks.

- Optimize traversal algorithms for efficiency.

- Balance precision and performance.

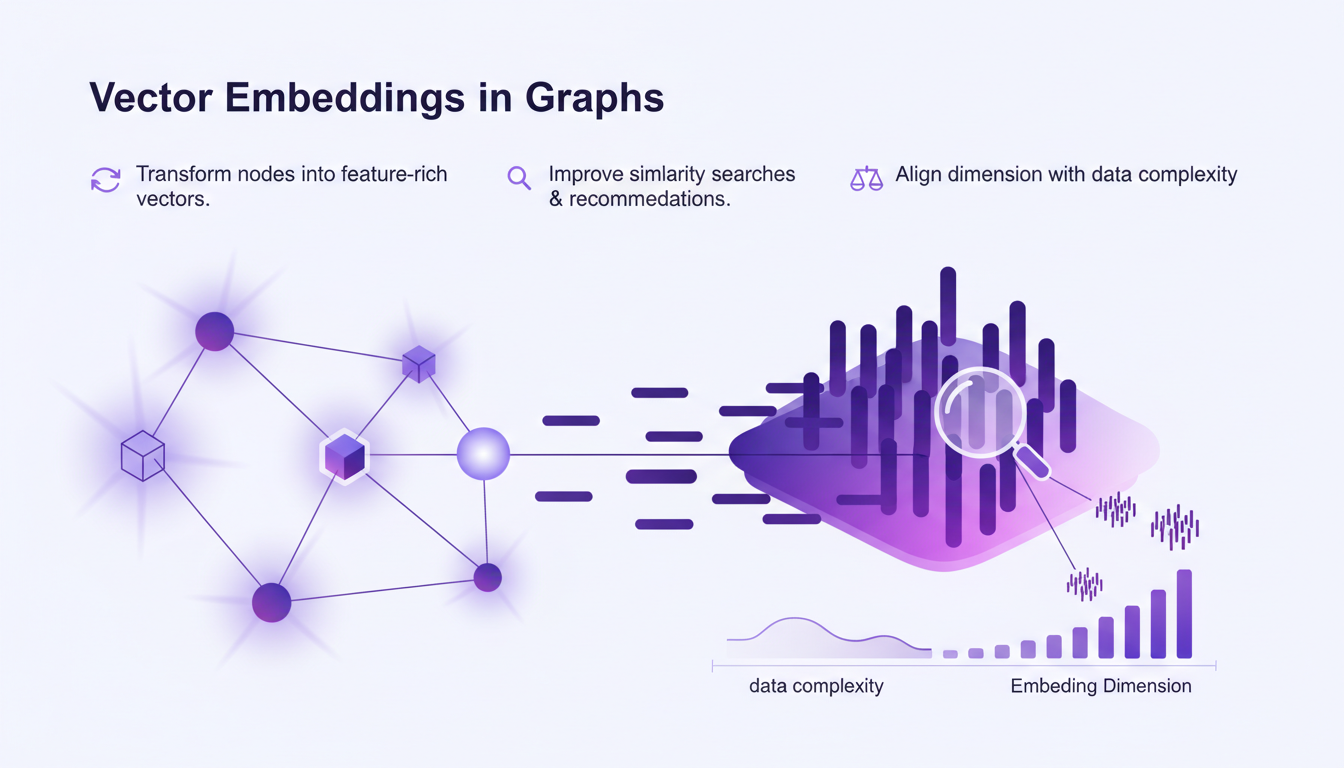

Vector Embeddings in Graphs

Vector embeddings transform nodes into feature-rich vectors. This improves similarity searches and recommendations. However, ensure your embedding dimension aligns with your data complexity.

Overfitting can be a risk; it's crucial to regularize your embeddings. Test different embedding techniques to find the best fit for your situation.

- Transform nodes into feature-rich vectors.

- Improve similarity searches and recommendations.

- Align embedding dimension with data complexity.

- Regularize embeddings to avoid overfitting.

- Test different embedding techniques.

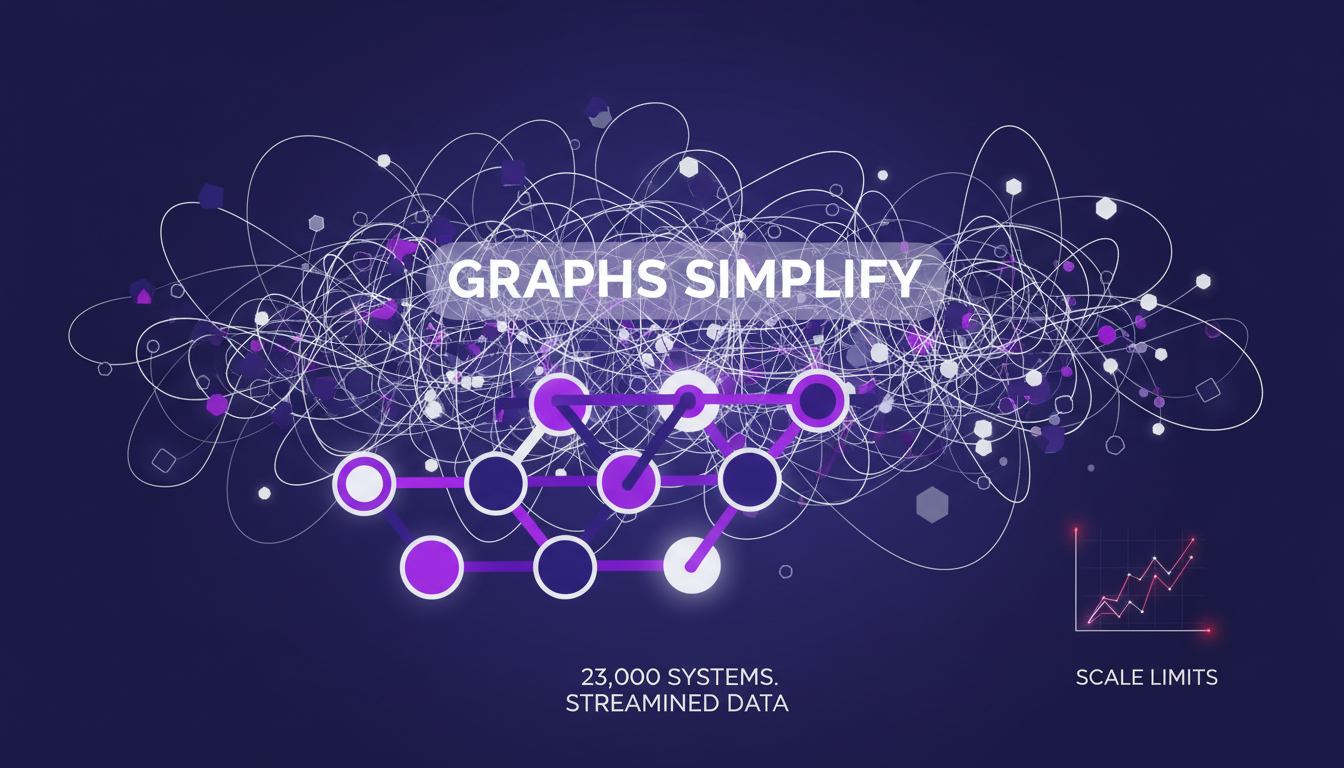

Complexity in IT Systems and Graph Solutions

Graphs can simplify the complexity of interconnected systems. However, it's crucial to understand the limits of graph scalability in large IT infrastructures.

Graph solutions can streamline data integration across 23,000 systems. But beware, if the graph is poorly structured, the failure rate can reach 99%. Leverage graphs to prioritize and focus on the top organizational goals.

- Simplify the complexity of interconnected systems with graphs.

- Understand the limits of graph scalability.

- Integrate data across 23,000 systems.

- Avoid a 99% failure rate with proper graph structure.

- Prioritize and focus on key organizational goals.

Graph Databases, Query Languages, and Applications

Graph databases like Neo4j and their query languages, such as Cypher and Gremlin, are essential. These languages are powerful but come with a learning curve. Choose the right database that fits your application's needs.

Graph applications can transform organizational data strategies. Plan for integration with existing systems and workflows, keeping in mind the three overarching organizational priorities you need to stay focused on.

- Use Neo4j and graph query languages.

- Master Cypher and Gremlin despite their learning curve.

- Choose the right database for your application's needs.

- Transform data strategies with graph applications.

- Plan for integration with existing systems.

Diving into knowledge graphs is a real game changer for data management and model performance. But let's not kid ourselves, it's not a magic wand. I had to plan my graph structures carefully and pick the right tools, or else it's chaos. For example, even with 100 types of insurance in a single Postgres database, the graph structure revealed insights I hadn't imagined. Key takeaway one: understanding how to structure your graph is fundamental. Then, leverage vector embeddings, they turbo-charge performance. Finally, be aware of the complexity it introduces to your IT systems, especially when juggling 23,000 different systems. Ready to start building your own knowledge graph? With the right strategies and tools, watch your data management capabilities soar. For a deeper dive, check out the video "The Graph Problem Most Developers Don't Know They Have" on YouTube; it's a goldmine for further exploration.

Frequently Asked Questions

Thibault Le Balier

Co-fondateur & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Open Hands Model Performance: Local and Efficient

I’ve been diving into local AI models for coding, and let me tell you, the Open Hands model is a game changer. Running a 7 billion parameter model locally isn’t just possible—it’s efficient if you know how to handle it. In this article, I’ll walk you through my experience: from setup to code examples, comparing it with other models, and error handling. You’ll see how these models can transform your daily programming tasks. Watch out for context window limits, though. But once optimized, the impact is direct, especially for tackling those tricky Stack Overflow questions.

Accessing GPT-40 on ChatGPT: Practical Tips

I remember the day OpenAI announced the deprecation of some models. The frustration was palpable among us users, myself included. But I found a way to navigate this chaos, accessing legacy models like GPT-40 while embracing the new GPT-5. In this article, I share how I orchestrated that. With OpenAI's rapid updates, staying current can feel like a juggling act. The deprecation of older models and introduction of new ones like GPT-5 have left many scrambling. But with the right approach, you can leverage these changes. I walk you through accessing legacy models, the use cases of GPT-5, and how to configure your model selection settings on ChatGPT, while keeping an eye on rate limits and computational requirements.

React Compiler: Transforming Frontend

I still remember the first time I flipped on the React Compiler in a project. It felt like turning on a light switch that instantly transformed the room's atmosphere. Components that used to drag suddenly felt snappy, and my performance metrics were winking back at me. But hold on, this isn't magic. It's the result of precise orchestration and a bit of elbow grease. In the ever-evolving world of frontend development, the React Compiler is emerging as a true game changer. It automates optimization in ways we could only dream of a few years ago. Let's dive into how it's reshaping the digital landscape and what it means for us, the builders of tomorrow.

Voice Cloning: Efficient Model for Commercial Use

I dove into voice cloning out of necessity—clients needed unique voiceovers without the hassle of endless recording sessions. That's when I stumbled upon this voice cloning model. First thing I did? Put it against Eleven Labs to see if it could hold its ground. Voice cloning isn't just about mimicking tones—it's about creating a scalable solution for commercial applications. In this article, I'll take you behind the scenes of this model: where it shines, where it falters, and the limitations you need to watch out for. If you've dabbled in voice cloning before, you know technical specs and legal considerations are crucial. I’ll walk you through the model's nuances, its commercial potential, and how it really stacks up against Eleven Labs.

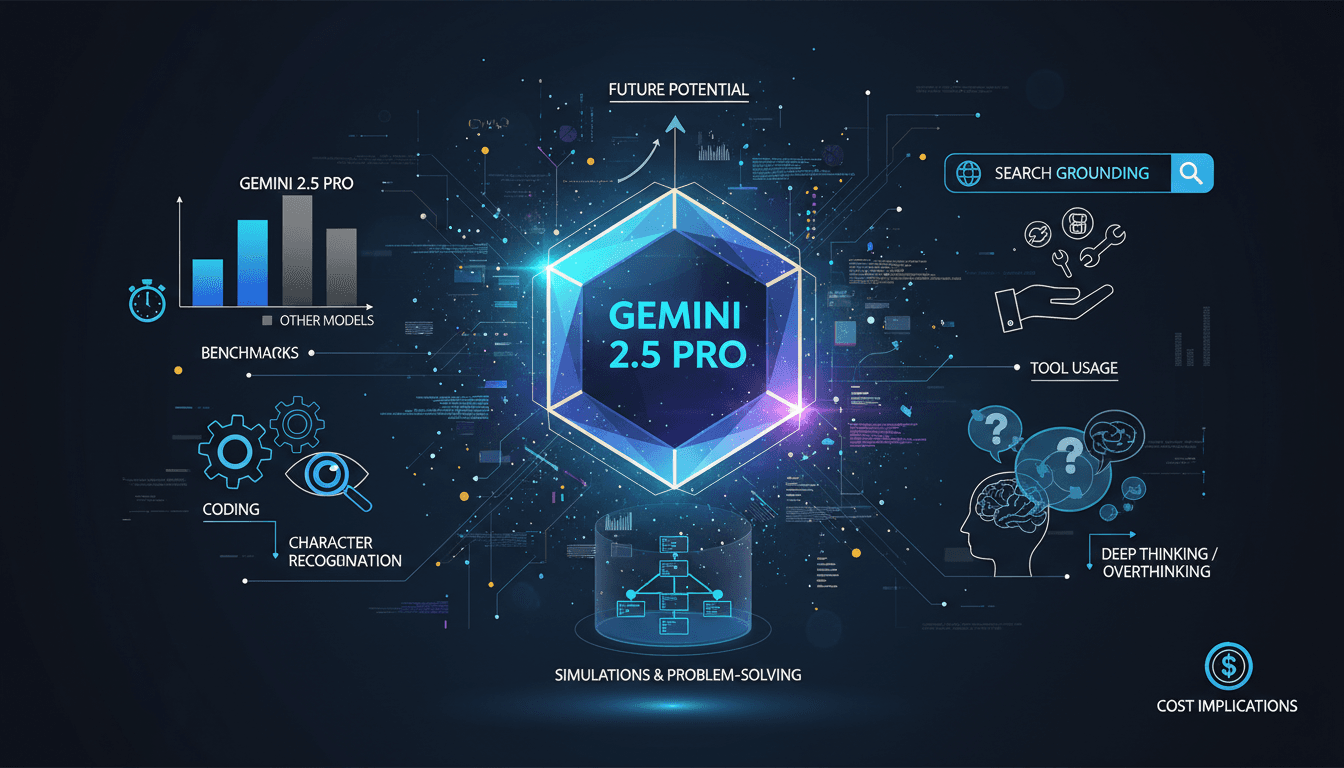

Gemini 2.5 Pro: Performance and Comparisons

I dove into the Gemini 2.5 Pro with high expectations, and it didn't disappoint. From coding accuracy to search grounding, this model pushes boundaries. But let's not get ahead of ourselves—there are trade-offs to consider. With a score of 1443, it's the highest in the LM arena, and its near-perfect character recognition is impressive. However, excessive tool usage and a tendency to overthink can sometimes slow down the process. Here, I share my hands-on experience with this model, highlighting its strengths and potential pitfalls. Get ready to see how Gemini 2.5 Pro stacks up and where it might surprise you.