Clone Any Voice for Free: Qwen TTS Revolutionizes

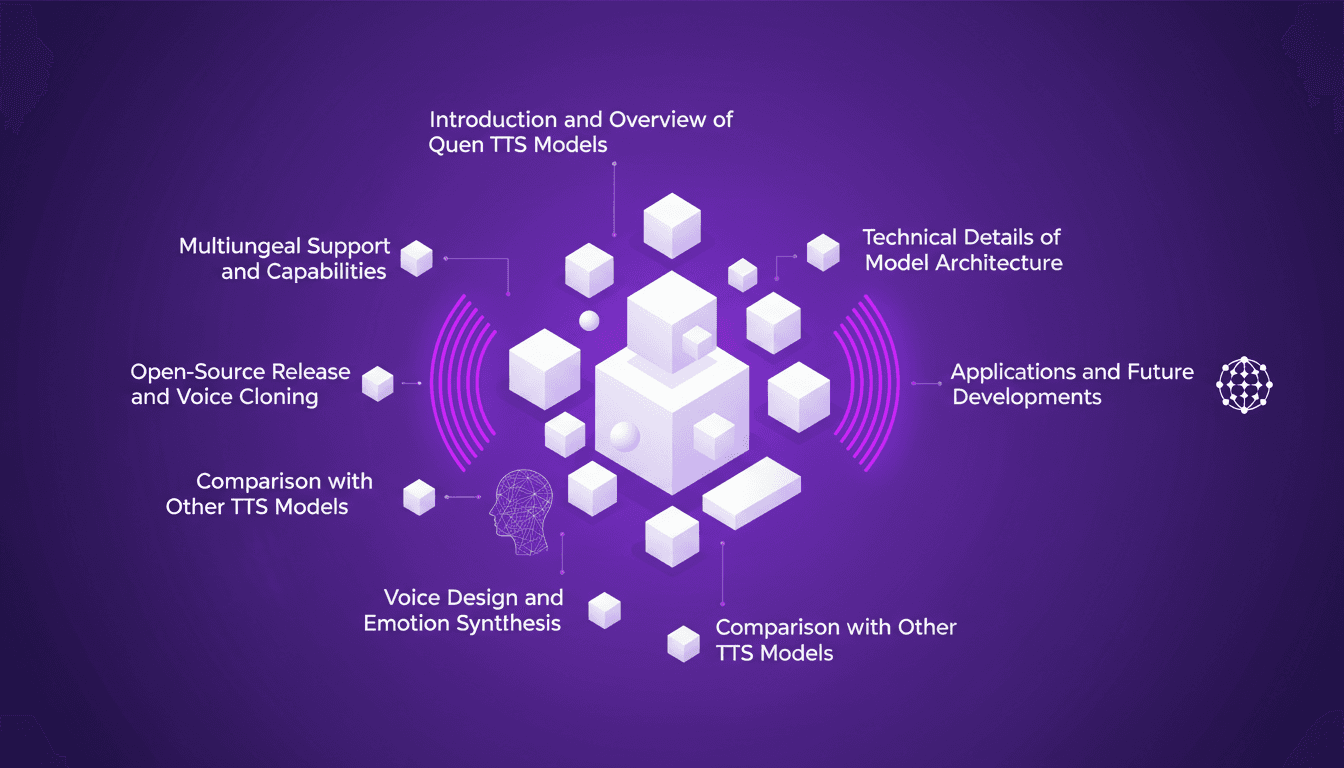

I remember the first time I cloned a voice with Qwen TTS: it was like stepping into the future. Suddenly, I had this incredibly powerful tool at my fingertips, and it was open source. This isn't just theory; it changes what anyone interested in voice cloning can do today. When Qwen announced their TTS models last June, I knew it was going to be a game changer. By September, Qwen3-TTS-Flash with multilingual support was out. With models ranging from 0.6 billion to 1.7 billion parameters, there's a lot to explore. But watch out: there are technical limits you need to navigate. In this article, I'll walk you through how I use this tech, from multilingual capabilities and the open-source release to voice cloning and emotion synthesis. You can start using it today, but don't let the excitement burn you before you know the limits.

Getting Started with Qwen TTS Models

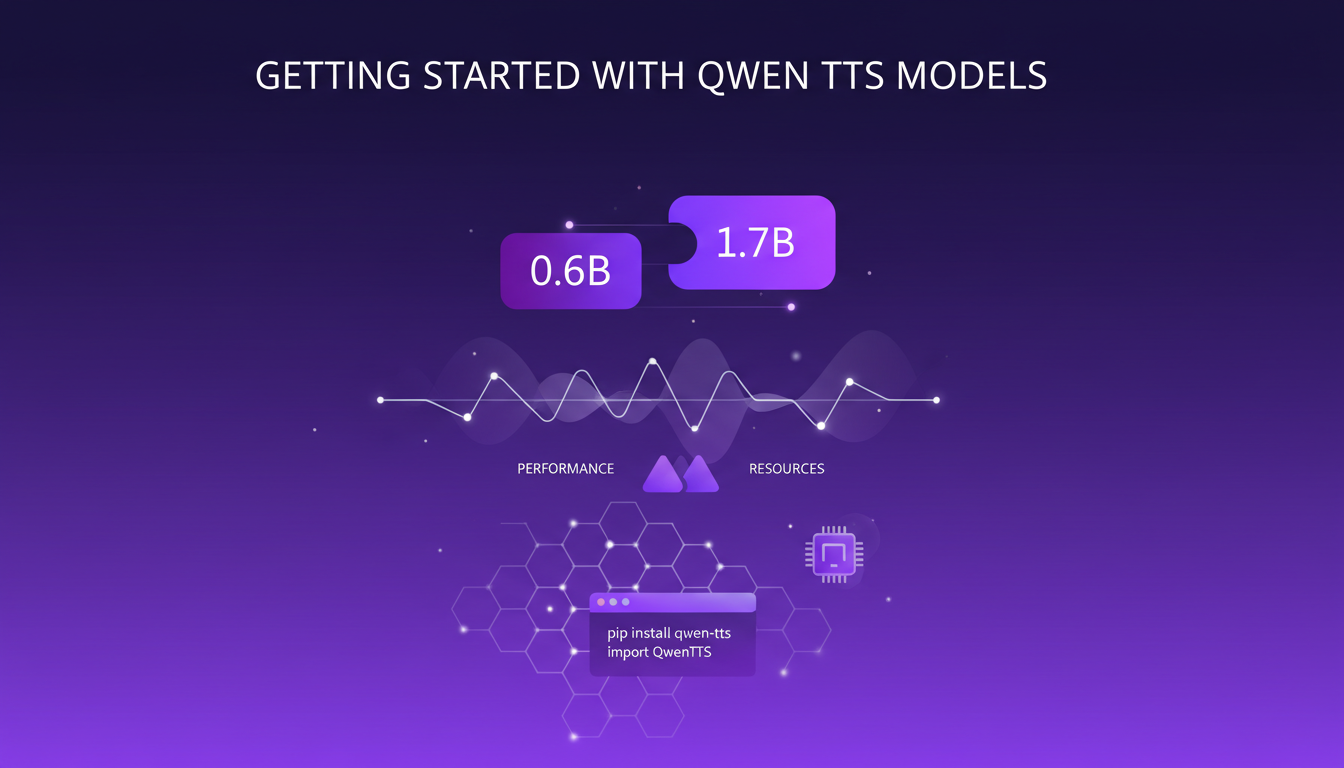

When I first started tinkering with the Qwen TTS models, the variety of options was striking. You have the smaller 0.6B models and the larger 1.7B models. Choosing between them really depends on your use case. The smaller model suits quick, lightweight tasks, while the larger one offers more power, especially for voice cloning.

Setting up your environment for Qwen TTS isn't rocket science, but expect some initial challenges. For instance, I discovered that resource management is crucial, especially with the 1.7B model. I got burned once, thinking my setup could handle everything without breaking a sweat. Rookie mistake!
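To make the resource question concrete, here is a minimal sketch of the back-of-the-envelope check I wish I had run first. It only estimates the size of the weights (parameters times bytes per parameter); the function names, the `float16` default, and the 1.5x headroom factor are my own assumptions for illustration, not anything published by Qwen.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# This covers weights only. Activations, audio buffers, and framework
# overhead come on top, so treat the result as a lower bound.

BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(params_billion: float, dtype: str = "float16") -> float:
    """Approximate size of the model weights in GB (10^9 bytes)."""
    return params_billion * 1e9 * BYTES_PER_PARAM[dtype] / 1e9


def fits(params_billion: float, available_gb: float,
         dtype: str = "float16", headroom: float = 1.5) -> bool:
    """Assumed rule of thumb: require `headroom` times the weight size."""
    return weight_memory_gb(params_billion, dtype) * headroom <= available_gb


if __name__ == "__main__":
    for size in (0.6, 1.7):
        print(f"{size}B in float16: ~{weight_memory_gb(size):.1f} GB of weights")
```

In half precision this works out to roughly 1.2 GB of weights for the 0.6B model and 3.4 GB for the 1.7B model, before any runtime overhead.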

Exploring Multilingual Capabilities

With support for 10 languages, 9 dialects, and 49 timbres, Qwen TTS's multilingual capabilities are impressive. This opens doors for reaching a global audience. However, be warned: integrating these languages is no trivial task. I initially underestimated the complexity of the setup, naively thinking everything would run smoothly.

To implement multilingual TTS, you really need to understand your target audience's needs and choose languages accordingly. But don't spread yourself too thin either, as each added language complicates the architecture.

- Clearly define your target languages from the start.

- Test each language individually to avoid surprises.

- Consider the technical support needed for each language.
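The "test each language individually" advice above can be sketched as a small smoke-test harness. This is an illustration under assumptions: `synthesize` stands for whatever TTS call you actually use, `fake_synthesize` is a stand-in so the example runs on its own, and the language codes are placeholders rather than the identifiers Qwen TTS expects.

```python
from typing import Callable, Dict


def smoke_test_languages(
    synthesize: Callable[[str, str], bytes],
    samples: Dict[str, str],
) -> Dict[str, str]:
    """Run one short synthesis per language and report the outcome.

    `synthesize(text, language)` is a placeholder for your real TTS call;
    it is expected to return raw audio bytes.
    """
    results = {}
    for language, text in samples.items():
        try:
            audio = synthesize(text, language)
            results[language] = "ok" if audio else "empty audio"
        except Exception as exc:  # keep going so one failure doesn't hide others
            results[language] = f"failed: {exc}"
    return results


def fake_synthesize(text: str, language: str) -> bytes:
    """Stand-in backend for the demo; pretends only 'en' and 'fr' work."""
    if language not in {"en", "fr"}:
        raise ValueError(f"unsupported language: {language}")
    return text.encode("utf-8")


if __name__ == "__main__":
    samples = {"en": "Hello, world.", "fr": "Bonjour le monde.", "xx": "test"}
    for language, status in smoke_test_languages(fake_synthesize, samples).items():
        print(f"{language}: {status}")
```

Running each language in isolation and collecting the results, rather than stopping at the first error, shows every problem language in a single pass.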

Open-Source Release and Voice Cloning

Qwen's decision to make their models open source is a game changer for developers. Now I can clone a voice without the usual constraints of proprietary APIs. That said, the path to perfect voice cloning is not without pitfalls. It took me a few tries to grasp the nuances of the process.

Here's how I approach it:

- Prepare a clean and clear voice sample.

- Use Qwen's tools to create an initial model.

- Fine-tune for a realistic outcome.
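For the first step, a quick sanity check of the reference recording saves a lot of retries. The sketch below uses only Python's standard `wave` and `struct` modules and does not call any Qwen API. The thresholds (3 seconds, 16 kHz, mono, under 0.1% clipped samples) are illustrative assumptions of mine, not documented Qwen requirements, and `check_reference_sample` is a hypothetical helper name.

```python
import struct
import sys
import wave


def check_reference_sample(path: str, min_seconds: float = 3.0,
                           min_sample_rate: int = 16000,
                           max_clipped_ratio: float = 0.001) -> list:
    """Return a list of human-readable problems found in a WAV file.

    All thresholds are illustrative defaults, not Qwen requirements.
    """
    problems = []
    with wave.open(path, "rb") as wav:
        channels = wav.getnchannels()
        sample_rate = wav.getframerate()
        sample_width = wav.getsampwidth()
        n_frames = wav.getnframes()
        frames = wav.readframes(n_frames)

    duration = n_frames / sample_rate
    if duration < min_seconds:
        problems.append(f"too short: {duration:.1f}s < {min_seconds}s")
    if sample_rate < min_sample_rate:
        problems.append(f"low sample rate: {sample_rate} Hz")
    if channels != 1:
        problems.append(f"expected mono, got {channels} channels")

    # Clipping check for 16-bit PCM only: count samples at full scale.
    if sample_width == 2 and frames:
        total = len(frames) // 2
        clipped = sum(1 for (s,) in struct.iter_unpack("<h", frames)
                      if abs(s) >= 32767)
        if clipped / total > max_clipped_ratio:
            problems.append(f"clipping: {clipped} of {total} samples at full scale")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    for issue in check_reference_sample(sys.argv[1]) or ["no problems found"]:
        print(issue)
```

Saved as a script and given the path to a WAV file, it prints each problem it finds, or "no problems found" when the sample passes all four checks.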

Technical Dive: Model Architecture

Understanding the architecture of Qwen TTS is crucial to getting the most out of the models. What struck me was the end-to-end training approach: the system is trained as a whole, from start to finish, without needing complex intermediaries. But beware, customization isn't always straightforward.

The 0.6B model is a good starting point for small applications, while the 1.7B is more suited for complex tasks like emotion synthesis. However, trying to customize everything can quickly become a time-sink if you're not careful.

Applications and Future Developments

Current applications of Qwen TTS range from gaming to accessibility. I have personally used these models to create custom voices in video games, and the results have been astonishing. Voice design and emotion synthesis add an extra dimension, bringing characters to life like never before.

But what excites me the most are the future trends in TTS technology. With constant innovation, we must always balance new capabilities against practical implementation. The potential is massive, but we need to stay pragmatic to avoid getting lost in technological complexity.

In conclusion, Qwen TTS doesn't just change the game; it redefines industry standards. But as always, stay vigilant and adapt technologies to your specific needs.

Diving into Qwen TTS is like opening Pandora's box for voice synthesis. First, the 0.6B and 1.7B models offer impressive flexibility with multilingual support, perfect for bold developers. Then, with its open-source core, you can really tinker and adapt it to specific needs. But watch out: the more powerful the model, the more your orchestration must be spot on. Performance can tank if resources are mismanaged.

What I find really thrilling is the prospect of cloning any voice, for free. If that doesn’t make you want to explore, I don't know what will. But remember, every tech breakthrough comes with its challenges.

Ready to clone your first voice? Go check out the original video "Clone ANY Voice for Free — Qwen Just Changed Everything" on YouTube. It's worth it to really grasp the full potential (and pitfalls) of Qwen TTS.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Fastest TTS on CPU: Voice Cloning in 2026

I remember the first time I tried running a text-to-speech model on my consumer-grade CPU. Slow, frustrating, like being stuck in the past. But with the TTH model, everything's changed. Imagine achieving lightning-fast TTS performance on regular hardware, with some astonishing voice cloning thrown in. In this article, I guide you through this 2026 innovation: from technical setup to comparisons with other models, and how you can integrate this into your projects. If you're a developer or just an enthusiast, you're going to want to see this.

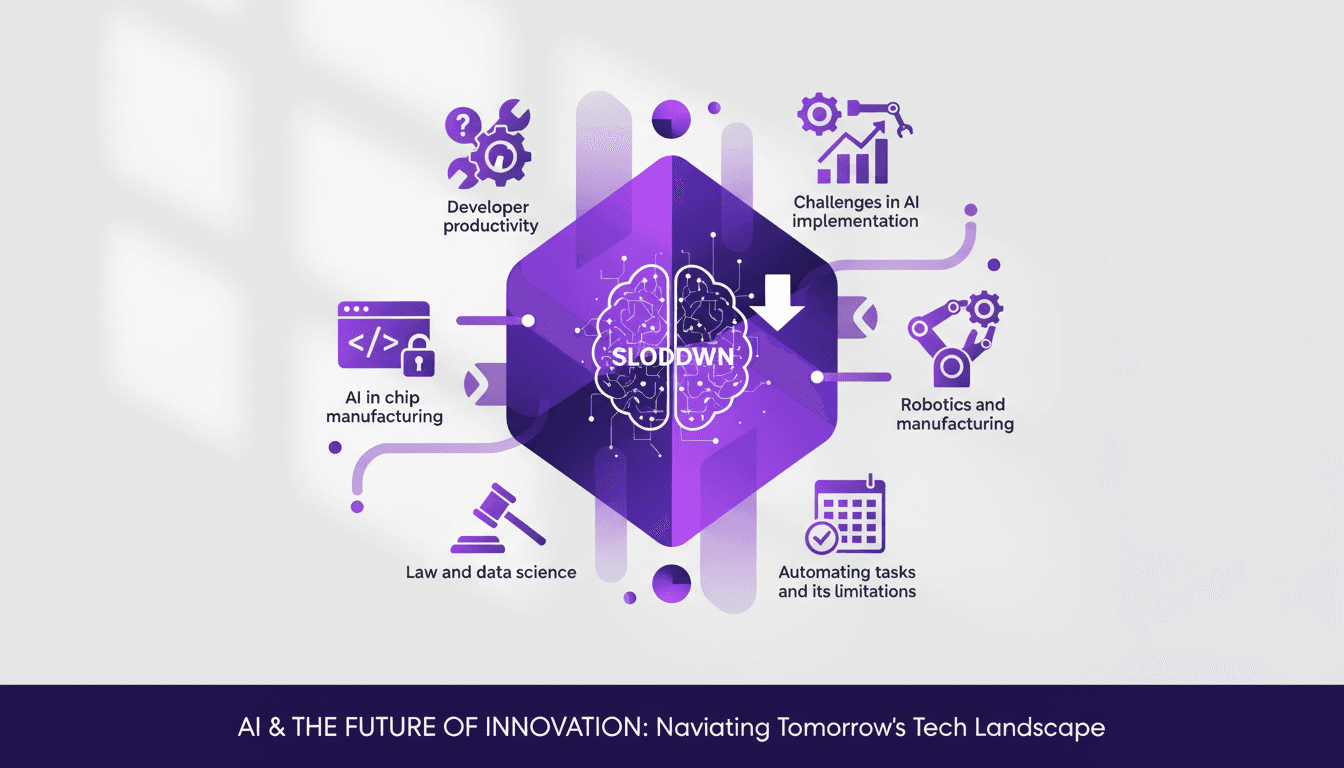

Measuring Dev Productivity with METR: Challenges

I've spent countless hours trying to quantify developer productivity, and when I heard Joel Becker talk about METR, it hit home. The way METR measures 'Long Tasks' and open source dev productivity is a real game changer. With 2030 looming as the potential year where compute growth might hit a wall, understanding how to measure and enhance productivity is crucial. METR offers a unique lens to tackle these challenges, especially in open source environments. It's fascinating to see how AI might transform the way we work, though it has its limits, particularly with legacy code bases. But watch out, don't overuse AI to automate every task. It's still got a way to go, especially in robotics and manufacturing. Joel shows us how to navigate this complex landscape, highlighting the impact of tool familiarity on productivity. Let's dive into these insights that can truly transform our workflows.

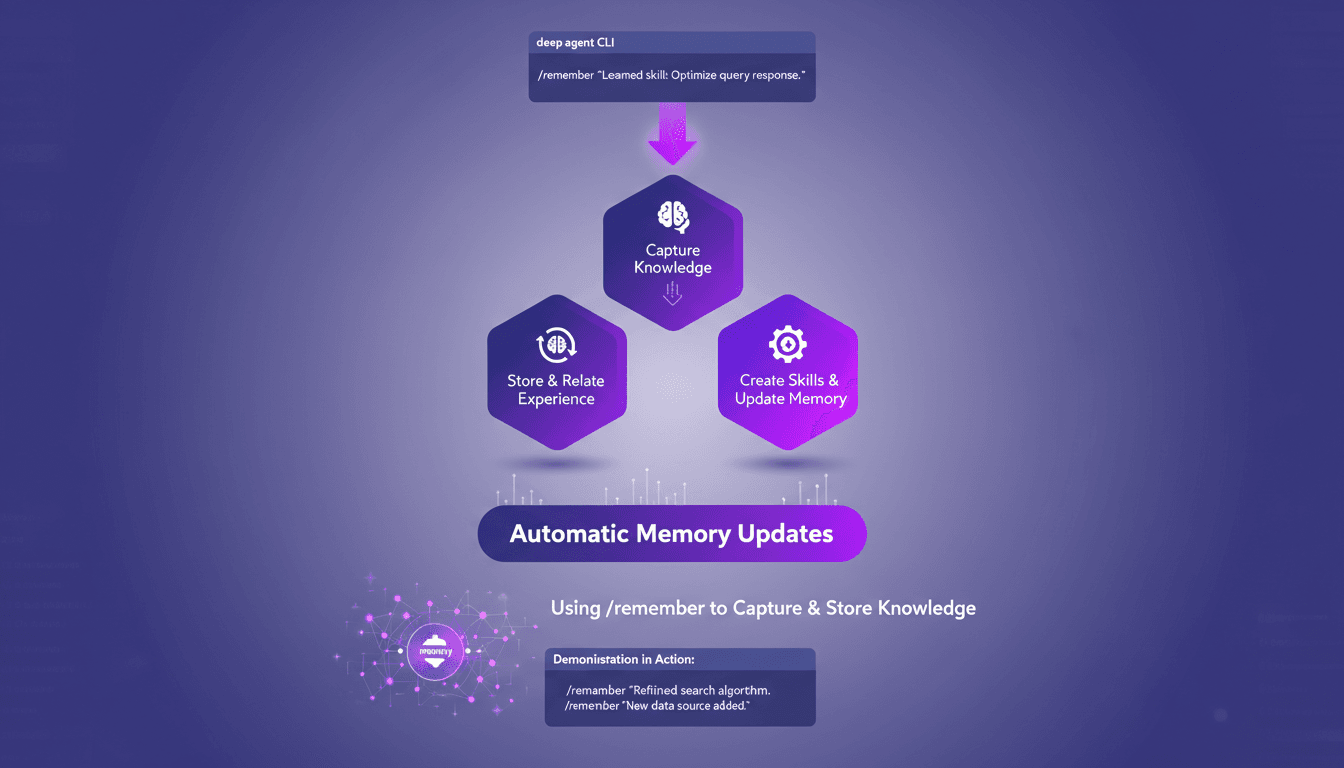

Mastering /remember: Deep Agent Memory in Action

I've spent countless hours tweaking deep agent setups, and let me tell you, the /remember command is a game changer. It's like giving your agent a brain that actually retains useful information. Let me show you how I use it to streamline processes and boost efficiency. With the /remember command in the deep agent CLI, you can teach agents to learn from experience. Let's dive into how this works and why it's a must-have in your toolkit.

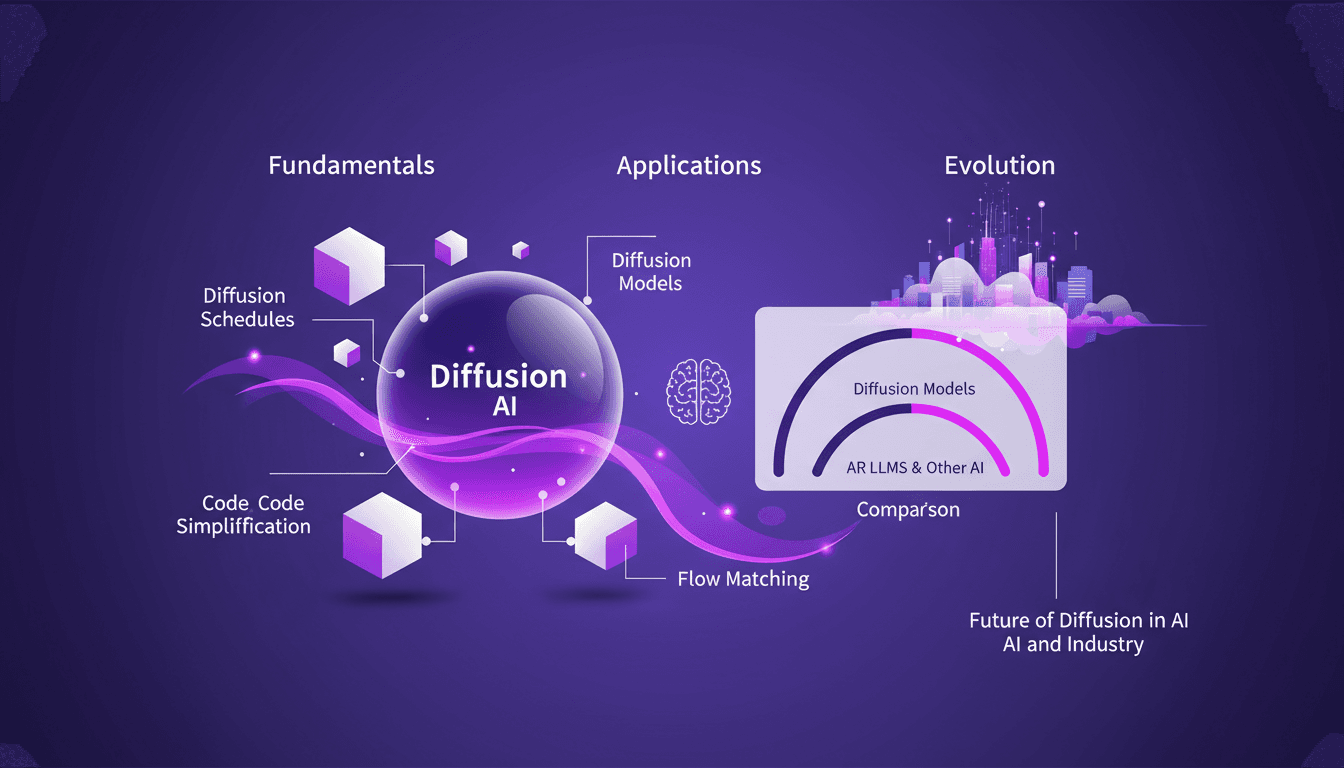

Mastering Diffusion in ML: A Practical Guide

I've been knee-deep in machine learning since 2012, and let me tell you, diffusion models are a game changer. And they're not just for academics—I'm talking about real-world applications that can transform your workflow. Diffusion in ML isn't just a buzzword. It's a fundamental framework reshaping how we approach AI, from image processing to complex data modeling. If you're a founder or a practitioner, understanding and applying these techniques can save you time and boost efficiency. With just 15 lines of code, you can set up a powerful machine learning procedure. If you're ready to explore AI's future, now's the time to dive into mastering diffusion.

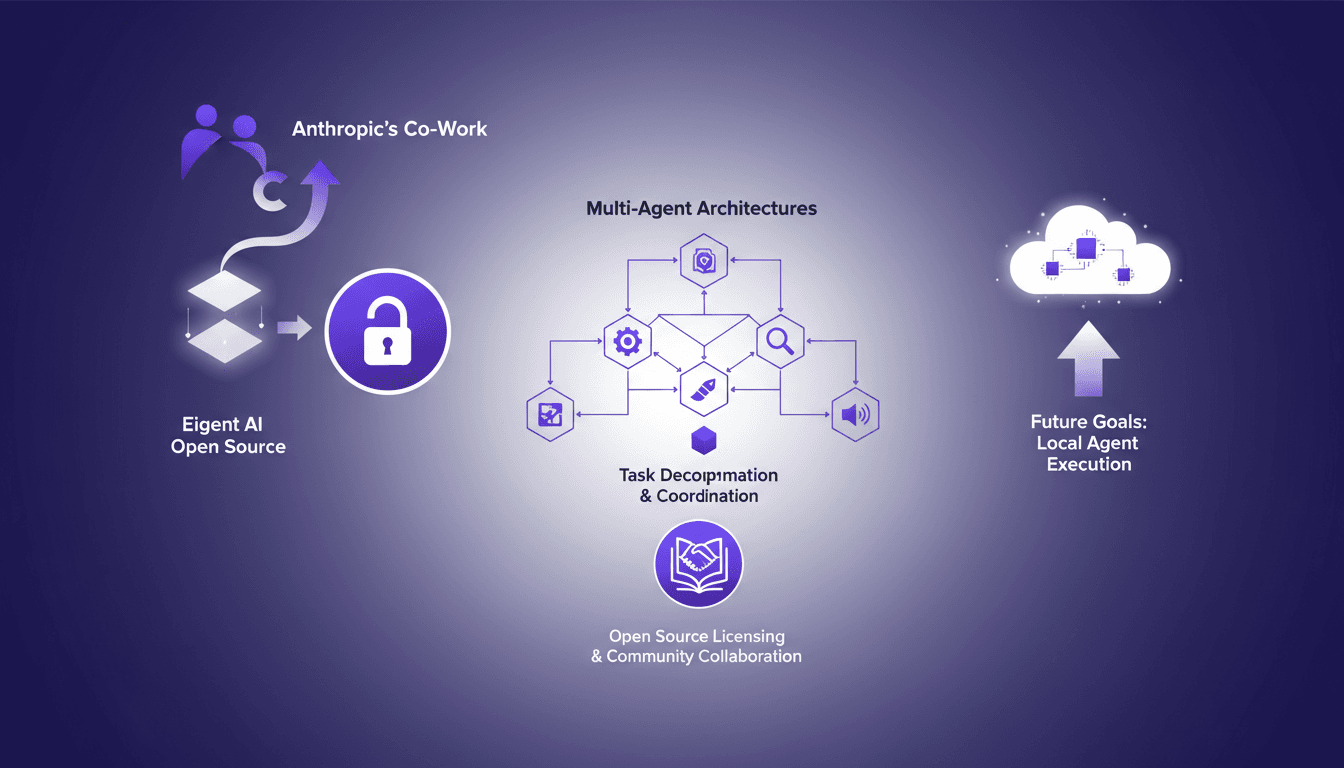

Eigent AI Open Sources to Challenge Claude Cowork

I remember the day Eigent AI decided to open source our product. It was a bold move, inspired by Anthropic's co-work release. This decision reshaped our approach to multi-agent architectures. By opening up our architecture, we aimed to leverage community collaboration and enhance our multi-agent systems. The challenge was significant, but the results were worth it, especially in terms of task decomposition and coordination using DAG. If you're curious about how this has shaken up our development process, let's dive into this transformation together.