Measuring Dev Productivity with METR: Challenges

I've spent countless hours wrestling with how to quantify developer productivity, so when I heard Joel Becker talk about METR's approach, it hit home. The way METR measures 'Long Tasks' and open source developer productivity offers a genuinely different lens. With compute growth potentially hitting a wall around 2030, understanding how to measure and improve productivity matters more than ever. In open source environments especially, tool familiarity can make or break productivity. AI may transform the way we work, but it has limits, particularly with legacy code bases, so don't try to automate every task: I've seen too many colleagues get burned that way. AI still has a long way to go in areas like robotics and manufacturing. Joel guides us through this landscape, from the impact of tool familiarity on productivity to AI's role in chip manufacturing and R&D. Let's dive into the insights that can reshape our workflows.
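For readers who haven't seen how METR turns 'Long Tasks' into a single number: as I understand METR's published approach, each task gets the time a skilled human needs to finish it, the AI agent either succeeds or fails, a logistic curve of success probability is fitted against the logarithm of human task length, and the "time horizon" is the task length at which predicted success is 50%. The sketch below reproduces that idea with plain gradient descent. The function names, the centering trick, the optimizer settings, and the data are my own assumptions, not METR's code.

```python
import math

def fit_logistic(xs, ys, lr=0.1, steps=20_000):
    """Fit p(success) = sigmoid(a * x + b) by batch gradient descent on log-loss."""
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(a * x + b)))
            grad_a += (p - y) * x
            grad_b += p - y
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b

def time_horizon_minutes(task_minutes, successes):
    """Human task length (minutes) at which the fitted success probability is 50%."""
    logs = [math.log2(m) for m in task_minutes]
    center = sum(logs) / len(logs)  # centering keeps gradient descent well-conditioned
    a, b = fit_logistic([x - center for x in logs], successes)
    # sigmoid(a*x + b) = 0.5  <=>  a*x + b = 0  <=>  x = -b / a (in centered log2 units)
    return 2 ** (center - b / a)

# Hypothetical data: human minutes per task, and whether the agent succeeded (1) or failed (0).
minutes = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512]
success = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]
print(round(time_horizon_minutes(minutes, success), 1))  # → 22.6
```

With this made-up data the 50% point lands between the 16- and 32-minute tasks, right where successes give way to failures. The data is deliberately noisy: if successes and failures were perfectly separated, the logistic fit would have no finite optimum.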

Understanding Compute Growth and Its Implications

Compute growth has long been a silent driver of technological productivity, but around 2030 that could change. I remember a discussion of a potential slowdown in compute growth, a prospect that could redefine how we measure productivity. We start at a specific point on the compute curve (the figure given was 28), and that starting point carries significant implications.

"If compute growth slows, time horizon growth might follow suit."
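That quote is really a claim about compounding. If time horizons grow exponentially with a fixed doubling time, then stretching the doubling time (say, because compute growth slows) opens a gap that widens every month. The sketch below is my own back-of-the-envelope model, not METR's forecast: the 60-minute starting horizon, the 6- and 12-month doubling times, and the slowdown at month 24 are all hypothetical.

```python
def projected_horizon(h0_minutes: float, months: float, doubling_months: float) -> float:
    """Time horizon after `months`, assuming exponential growth with a fixed doubling time."""
    return h0_minutes * 2 ** (months / doubling_months)

def horizon_with_slowdown(h0_minutes: float, months: float, doubling_before: float,
                          doubling_after: float, slowdown_at: float) -> float:
    """Piecewise model: one doubling time until `slowdown_at`, a longer one afterwards."""
    if months <= slowdown_at:
        return projected_horizon(h0_minutes, months, doubling_before)
    h_at_slowdown = projected_horizon(h0_minutes, slowdown_at, doubling_before)
    return projected_horizon(h_at_slowdown, months - slowdown_at, doubling_after)

print(projected_horizon(60, 60, 6))              # → 61440.0
print(horizon_with_slowdown(60, 60, 6, 12, 24))  # → 7680.0
```

With those made-up numbers, five years of uninterrupted doubling reaches 61,440 minutes, while the slowdown scenario ends at 7,680 minutes: an eightfold gap from a single change in doubling time.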

The notion of a software singularity, where AI-driven improvements to software itself keep capabilities growing even if compute stalls, could be pivotal for us developers. Preparing for compute constraints is no easy task, though. I start by assessing my current resources, then plan for alternatives.

So how do we anticipate these changes? Here are some steps:

- Regularly evaluate resource consumption.

- Invest in more energy-efficient technologies.

- Plan for contingency scenarios during demand peaks.
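The first and third steps become more concrete with a small report run regularly over usage logs: how close does demand get to capacity, and how many hours cross a safety threshold? This is a minimal sketch; the function name, the 80% headroom threshold, and the sample numbers are hypothetical, and "demand" could be GPU-hours, CI minutes, or whatever resource you actually track.

```python
from statistics import quantiles

def capacity_report(hourly_demand, capacity, headroom=0.8):
    """Summarize how close hourly demand gets to available capacity."""
    utilization = [d / capacity for d in hourly_demand]
    return {
        "peak": max(utilization),
        # 'inclusive' keeps the 95th percentile inside the observed range (needs >= 2 samples)
        "p95": quantiles(utilization, n=20, method="inclusive")[-1],
        "hours_over_headroom": sum(1 for u in utilization if u > headroom),
    }

report = capacity_report([40, 50, 60, 90, 100, 70], capacity=100)
print(report["hours_over_headroom"])  # → 2
```

A rising count of hours over headroom is the signal to trigger a contingency plan before a demand peak, rather than during one.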

Tool Familiarity: The Hidden Driver of Productivity

In our field, familiarity with tools can transform our output; I've observed this firsthand. It follows a J curve: productivity first dips, then rises, with the cycle typically spanning 3 to 6 months. That's exactly what I experienced when adopting tools like GitHub Copilot.
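A J curve also explains why judging a tool in its first month is misleading: the early dip has to be paid back before there is any net gain. Here is a toy model of that payback, assuming productivity starts at `1 - dip` relative to baseline and climbs linearly to `1 + gain` over `ramp_months`. The linear shape and every parameter value are illustrative assumptions, not measurements.

```python
def productivity_multiplier(month, dip=0.2, gain=0.2, ramp_months=6.0):
    """Output relative to the pre-adoption baseline: starts at 1 - dip, rises linearly to 1 + gain."""
    progress = min(month / ramp_months, 1.0)
    return (1.0 - dip) + (dip + gain) * progress

def break_even_month(dip=0.2, gain=0.2, ramp_months=6.0, dt=0.001, max_months=60.0):
    """First time (in months) at which cumulative output catches up with the baseline."""
    t, cumulative_excess = 0.0, 0.0
    while t < max_months:
        midpoint = t + dt / 2  # midpoint rule: exact on each linear piece
        cumulative_excess += (productivity_multiplier(midpoint, dip, gain, ramp_months) - 1.0) * dt
        t += dt
        if cumulative_excess >= 0.0:
            return t
    return None  # never breaks even within max_months

print(round(break_even_month(), 2))  # → 6.0
```

With a 20% dip recovering to a 20% gain over six months, cumulative output only catches up with the baseline at month six. A deeper dip or a smaller eventual gain pushes break-even well past the ramp itself.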

To speed up the learning curve, here are some strategies I've tried:

- Organize internal training sessions.

- Encourage peer mentoring.

- Implement pilot projects to experiment with new tools without pressure.

Ultimately, tool familiarity can significantly reduce project timelines.

Challenges in Measuring AI's Impact on Productivity

AI is revolutionizing how we work, especially in chip manufacturing, where it improves yield. However, its use in automating R&D tasks remains limited. I've often found that balancing automation with human oversight is tricky.

To integrate AI without losing the human touch, I prioritize the following steps:

- Analyze in depth which tasks can be automated.

- Maintain human control over critical processes.

- Train teams to collaborate effectively with AI.

Remember, AI doesn't replace humans but complements them in well-defined contexts.
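Measuring the impact is where it gets hard, and the cleanest design I know is a randomized one: decide at random, before work starts, which tasks may use AI, then compare completion times. That is roughly the shape of METR's open source developer study as I understand it, but the estimator below (a ratio of geometric-mean completion times) is my own simplification, and the task IDs and hours are hypothetical.

```python
import math
import random

def assign_conditions(task_ids, seed=0):
    """Randomly split tasks into an AI-allowed arm and an AI-disallowed arm before work starts."""
    rng = random.Random(seed)
    shuffled = list(task_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"ai": shuffled[:half], "no_ai": shuffled[half:]}

def time_ratio(times_with_ai, times_without_ai):
    """Geometric-mean completion time of the AI arm divided by that of the no-AI arm.

    Below 1.0 means tasks finished faster with AI; above 1.0 means slower.
    """
    mean_log_ai = sum(math.log(t) for t in times_with_ai) / len(times_with_ai)
    mean_log_no = sum(math.log(t) for t in times_without_ai) / len(times_without_ai)
    return math.exp(mean_log_ai - mean_log_no)

arms = assign_conditions(["issue-%d" % i for i in range(10)])
print(len(arms["ai"]), len(arms["no_ai"]))  # → 5 5
# Hypothetical completion times in hours for each arm's tasks.
print(round(time_ratio([1.0, 4.0], [2.0, 8.0]), 2))  # → 0.5
```

Comparing on a log scale keeps one unusually long task from dominating the result, which matters because real task lengths can vary by an order of magnitude or more.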

Legacy Code Bases: The Backbone of Open Source

Legacy code bases are often seen as burdens, yet they are vital to the survival of open source projects. I've frequently had to juggle updating these systems with starting new projects. AI could help us manage them, but be wary of the trade-offs.

Common dilemmas I encounter:

- Refactor old code or start from scratch?

- Is it worth integrating AI tools to analyze existing code?

- How do we train developers to understand and maintain legacy code?

A concrete example: in a recent project, using AI tools reduced refactoring time by 30%.

AI's Broader Impact: From Law to Robotics

AI is affecting not just software development but also fields like law and data science. Future prospects in robotics and manufacturing are promising, but there are limitations.

To prepare for AI-driven changes, here's what I recommend:

- Stay informed about technological advancements.

- Engage in continuous training.

- Evaluate AI's potential impacts on your sector.

In the end, AI should be seen as a tool for innovation, not a threat.

Diving into METR's insights on developer productivity, I've extracted some practical takeaways that can really make a difference in our day-to-day work. First off, tool familiarity is key. I've seen it firsthand: a developer who knows their tools can save two hours a day on crucial tasks. Next, AI is a powerful ally, but be cautious with its implementation. I often find integrating AI into manufacturing and R&D processes is like playing chess. It can be a game changer, but only if orchestrated properly. Lastly, don't underestimate the value of legacy code. It's often more stable and can provide a strong foundation for future innovations.

By 2030, we might feel the pinch of compute growth constraints, so it's time to prepare our workflows for a potential slowdown. I highly recommend starting to integrate these strategies today. For a deeper dive, check out the full video on YouTube; you might uncover gems I haven't touched on here.


Thibault Le Balier

Co-fondateur & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

AI's Limited Self-Knowledge: Practical Breakdown

I've spent countless hours dissecting AI models, and one thing's clear: their self-awareness is like a tiny sliver of reality—fascinating but limited. This gap affects not only their operation but also our interaction with them. In this podcast, I'll walk you through these nuances, using speculative fiction as a lens to explore AI's identity and comprehension. We'll dive into the impact of this fiction on human-AI relationship perceptions, AI identity, and tools for AI's comprehension of complex concepts. It's a challenge, yes, but also an opportunity to enrich our interaction with these models. Join me to discover why AI's limited self-knowledge isn't just a hurdle but also a way to reinvent our approach to technology.

Mastering Cling Motion Transfer: A Cost-Effective Guide

I still remember the first time I tried Cling Motion Transfer. It was a game-changer. For less than a dollar, I turned a simple video into viral content. In this article, I'll walk you through how I did it, step by step. Cling Motion Transfer is an affordable AI tool that stands out in the realm of video creation, especially for platforms like TikTok. But watch out, like any tool, it has its quirks and limits. I'll guide you through image and video selection, using prompts, and how to finalize and submit your AI-generated content. Let's get started.

Fastest TTS on CPU: Voice Cloning in 2026

I remember the first time I tried running a text-to-speech model on my consumer-grade CPU. Slow, frustrating, like being stuck in the past. But with the TTH model, everything's changed. Imagine achieving lightning-fast TTS performance on regular hardware, with some astonishing voice cloning thrown in. In this article, I guide you through this 2026 innovation: from technical setup to comparisons with other models, and how you can integrate this into your projects. If you're a developer or just an enthusiast, you're going to want to see this.

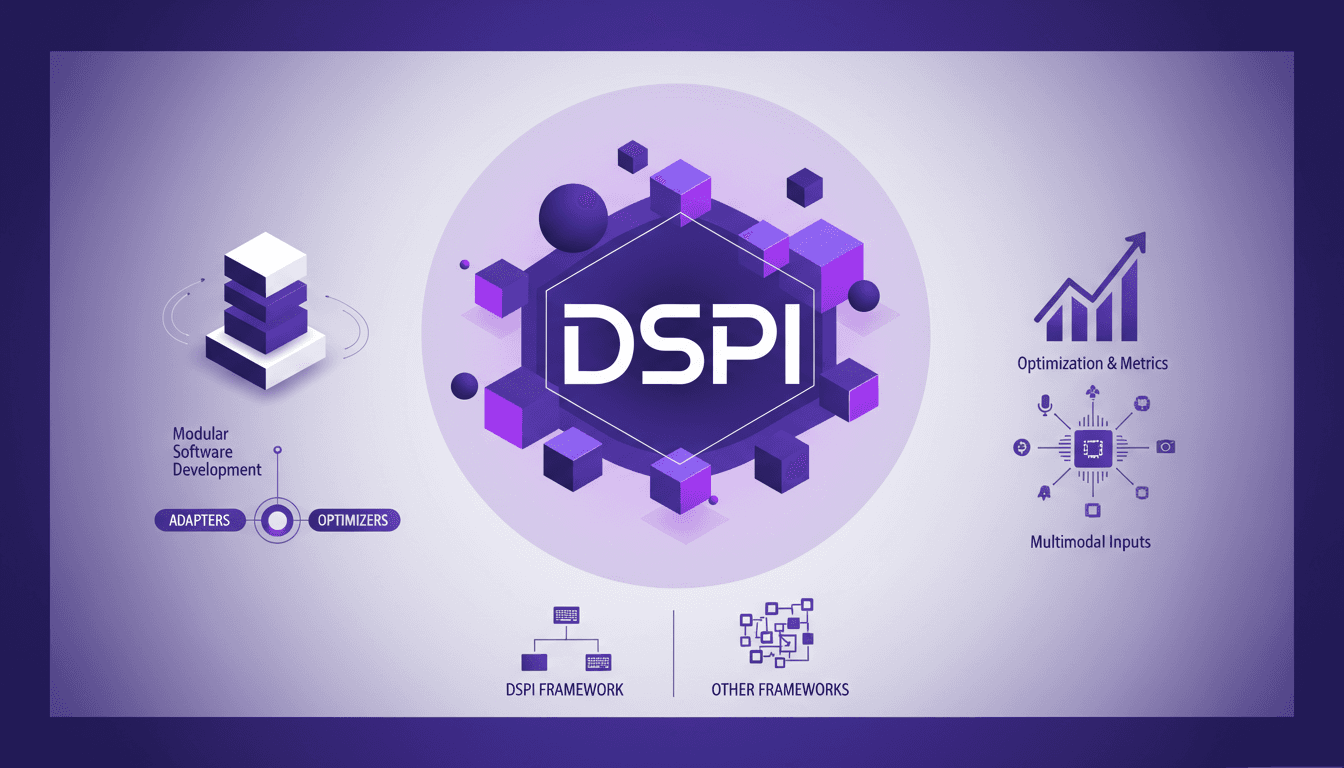

DSPI: Revolutionizing Prompt Engineering

I've been diving deep into DSPI, and let me tell you, it's not just another framework — it's a game changer in how we handle prompt engineering. First, I was skeptical, but seeing its modular approach in action, I realized the potential for efficiency and flexibility. With DSPI, complex tasks are simplified through a declarative framework, which is a significant leap forward. And this modularity? It allows for optimized handling of inputs, whether text or images. Imagine, for a classification task, just three images are enough to achieve precise results. It's this capability to manage multimodal inputs that sets DSPI apart from other frameworks. Whether it's for modular software development or metric optimization, DSPI doesn't just get the job done, it reinvents it.

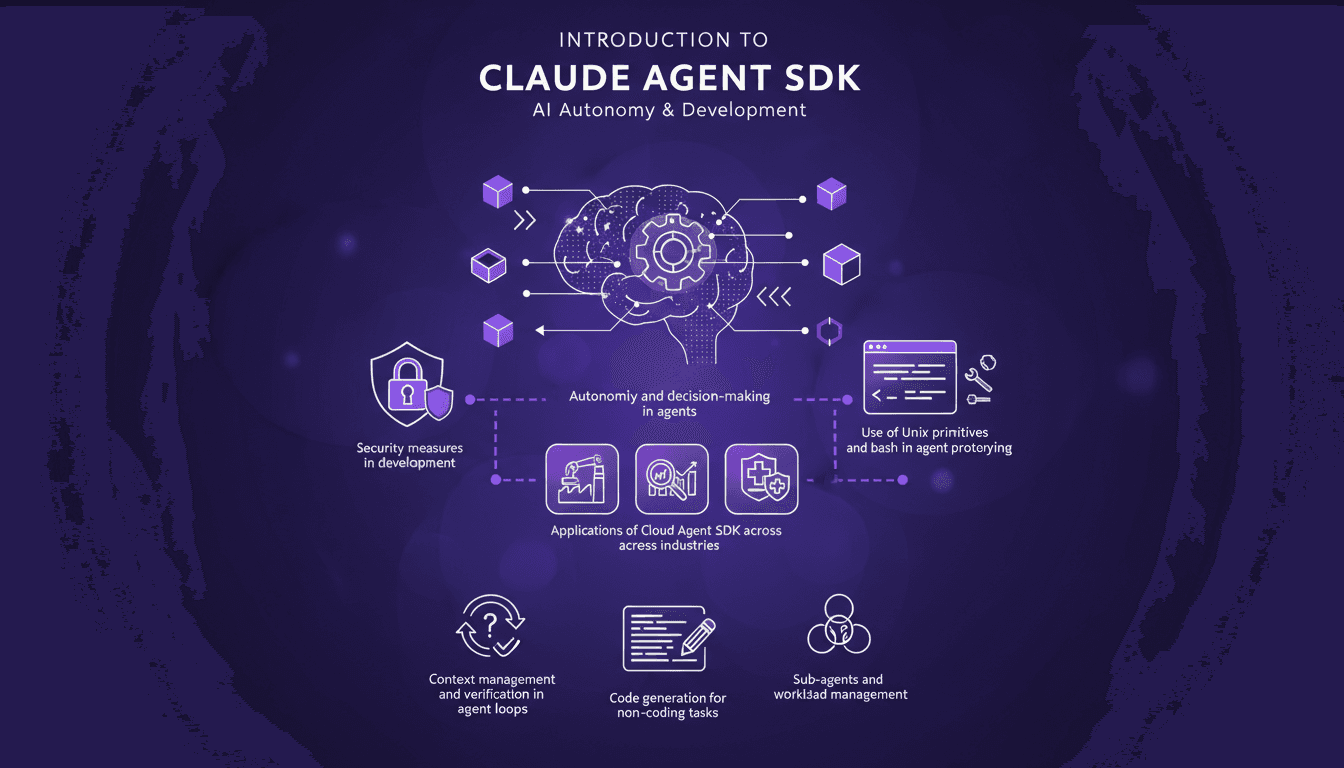

Mastering Claude Agent SDK: Practical Guide

Ever tried orchestrating a team of sub-agents with Unix commands and felt like you were herding cats? I’ve been there. With Claude Agent SDK, I finally found a way to streamline decision-making and boost efficiency. Let me walk you through how I set this up and the pitfalls to avoid. Claude Agent SDK promises autonomy and decision-making power for agents across industries, but only if you navigate its complexities correctly. I connect my agents, manage their workload, and secure it all with Unix primitives and bash. But watch out, there are limits you'll need to watch. Ready to dive into the details?