Kimi K2.5: Mastering the Agent Swarm

I remember the first time I dove into the Kimi K2.5 model. It felt like stepping into a new AI era, with an Agent Swarm feature that promised to change how parallel tasks get handled. We're talking about a powerhouse trained on 15 trillion tokens and able to manage up to 500 coordinated steps. But beware, it's not for beginners. I've spent countless hours tweaking, testing, and pushing it to its limits, and I've had surprises (some good, others not so much). The Agent Swarm is what impressed me the most: it orchestrates parallelizable tasks efficiently, and that's where the real magic lies. Flipping the switch and expecting miracles, though? Not quite. You need to know where and how to use it. I'll show you how I got to grips with the Agent Swarm, how its performance compared with other models, and which applications transformed my workflows. Most importantly, I'll share the pitfalls to avoid so you don't get burned like I did initially.

Understanding the Kimi K2.5 Model

Let's dive into the architecture of Kimi K2.5, Moonshot AI's flagship model. What immediately catches attention is the 15 trillion tokens it has been trained on, spanning text, images, and video. Training on that mix is what makes the model multimodal, and therefore versatile across different tasks. But watch out, a model at this scale also has constraints, especially the resources needed to deploy it effectively.

The orchestrator agent plays a crucial role here. It manages the task as a whole, coordinating up to 500 steps and handing parallelizable subtasks to sub-agents. This is a real asset for complex projects where running multiple processes in parallel can make the difference between success and failure. However, it's essential not to overload the orchestrator, as that could lead to performance issues.

Harnessing the Agent Swarm Feature

Now, let’s talk about the Agent Swarm, a feature that enables coordination of up to 500 steps with self-directed sub-agents. In practice, this means complex tasks can be broken down into parallelizable subtasks, each managed by its own agent. It's a game-changer in real-world scenarios where time is a critical factor.
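Here's a minimal sketch of what that fan-out pattern can look like. To be clear, this is not Kimi's actual orchestrator: it's a plain asyncio stand-in, and the names `run_subagent`, `orchestrate`, and `MAX_PARALLEL` are hypothetical. The only number taken from the article is the 500-step budget. The concurrency cap is my assumption about how you'd avoid overloading the orchestrator.

```python
import asyncio
from dataclasses import dataclass

MAX_STEPS = 500     # step budget quoted in this article
MAX_PARALLEL = 8    # hypothetical concurrency cap; tune to your resources


@dataclass
class SubtaskResult:
    name: str
    output: str


async def run_subagent(name: str, semaphore: asyncio.Semaphore) -> SubtaskResult:
    """Stand-in for one sub-agent; a real one would call the model here."""
    async with semaphore:          # at most MAX_PARALLEL sub-agents run at once
        await asyncio.sleep(0)     # placeholder for model or tool latency
        return SubtaskResult(name=name, output=f"done:{name}")


async def orchestrate(subtasks: list[str], max_parallel: int = MAX_PARALLEL) -> list[SubtaskResult]:
    """Fan subtasks out to sub-agents while respecting the step budget."""
    if len(subtasks) > MAX_STEPS:
        raise ValueError(f"{len(subtasks)} subtasks exceed the {MAX_STEPS}-step budget")
    semaphore = asyncio.Semaphore(max_parallel)
    # asyncio.gather returns results in the same order as the inputs
    return await asyncio.gather(*(run_subagent(s, semaphore) for s in subtasks))


if __name__ == "__main__":
    results = asyncio.run(orchestrate(["parse_mockup", "generate_html", "generate_css"]))
    for r in results:
        print(r.name, r.output)
```

The semaphore is the important design choice: the orchestrator can accept a long list of subtasks but only lets a handful run at any moment.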

For example, in front-end development, the Agent Swarm excels at tasks like generating code from images and video. But watch out: below the 100 million user mark, using the Agent Swarm can be a trade-off in terms of resources. It requires careful orchestration to avoid wasting them.

Training and Performance Insights

In my personal workflow for optimizing Kimi K2.5, I found that precise hyperparameter tuning is crucial. Performance benchmarks show that the model can outperform competing models, such as OpenAI's, in specific tasks, but it's vital to understand where its strengths and weaknesses lie.

Common pitfalls include overloading resources, which can slow down the entire process. It’s often quicker to run tests in small batches rather than trying to orchestrate everything in one go. This balances performance with resource constraints.
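The small-batch approach can be sketched like this. It's a generic helper, not something specific to Kimi K2.5: `batched` and `run_in_batches` are hypothetical names, and `run_one` stands in for whatever single test or subtask you'd actually run.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield consecutive chunks of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(items)
    while batch := list(islice(it, size)):
        yield batch


def run_in_batches(items: Iterable[T], size: int, run_one: Callable[[T], R]) -> list[R]:
    """Run `run_one` over the items one small batch at a time."""
    results: list[R] = []
    for batch in batched(items, size):
        # Each batch finishes before the next starts, which keeps
        # resource use bounded and makes a failing batch easy to spot.
        results.extend(run_one(item) for item in batch)
    return results


if __name__ == "__main__":
    print(run_in_batches(range(5), 2, lambda x: x * x))
```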

Comparing Kimi K2.5 with Other Models

Now, let's compare Kimi K2.5 with its competitors. What stands out is its multimodal capability and its Agent Swarm management. For example, in multilingual tasks, it outperforms models from OpenAI as well as Gemini. However, it has its limitations, particularly in cases where precise task coordination is less critical.

For the future, integrating Kimi K2.5 into your AI strategy can offer a competitive edge, provided you understand its capabilities and limits well. Resources like "Practical Intro to Reinforcement Learning" can be helpful here.

Applications and Future Implications

Finally, let's examine where Kimi K2.5 is already making an impact today. It is particularly effective in areas like visual coding and front-end development. The future applications of Agent Swarm technology are promising, with potential implications in fields like large-scale data analysis.

On the technical specifications and access options, Kimi offers flexible solutions, such as access via OpenRouter. However, it's crucial to understand the usage restrictions, especially concerning the number of users. Looking ahead, the Agent Swarm's ability to handle complex tasks in parallel represents a significant advancement in AI.
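For the OpenRouter route, a request can be sketched as below. Treat it as a starting point under stated assumptions: the chat completions endpoint is OpenRouter's OpenAI-compatible one, but the model slug `moonshotai/kimi-k2.5` and the `OPENROUTER_API_KEY` variable name are my assumptions, so check OpenRouter's model list before relying on them. The helper `build_request` is hypothetical and only builds the request; nothing is sent unless a key is set.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
MODEL_SLUG = "moonshotai/kimi-k2.5"  # assumption: verify against OpenRouter's model list


def build_request(prompt: str, api_key: str, model: str = MODEL_SLUG) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENROUTER_API_KEY")  # assumed variable name
    if key:
        with urllib.request.urlopen(build_request("Say hello.", key)) as resp:
            body = json.load(resp)
        print(body["choices"][0]["message"]["content"])
```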

Kimi K2.5 isn't just about raw power; it's about how you harness it. I've orchestrated complex tasks with it, and honestly, it's a game changer, especially when managing parallelizable subtasks. But watch out, it does have its limits, and you need to understand them first to really get the most out of this beast. A few key points: if you have fewer than 100 million users, you're in the clear on the usage restrictions. The flagship K2.5 model is trained on 15 trillion tokens, which is massive. And the potential is huge if you know how to harness it. Ready to integrate Kimi K2.5 into your workflow? Start exploring its capabilities today and see how it transforms your AI approach. For a deeper dive, check out the full video 'Kimi K2.5 - The Agent Swarm Champion' on YouTube. Honestly, it's worth watching to fully grasp how this tech can boost your daily operations.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Ollama Launch: Tackling Mac Challenges

I remember the first time I fired up Ollama Launch on my Mac. It was like opening a new toolbox, gleaming with tools I was eager to try out. But the real question is how these models actually perform. In this article, we'll dive into Ollama Launch features, put the GLM 4.7 flash model through its paces, and see how Claude Code stacks up. We'll also tackle the challenges of running these models locally on a Mac and discuss potential improvements. If you've ever tried running a 30-billion parameter model with a 64K context length, you know what I'm talking about. So, ready to tackle the challenge?

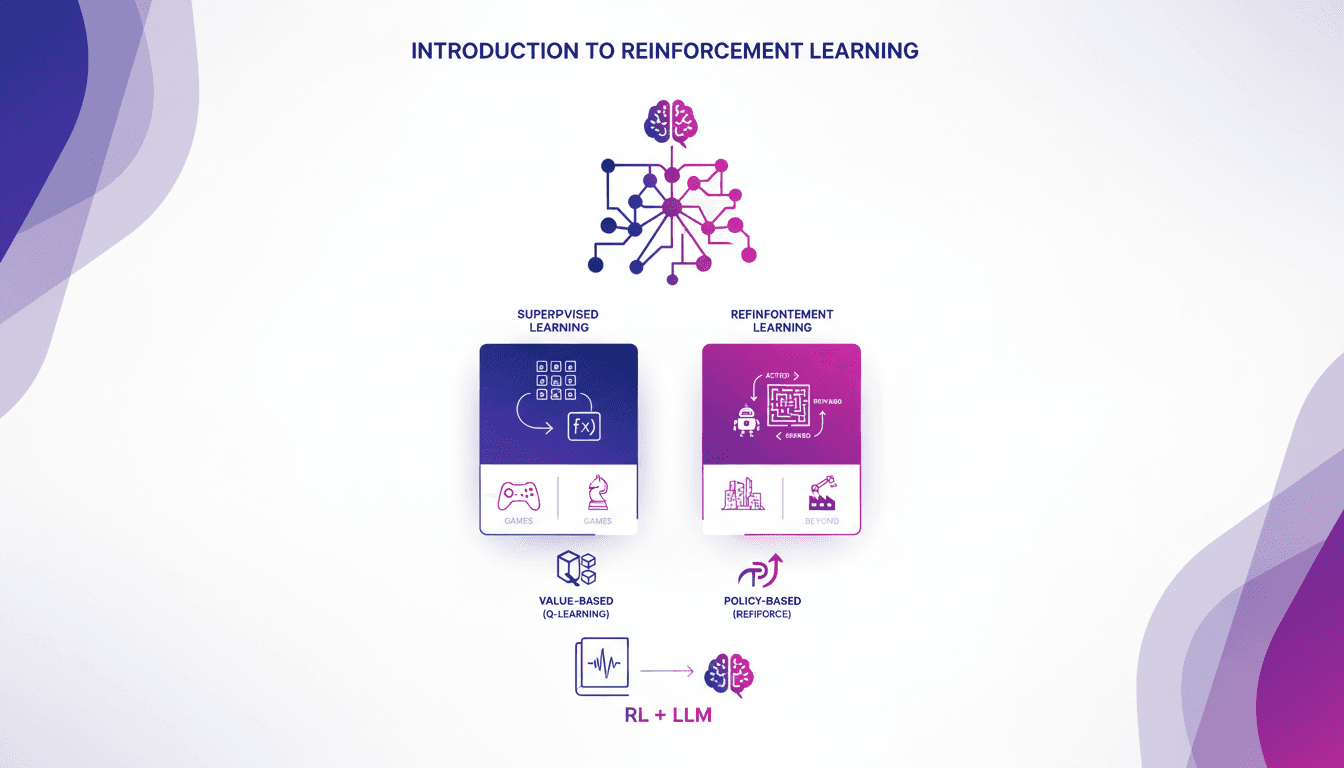

Practical Intro to Reinforcement Learning

I remember the first time I stumbled upon reinforcement learning. It felt like unlocking a new level in a game, where algorithms learn by trial and error, just like us. Unlike supervised learning, RL doesn't rely on labeled datasets. It learns from the consequences of its actions. First, I'll compare RL to supervised learning, then dive into its real-world applications, especially in games. I'll walk you through value-based methods like Q-learning and policy-based methods, showing how these approaches are transforming massive language models. In the end, you'll see how three key ways of using RL to fine-tune large language models deliver impressive results.

Clone Any Voice for Free: Qwen TTS Revolutionizes

I remember the first time I cloned a voice with Qwen TTS—it was like stepping into the future. Imagine having such a powerful tool, and it's open source, right at your fingertips. This isn't just theory; it's about real-world application today. Last June, Qwen announced their TTS models, and by September, the Quen 3 TTS Flash with multilingual support was ready. For anyone interested in voice cloning and multilingual speech generation, this is a true game changer. With models ranging from 0.6 billion to 1.7 billion parameters, the possibilities are vast. But watch out, there are technical limits to be mindful of. In this article, I'll guide you through multilingual capabilities, open-source release, and emotion synthesis. Get ready to explore how you can leverage this tech today.

Mastering Diffusion in ML: A Practical Guide

I've been knee-deep in machine learning since 2012, and let me tell you, diffusion models are a game changer. And they're not just for academics—I'm talking about real-world applications that can transform your workflow. Diffusion in ML isn't just a buzzword. It's a fundamental framework reshaping how we approach AI, from image processing to complex data modeling. If you're a founder or a practitioner, understanding and applying these techniques can save you time and boost efficiency. With just 15 lines of code, you can set up a powerful machine learning procedure. If you're ready to explore AI's future, now's the time to dive into mastering diffusion.

Measuring Dev Productivity with METR: Challenges

I've spent countless hours trying to quantify developer productivity, and when I heard Joel Becker talk about METR, it hit home. The way METR measures 'Long Tasks' and open source dev productivity is a real game changer. With 2030 looming as the potential year where compute growth might hit a wall, understanding how to measure and enhance productivity is crucial. METR offers a unique lens to tackle these challenges, especially in open source environments. It's fascinating to see how AI might transform the way we work, though it has its limits, particularly with legacy code bases. But watch out, don't overuse AI to automate every task. It's still got a way to go, especially in robotics and manufacturing. Joel shows us how to navigate this complex landscape, highlighting the impact of tool familiarity on productivity. Let's dive into these insights that can truly transform our workflows.