Continual Learning with Deep Agents: My Workflow

I jumped into continual learning with deep agents, and let me tell you, it's a game changer for skill creation. But watch out, it's not without its quirks. I worked through the process using weight updates, reflection over trajectories, and the Deep Agent CLI, which together made skill learning much more efficient for me. In this article, I share how I orchestrated deep agents to create persistent skills while avoiding common pitfalls. If you're ready to dive into continual learning, follow my workflow so you don't get burned like I did at first.

When I started, I didn't realize continual learning was going to change how I create skills. My first mistake was trying to integrate weight updates without fully understanding the process. Once I figured out how to orchestrate everything with the Deep Agent CLI, I could really start optimizing skill learning. Here, I walk you through how I combined reflection over trajectories with prompt optimization in language space, and why the LangSmith Fetch utility was crucial along the way. I'll show you the traps I fell into and how I built an efficient workflow for persistent skill creation with deep agents. Ready to dive in?

Understanding Continual Learning with Deep Agents

Continual learning is something like the holy grail of AI. Why? Because most of today's agents are static: once deployed, they don't improve with experience the way a human would. Deep agents give us a chance to change that. First, you need to grasp the role of weight updates, since that is where new knowledge gets absorbed into the model. But beware: it's always about finding a delicate balance between what the model has already learned and what it still needs to integrate. Trust me, at first it's easy to get lost in the settings and lose sight of the core idea.
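The article doesn't say how to strike that balance. One common technique is rehearsal (also called replay): every batch of new training data also includes a fraction of previously seen examples, so the model keeps practicing what it already knows. Below is a minimal Python sketch under that assumption. `mixed_batch` and `replay_ratio` are hypothetical names for illustration, not part of any deep-agent tooling.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def mixed_batch(new_data: Sequence[T], replay_buffer: Sequence[T],
                batch_size: int, replay_ratio: float, seed: int = 0) -> List[T]:
    """Build one training batch that mixes new examples with replayed old ones.

    replay_ratio is the fraction of the batch drawn from previously learned
    data; the rest comes from the new data we want the model to integrate.
    If either pool is too small, the batch is simply shorter.
    """
    if not 0.0 <= replay_ratio <= 1.0:
        raise ValueError("replay_ratio must be in [0, 1]")
    rng = random.Random(seed)  # seeded so the batch is reproducible
    n_replay = min(round(batch_size * replay_ratio), len(replay_buffer))
    n_new = min(batch_size - n_replay, len(new_data))
    batch = rng.sample(list(replay_buffer), n_replay) + rng.sample(list(new_data), n_new)
    rng.shuffle(batch)  # avoid old/new ordering effects within the batch
    return batch


old_examples = [f"old-{i}" for i in range(100)]
new_examples = [f"new-{i}" for i in range(20)]
batch = mixed_batch(new_examples, old_examples, batch_size=8, replay_ratio=0.25)
print(batch)  # 2 replayed examples and 6 new ones, in shuffled order
```

Raising `replay_ratio` protects old knowledge at the cost of slower integration of new data. That trade-off is the "delicate balance" described above, made explicit as a single parameter.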

Mastering Weight Updates and Context Learning

Weight updates are the traditional core of learning: without them, the model itself doesn't change. But it's not just a matter of technique; it's also a matter of strategy. Context learning, by contrast, lets the agent use new data supplied in its prompt without touching the weights, so nothing it has already learned gets overwritten. In practice, weight updates come down to calibration: update too fast and you lose precision; update too slowly and you miss the opportunity to learn. I've been burned a few times thinking faster was better. Wrong. You need to gauge the pace carefully to avoid the classic pitfalls.
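The "too fast vs. too slow" trade-off is essentially a learning-rate question. The sketch below is a toy illustration, not a deep-agent training loop. It runs plain gradient descent on the one-parameter loss (w - 3)² with three step sizes. The smallest barely moves in 50 steps, 0.1 converges, and 1.1 overshoots further on every step and diverges.

```python
def gradient_descent(lr: float, steps: int = 50, w0: float = 0.0, target: float = 3.0) -> float:
    """Minimize the toy loss (w - target)**2 with plain gradient descent."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * (w - target)  # derivative of (w - target)**2
        w -= lr * grad             # the step size is the learning rate
    return w


for lr in (0.001, 0.1, 1.1):
    w = gradient_descent(lr)
    print(f"lr={lr}: w={w:.4f}, loss={(w - 3.0) ** 2:.4f}")
```

Each update multiplies the distance to the target by |1 - 2·lr|. On this loss, any rate above 1.0 therefore diverges, and a very small rate leaves most of the distance uncovered.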

Reflection Over Trajectories: A Practical Approach

Reflection over trajectories might sound abstract, but in practice it's fundamental. Every action the agent takes is valuable data. I've used these trajectories to refine skills and improve performance. But watch out: it doesn't always work, and sometimes it's better to fall back on more classic methods. Analyzing trajectories is something of an art: you need to know when to stop and how to interpret the data you collect.
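To make reflection over trajectories concrete, here is a minimal sketch. It scans several recorded trajectories, counts recurring tool failures, and turns them into candidate lessons for a skill. In a real setup an LLM would usually do the reflecting over traces, for example ones pulled from LangSmith. This rule-based stand-in only illustrates the shape of the loop. The `Step` schema, the `reflect` function, and the `min_failures` threshold are all hypothetical. The threshold is one simple answer to "knowing when to stop": one-off failures are treated as noise.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Step:
    tool: str       # tool the agent called (hypothetical trace schema)
    ok: bool        # whether the call succeeded
    note: str = ""  # error message or observation recorded in the trace


def reflect(trajectories: List[List[Step]], min_failures: int = 2) -> List[str]:
    """Turn failures that recur across trajectories into candidate lessons."""
    failures: Counter = Counter()
    first_note: Dict[str, str] = {}
    for trajectory in trajectories:
        for step in trajectory:
            if not step.ok:
                failures[step.tool] += 1
                first_note.setdefault(step.tool, step.note)  # keep one example
    return [
        f"- `{tool}` failed {count} times (e.g. {first_note[tool]!r}); "
        "validate its inputs before calling it."
        for tool, count in failures.most_common()
        if count >= min_failures
    ]


runs = [
    [Step("search", True), Step("read_file", False, "file not found")],
    [Step("read_file", False, "file not found"), Step("write_file", False, "permission denied")],
]
lessons = reflect(runs)  # only read_file fails twice, so it yields the single lesson
for lesson in lessons:
    print(lesson)
```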

Skill Creation with Deep Agent CLI and LangSmith Fetch

Creating skills is where the Deep Agent CLI and LangSmith Fetch come into play. The first step is defining what you want the skill to achieve. I used SKILL.md files to structure my skills, and that was a game changer. But beware: each skill needs to be persistent and adapted to its environment, which can be quite a challenge. I had to readjust my method several times to avoid getting lost in unnecessary details.
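For reference, a SKILL.md file in the Agent Skills format is a markdown file stored in a folder named after the skill. Its YAML frontmatter carries at least a `name` and a `description`, and the instructions themselves follow. The sketch below writes one into a temporary directory. Three things in it are assumptions: `write_skill` is a hypothetical helper, the naming check reflects the lowercase-with-hyphens convention as I understand it, and the real skills directory the Deep Agent CLI reads from should be checked against its documentation.

```python
import re
import tempfile
from pathlib import Path


def write_skill(skills_dir: Path, name: str, description: str, instructions: str) -> Path:
    """Create <skills_dir>/<name>/SKILL.md with YAML frontmatter and a markdown body."""
    # Assumed naming convention: lowercase letters and digits joined by hyphens.
    if not re.fullmatch(r"[a-z0-9]+(-[a-z0-9]+)*", name):
        raise ValueError("skill names should be lowercase words joined by hyphens")
    skill_dir = skills_dir / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    path = skill_dir / "SKILL.md"
    # Note: a description containing ": " would need YAML quoting.
    path.write_text(
        f"---\nname: {name}\ndescription: {description}\n---\n\n{instructions.strip()}\n",
        encoding="utf-8",
    )
    return path


# Write into a temporary directory rather than a real agent's skills folder.
with tempfile.TemporaryDirectory() as tmp:
    skill_path = write_skill(
        Path(tmp),
        name="summarize-traces",
        description="Summarize recent agent traces into lessons learned.",
        instructions="1. Fetch the latest traces.\n2. Group failures by tool.\n3. Write lessons as bullet points.",
    )
    content = skill_path.read_text(encoding="utf-8")
    print(content)
```

Keeping each skill in its own folder with a short, specific `description` helps an agent decide when a skill is relevant. A vague description makes that decision harder, which is one way to "get lost in unnecessary details".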

Validating and Testing Created Skills

Validation is the name of the game: without it, there's no way to know whether your skills hold up. I've tested various methods, some of them costly in time and resources, but some strategies, such as low-cost simulations, proved effective. Honestly, validation and testing form an endless cycle in continual learning, but that cycle is also what makes the process so rewarding.
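One way to read "low-cost simulations" is this: run a skill against a small set of cheap, scripted test cases, and require a minimum pass rate before spending real agent runs on it. The sketch below assumes exactly that. `validate`, `Case`, the 0.8 threshold, and the `fake_skill` stub (standing in for an actual agent call) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Case:
    prompt: str                   # simulated input for the skill
    check: Callable[[str], bool]  # True if the output is acceptable


def validate(skill: Callable[[str], str], cases: List[Case],
             threshold: float = 0.8) -> Tuple[float, bool]:
    """Run every case and return (pass_rate, passed_gate)."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = 0
    for case in cases:
        try:
            if case.check(skill(case.prompt)):
                passed += 1
        except Exception:
            pass  # a crash in the skill or the check counts as a failure
    rate = passed / len(cases)
    return rate, rate >= threshold


def fake_skill(prompt: str) -> str:
    """Hypothetical stand-in for an agent call: cheap and deterministic."""
    return prompt.upper()


cases = [
    Case("hello", lambda out: out == "HELLO"),
    Case("abc", lambda out: out.isupper()),
    Case("123", lambda out: out.isupper()),  # no letters, so isupper() is False
]
rate, ok = validate(fake_skill, cases)
print(f"pass rate {rate:.2f}, gate passed: {ok}")
```

Here two of the three cases pass, giving a pass rate of about 0.67. That is below the threshold, so the skill would go back for another iteration: the endless cycle in miniature.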

- Continual Learning: Importance of balancing old and new.

- Weight Updates: Necessary but watch out for speed.

- Reflection Over Trajectories: Useful but handle with care.

- Skill Creation: Using Deep Agent CLI and LangSmith Fetch.

- Validation: Essential for ensuring skill sustainability.

So diving into continual learning with deep agents isn't just theory; it's a game changer for skill creation. Mastering weight updates made the whole process smoother for me. Reflecting over trajectories then let me refine my skills even further. Finally, LangSmith Fetch significantly streamlined my workflow.

- Mastering weight updates is crucial for understanding how the model actually learns.

- Reflecting over trajectories allows you to refine and optimize skills.

- LangSmith Fetch helps streamline and simplify workflows.

Learning with deep agents is truly transformative for skill creation, but watch out for resource limits and growing complexity. Take the time to integrate these techniques into your AI projects. I encourage you to watch the full video for deeper insights; it's a valuable resource for anyone looking to transform their skill development process.


Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture, which he now applies for large organizations (Atos, BNP Paribas, beta.gouv). He focuses on two areas: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Continual Learning with Deepagents: A Complete Guide

Imagine an AI that learns like a human, continuously refining its skills. Welcome to the world of Deepagents. In the rapidly evolving AI landscape, continual learning is a game-changer. Deepagents harness this power by optimizing skills with advanced techniques. Discover how these intelligent agents use weight updates to adapt and improve. They reflect on their trajectories, creating new skills while always seeking optimization. Dive into the Langmith Fetch Utility and Deep Agent CLI. This complete guide will take you through mastering these powerful tools for an unparalleled learning experience.

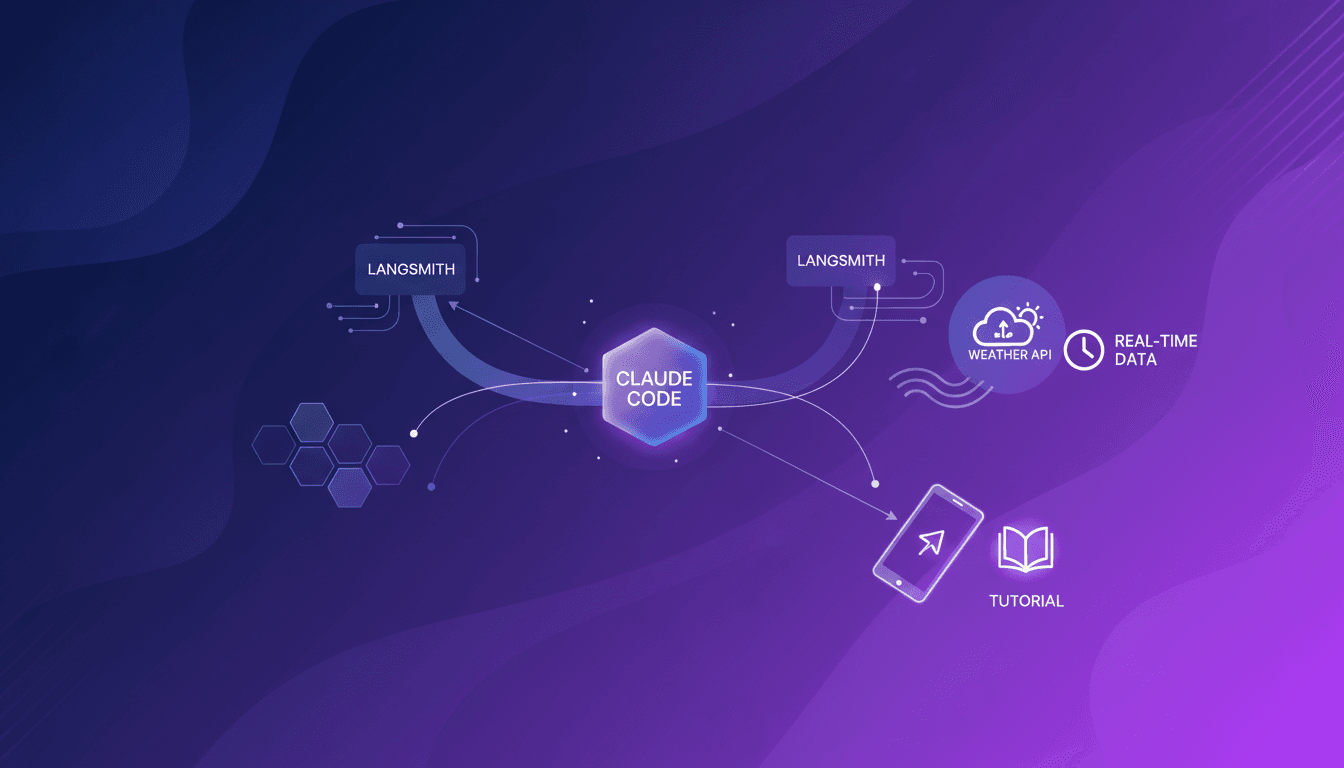

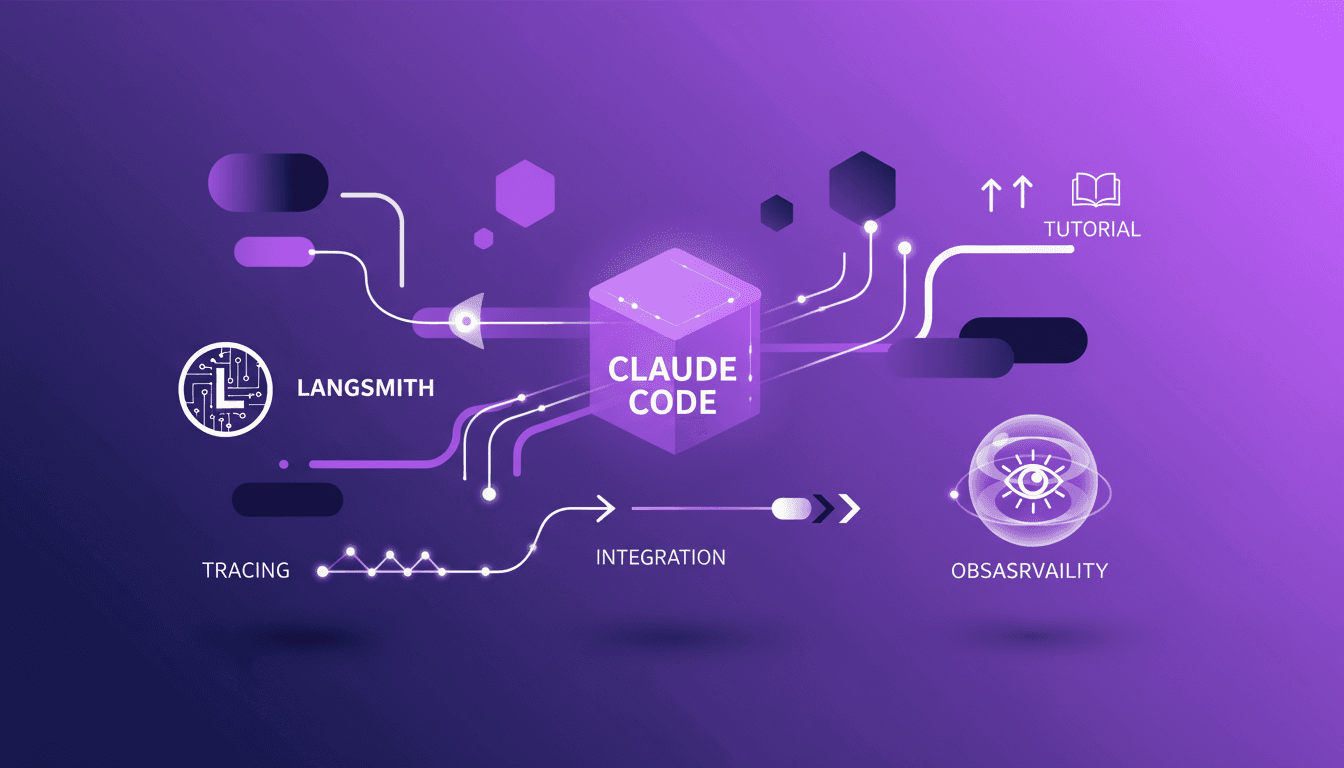

Integrate Claude Code with LangSmith: Tutorial

I remember the first time I tried to integrate Claude Code with LangSmith. It felt like trying to fit a square peg into a round hole. But once I cracked the setup, the efficiency gains were undeniable. In this article, I'll walk you through the integration of Claude Code with LangSmith, focusing on tracing and observability. We’ll use a practical example of retrieving real-time weather data to show how these tools work together in a real-world scenario. First, I connect Claude Code to my repo, then configure the necessary hooks. Watch out, tracing can quickly become a headache if poorly orchestrated. But when well piloted, the business impact is direct and impressive.

Claude Code-LangSmith Integration: Complete Guide

Step into a world where AI blends seamlessly into your workflow. Meet Claude Code and LangSmith. This guide reveals how these tools reshape your tech interactions. From tracing workflows to practical applications, master Claude Code's advanced features. Imagine fetching real-time weather data in just a few lines of code. Learn how to set up this powerful integration and leverage Claude Code's hooks and transcripts. Ready to revolutionize your digital routine? Follow the guide!

Managing Agent Memory: Practical Approaches

I remember the first time I had to manage an AI agent’s memory. It was like trying to teach a goldfish to remember its way around a pond. That's when I realized: memory management isn't just an add-on, it's the backbone of smart AI interaction. Let me walk you through how I tackled this with some hands-on approaches. First, we need to get a handle on explicit and implicit memory updates. Then, integrating tools like Langmith becomes crucial. We also dive into using session logs to optimize memory updates. If you've ever struggled with deep agent management and configuration, I'll share my tips to avoid pitfalls. This video is an advanced tutorial, so buckle up, it'll be worth it.

Agent Memory Management: Key Approaches

Imagine if your digital assistant could remember your preferences like a human. Welcome to the future of AI, where managing agent memory is key. This article delves into the intricacies of explicit and implicit memory updating, and how these concepts are woven into advanced AI systems. Explore how Cloud Code and deep agent memory management are revolutionizing digital assistant capabilities. From CLI configuration to context evolution through user interaction, dive into cutting-edge memory management techniques. How does Langmith fit into this picture? A practical example will illuminate the fascinating process of memory updating.