Subagents Architecture: Design Decisions Unpacked

I dove into the subagents architecture headfirst, and it can be a real game changer in multi-agent systems, but only if you get the design decisions right. The first problem I had to solve was orchestration: it's not just about setting subagents up, it's about making them work for you rather than the other way around. Three main categories of design decisions are at play: synchronous versus asynchronous invocation, tool design (where there are two main options), and context engineering. Get these right and your system becomes noticeably more efficient.

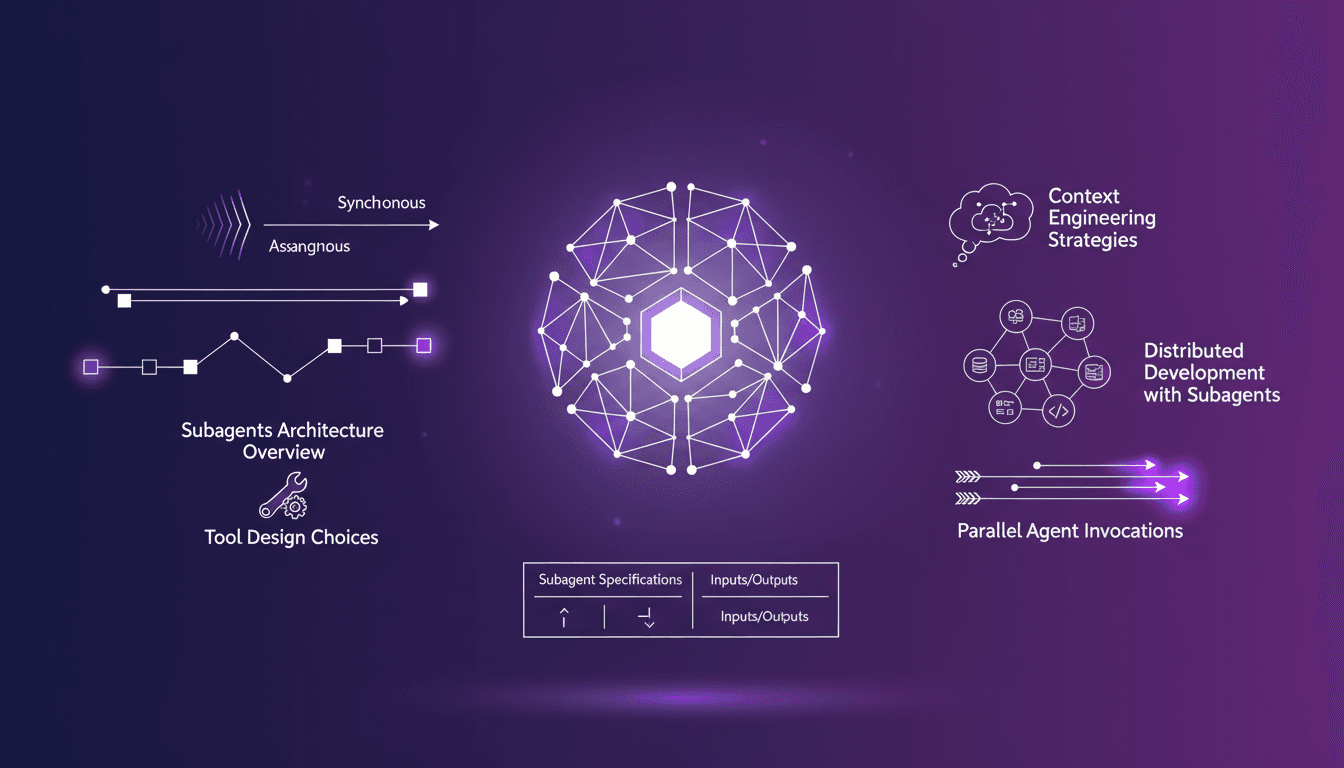

Subagents Architecture Overview

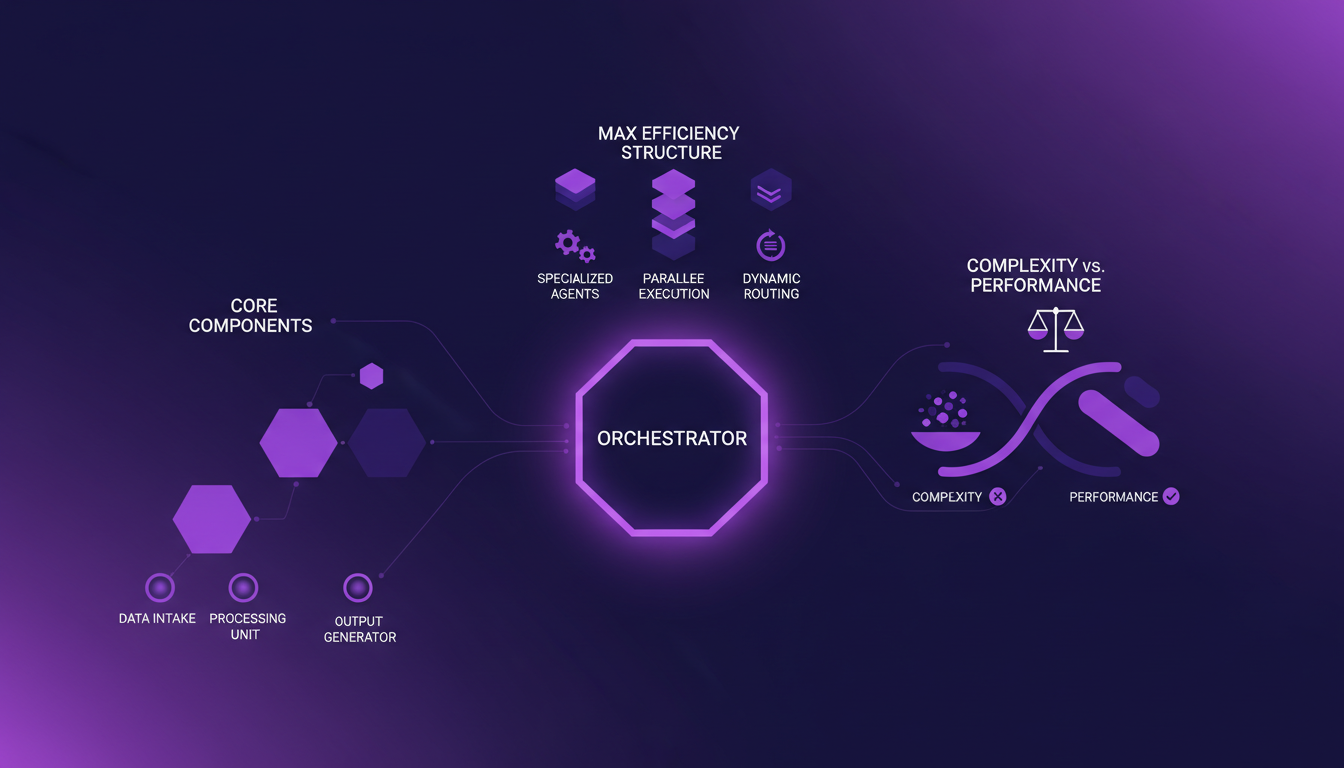

When I first started working with multi-agent systems, the subagents architecture stood out. It's like having a team of specialists ready to execute tasks in parallel. The core components are simple: a main agent receives requests and delegates tasks to subagents. The subagents operate independently but under the main agent's supervision, which enables distributed development and lets the system scale. In practice, I structure my subagents around a small set of deliberate design decisions.

The main challenge is balancing complexity and performance. Too much complexity can slow the system down, while too much simplicity might limit its capabilities. Here are the three main categories of design decisions: synchronous vs asynchronous invocation, tool design choices, and context engineering strategies. Each has its pros and cons, but together, they define how your subagents will interact and behave.

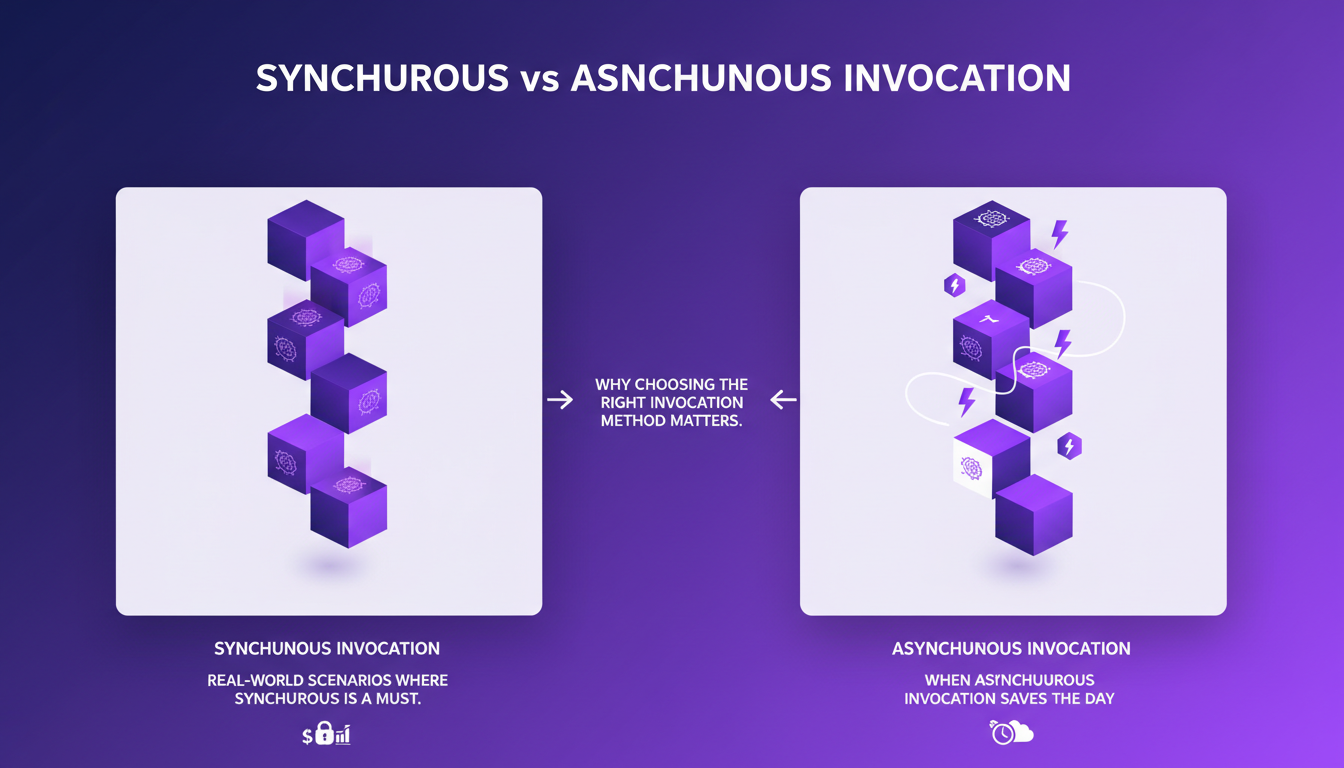

Synchronous vs Asynchronous Invocation

Choosing between synchronous and asynchronous invocation is crucial. I've found that synchronous invocation is ideal when the main agent needs the immediate results of a subagent to proceed. For instance, in a project where data analysis was critical, synchronous invocation ensured the consistency of results.

Conversely, asynchronous invocation fits scenarios where the main agent has to stay responsive. In another project, I used asynchronous calls for independent tasks so the main agent was never blocked. But beware: this approach can still introduce unexpected delays if the background tasks take longer than anticipated, since any result that depends on them has to wait.

- Synchronous: Simplicity, but can block the system.

- Asynchronous: More complex, but improves responsiveness.
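To make the trade-off concrete, here is a minimal sketch using Python's `asyncio`. The `run_subagent` function and the task names are hypothetical stand-ins (a real subagent would call a model rather than sleep), so treat this as an illustration of the two invocation styles, not a reference implementation.

```python
import asyncio
import time


async def run_subagent(name: str, task: str, delay: float = 0.1) -> str:
    """Hypothetical subagent: sleeps to simulate work, then returns a result."""
    await asyncio.sleep(delay)
    return f"{name} finished: {task}"


async def invoke_synchronously(tasks: dict[str, str]) -> list[str]:
    # The main agent waits for each subagent before starting the next one.
    results = []
    for name, task in tasks.items():
        results.append(await run_subagent(name, task))
    return results


async def invoke_asynchronously(tasks: dict[str, str]) -> list[str]:
    # All subagents start right away; the main agent collects results later.
    pending = [asyncio.create_task(run_subagent(n, t)) for n, t in tasks.items()]
    # ... the main agent could do other work here instead of waiting ...
    return list(await asyncio.gather(*pending))


def timed(coro_fn, tasks: dict[str, str]) -> tuple[list[str], float]:
    """Run one invocation style and measure its wall-clock time."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn(tasks))
    return results, time.perf_counter() - start


TASKS = {"researcher": "collect sources", "analyst": "summarize data"}
sync_results, sync_seconds = timed(invoke_synchronously, TASKS)
async_results, async_seconds = timed(invoke_asynchronously, TASKS)
print(f"synchronous: {sync_seconds:.2f}s, asynchronous: {async_seconds:.2f}s")
```

Both styles return the same results in the same order. The difference is how long the main agent is tied up: the synchronous version pays for each subagent in turn, while the asynchronous version only waits as long as the slowest one.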

Tool Design Choices in Multi-Agent Systems

In my experience, two tool design choices have proven crucial. First, using a tool per subagent offers granular control, perfect for projects requiring extensive customization. But beware, this can complicate configuration.

On the other hand, using a single dispatch tool simplifies management. This is an approach I've adopted in projects where simplicity was paramount. However, it can limit direct control over each subagent. Whichever option you choose, the subagents need to integrate cleanly with your existing system to avoid overload and inefficiency.
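The two options can be sketched side by side. Everything here is hypothetical: the `SUBAGENTS` registry, the `call_<name>` naming scheme, and the `dispatch` signature are assumptions made for illustration, and real subagents would be model calls rather than lambdas.

```python
from typing import Callable

# Hypothetical subagents: each is just a function from a task string to a result.
SUBAGENTS: dict[str, Callable[[str], str]] = {
    "researcher": lambda task: f"[researcher] notes on {task}",
    "writer": lambda task: f"[writer] draft about {task}",
}


def make_tool_per_subagent() -> dict[str, Callable[[str], str]]:
    """Option 1: expose one tool per subagent (for example 'call_researcher')."""
    return {f"call_{name}": agent for name, agent in SUBAGENTS.items()}


def dispatch(agent_name: str, task: str) -> str:
    """Option 2: a single dispatch tool that routes by subagent name."""
    if agent_name not in SUBAGENTS:
        raise ValueError(
            f"unknown subagent {agent_name!r}; known: {sorted(SUBAGENTS)}"
        )
    return SUBAGENTS[agent_name](task)


tools = make_tool_per_subagent()
per_tool_result = tools["call_researcher"]("vector databases")
dispatch_result = dispatch("researcher", "vector databases")
print(per_tool_result)
print(dispatch_result)
```

With one tool per subagent, each tool can carry its own description and parameters, which is where the granular control comes from. With a single dispatch tool, adding a subagent only means adding a registry entry, but the main agent has to pick the right name itself.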

Context Engineering Strategies

Context engineering is critical to how well subagents function. I've seen systems fail simply because agents were overloaded with too much information. To avoid this, I clearly define each subagent's specification, inputs, and outputs. This ensures that only the necessary information is passed along.

Context guides subagents' decisions. For instance, in a recommendation system, providing the right context allowed subagents to make relevant suggestions without overloading the end user. It's a delicate balance between providing enough information for informed decisions and avoiding information overload.

- Clearly define subagent specifications.

- Optimize inputs and outputs to prevent overload.

- Use context to guide subagents' decisions.
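One way to apply these three points is to declare each subagent's inputs and outputs up front and filter the main agent's state against that declaration. The sketch below assumes a hypothetical `SubagentSpec` class and a made-up recommendation subagent; the field names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubagentSpec:
    """Hypothetical spec: what a subagent does, what it reads, what it returns."""
    name: str
    description: str
    input_keys: tuple[str, ...]
    output_keys: tuple[str, ...]


def build_context(spec: SubagentSpec, state: dict) -> dict:
    """Pass the subagent only the fields its spec declares as inputs."""
    missing = [key for key in spec.input_keys if key not in state]
    if missing:
        raise KeyError(f"{spec.name} is missing required inputs: {missing}")
    return {key: state[key] for key in spec.input_keys}


def check_output(spec: SubagentSpec, output: dict) -> dict:
    """Reject results that do not match the declared output shape."""
    if set(output) != set(spec.output_keys):
        raise ValueError(
            f"{spec.name} returned {sorted(output)}, "
            f"expected {sorted(spec.output_keys)}"
        )
    return output


recommender = SubagentSpec(
    name="recommender",
    description="Suggests products based on recent purchases.",
    input_keys=("user_id", "recent_purchases"),
    output_keys=("suggestions",),
)

main_agent_state = {
    "user_id": 42,
    "recent_purchases": ["tent", "stove"],
    "full_chat_history": ["..."] * 500,  # large, and irrelevant to this subagent
    "billing_details": {"card": "redacted"},
}

context = build_context(recommender, main_agent_state)
result = check_output(recommender, {"suggestions": ["sleeping bag"]})
print(context)
```

The subagent never sees the chat history or billing details, and the main agent gets back a result with a predictable shape instead of free-form text it has to re-parse.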

Distributed Development with Subagents

Distributed development is what makes this architecture scale. With subagents, I managed parallel invocations without compromising system consistency. Clear input and output specifications for each subagent are what maintain that consistency.

Techniques such as managing parallel invocations and using message queues have helped me ensure that each agent receives the necessary information without duplication or loss. But beware, managing a distributed system requires constant rigor and attention to avoid inconsistencies.

- Distributed development for scalability.

- Manage parallel invocations.

- Ensure consistency in distributed systems.
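As a rough illustration of parallel invocations fed from a message queue, the sketch below uses an in-process `asyncio.Queue`. This is an assumption made to keep the example self-contained; a real distributed setup would more likely use an external broker. The worker names and payloads are hypothetical.

```python
import asyncio


async def worker(name: str, queue: asyncio.Queue, results: dict[int, str]) -> None:
    """Hypothetical subagent worker: takes one message at a time off the queue."""
    while True:
        task_id, payload = await queue.get()
        try:
            await asyncio.sleep(0.01)  # simulate the subagent doing its work
            results[task_id] = f"{name} handled {payload}"
        finally:
            queue.task_done()  # mark the message as processed exactly once


async def run_all(payloads: list[str], worker_count: int = 3) -> dict[int, str]:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict[int, str] = {}

    # Each task gets a unique id so duplicates or losses are easy to detect.
    for task_id, payload in enumerate(payloads):
        queue.put_nowait((task_id, payload))

    workers = [
        asyncio.create_task(worker(f"subagent-{i}", queue, results))
        for i in range(worker_count)
    ]
    await queue.join()  # returns once every message has been processed
    for w in workers:
        w.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


payloads = ["invoice", "report", "summary", "email", "chart"]
results = asyncio.run(run_all(payloads))
print(results)
```

Because each message is taken off the queue by exactly one worker and keyed by its id, comparing the result keys against the submitted ids is a cheap consistency check.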

In summary, subagents architecture offers incredible flexibility, but it requires meticulous planning and execution. By following these strategies, I've been able to build robust systems that adapt and evolve with changing needs.

Building with subagents isn't just about following a blueprint; it's about making informed design decisions that align with your system's needs. First, choose your invocation method—synchronous or asynchronous—based on your performance constraints. Then design your tools to maximize efficiency while respecting operational context limits. And don't underestimate context engineering; it sets the stage for your subagents to operate effectively.

- Choose the right invocation method for your system

- Design tools with a balance between performance and simplicity

- Don't overlook context engineering

These strategies, when applied well, can transform your workflows. But every decision has its trade-offs, and anticipating them is key. For a deeper dive, check out the original video 'Building with Subagents: Design Decisions' on YouTube.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

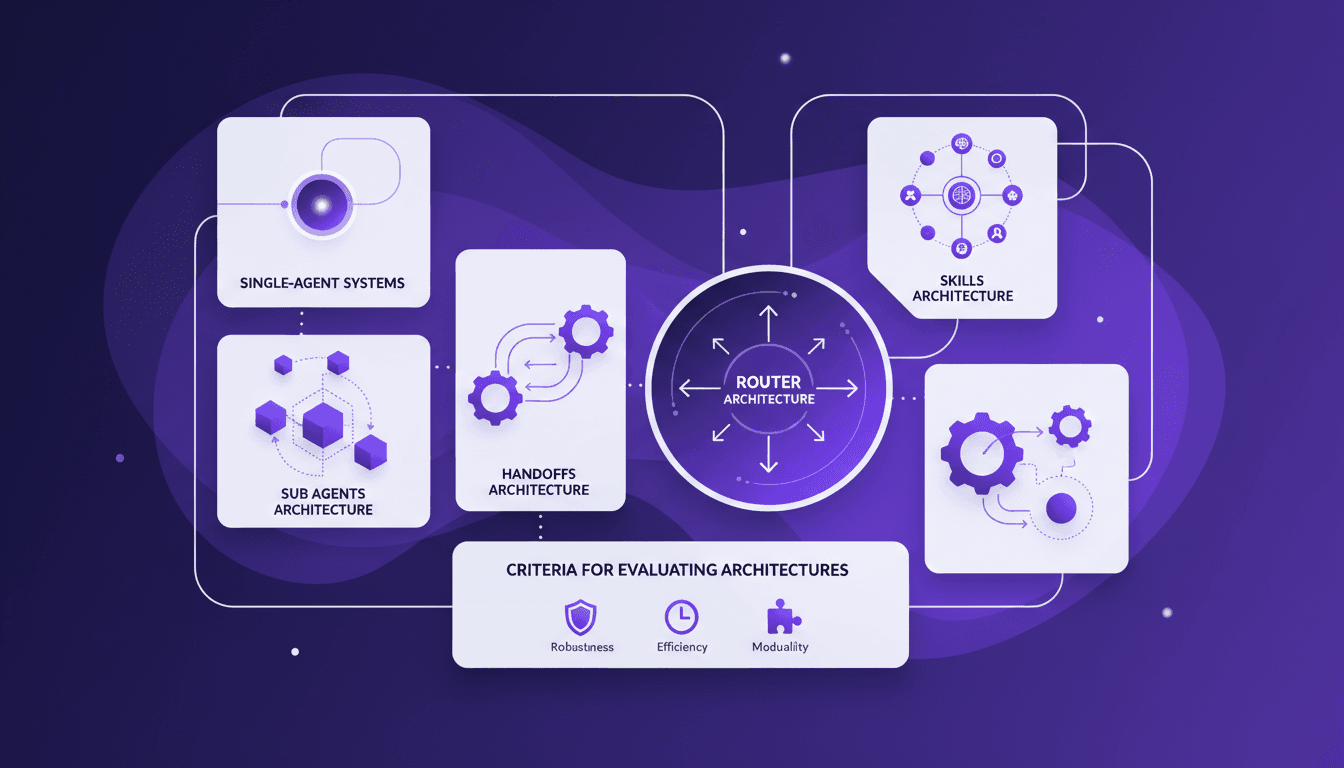

Choosing the Right Multi-Agent Architecture

I remember the first time I tried to implement a multi-agent system. Overwhelmed by the architecture choices, I made a few missteps before finding a workflow that actually works. Here’s how you can choose the right architecture without the headaches. Multi-agent systems can really change how we tackle complex tasks by distributing workload and enhancing interaction. But pick the wrong architecture, and you could face an efficiency and scalability nightmare. We'll explore sub agents, handoffs, skills, and router architectures, plus criteria for evaluating them. And why sometimes, starting with a single-agent system is a smart move. I'll share where I stumbled and where I succeeded to help you avoid the pitfalls. Ready to dive in?

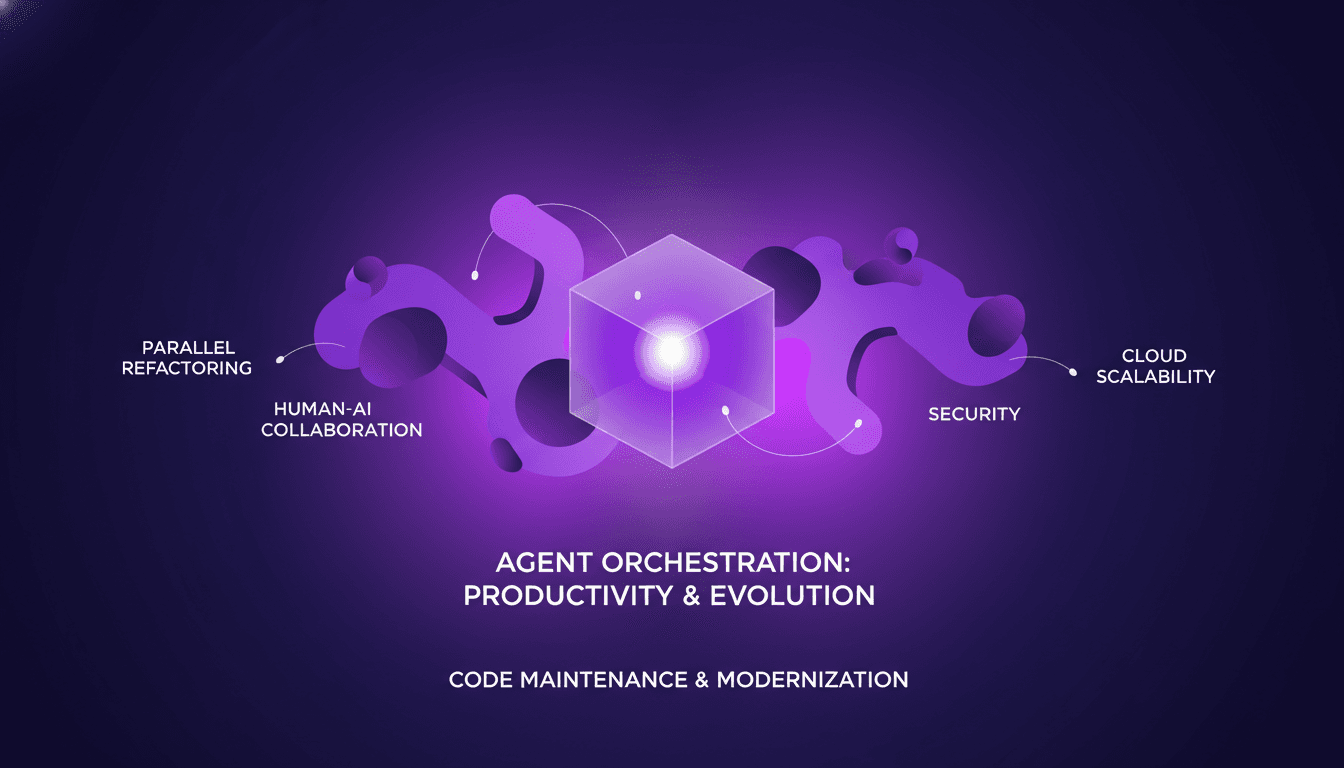

Automating Large-Scale Refactors with Agents

I've been knee-deep in code refactoring for over a decade, and let me tell you, automating this beast with parallel agents is a game changer. We're talking about an orchestration that can revolutionize your workflow. First, I set up my agents, then orchestrate them to maximize productivity impact. This is where the evolution of coding agents comes into play, having real impacts on software development. But watch out, it's not challenge-free. Security and scalability of cloud environments for agent execution are critical. Dive in to discover how to effectively collaborate with AI in software engineering.

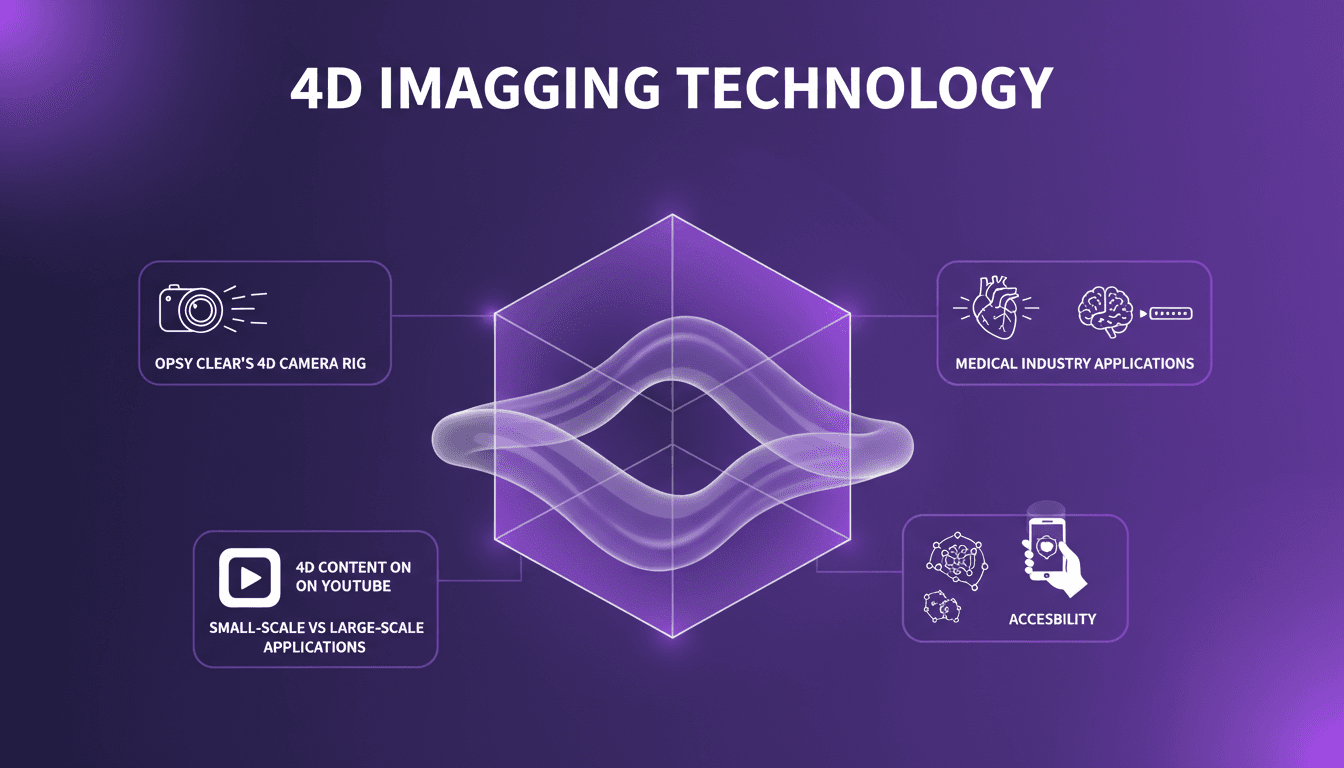

4D Imaging: Transform Your Workflow

I remember the first time I saw a 4D render—it felt like stepping into the future. But the real game changer was when I got my hands on Opsy Clear's 4D camera rig. This isn't sci-fi anymore, it's here, ready to revolutionize your workflow. Whether you're in the medical field or a YouTube content creator, mastering 4D imaging can truly set you apart. Let me walk you through this tech, from its medical applications to its potential on YouTube, and how it's accessible to everyone, regardless of your project's scale.

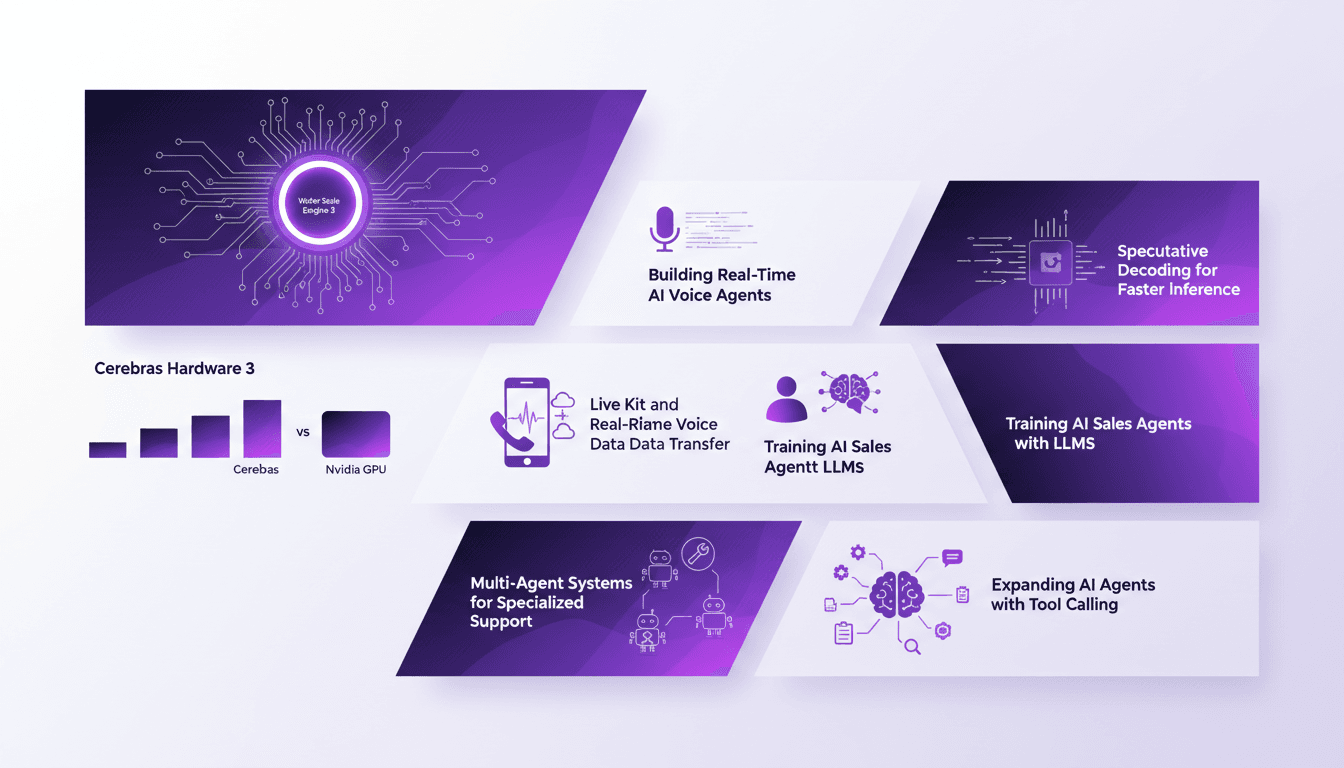

Building a Real-Time AI Agent with Cerebras

I remember the first time I connected a Cerebras system to my AI workflow. The speed was jaw-dropping, but it quickly became clear it wasn't just about speed. It's about orchestrating everything efficiently, from speculative decoding to real-time voice data transfer. With Cerebras's Wafer Scale Engine 3, we're pushing the boundaries of AI inference and real-time applications. In this article, I'll take you through the process of building a real-time AI sales agent using Cerebras hardware, including a comparison with Nvidia GPUs. We'll explore how speculative decoding and Live Kit technology are revolutionizing user experience. Buckle up, because we'll also dive into training AI sales agents with LLMs and expanding into multi-agent systems for specialized support. Let's get started!

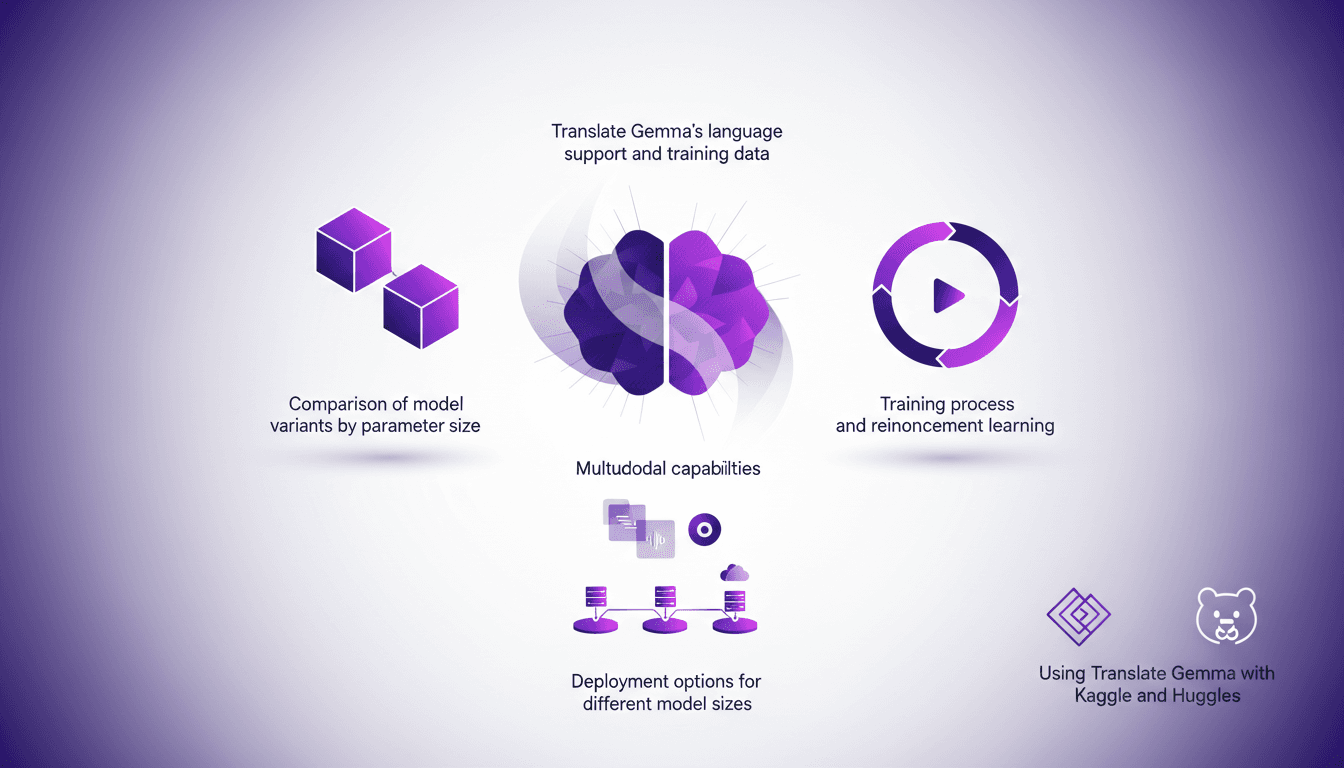

Translate Gemma: Multimodal Capabilities in Action

I've been diving into Translate Gemma, and let me tell you, it's a real game changer for multilingual projects. First, I set it up with my existing infrastructure, then explored its multimodal capabilities. With a model supporting 55 languages and training data spanning 500 more, it's not just about language—it's about how you deploy and optimize it for your needs. I'll walk you through how I made it work efficiently, covering model variant comparisons, training processes, and deployment options. Watch out for the model sizes: 4 billion, 12 billion, up to 27 billion parameters—this is heavy-duty stuff. Ready to see how I used it with Kaggle and Hugging Face?