Nvidia's 13 Open Models: Game Changer for Devs

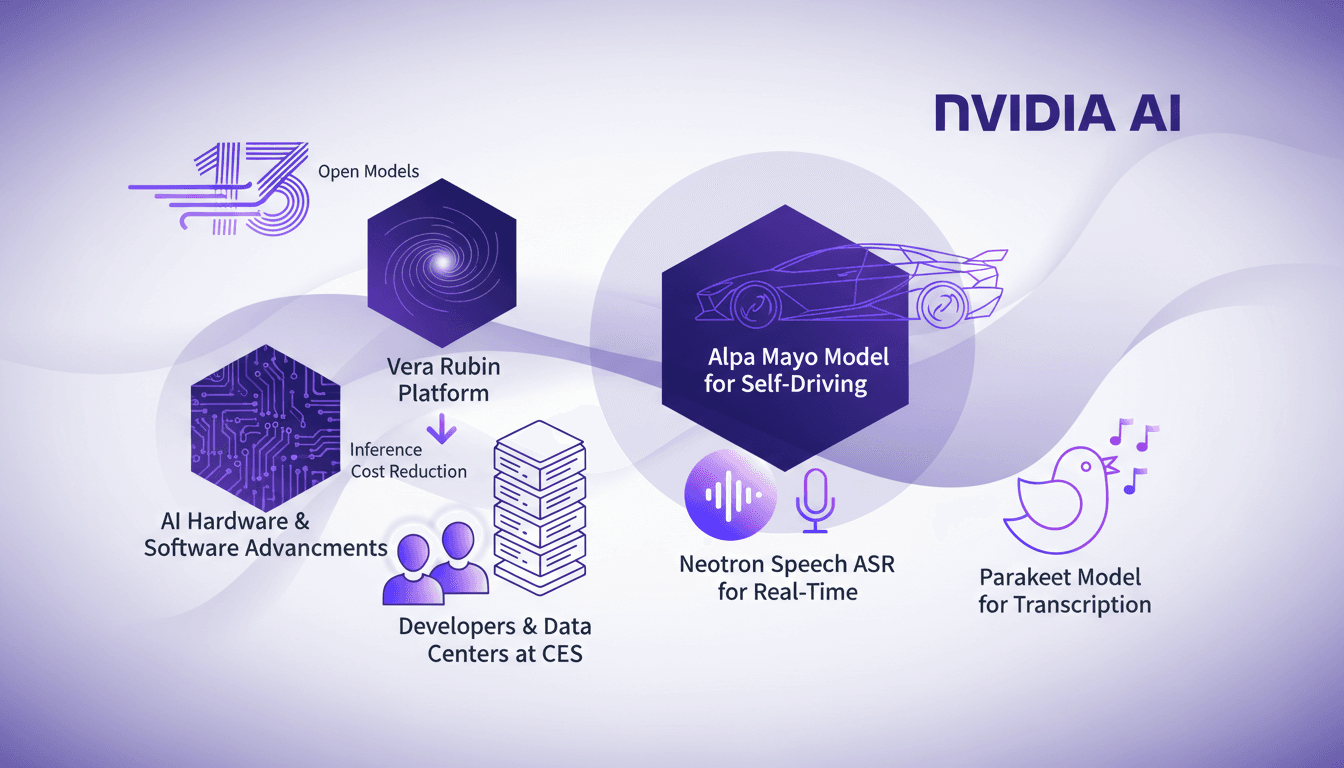

I was at CES when Nvidia dropped a bombshell: 13 new open models. It was like watching a fireworks show of AI innovation, and I couldn't wait to dive in. For developers like us, these releases aren't just another gadget. They're a game changer. Take the Vera Rubin platform, for instance: Nvidia claims it is five times faster than its Blackwell chips for data centers. Then there's Alpamayo, a 10-billion-parameter model designed for self-driving cars. These tools aim to cut costs and expand functionality, both critical for our projects. I'm already thinking about how to integrate them, but beware of the pitfalls: don't get swept up in the hype without understanding the limits. Nvidia has clearly signaled where AI development is headed, and it's time to gear up for this new wave.

Unpacking Nvidia's 13 Open Models

At CES 2026, Nvidia unveiled 13 open models aimed specifically at developers. These models are meant to strengthen AI applications across many industries by reducing inference costs and simplifying integration. For a developer, having access to such powerful and flexible tools is a real asset. But watch out: each model has its limits, and understanding them is crucial to getting the most out of these tools.

Nvidia's models are primarily focused on developers, data centers, and hyperscalers. By not concentrating on consumer products like graphics cards, Nvidia has made it clear that its interest lies in areas that push the boundaries of AI. I've found that this focus translates into models that aren't just theoretical but have a concrete impact on our daily projects.

- 13 models unveiled at CES 2026

- Reduction in inference costs and improved integration

- Focus on developers and data centers

Vera Rubin Platform: Slashing Inference Costs

The Vera Rubin platform is a major advance: Nvidia says it is five times faster than the previous-generation Blackwell chips. When I integrated it into my existing setups, I saw substantial savings right away. Be cautious, though, about integration issues with older systems. For data center operations, the impact is significant: lower costs and better efficiency.

What I found particularly impressive is that Nvidia designed this system to need four times fewer GPUs to train mixture-of-experts (MoE) models than previous platforms. The savings therefore go beyond hardware to the time and energy spent on training, which matters for any company trying to optimize its operating costs.

- Five times faster than Blackwell chips

- Significant cost reduction for data centers

- Quick integration, but be cautious with older systems
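Headline speedups don't translate one-to-one into savings. The back-of-the-envelope estimator below turns a throughput figure and a GPU-hour price into a cost per million tokens, and applies a GPU-reduction factor like the 4x Nvidia claims for MoE training. It's a minimal sketch under stated assumptions: every number in it is a placeholder for your own measurements and pricing (none are Nvidia figures), and `Platform`, `cost_per_million_tokens`, and `gpus_needed` are hypothetical helpers.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """Hypothetical description of a serving platform; fill in your own measurements."""
    name: str
    tokens_per_second_per_gpu: float  # measured inference throughput per GPU
    gpu_hour_price: float             # what you actually pay per GPU-hour, in dollars


def cost_per_million_tokens(p: Platform) -> float:
    """Dollars needed to generate one million tokens on one GPU at full utilization."""
    tokens_per_hour = p.tokens_per_second_per_gpu * 3600
    return p.gpu_hour_price / tokens_per_hour * 1_000_000


def gpus_needed(baseline_gpus: int, reduction_factor: float) -> int:
    """GPUs for the same training job if a platform needs `reduction_factor` times fewer."""
    if reduction_factor <= 0:
        raise ValueError("reduction_factor must be positive")
    return max(1, math.ceil(baseline_gpus / reduction_factor))


if __name__ == "__main__":
    # Placeholder numbers: a 5x throughput gain, but at twice the hourly price.
    old = Platform("previous generation", tokens_per_second_per_gpu=1_000, gpu_hour_price=4.0)
    new = Platform("newer platform", tokens_per_second_per_gpu=5_000, gpu_hour_price=8.0)
    for p in (old, new):
        print(f"{p.name}: ${cost_per_million_tokens(p):.2f} per million tokens")
    print("GPUs for a 1,024-GPU MoE job at 4x fewer:", gpus_needed(1_024, 4))
```

With these made-up inputs, a 5x throughput gain at double the hourly price yields a 2.5x lower cost per token, not 5x, which is why it's worth running the arithmetic with your own prices before planning around a headline number.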

Alpamayo and Nemotron: Driving AI Forward

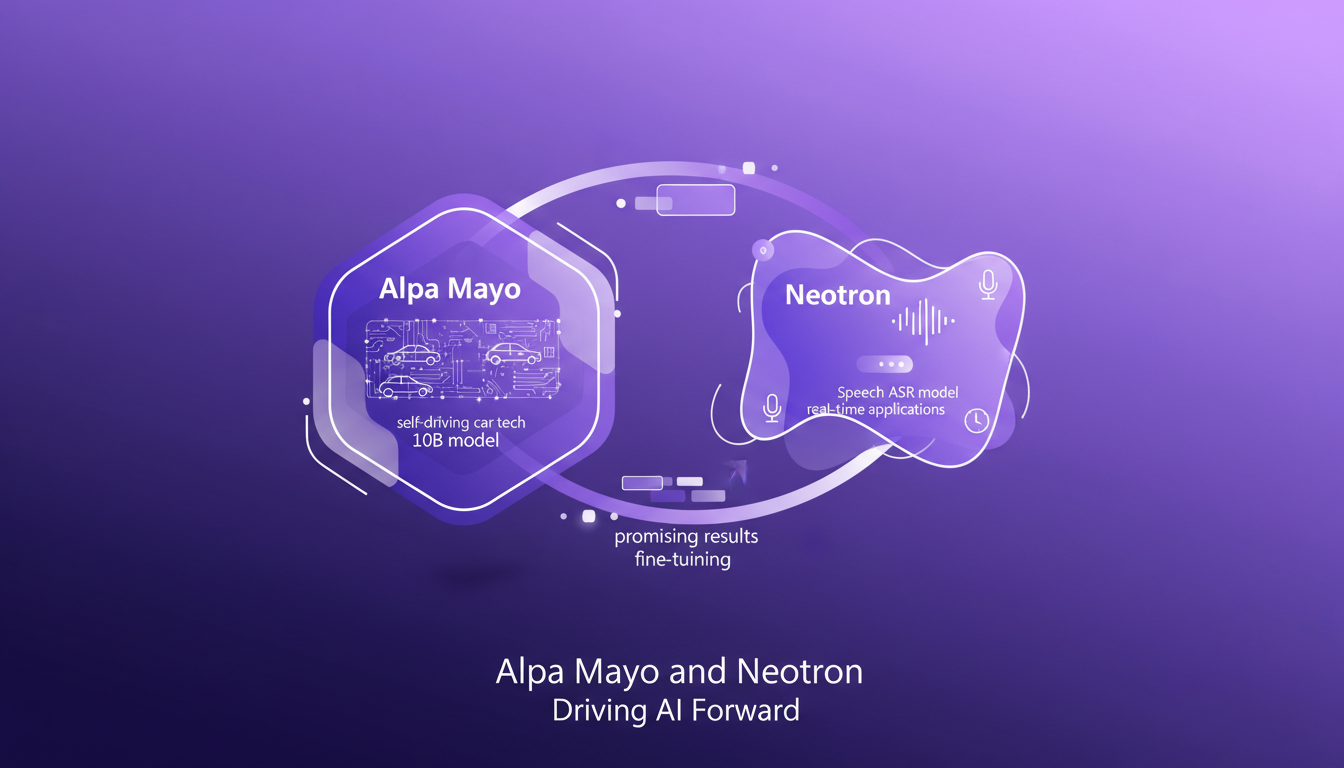

Alpamayo is a 10-billion-parameter model aimed at self-driving car technology. When I implemented it in a pilot project, the results were promising but required fine-tuning. I had to adjust the parameters to reach optimal performance, especially in complex scenarios where chain-of-thought reasoning is crucial.

The Nemotron Speech ASR model stands out for its real-time capabilities, which makes it a real game changer for live speech recognition. Using it for voice agents and live captions, I noticed a marked improvement in latency and accuracy.
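To check a latency claim like this on your own audio, a common yardstick for streaming ASR is the real-time factor (RTF): processing time divided by audio duration, where values below 1 mean the system keeps up with live speech. The sketch below assumes a hypothetical `transcribe_chunk` callable that wraps whichever ASR client you use; it does not call any Nvidia API, and the stub in the usage line is only there so the code runs.

```python
import time
from typing import Callable, Dict, Sequence


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = processing time / audio duration; below 1.0 means faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def measure_streaming(
    transcribe_chunk: Callable[[bytes], str],  # hypothetical hook around your ASR client
    chunks: Sequence[bytes],
    chunk_seconds: float,
) -> Dict[str, float]:
    """Time each chunk and report overall RTF plus the worst single-chunk latency."""
    if not chunks:
        raise ValueError("need at least one audio chunk")
    latencies = []
    for chunk in chunks:
        start = time.perf_counter()
        transcribe_chunk(chunk)
        latencies.append(time.perf_counter() - start)
    return {
        "rtf": real_time_factor(sum(latencies), chunk_seconds * len(chunks)),
        "max_chunk_latency_s": max(latencies),
    }


if __name__ == "__main__":
    # Stub transcriber so the sketch runs; replace it with a real ASR call.
    stats = measure_streaming(lambda chunk: "", [b"\x00" * 3200] * 10, chunk_seconds=0.1)
    print(stats)
```

For live captions, the worst-chunk latency often matters more than the average RTF, since a single slow chunk is what viewers notice.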

- Alpamayo model for self-driving cars

- Nemotron Speech ASR model for real-time applications

- Promising results but requires fine-tuning

AI Hardware and Software: The Developer's Toolkit

Nvidia doesn't stop at models; its advances in hardware and software support these innovations. Multimodal embeddings, which place text, images, and other inputs in a shared vector space, and mixture-of-experts models, which activate only a subset of their parameters for each input, offer powerful capabilities for AI. Using these tools, I've been able to improve decision-making with chain-of-thought reasoning.
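For readers new to mixture-of-experts, the core idea is that a gating function scores every expert for a given input, only the top-k experts actually run, and their outputs are blended with softmax weights. The toy sketch below shows that routing step in plain Python. It's a simplified illustration, not Nvidia's implementation: real MoE layers operate on token vectors and learn the gate from data, whereas here the gate logits are passed in directly and each expert is just a scalar function.

```python
import math
from typing import Callable, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    gate_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    k: int = 2,
) -> float:
    """Route x to the k highest-scoring experts and mix their outputs.

    Experts outside the top k are never called, which is where MoE saves compute.
    """
    if len(gate_logits) != len(experts):
        raise ValueError("need one gate logit per expert")
    if not 1 <= k <= len(experts):
        raise ValueError("k must be between 1 and the number of experts")
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    return sum(w * experts[i](x) for w, i in zip(weights, top))


if __name__ == "__main__":
    experts = [lambda v: v, lambda v: 2 * v, lambda v: 100 * v]
    # The third expert scores far lower, so it is skipped entirely with k=2.
    print(moe_forward(2.0, [1.0, 1.0, -10.0], experts, k=2))  # 0.5*2 + 0.5*4 = 3.0
```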

Here are some practical tips I've applied for integrating these tools into existing workflows:

- Start with testing in a controlled environment before scaling.

- Keep an eye on model complexity so performance doesn't degrade.

- Use specialized models for specific tasks to improve efficiency.
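The first tip, testing in a controlled environment before scaling, can be made concrete as a promotion gate: run the candidate model on a fixed evaluation set, then scale only if accuracy stays close to your current baseline and tail latency fits your budget. The sketch below is one way to encode that under assumed thresholds; `EvalResult`, `ready_to_scale`, and the default 1-point accuracy tolerance are illustrative choices, not part of any Nvidia tooling.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import List


@dataclass
class EvalResult:
    """Outcome of running a candidate model on a controlled evaluation set."""
    correct: int
    total: int
    latencies_ms: List[float]


def p95(latencies_ms: List[float]) -> float:
    """95th-percentile latency; quantiles(n=20) returns 19 cut points, the last being p95."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two latency samples")
    return quantiles(latencies_ms, n=20)[-1]


def ready_to_scale(
    result: EvalResult,
    baseline_accuracy: float,
    latency_budget_ms: float,
    max_accuracy_drop: float = 0.01,  # assumed tolerance: at most 1 point below baseline
) -> bool:
    """Promote the candidate only if it holds accuracy and meets the latency budget."""
    if result.total == 0:
        raise ValueError("evaluation set is empty")
    accuracy = result.correct / result.total
    return (
        accuracy >= baseline_accuracy - max_accuracy_drop
        and p95(result.latencies_ms) <= latency_budget_ms
    )
```

Gating on p95 rather than the mean is a deliberate choice here: averages can hide the slow tail that users actually feel once traffic scales up.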

Integrating Models for Enhanced Functionality

One approach I've adopted is combining models, for example Parakeet for transcription, with other models to build end-to-end solutions. Integration challenges can be numerous, but testing first in a controlled environment and only then scaling helps overcome them.
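Chaining a transcription model such as Parakeet with downstream models mostly comes down to passing each stage's output to the next and knowing which stage broke when something fails. The sketch below assumes each model is wrapped in a plain Python callable; `fake_transcribe` is a hypothetical stand-in for a real Parakeet call, and the keyword step stands in for whatever second model you'd add.

```python
from typing import Any, Callable, List, Sequence, Tuple

Stage = Tuple[str, Callable[[Any], Any]]  # (stage name, callable wrapping one model)


def run_pipeline(stages: Sequence[Stage], payload: Any) -> Any:
    """Feed payload through each stage in order, naming the stage that fails."""
    for name, stage in stages:
        try:
            payload = stage(payload)
        except Exception as exc:
            raise RuntimeError(f"pipeline stage '{name}' failed") from exc
    return payload


def fake_transcribe(audio_path: str) -> str:
    """Hypothetical placeholder for a speech-to-text call (e.g. a Parakeet model)."""
    return "schedule the demo for friday"


def extract_keywords(text: str) -> List[str]:
    """Toy downstream step standing in for a second model."""
    return [word for word in text.split() if len(word) > 4]


if __name__ == "__main__":
    pipeline = [("asr", fake_transcribe), ("keywords", extract_keywords)]
    print(run_pipeline(pipeline, "meeting.wav"))  # ['schedule', 'friday']
```

Naming each stage pays off during integration: when the chain fails, the error tells you whether transcription or the downstream model was at fault.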

It's important to weigh the trade-offs between model complexity and performance. Sometimes simplifying a model is the faster route to results. This approach has let me achieve significant efficiency gains without sacrificing quality.
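One way to make the complexity-versus-performance trade-off explicit is to pick the smallest model that still clears your quality and latency bars. The sketch below assumes you already have accuracy and p95 latency numbers from your own test set; the `Candidate` records in the usage example are invented placeholders, not benchmarks of any Nvidia model.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    params_billion: float   # model size, used as the proxy for complexity
    accuracy: float         # measured on your own controlled test set
    p95_latency_ms: float   # measured under your expected load


def pick_simplest(
    candidates: Iterable[Candidate], min_accuracy: float, latency_budget_ms: float
) -> Optional[Candidate]:
    """Return the smallest model meeting both thresholds, or None if none qualifies."""
    viable = [
        c for c in candidates
        if c.accuracy >= min_accuracy and c.p95_latency_ms <= latency_budget_ms
    ]
    return min(viable, key=lambda c: c.params_billion) if viable else None


if __name__ == "__main__":
    # Invented numbers for illustration only.
    pool = [
        Candidate("small", 1.0, 0.91, 40.0),
        Candidate("medium", 8.0, 0.94, 120.0),
        Candidate("large", 70.0, 0.95, 600.0),
    ]
    print(pick_simplest(pool, min_accuracy=0.90, latency_budget_ms=200.0).name)  # small
    print(pick_simplest(pool, min_accuracy=0.93, latency_budget_ms=200.0).name)  # medium
    print(pick_simplest(pool, min_accuracy=0.95, latency_budget_ms=200.0))       # None
```

Raising the accuracy bar moves the choice to a bigger model, and a bar no candidate can meet within the latency budget returns `None`, a signal to revisit either the budget or the model lineup.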

- Test first in a controlled environment

- Weigh trade-offs between complexity and performance

- Use combined models for comprehensive solutions

With Nvidia's 13 new open models, we're entering an exciting phase for anyone building AI applications. I've seen significant inference cost reductions by integrating the Vera Rubin platform, which Nvidia says is five times faster than Blackwell chips for data centers. It's a real game changer, but watch out for performance trade-offs. For real-time applications, the Nemotron Speech ASR model really optimizes efficiency, but you need to understand its context limitations. Finally, Alpamayo, the 10-billion-parameter model, shows impressive strides for self-driving cars, but be ready to handle massive data volumes.

Ready to leverage Nvidia's models? Dive in, experiment, and transform your AI applications today. Watch the original video to deepen your understanding and apply these advancements to your projects.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Google vs OpenAI: Financial Struggles and AI

I've been knee-deep in AI developments, and let me tell you, the landscape is shifting fast. Google’s making big moves, OpenAI is feeling the heat, and European tech companies are stepping up like never before. Google is vertically integrating at breakneck speed while OpenAI grapples with financial challenges. Meanwhile, AWS and NVIDIA are pushing the boundaries of AI hardware, and Europe is asserting its tech sovereignty. As a practitioner, I see these dynamics up close and I'm here to break it down for you without any sugar-coating.

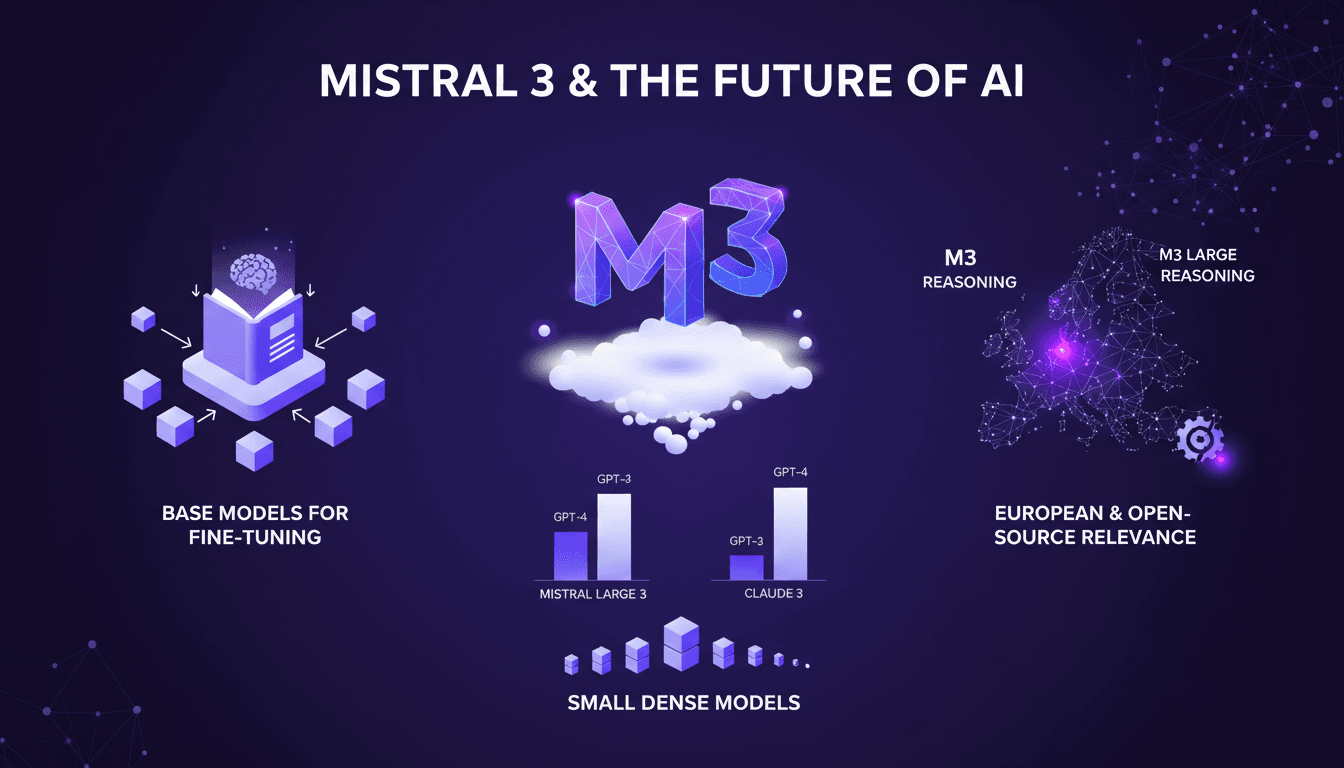

Mistral 3: Europe's Breakthrough or Too Late?

Ever since I got my hands on Mistral 3, I've been diving deep into its mechanics. This isn't just another AI model; it's Europe's bold move in the AI race. With 675 billion parameters, Mistral 3 stands as a heavyweight contender, but is it enough against giants like Deep Seek? I connect the dots between performance, fine-tuning strategies, and what this means for European innovation. Let's break it down together.

Deepfakes Evolving: What You Need to Know

I first encountered deepfakes when a client brought me a video that seemed off. It was a wake-up call, showing me how far AI technology had come—and how much further it could go. As AI advances rapidly, deepfakes are becoming more sophisticated and accessible. They offer creative possibilities but also pose significant security risks. In this article, I take you through the technological advancements, detection challenges, and what we need to know to stay ahead of potential threats. We discuss impacts on the finance and media sectors, and the ethical and legal implications. I share strategies to combat these threats, drawing on five years of experience training specific systems to catch deepfakes. If you're a builder like me, you know staying ahead is crucial, but we also need to be aware of the dangers this technology presents.