Hidden Features Making AI Agents Production-Ready

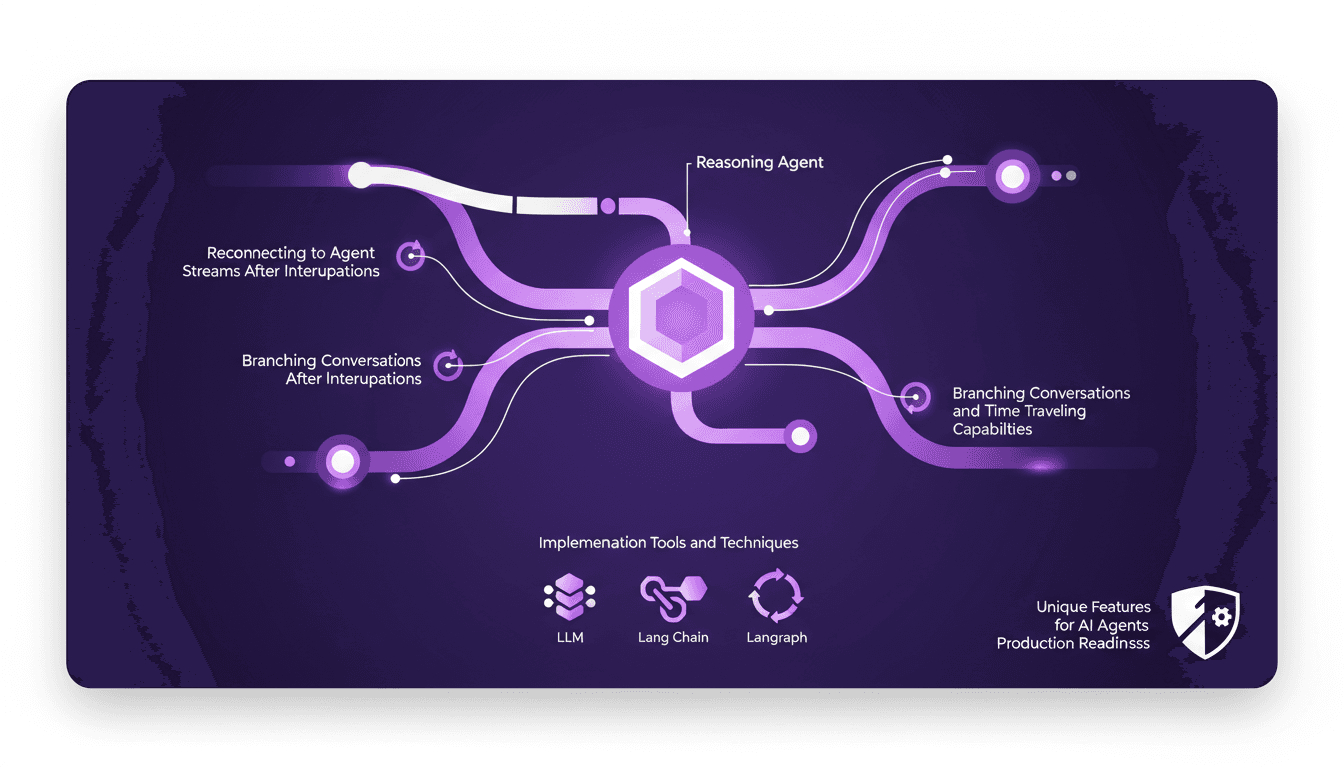

I've spent countless hours in the trenches fine-tuning AI agents, and making them not just smart but truly production-ready is a real challenge. AI agents are evolving fast, and while most people settle for the basic features, making agents robust for real-world applications means digging into lesser-known ones. Here's how I use three of those hidden features to improve efficiency and reliability.

The first is pairing reasoning agents with streaming thought processes, which lets me follow the agent's work in real time. The second is reconnecting to agent streams after an interruption; trust me, it has saved me more than once. The third is branching conversations with time-travel capabilities, which works like a time machine for your agents. I've honed these techniques with LLMs, LangChain, and LangGraph, and if you want your AI agents to be production-ready, they are indispensable.

Reasoning Agents and Streaming Thought Processes

When I first started setting up reasoning agents, it became clear how essential it is to stream their thinking process. Instead of delivering only a final answer, showing the reasoning as it unfolds gives users vital context and lets them intervene when necessary. It also keeps that context visible throughout the interaction.

However, there's a delicate balance between complexity and performance. Streaming too much of the thought process can overload the system and slow down the user experience. I fell into this trap several times before finding the right balance.

- Set up reasoning agents for dynamic decision-making.

- Maintain context with streaming thought processes.

- Avoid common pitfalls related to excessive complexity.
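
To make that balance concrete, here is a minimal TypeScript sketch of the rendering side, assuming the agent's stream arrives as chunks already tagged as either reasoning or answer text. The `StreamChunk` shape, `applyChunk`, `renderAll`, and the `maxReasoningChars` cap are hypothetical names for illustration, not a LangChain API. The idea is simply to render the thought process as it streams while capping how much of it reaches the UI, and never truncating the final answer.

```typescript
// Hypothetical chunk shape for illustration; real providers and LangChain versions
// label reasoning content differently.
type StreamChunk =
  | { kind: "reasoning"; text: string }
  | { kind: "answer"; text: string };

interface RenderState {
  reasoning: string; // thought process shown to the user, possibly truncated
  answer: string; // final answer, always kept in full
  droppedReasoningChars: number; // reasoning characters not rendered because of the cap
}

// Folds one streamed chunk into the UI state. Reasoning is capped at maxReasoningChars
// so a verbose model cannot flood the interface; answer text is never truncated.
function applyChunk(state: RenderState, chunk: StreamChunk, maxReasoningChars: number): RenderState {
  if (chunk.kind === "answer") {
    return { ...state, answer: state.answer + chunk.text };
  }
  const room = Math.max(0, maxReasoningChars - state.reasoning.length);
  const kept = chunk.text.slice(0, room);
  return {
    ...state,
    reasoning: state.reasoning + kept,
    droppedReasoningChars: state.droppedReasoningChars + (chunk.text.length - kept.length),
  };
}

// Applies chunks in arrival order and calls onUpdate after each one so the UI can re-render.
// With a live async stream, the same loop would be written as `for await (const chunk of stream)`.
function renderAll(
  chunks: readonly StreamChunk[],
  maxReasoningChars: number,
  onUpdate?: (state: RenderState) => void,
): RenderState {
  let state: RenderState = { reasoning: "", answer: "", droppedReasoningChars: 0 };
  for (const chunk of chunks) {
    state = applyChunk(state, chunk, maxReasoningChars);
    onUpdate?.(state);
  }
  return state;
}
```

A character cap is only one way to keep the thought process lightweight; collapsing it behind a toggle is another, depending on how much context your users actually need.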

Reconnecting to Agent Streams After Interruptions

Handling interruptions is a constant challenge. By using a thread ID together with reconnect on mount, I've kept conversations continuous even after page refreshes or dropped connections. This is crucial in real-world scenarios, where losing an in-progress response forces the user to start over.

There are trade-offs between reconnection speed and data integrity, though. In my experience, it's sometimes better to prioritize integrity, even if it means a slight delay in data recovery.

- Use a thread ID to persist conversation state.

- Enable reconnect on mount to retrieve conversations after interruptions.

- Keep downtime to a minimum while reconnecting.
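
Here is a minimal TypeScript sketch of the reconnection pattern, written against hypothetical in-memory stand-ins (`MemoryStore`, `FakeAgentServer`, `ChatSession`) rather than the real LangGraph SDK, whose hook options are worth checking in its own documentation. It assumes the server keeps an ordered, numbered event log per thread: the client persists the thread ID, reattaches to it on mount, and applies only the events it has not seen yet, in order.

```typescript
// Hypothetical key-value store; in a browser this could wrap sessionStorage.
interface KeyValueStore {
  get(key: string): string | null;
  set(key: string, value: string): void;
}

class MemoryStore implements KeyValueStore {
  private data: Record<string, string> = {};
  get(key: string): string | null {
    return Object.prototype.hasOwnProperty.call(this.data, key) ? this.data[key] : null;
  }
  set(key: string, value: string): void {
    this.data[key] = value;
  }
}

interface AgentEvent {
  seq: number; // 1-based position in the thread's event log
  text: string;
}

// Hypothetical agent server that keeps an ordered event log per thread,
// so a client can ask for "everything after event N" when it reconnects.
class FakeAgentServer {
  private logs: Record<string, AgentEvent[]> = {};
  private nextThread = 1;

  createThread(): string {
    const id = `thread-${this.nextThread++}`;
    this.logs[id] = [];
    return id;
  }

  emit(threadId: string, text: string): void {
    const log = this.logs[threadId];
    if (!log) throw new Error(`unknown thread ${threadId}`);
    log.push({ seq: log.length + 1, text });
  }

  eventsAfter(threadId: string, afterSeq: number): AgentEvent[] {
    return (this.logs[threadId] || []).filter((e) => e.seq > afterSeq);
  }
}

// Client-side session. Mounting reuses the stored thread ID, so a page refresh
// reattaches to the same conversation instead of starting a new one.
class ChatSession {
  readonly threadId: string;
  readonly rendered: string[] = [];
  private lastSeq = 0; // last event applied to `rendered`
  private server: FakeAgentServer;

  constructor(server: FakeAgentServer, store: KeyValueStore) {
    this.server = server;
    const saved = store.get("threadId");
    this.threadId = saved !== null ? saved : server.createThread();
    store.set("threadId", this.threadId);
  }

  // Fetches events produced since lastSeq and applies them strictly in order.
  // A gap in sequence numbers stops the sync (to be retried later) rather than
  // rendering an incomplete conversation: integrity first, speed second.
  sync(): number {
    let applied = 0;
    for (const event of this.server.eventsAfter(this.threadId, this.lastSeq)) {
      if (event.seq !== this.lastSeq + 1) break;
      this.rendered.push(event.text);
      this.lastSeq = event.seq;
      applied++;
    }
    return applied;
  }
}
```

The gap check in `sync()` is where the speed-versus-integrity trade-off shows up: stopping on a missing event delays rendering, but it never shows the user a conversation with holes in it.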

Branching Conversations and Time Traveling Capabilities

Branching conversations are another invaluable tool. With parent checkpoint IDs, I can resume a conversation from any earlier point, which is incredibly useful for modifying or redirecting the discussion.

However, in complex scenarios it's easy to lose coherence if branches aren't managed correctly. That's why I always structure checkpoints carefully to avoid confusion.

- Implement branching conversations with parent checkpoint IDs.

- Use time traveling capabilities effectively.

- Manage branches to maintain coherence.
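
The checkpoint mechanics can be sketched as a small tree in which every checkpoint records its parent's ID. This is a hypothetical TypeScript model (`Checkpoint`, `CheckpointStore`, and its methods are illustrative names, not LangGraph's checkpointer API), assuming each checkpoint holds a single message: appending after an earlier checkpoint creates a branch, and walking parent links recreates the conversation at any point in time.

```typescript
// Hypothetical checkpoint record: one message plus a link to the checkpoint it follows.
// A real checkpointer stores full graph state; this keeps only what is needed
// to show how parent checkpoint IDs enable branching and time travel.
interface Checkpoint {
  id: string;
  parentId: string | null; // null marks the start of a conversation
  message: string;
}

class CheckpointStore {
  private byId: Record<string, Checkpoint> = {};
  private order: string[] = []; // creation order, used when listing branches
  private counter = 0;

  // Adds a message after parentId and returns the new checkpoint ID. Appending to a
  // checkpoint that already has children starts a new branch; nothing is overwritten.
  append(parentId: string | null, message: string): string {
    if (parentId !== null && !this.has(parentId)) {
      throw new Error(`unknown parent checkpoint ${parentId}`);
    }
    this.counter += 1;
    const id = `cp-${this.counter}`;
    this.byId[id] = { id, parentId, message };
    this.order.push(id);
    return id;
  }

  // Time travel: rebuilds the conversation as it stood at checkpointId by following
  // parent links back to the root, then reversing into chronological order.
  history(checkpointId: string): string[] {
    if (!this.has(checkpointId)) throw new Error(`unknown checkpoint ${checkpointId}`);
    const messages: string[] = [];
    let currentId: string | null = checkpointId;
    while (currentId !== null) {
      const cp: Checkpoint = this.byId[currentId];
      messages.push(cp.message);
      currentId = cp.parentId;
    }
    return messages.reverse();
  }

  // Lists the branches that fork from parentId, in creation order,
  // so the UI can present them explicitly instead of losing track of them.
  children(parentId: string): string[] {
    return this.order.filter((id) => this.byId[id].parentId === parentId);
  }

  private has(id: string): boolean {
    return Object.prototype.hasOwnProperty.call(this.byId, id);
  }
}
```

Because branches share their ancestors instead of copying them, the original thread stays intact when you fork it; listing `children()` explicitly is one way to keep those branches visible and coherent.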

Implementation Tools and Techniques: LLMs, LangChain, LangGraph

To integrate these features, I chose an LLM together with LangChain and LangGraph. Bringing them into my workflow has been a real game changer, and working through each integration step taught me how to get the most out of them while keeping an eye on cost and efficiency.

I've often had to adjust my approach to avoid performance and cost pitfalls. For example, overusing certain tools adds unnecessary load, which costs both time and money.

- Why I chose LLMs, LangChain, and LangGraph.

- Steps to integrate into the workflow.

- Lessons learned and cost-efficiency considerations.

Unique Features for AI Agent Production Readiness

Lastly, several lesser-known LangChain features stand out, such as reasoning token rendering and stream reconnection. They help answer tough questions about how to balance innovation with practical application.

These features have a direct impact on overall agent performance, making applications more robust and ready for production.

- Three lesser-known but crucial LangChain features.

- Two tough questions these features help answer.

- Impact on performance and production readiness.

By leveraging these hidden features, I've transformed how I build production-ready AI agents. First, reasoning agents with streaming thought processes have noticeably improved both efficiency and reliability. Second, reconnecting to agent streams after interruptions has been a real game changer, but watch out for synchronization headaches. Finally, branching conversations with time-travel capabilities have opened up new interaction pathways. Don't get carried away by the excitement, though: there are limits, especially around resource demands and complexity. I highly recommend you start integrating these techniques into your projects. Share your experiences, and together let's push the boundaries of what's possible with AI agents. For more insights, check out the full video on YouTube.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now brings to large organizations (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

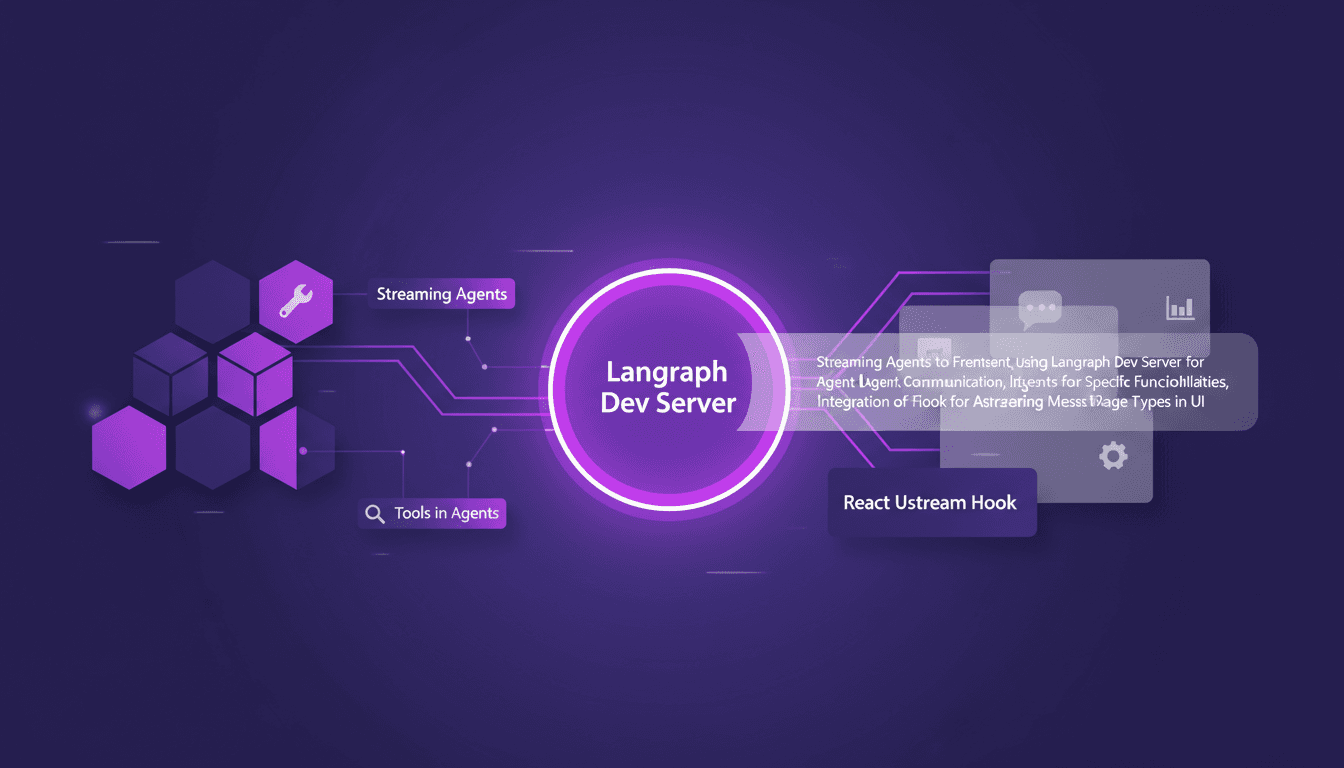

Streaming Agent Messages in React with LangChain

I remember the first time I tried to stream agent messages into a React app. It was a mess—until I got my hands on LangChain and Langraph. Let me walk you through how I set this up to create a seamless interaction between frontend and AI agents. In this tutorial, I'll show you how to connect LangChain and React using Langraph Dev Server. We'll dive into streaming agent messages, using tools like weather and web search, and rendering them effectively in the UI. You'll see how I integrate these messages using the React Ustream hook, and how I handle different message types in the UI. Ready to transform your AI interactions? Let's dive in.

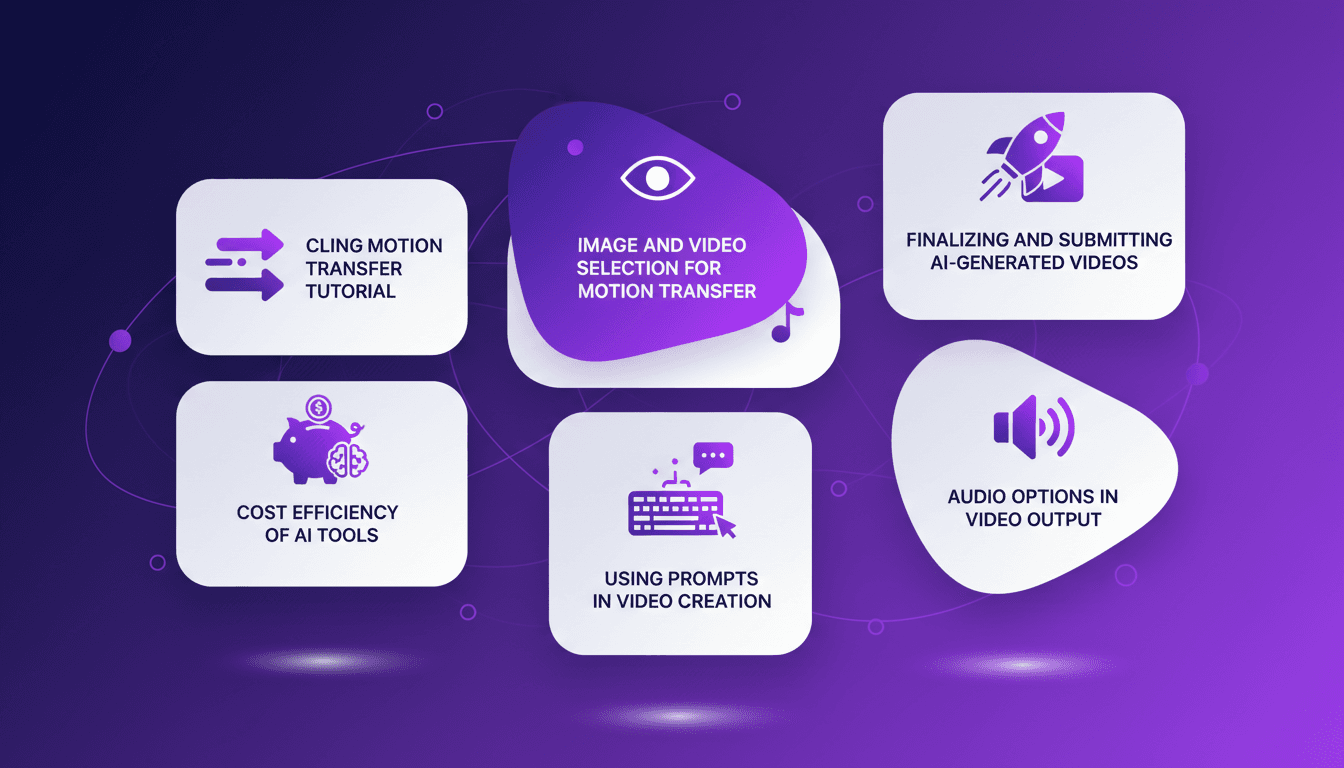

Mastering Cling Motion Transfer: A Cost-Effective Guide

I still remember the first time I tried Cling Motion Transfer. It was a game-changer. For less than a dollar, I turned a simple video into viral content. In this article, I'll walk you through how I did it, step by step. Cling Motion Transfer is an affordable AI tool that stands out in the realm of video creation, especially for platforms like TikTok. But watch out, like any tool, it has its quirks and limits. I'll guide you through image and video selection, using prompts, and how to finalize and submit your AI-generated content. Let's get started.

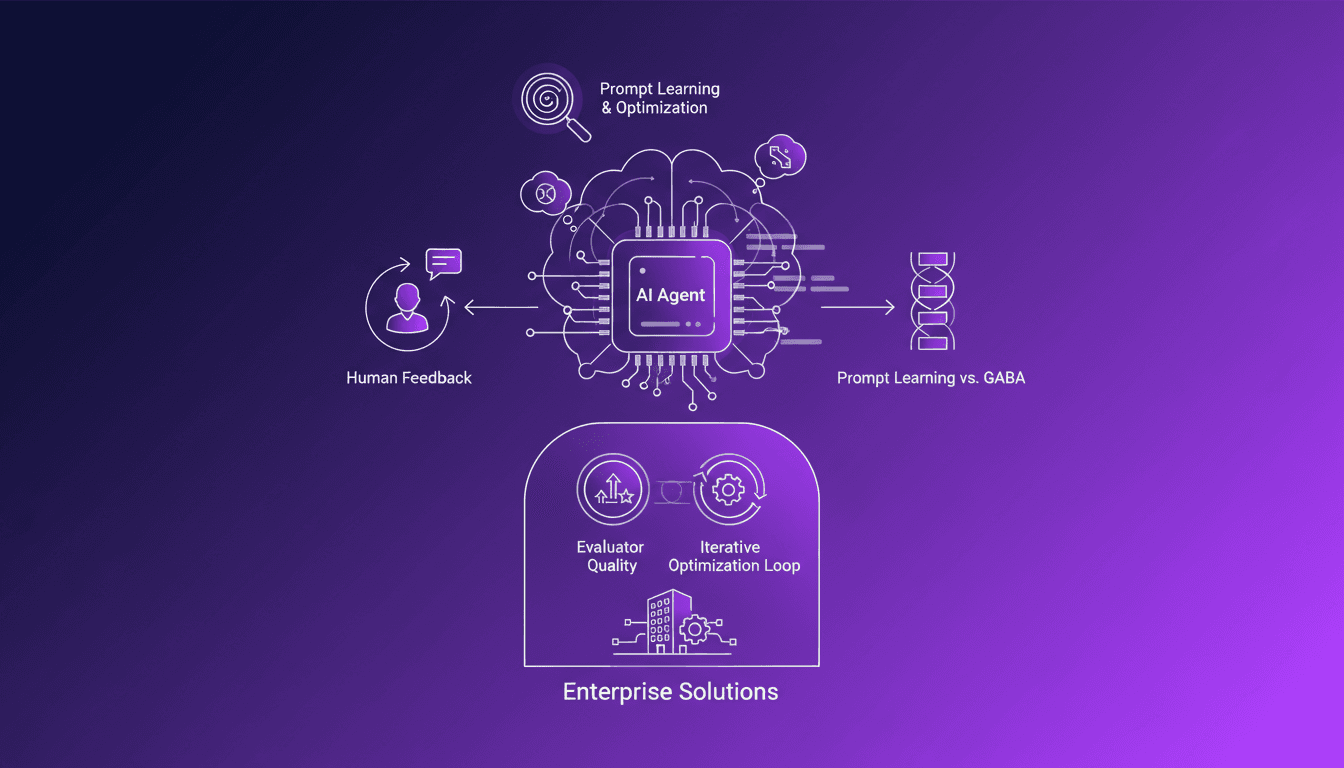

Optimize Your Prompt Learning Loops

I've spent months refining how my AI agents learn. It's not just about throwing prompts at them and hoping for the best. No, it's about building a robust learning loop that evolves with every iteration. The challenges in AI agent development are many, and optimizing these prompts is where the real work begins. In this video, I share the techniques and solutions I've uncovered, from the crucial role of human feedback to the importance of evaluator quality. It's a journey into the complex world of prompt optimization, and I show you how I cracked the code.

Building AI Agents: Challenges and Solutions

Knee-deep in the venture capital world, my inbox is a nightmare of endless emails. Seriously, it's brutal. Then I stumbled upon the LangSmith Agent Builder, and it's been a game changer. Picture a tool that automates and streamlines your daily tasks, freeing up time for what truly matters. But watch out, don't get too carried away; there are limits you need to know. For instance, beyond 100K tokens, things get tricky. Still, amidst the daily grind, this tool is a breath of fresh air. It not only boosts your productivity but also strengthens your LinkedIn presence. In short, it's a must-have for us venture capital professionals.

2025 AI Code Summit: Innovations and Insights

I walked into the 2025 AI Engineering Code Summit in New York, and the energy was palpable. This wasn't just another tech event; it was a gathering of the minds shaping the future of AI coding. With AI's role in software organizations expanding, understanding the latest tools and collaborations is crucial. DeepMind stands out with its new releases like Gemini 3 and Nano Banana Pro. These innovations aren't just gadgets; they're transforming how we approach software development. I've experimented with these tools, and I can tell you, they're redefining the way we orchestrate our projects. It's an exciting time for AI, and this summit is the focal point of these disruptions.