Reinforcement Learning for LLMs: New AI Agents

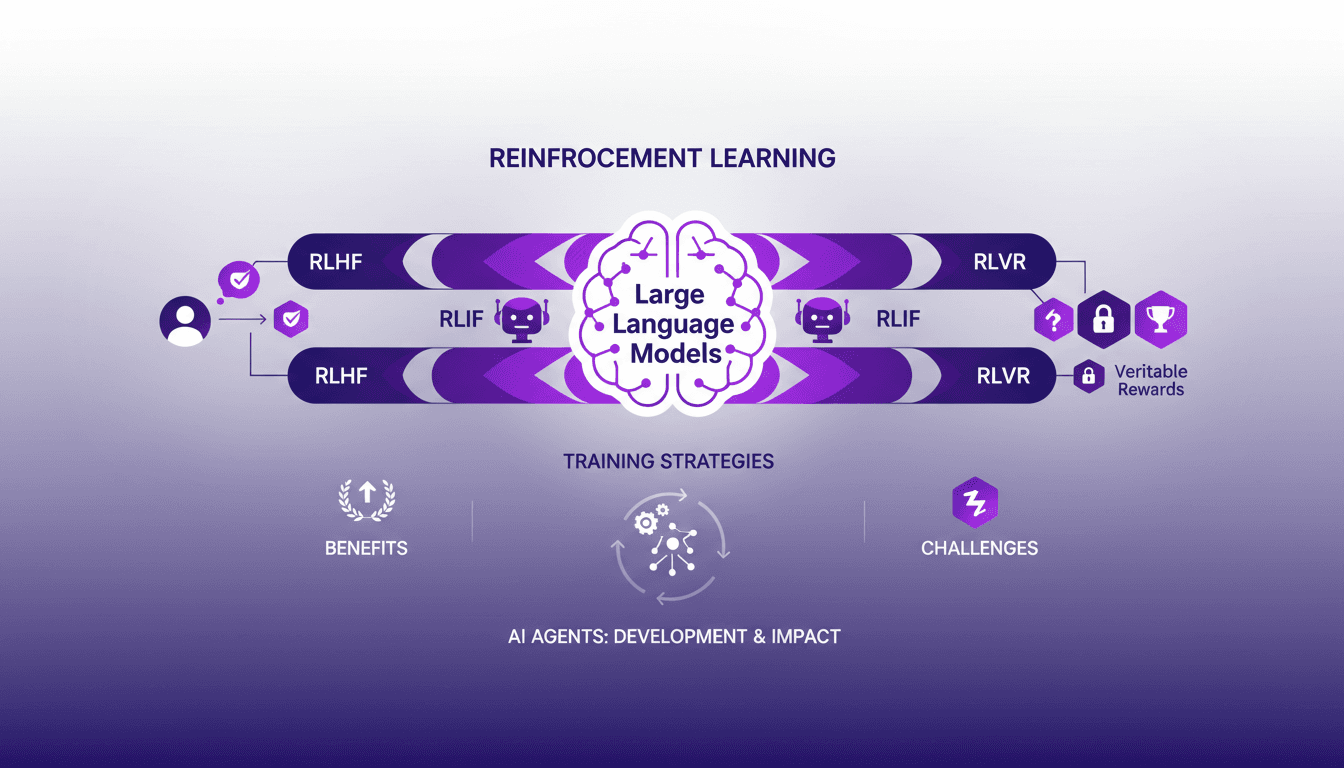

I remember the first time I integrated reinforcement learning into training large language models (LLMs). It was 2022, and the development of ChatGPT was still fresh in my mind. I connected the dots and realized that this was a real game-changer for AI agents. But let's not get carried away: there are trade-offs to consider. In this article, I'll walk you through my journey with RL in LLMs, sharing practical insights and lessons learned. We'll dive into reinforcement learning with human feedback (RLHF), AI feedback (RLIF), and verifiable rewards (RLVR), then cover training strategies for LLMs and the challenges and benefits of each approach. It's a huge step forward in how we design and train AI agents, but there are pitfalls to avoid.

Diving into Reinforcement Learning for LLMs

When we talk about reinforcement learning (RL), we're talking about giving machines the ability to learn through trial and error. It's like training a dog to do tricks: you give it a treat when it succeeds, nothing when it fails. For large language models (LLMs), it's the same, but on a massive scale. RL is crucial for LLMs because it allows us to shift from token-level evaluation (scoring each predicted token, roughly a word or word fragment) to response-level evaluation. This changes everything: instead of correcting each token, we judge the entire response, which is much more natural.
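To make the contrast concrete, here is a minimal sketch of the two kinds of signal. The token probabilities, the example response, and the `contains_answer` judge are all made up for illustration; they are not taken from any real training run.

```python
import math

def token_level_loss(token_probs):
    """Average negative log-likelihood: one term per reference token."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

def response_level_reward(response, judge):
    """A single scalar for the whole response, whatever its length."""
    return judge(response)

# Hypothetical probabilities the model assigned to each reference token.
probs = [0.9, 0.8, 0.5, 0.95]
loss = token_level_loss(probs)

# Hypothetical judge: rewards a response that contains the expected answer.
def contains_answer(response):
    return 1.0 if "Paris" in response else 0.0

reward = response_level_reward("The capital of France is Paris.", contains_answer)
print(f"token-level loss: {loss:.4f}, response-level reward: {reward}")
```

The token-level loss penalizes every deviation from one reference text, while the response-level reward only asks whether the answer as a whole is acceptable, so differently worded correct answers can all score well.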

LLMs like those developed by OpenAI use a three-step strategy: self-supervised pre-training, where the model learns to predict the next piece of text without human labels; supervised fine-tuning; and RL with human feedback. ChatGPT, developed in 2022, was a direct application of these techniques, and it was a game-changer.

It's a step forward in how we interact with AIs, making feedback much more intuitive and aligned with human expectations.

Reinforcement Learning with Human Feedback (RLHF)

With Reinforcement Learning with Human Feedback (RLHF), we directly incorporate human preferences into the LLM training process. Essentially, we train a reward model on human feedback, then use its scores as the reward so the LLM generates responses aligned with what humans consider helpful and appropriate. I've implemented RLHF in recent projects, and let me tell you, the challenges are numerous. The first is balancing the amount of human labor against model accuracy.
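The article doesn't say how the reward model is trained. One common choice, assumed here, is a pairwise preference loss: annotators pick the better of two responses, and the reward model is penalized when it scores the rejected one higher. The scores below are hypothetical.

```python
import math

def pairwise_preference_loss(score_chosen, score_rejected):
    """-log(sigmoid(chosen - rejected)): small when the chosen response scores higher."""
    margin = score_chosen - score_rejected
    # log(1 + exp(-margin)) is the same quantity as -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))

# Hypothetical reward-model scores for two responses to the same prompt,
# where a human annotator preferred the first response.
agree = pairwise_preference_loss(2.0, -1.0)     # model ranks them like the annotator
disagree = pairwise_preference_loss(-1.0, 2.0)  # model ranks them the other way

print(f"loss when model agrees: {agree:.4f}")
print(f"loss when model disagrees: {disagree:.4f}")
```

Every training pair needs a human judgment, which is where the labor cost comes from.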

Facing these challenges, I often had to juggle human labor and computational efficiency. Human feedback can be noisy and biased, but it's still crucial for adjusting models to better understand human nuances.

Watch out, RLHF can quickly become resource-intensive in terms of human labor. It's important to thoroughly assess your needs before diving in.

- RLHF requires significant human labor for annotation and feedback.

- It delivers results more aligned with human preferences despite inherent noise.

- Striking a good balance between efficiency and accuracy is essential.

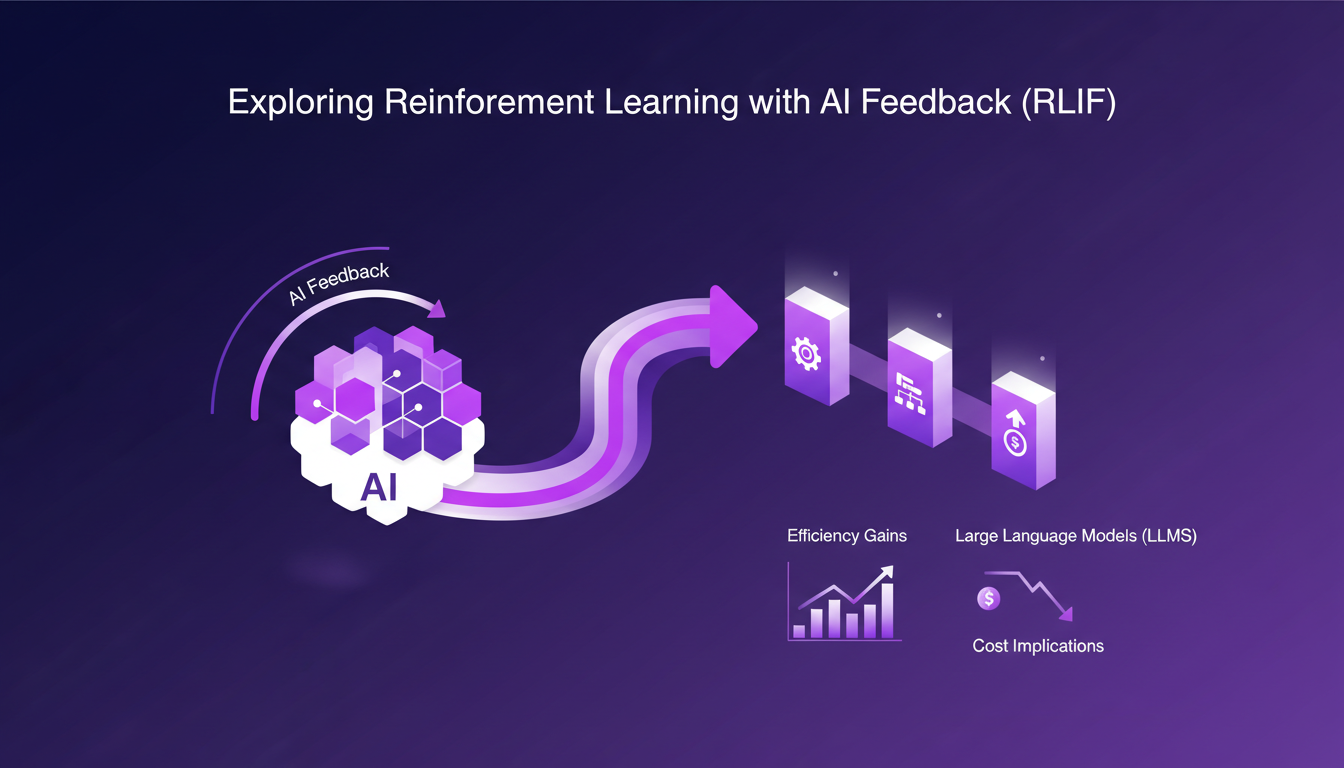

Exploring Reinforcement Learning with AI Feedback (RLIF)

Reinforcement Learning with AI Feedback (RLIF, more commonly abbreviated RLAIF) has tremendous potential for automating the feedback step. In my workflow, integrating AI feedback allowed significant efficiency gains. RLIF is particularly useful when the task can be evaluated against concrete rubrics for criteria like harmfulness or helpfulness.
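As a rough sketch of rubric-based AI feedback, the reward can be a weighted average of per-criterion scores returned by a judge model. Everything named here is an assumption for illustration: the rubric weights, the `rubric_reward` helper, and especially `stub_judge`, which stands in for a real call to a judge model.

```python
from typing import Callable, Dict

# Hypothetical rubric: criterion name -> weight. The weights are illustrative.
RUBRIC: Dict[str, float] = {"helpfulness": 0.6, "harmlessness": 0.4}

def rubric_reward(response: str,
                  judge: Callable[[str, str], float],
                  rubric: Dict[str, float] = RUBRIC) -> float:
    """Weighted average of per-criterion scores, each expected in [0, 1]."""
    total_weight = sum(rubric.values())
    weighted = sum(w * judge(response, criterion) for criterion, w in rubric.items())
    return weighted / total_weight

def stub_judge(response: str, criterion: str) -> float:
    """Stand-in for a judge model; a real setup would prompt an LLM here."""
    if criterion == "harmlessness":
        return 0.0 if "rm -rf /" in response else 1.0
    # Crude helpfulness proxy: very short answers get a lower score.
    return 1.0 if len(response.split()) >= 5 else 0.5

score = rubric_reward("Use a virtual environment to isolate your dependencies.", stub_judge)
print(f"rubric reward: {score:.2f}")
```

Keeping the judge behind a plain function signature makes it easy to swap the stub for a real model without touching the scoring logic.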

But let's be clear, RLIF has its limits. It's less costly than RLHF, but can sometimes lack precision. For example, in some cases, AI might miss subtle nuances that only a human could capture. This is where trade-offs become interesting. You gain in scalability and cost, but at the expense of some precision.

- RLIF is more scalable than RLHF but can lack nuances.

- It's ideal for tasks with well-defined evaluation criteria.

- Be prepared for bias: the AI providing the feedback can pass its own biases on to the model being trained.

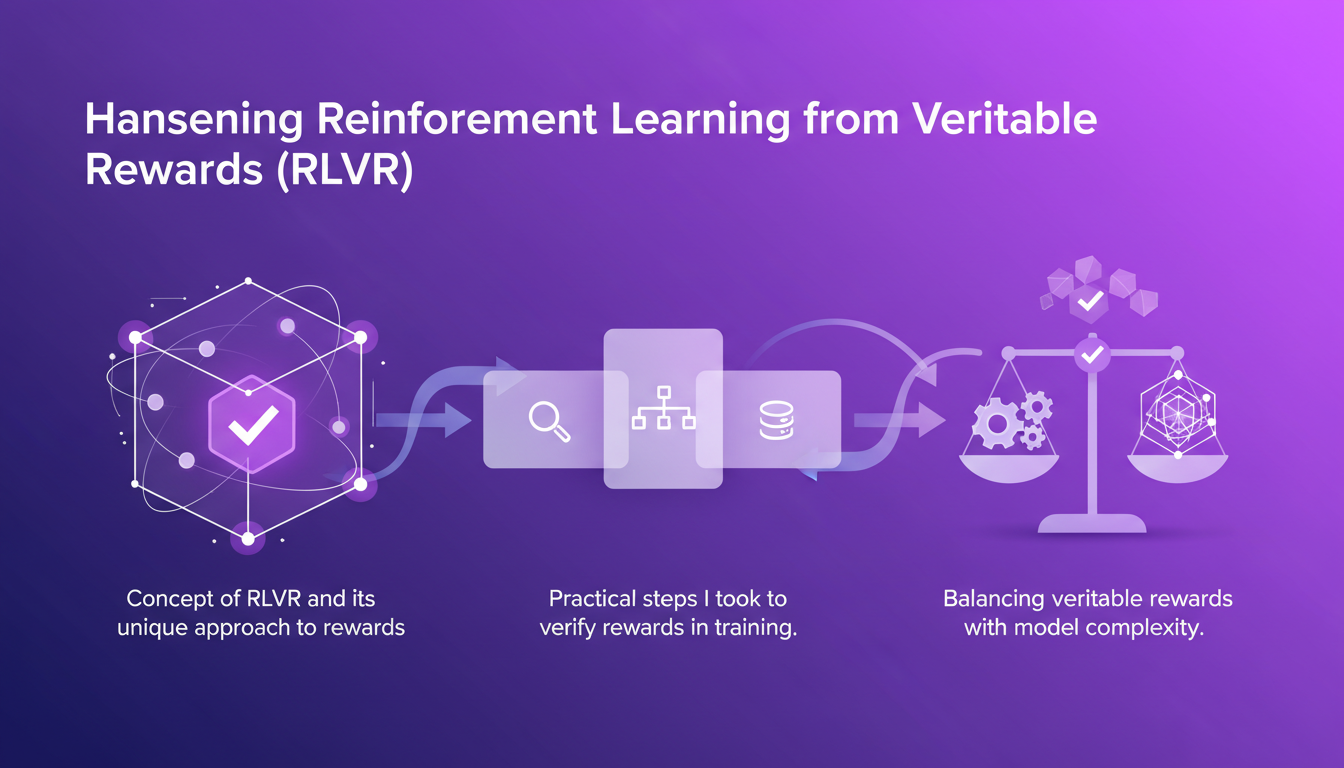

Harnessing Reinforcement Learning from Verifiable Rewards (RLVR)

The concept of Reinforcement Learning from Verifiable Rewards (RLVR) is fascinating because the reward comes from a check against concrete data rather than from a human or AI opinion. I've personally worked on verifying rewards during training, and while it sounds simple, the challenges are numerous. Ensuring the accuracy and relevance of rewards is crucial, especially as models become more complex.

In this framework, using code to evaluate the correctness of outcomes is a common practice. However, it's important to ensure that rewards are relevant at every stage, or you risk biasing the entire training process.
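A minimal sketch of what such a code-based check might look like for a question with a known numeric answer. The extraction rule (take the last number in the response) and the function names are assumptions chosen for brevity, not a description of any particular training setup.

```python
import re

def extract_final_answer(response: str):
    """Return the last number in the response, or None if there is none."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", response)
    return float(numbers[-1]) if numbers else None

def verifiable_reward(response: str, expected: float, tol: float = 1e-6) -> float:
    """1.0 if the extracted answer matches the known-correct value, else 0.0."""
    answer = extract_final_answer(response)
    if answer is None:
        return 0.0
    return 1.0 if abs(answer - expected) <= tol else 0.0

# Hypothetical model responses to "What is 12 * 7?"
print(verifiable_reward("12 * 7 = 84", expected=84))
print(verifiable_reward("I think it is 85.", expected=84))
print(verifiable_reward("No idea, sorry.", expected=84))
```

Note how much rides on the extraction rule: a response that ends with an unrelated number would be scored on that number, which is one way a poorly designed check can bias training.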

Pro tip: Make sure the rewards used are always in line with your model's final goals.

- RLVR allows verification of outcomes using concrete data and code.

- It's crucial to ensure relevance of rewards at every training stage.

- Models can become very complex, making the task more challenging.

Challenges and Future of AI Agents

Let's talk challenges. Reinforcement learning, while powerful, is not without flaws. Challenges like computational cost and sparse rewards are still present. I've often had to readjust my strategies to overcome these obstacles, particularly when deploying custom AI agents.
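Sparse rewards and compute cost are linked, and a bit of arithmetic shows why. Assuming a binary reward and independent samples (both simplifications), the chance that at least one of k sampled responses earns a reward is 1 - (1 - p)^k, where p is the per-response success rate. The success rate used below is made up.

```python
def prob_any_success(p_success: float, num_samples: int) -> float:
    """Probability that at least one of num_samples independent tries succeeds."""
    return 1.0 - (1.0 - p_success) ** num_samples

# Hypothetical task where the model succeeds on 1% of attempts.
for k in (1, 8, 64):
    chance = prob_any_success(0.01, k)
    print(f"{k} samples per prompt -> {chance:.3f} chance of any reward signal")
```

When success is rare, most prompts yield no learning signal unless you sample many responses, and every extra sample costs generation compute.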

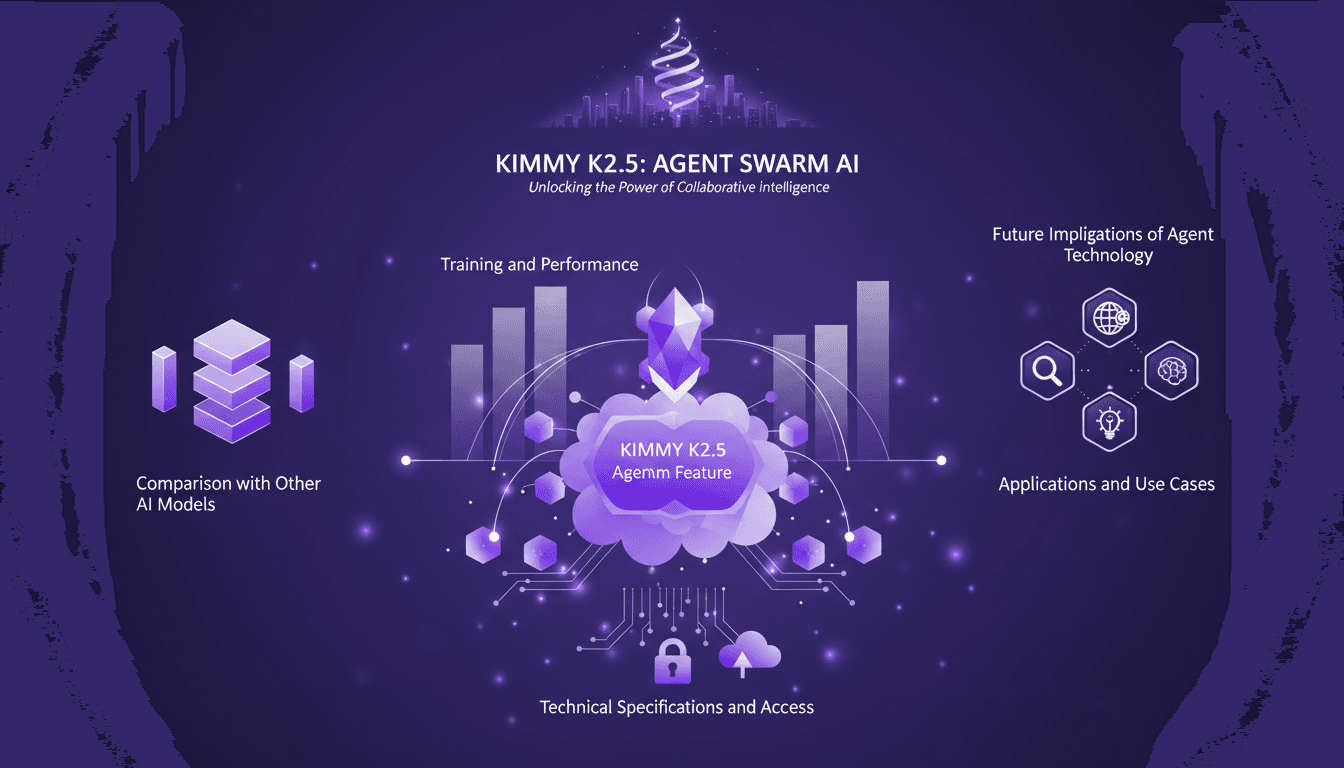

The future of AI agents is promising, with models like Kimmy K2.5 and its agent swarm feature. But to deploy these agents effectively, it's imperative to understand the strategic implications of each RL approach we choose.

In conclusion, reinforcement learning continues to evolve and transform LLM training. Whether through RLHF, RLIF, or RLVR, each approach has its pros and cons. But one thing's certain, the right choice of tool and method can make all the difference.

So, what did I learn by diving into reinforcement learning for training LLMs? First, RLHF, or reinforcement learning with human feedback, highlighted the value of human context, but watch out, it can quickly eat up time and resources. Then, RLIF leverages AI feedback to speed things up, but you need to be cautious about the quality of automated feedback. Finally, RLVR really showcased the power of verifiable rewards, but the rewards have to stay relevant at every stage or they bias the training.

I look ahead with measured enthusiasm: reinforcement learning is truly a game changer for LLMs, but let's not ignore its technical and ethical limits.

Ready to dive deeper? I urge you to experiment with RL in your LLM projects and share your insights with the community. Check out the full video for a deeper dive: Video link. Let's push the boundaries of AI together.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

Kimmy K2.5: Mastering the Agent Swarm

I remember the first time I dove into the Kimmy K2.5 model. It was like stepping into a new AI era, where the Agent Swarm feature promised to revolutionize parallel task handling. I've spent countless hours tweaking, testing, and pushing this model to its limits. Let me tell you, if you know how to leverage it, it's a game-changer. With 15 trillion tokens and the ability to manage 500 coordinated steps, it's an undisputed champion. But watch out, there are pitfalls. Let me walk you through harnessing this powerful tool, its applications, and future implications.

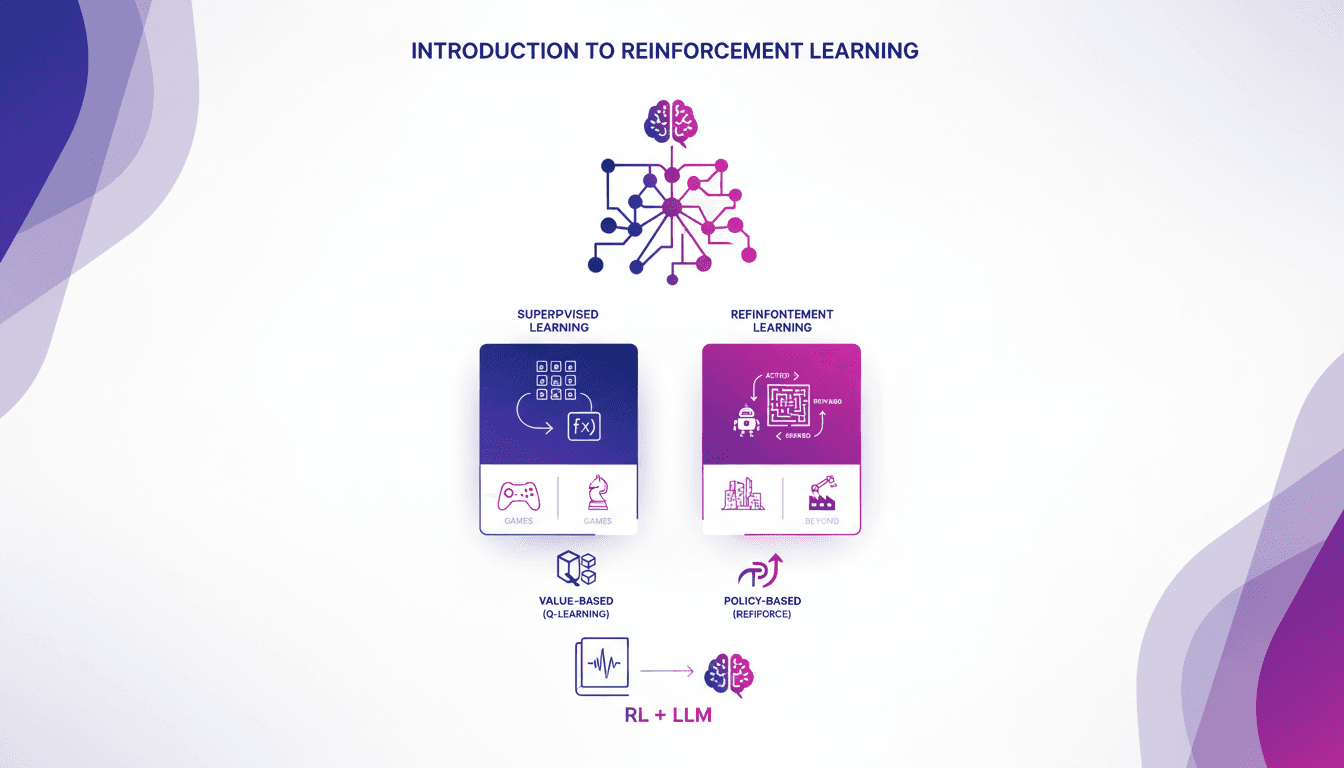

Practical Intro to Reinforcement Learning

I remember the first time I stumbled upon reinforcement learning. It felt like unlocking a new level in a game, where algorithms learn by trial and error, just like us. Unlike supervised learning, RL doesn't rely on labeled datasets. It learns from the consequences of its actions. First, I'll compare RL to supervised learning, then dive into its real-world applications, especially in games. I'll walk you through value-based methods like Q-learning and policy-based methods, showing how these approaches are transforming massive language models. In the end, you'll see how three key ways of using RL to fine-tune large language models deliver impressive results.

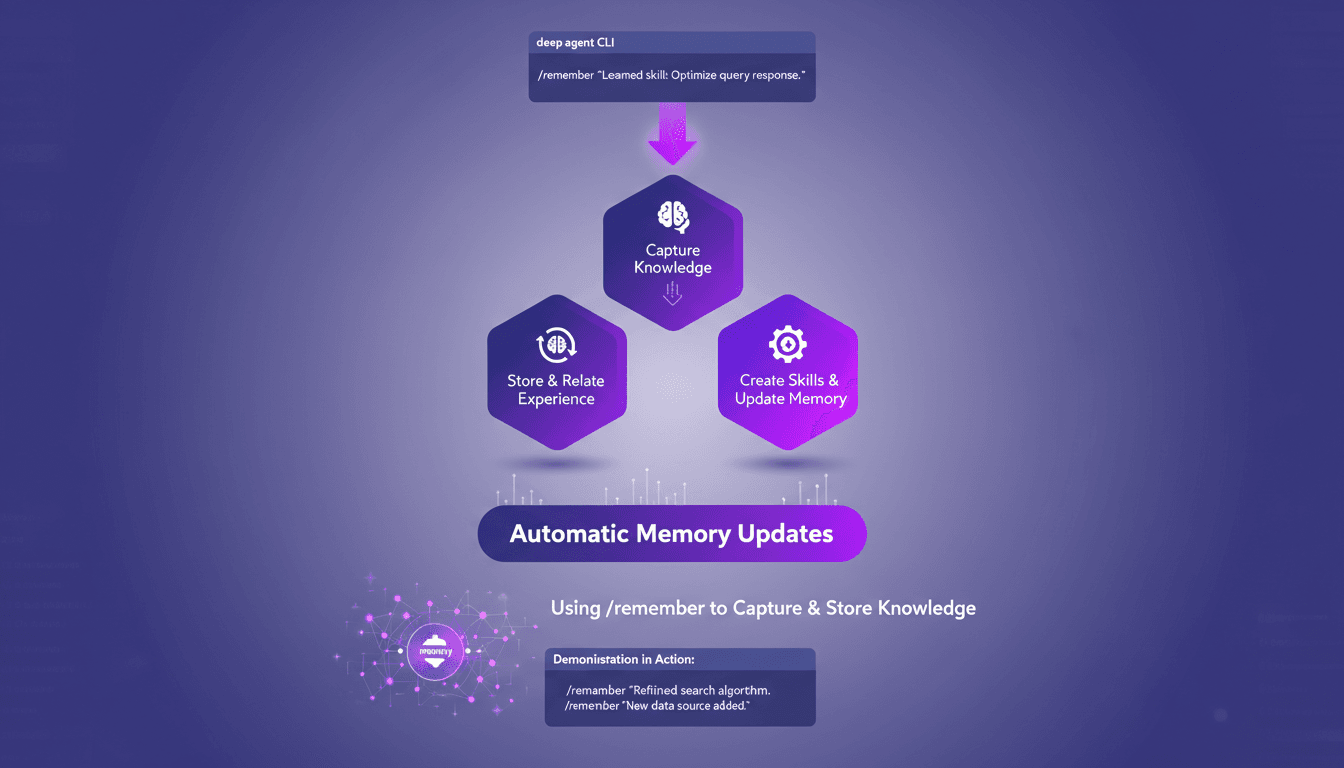

Mastering /remember: Deep Agent Memory in Action

I've spent countless hours tweaking deep agent setups, and let me tell you, the /remember command is a game changer. It's like giving your agent a brain that actually retains useful information. Let me show you how I use it to streamline processes and boost efficiency. With the /remember command in the deep agent CLI, you can teach agents to learn from experience. Let's dive into how this works and why it's a must-have in your toolkit.

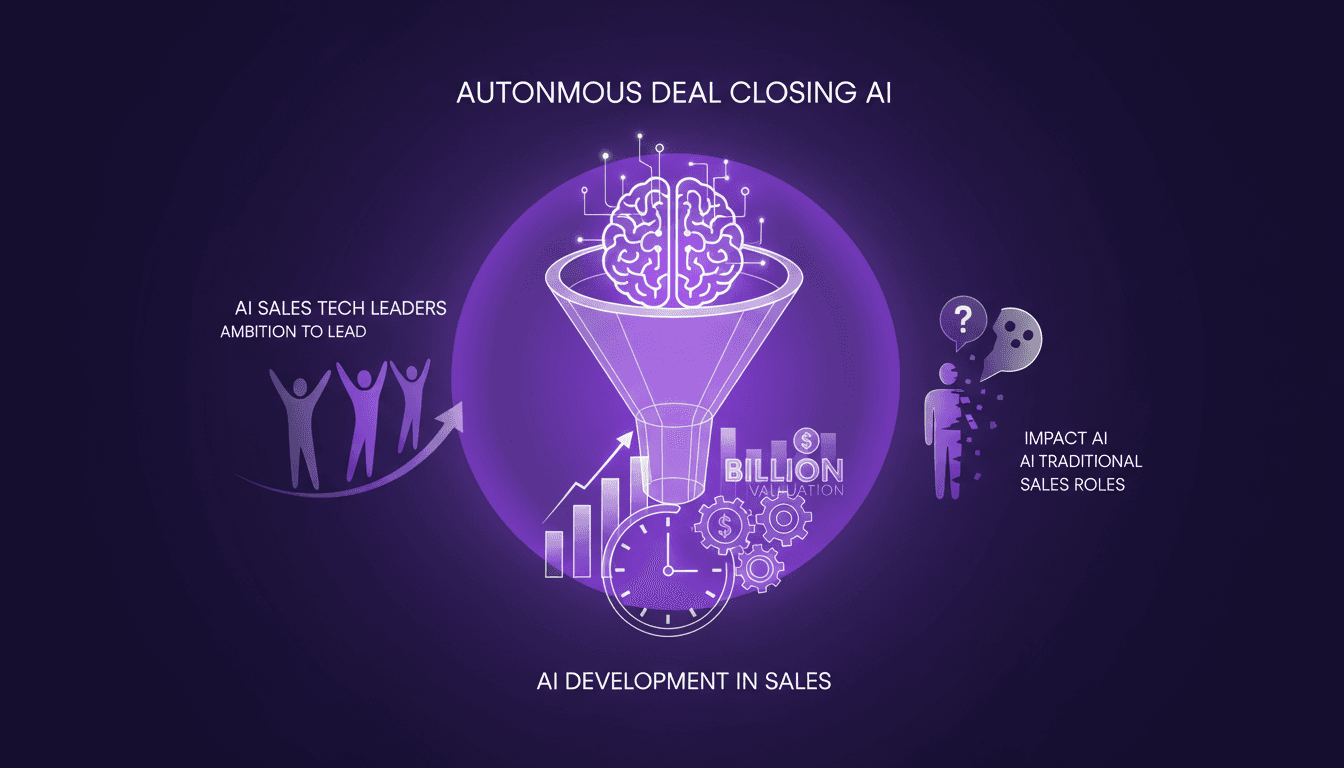

AI in Sales: Closing Deals Autonomously

I've been in sales long enough to see the hype come and go. But when I first connected AI to my sales pipeline, everything changed. It wasn't just another gadget; I witnessed AI autonomously closing deals, and that was my 'aha' moment. AI is now managing entire sales processes, from lead generation to deal closing, without a human in sight. With an ambitious team and rapid development, we're looking at a future where AI redefines the sales role. In just 12 months, we might see a company valued in the billions because of this tech. Let's dive into what's making this possible.

Mastering Countdown Sequences for Events

I've been orchestrating events for years, and if there's one thing I've learned, it's the power of a well-executed countdown. Whether it's launching a product or kicking off a live event, the countdown sequence can make or break the experience. In this tutorial, I'll show you how I set up sequences that not only sync perfectly but also add a layer of excitement and anticipation. First tip: never underestimate the symbolism of the countdown, that build-up of tension before the big moment. We start by synchronizing our timings, ensuring every element is in place before the grand launch. Ready to turn the ordinary into the extraordinary?