Agent Observability: Evaluate and Optimize

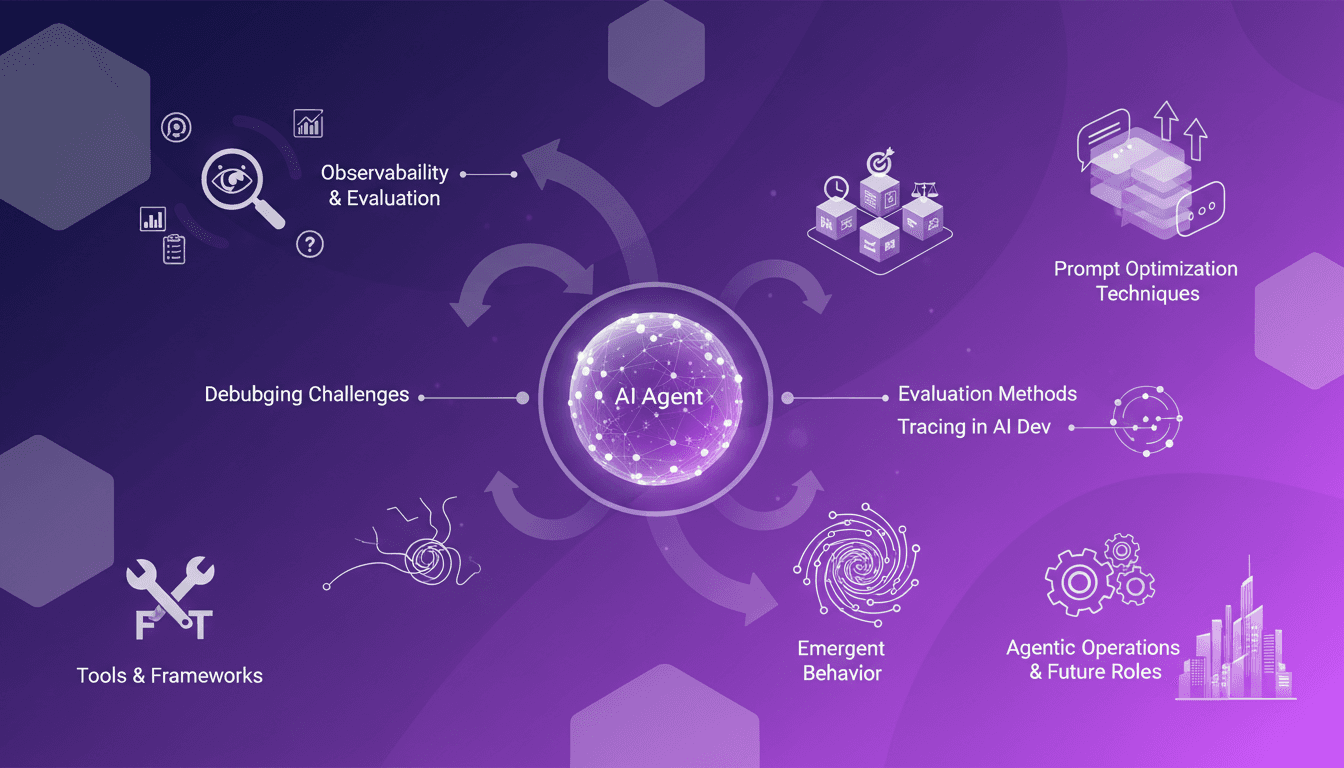

I remember the first time I got burned by non-deterministic errors in my AI agents. Debugging felt like chasing shadows: you think you've covered every case, and then an unpredictable error pops up. Discovering agent observability changed that. In this article, I'll walk you through how it made my debugging and evaluation processes more efficient and reliable. We'll cover the challenges of debugging AI agents, methods for evaluating them, and the role of tracing in AI development. I'll also share tips on optimizing prompts and understanding emergent behavior, which matter more and more as agentic operations take on larger roles. Along the way, we'll look at the tools and frameworks that support this workflow.

Understanding Agent Observability

Agent observability is like having a dashboard for your AI, but it goes beyond simple monitoring: it records what the agent actually did at each step. That makes it a critical tool for pinpointing non-deterministic errors that appear unexpectedly. Why does this matter? Without a critical mass of data points to review, you're essentially flying blind in AI development.

Before I had observability, I often found myself groping in the dark, trying to understand why my agents weren't behaving as expected. Non-deterministic errors are like ghosts in the machine: they appear and disappear without warning. That is why you need to gather enough data to study them thoroughly.
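
To make the "critical mass" point concrete, here is a minimal Python sketch that reruns the same agent task several times and measures how often it fails. The `flaky_agent_task` function is a hypothetical stand-in for a real agent call that succeeds about 80% of the time. In practice you would replace it with your own agent call plus a pass/fail check.

```python
import random
from typing import Callable


def estimate_failure_rate(run: Callable[[], bool], n_runs: int) -> float:
    """Run the same agent task n_runs times and return the fraction of failed runs."""
    if n_runs <= 0:
        raise ValueError("n_runs must be positive")
    failures = sum(1 for _ in range(n_runs) if not run())
    return failures / n_runs


# Hypothetical stand-in for a non-deterministic agent call (succeeds ~80% of the time).
# A fixed seed keeps this demo reproducible.
_rng = random.Random(42)


def flaky_agent_task() -> bool:
    return _rng.random() < 0.8


for n in (5, 50, 500):
    rate = estimate_failure_rate(flaky_agent_task, n)
    print(f"{n:>4} runs -> estimated failure rate {rate:.2f}")
```

With only 5 runs, the estimate can only move in steps of 0.2, so a single unlucky run shifts it a lot. Larger samples give a much more stable picture of how often the error really occurs.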

Debugging AI Agents: Overcoming Challenges

Debugging AI agents is like solving a puzzle whose pieces keep changing shape. The challenges are numerous: reasoning errors, misinterpreted context, and more. This is where observability comes in. It lets you trace every step from input to output, including tool calls.

But beware: tracing without a clear plan can be a time sink. I've learned the hard way that knowing where to look is key to identifying root causes. At times I was tempted to trace every detail, but it quickly became overwhelming.

- Observability helps you understand reasoning errors.

- It helps identify problematic execution steps.

- Beware of unnecessary tracing, or you'll drown in data.
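
Here is a minimal Python sketch of what tracing each step can look like. Every step you choose to instrument becomes a span that records its name, inputs, outputs, any error, its duration, and its parent step. The `Tracer` and `Span` classes are hypothetical illustrations, not a specific library's API. In production you would more likely rely on an established tracing tool such as OpenTelemetry or your agent framework's built-in tracing. Because you instrument steps explicitly, you also decide what not to trace, which helps you avoid drowning in data.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator, Optional


@dataclass
class Span:
    """One recorded agent step (an LLM call, a tool call, a whole turn, ...)."""
    name: str
    inputs: dict[str, Any]
    parent_id: Optional[str]
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    outputs: Any = None
    error: Optional[str] = None
    duration_s: float = 0.0


class Tracer:
    """Hypothetical minimal tracer: collects spans and links nested steps to their parent."""

    def __init__(self) -> None:
        self.spans: list[Span] = []
        self._stack: list[str] = []  # ids of the spans currently open

    @contextmanager
    def span(self, name: str, **inputs: Any) -> Iterator[Span]:
        parent_id = self._stack[-1] if self._stack else None
        s = Span(name=name, inputs=inputs, parent_id=parent_id)
        self.spans.append(s)
        self._stack.append(s.span_id)
        start = time.perf_counter()
        try:
            yield s
        except Exception as exc:
            s.error = repr(exc)  # keep the failure in the trace, then re-raise it
            raise
        finally:
            s.duration_s = time.perf_counter() - start
            self._stack.pop()


# Usage: trace one agent turn that makes a single (hypothetical) tool call.
tracer = Tracer()
with tracer.span("agent_turn", user_input="What's the weather in Paris?") as turn:
    with tracer.span("tool_call", tool="get_weather", city="Paris") as call:
        call.outputs = {"temp_c": 18}  # placeholder tool result
    turn.outputs = "It is 18°C in Paris."

for s in tracer.spans:
    print(s.name, "parent:", s.parent_id, "inputs:", s.inputs, "outputs:", s.outputs)
```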

Effective Agent Evaluation Methods

Evaluating an agent isn't just about checking whether it works. It's about testing its reasoning at different levels. Methods range from single-step tests to full-turn evaluations to multi-turn evaluations. Tracing plays a key role in all of them, since the traces supply the step-by-step data the evaluations inspect.

I've integrated these methods into my daily operations. For instance, I use real-time evaluations to adjust my prompts and optimize agent performance.

- Single-step tests validate each step individually.

- Full-turn evaluations test a complete agent turn, from input to final output.

- Multi-turn evaluations test the agent across a whole conversation.

- Prompt optimization is crucial for improving outcomes.
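
The three evaluation levels can be sketched in Python against a recorded trace. The trace format below is a hypothetical assumption chosen for illustration: a list of step dictionaries with `type`, `tool`, `args`, and `output` keys. The checks are deliberately simple equality and substring tests, and real evaluations often use richer scorers.

```python
from typing import Any

Step = dict[str, Any]

# Hypothetical recorded trace of one agent turn, in execution order.
trace: list[Step] = [
    {"type": "llm", "output": "I should look up the weather."},
    {"type": "tool_call", "tool": "get_weather", "args": {"city": "Paris"}},
    {"type": "final", "output": "It is 18°C in Paris."},
]


def eval_single_step(step: Step, expected_tool: str, expected_args: dict[str, Any]) -> bool:
    """Single-step test: did this one step call the expected tool with the expected arguments?"""
    return (
        step.get("type") == "tool_call"
        and step.get("tool") == expected_tool
        and step.get("args") == expected_args
    )


def eval_full_turn(turn: list[Step], must_contain: str) -> bool:
    """Full-turn evaluation: does the turn's final answer contain the expected fact?"""
    finals = [s for s in turn if s.get("type") == "final"]
    return bool(finals) and must_contain in finals[-1].get("output", "")


def eval_multi_turn(turns: list[list[Step]], expectations: list[str]) -> bool:
    """Multi-turn evaluation (simplified): every turn of the conversation must pass its own check."""
    if len(turns) != len(expectations):
        return False
    return all(eval_full_turn(t, e) for t, e in zip(turns, expectations))


print(eval_single_step(trace[1], "get_weather", {"city": "Paris"}))
print(eval_full_turn(trace, "18°C"))
```

The levels differ in granularity. A single-step test can fail on a wrong tool argument even when the final answer happens to be right, and that kind of issue is easy to miss with a full-turn check alone.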

Prompt Optimization Techniques

Prompt optimization is an art in itself. You can tweak structure, conciseness, or specificity. But beware: over-optimizing can make your AI rigid. I've often seen overly complex prompts hinder an agent's flexibility.

For example, by simplifying prompt structures, I reduced response times and improved agent efficiency. However, don't overdo the simplicity, or you'll lose precision.

- Structure: Tailor the form to guide the agent effectively.

- Conciseness: Be direct to avoid misunderstandings.

- Specificity: Be precise about the task and the expected result.
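
One way to make prompt optimization measurable is to score each candidate prompt against the same small evaluation set and keep the best one. The Python sketch below uses a hypothetical `fake_agent(prompt, question)` callable as a stand-in for your real agent. The candidates can differ in structure, conciseness, or specificity. Because agents are non-deterministic, in practice you would run each case several times rather than once.

```python
from typing import Callable

Agent = Callable[[str, str], str]  # (prompt, question) -> answer
EvalCase = tuple[str, str]  # (question, substring the answer must contain)


def score_prompt(agent: Agent, prompt: str, cases: list[EvalCase]) -> float:
    """Fraction of eval cases whose answer contains the expected substring."""
    if not cases:
        raise ValueError("need at least one eval case")
    passed = sum(1 for question, expected in cases if expected in agent(prompt, question))
    return passed / len(cases)


def best_prompt(agent: Agent, candidates: list[str], cases: list[EvalCase]) -> tuple[str, float]:
    """Score every candidate prompt and return the best one (the first wins on ties)."""
    scored = [(p, score_prompt(agent, p, cases)) for p in candidates]
    return max(scored, key=lambda pair: pair[1])


def fake_agent(prompt: str, question: str) -> str:
    # Hypothetical stand-in: pretends that asking for units makes the agent include them.
    answer = "18"
    return answer + "°C" if "include units" in prompt else answer


cases = [("What is the temperature in Paris?", "18°C")]
candidates = [
    "Answer the question.",
    "Answer in one short sentence and include units.",
]
print(best_prompt(fake_agent, candidates, cases))
```

Keeping the evaluation set fixed is what makes the comparison fair. A broad enough set can also help catch prompts that have become too rigid, because those prompts start failing cases they used to pass.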

Emergent Behavior and Future Roles

Emergent behavior in AI agents is something of a Holy Grail for AI innovation. It refers to unexpected capabilities that appear when agents interact with their environment. But this phenomenon also poses challenges for control and predictability.

Thanks to observability, I've been able to better understand these emergent behaviors, anticipating interactions I hadn't considered. However, there's always a balance to be struck between innovation and control. Too much freedom can lead to unpredictable results.

- Agentic operations allow systems to learn and evolve.

- Observability helps anticipate and understand emergent behaviors.

- Balancing innovation and control is crucial to avoid drift.
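
As a simple illustration of how traces can surface unexpected behavior, the Python sketch below compares the sequences of tool calls in new traces against a baseline of previously reviewed traces. It then counts the sequences that never appeared before. The trace format and tool names are hypothetical. This is only one heuristic for flagging behavior worth a closer look, not a complete method for detecting emergent behavior.

```python
from collections import Counter
from typing import Any

Trace = list[dict[str, Any]]


def tool_sequence(trace: Trace) -> tuple[str, ...]:
    """Ordered tuple of tool names called in one trace."""
    return tuple(step["tool"] for step in trace if step.get("type") == "tool_call")


def novel_sequences(baseline: list[Trace], new: list[Trace]) -> Counter:
    """Count tool-call sequences in `new` that never occurred in the reviewed `baseline`."""
    seen = {tool_sequence(t) for t in baseline}
    return Counter(seq for seq in map(tool_sequence, new) if seq not in seen)


def call(tool: str) -> dict[str, Any]:
    # Hypothetical helper that builds a tool-call step in the assumed trace format.
    return {"type": "tool_call", "tool": tool}


baseline = [[call("search"), call("summarize")]]
new = [
    [call("search"), call("summarize")],
    [call("search"), call("send_email")],  # an interaction nobody planned for
]
print(novel_sequences(baseline, new))
```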

Integrating agent observability isn't just buzz; it's a real game changer in AI development. First, tracing agent actions made debugging much easier, which was a huge relief given the usual challenges. Then, effective evaluation methods let me improve agent performance in a tangible way. But watch out: without a critical mass of data points (around 5), your analyses might fall flat. It can seem intense, but the video gets to the concrete details in about 10 minutes. Want real impact on your AI projects? Start implementing these techniques today. To dig deeper, I highly recommend watching the original video.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

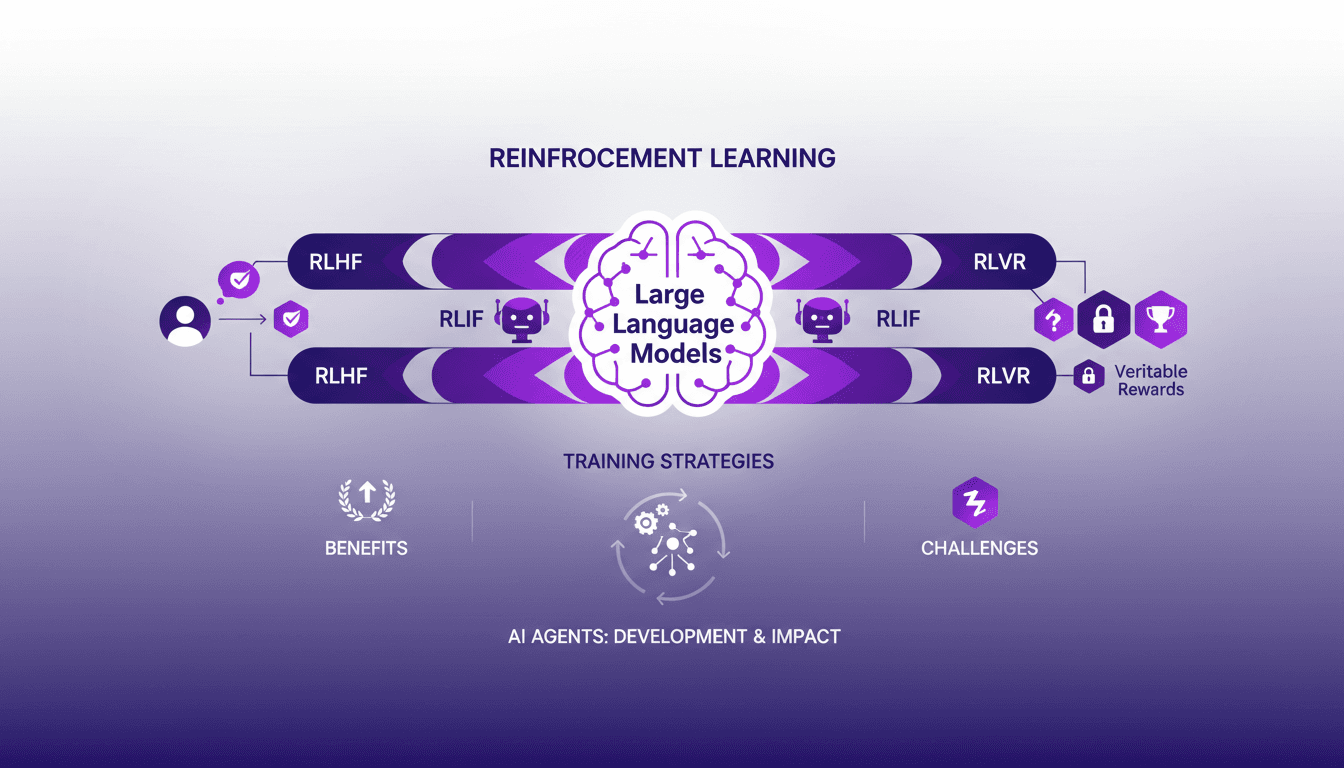

Reinforcement Learning for LLMs: New AI Agents

I remember the first time I integrated reinforcement learning into training large language models (LLMs). It was 2022, and with the development of ChatGPT fresh in my mind, I realized this was a real game-changer for AI agents. But be careful—there are trade-offs to consider. Reinforcement learning is revolutionizing how we train LLMs, offering new ways to enhance AI agents. In this article, I'll take you through my journey with RL in LLMs, sharing practical insights and lessons learned. I'm diving into reinforcement learning with human feedback (RLHF), AI feedback (RLIF), and verifiable rewards (RLVR). Get ready to explore how these approaches are transforming the way we design and train AI agents.

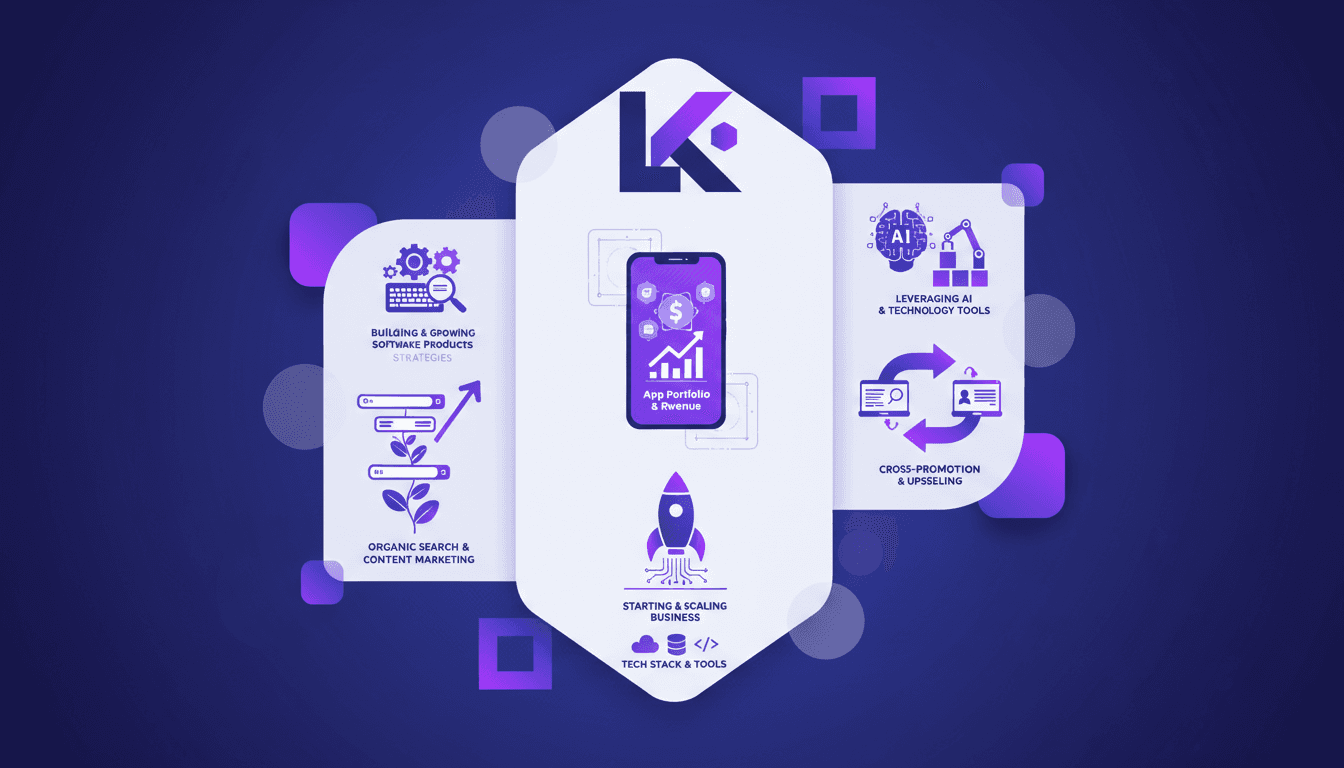

Making $150K/Month: Key App Strategies

I remember vividly when I first hit that $150K/month milestone with my apps. It wasn't magic; it was a mix of strategic moves, tech stack mastery, and a relentless focus on organic growth. This article dives into how Katie Keith orchestrates her 19 apps to generate $1.8 million annually. We discuss her strategies for building and growing multiple software products, the importance of organic search and content marketing, and how she leverages AI and technology tools for product development.

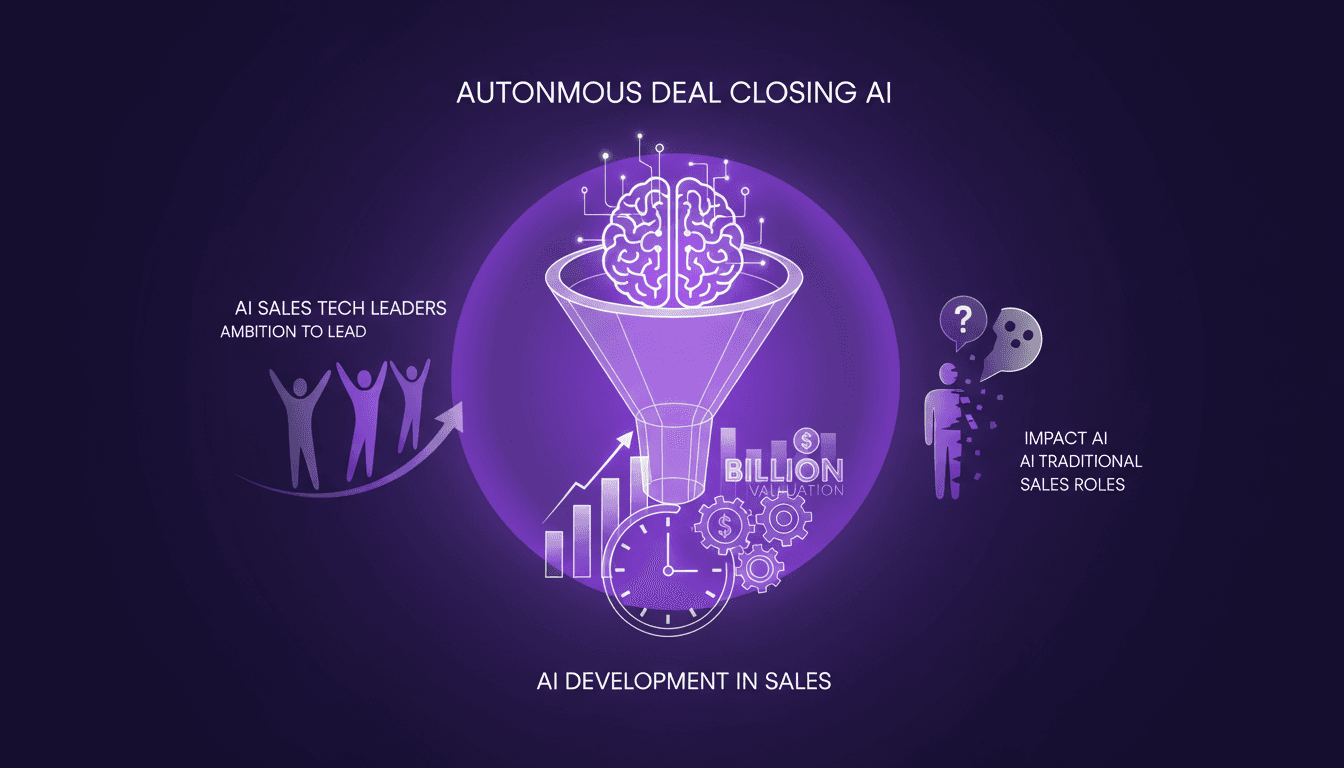

AI in Sales: Closing Deals Autonomously

I've been in sales long enough to see the hype come and go. But when I first connected AI to my sales pipeline, everything changed. It wasn't just another gadget; I witnessed AI autonomously closing deals, and that was my 'aha' moment. AI is now managing entire sales processes, from lead generation to deal closing, without a human in sight. With an ambitious team and rapid development, we're looking at a future where AI redefines the sales role. In just 12 months, we might see a company valued in the billions because of this tech. Let's dive into what's making this possible.

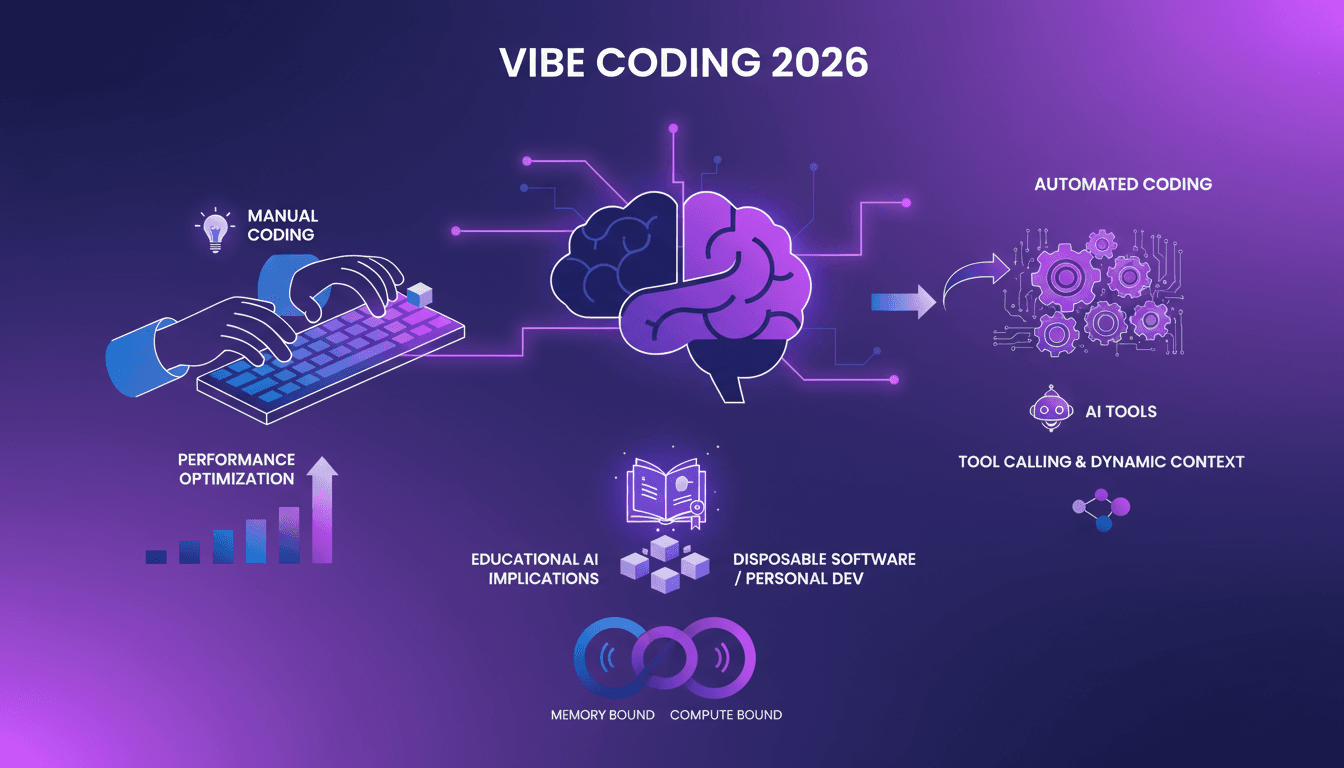

Vibe Coding 2026: AI Tools for Optimization

I remember the first time I heard about vibe coding. It was 2026, and the coding landscape was shifting at breakneck speed. AI tools were no longer just assistants; they were turning into indispensable colleagues. But beware, with this power comes increased complexity. Navigating these waters wasn't just about understanding the tools, but mastering them. In this article, I share my field experiences and insights from industry leaders. We'll dive into the real-world implications of vibe coding, the technical trade-offs, and how to optimize your workflow with these tools. Vibe coding in 2026 is a world where manual coding becomes the exception, and optimizing with AI is the norm.

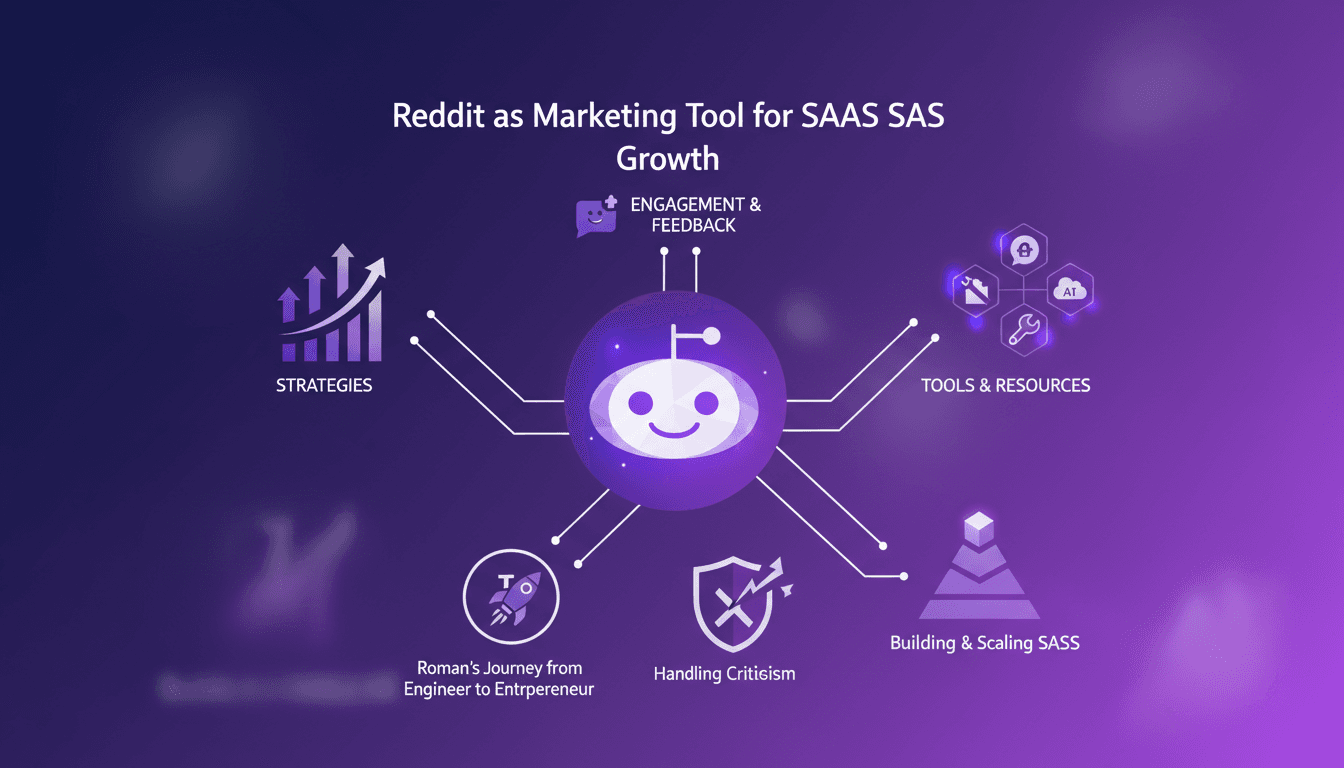

How Reddit Grew My SaaS to $34K/Month

I transitioned from being a mechanical engineer to running a SaaS pulling in $34K a month. My secret weapon? Reddit. I remember those late nights diving into threads, asking questions, and engaging with a community that gave me raw, unfiltered feedback. With Reddit, I tested ideas, refined my product, and turned criticism into growth levers. In this article, I share my strategies for leveraging Reddit as a powerful marketing tool to boost your SaaS. But beware, navigating between positive comments and trolls requires skill. My journey proves that even without prior marketing experience, success is possible through the power of a well-leveraged community.