Gro Imagine API: How to Use It Effectively

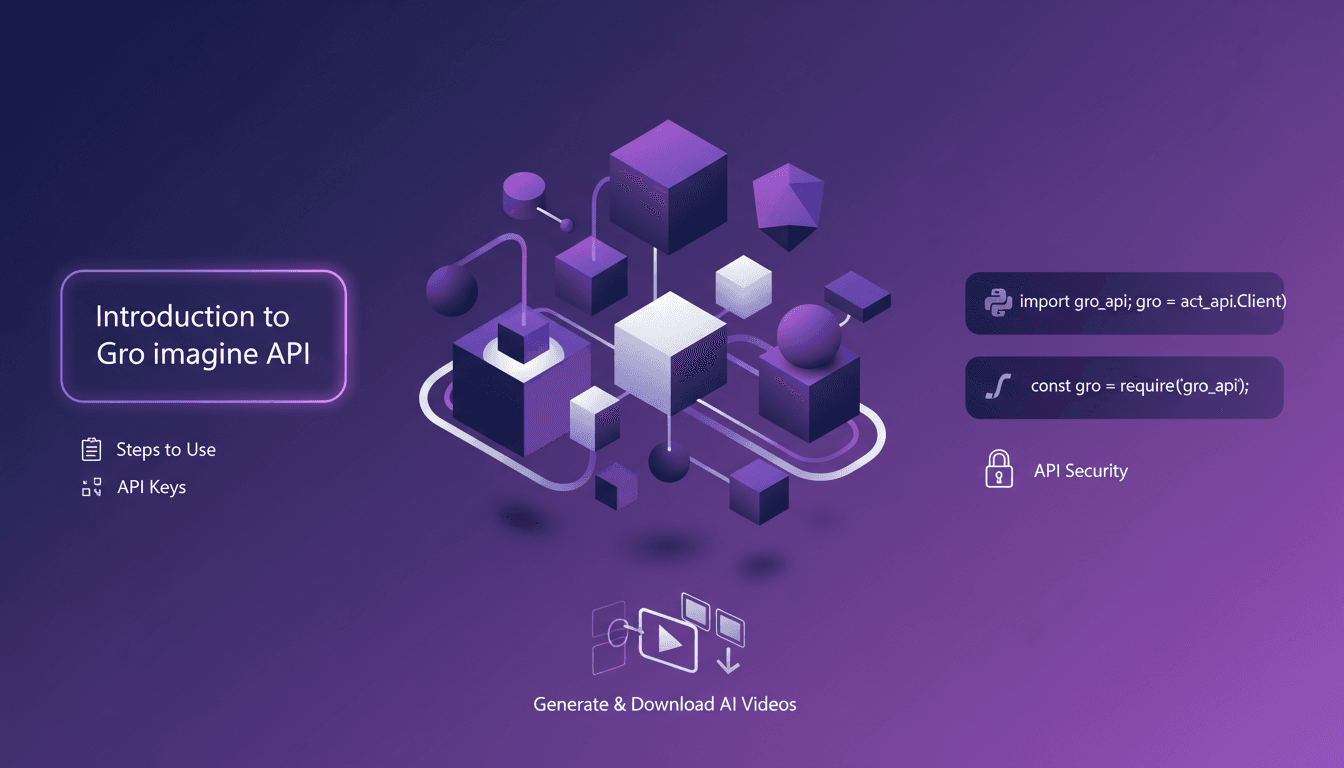

I dove into the Gro Imagine API, and trust me, it's surprisingly straightforward once you get your hands dirty. The aim is to turn text into video, a real game changer in our field. But like any tool, it has its quirks: the API supports up to 15 seconds per video, and going past that is where you run into issues. Here's how I set it all up. First, I made sure my API keys were securely locked down (never skip this step). Then I used Python to interact with the API, with JavaScript as an alternative. Finally, I generated and downloaded the AI videos. Let's dive into a practical, concrete overview!

Getting Started with the Gro Imagine API

To kick things off with the Gro Imagine API, the first thing I did was register for API access and get my API key. That's essential: without it, you can't interact with the API at all. Treat the key like a banking password. I stored it in an environment variable rather than in the code. It might sound basic, but overlooking this at the start can cost you dearly.

Watch out: the initial setup can trip you up if you don't pay attention to security. I learned the hard way that it's better to spend a few minutes securing your keys than to chase data leaks later.
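Here's a minimal sketch of reading the key from an environment variable in Python. The variable name `GRO_IMAGINE_API_KEY` is my own choice for illustration, not something the API mandates.

```python
import os


def load_api_key(env_var: str = "GRO_IMAGINE_API_KEY") -> str:
    """Return the API key stored in an environment variable.

    The default variable name is a hypothetical choice for this sketch.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        # Fail early with a clear message instead of sending unauthenticated requests.
        raise RuntimeError(
            f"Set the {env_var} environment variable before calling the API."
        )
    return key
```

Failing fast when the variable is missing keeps the problem visible at startup rather than halfway through a request.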

Step-by-Step: Using Gro Imagine API

To get the API working, I followed a simple three-step process. First, I prepared my text input for video generation; the key here is to craft your prompt carefully. Second, I sent it to the API with an HTTP request from Python. Third, I read the response to get the generated video. Be careful not to exceed the 15-second limit on video length. It's a significant boundary.

The process is incredibly straightforward: prepare, send, receive. But you have to stay vigilant about details like video length.
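The prepare, send, receive flow can be sketched roughly like this, using only Python's standard library. The endpoint URL, the JSON field names, and the Bearer authorization scheme are all assumptions on my part, so check them against the official documentation before relying on them. Only the 15-second cap comes from the article itself.

```python
import json
import urllib.request

MAX_DURATION_SECONDS = 15  # limit mentioned in this article

# Hypothetical endpoint: replace with the URL from the official documentation.
API_URL = "https://api.example.com/v1/videos"


def build_video_request(
    prompt: str, duration_seconds: int, api_key: str
) -> urllib.request.Request:
    """Prepare: validate the input and build the HTTP request (nothing is sent yet)."""
    if not prompt.strip():
        raise ValueError("The prompt must not be empty.")
    if not 0 < duration_seconds <= MAX_DURATION_SECONDS:
        raise ValueError(
            f"Duration must be between 1 and {MAX_DURATION_SECONDS} seconds."
        )
    # Field names are assumptions, not the documented schema.
    payload = {"prompt": prompt.strip(), "duration": duration_seconds}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_request(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send and receive: perform the call and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Validating the duration before sending means an over-long clip is rejected locally instead of costing a round trip to the API.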

Coding with Python and JavaScript

I integrated Python code to send requests and handle responses. Although JavaScript offers similar capabilities, I found Python more intuitive for this task. It's crucial to handle errors and exceptions to avoid crashes. Efficiency tip: Keep your code modular to adapt easily.

I've seen colleagues get caught by monolithic codebases. Trust me, keeping code modular saves you time and headaches when it comes to future adaptations.
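To make the error-handling point concrete, here's a small, reusable retry helper. It's a sketch under my own assumptions: the choice to retry only on network failures, HTTP 429, and 5xx responses, and the backoff values, are illustrative rather than anything the API prescribes.

```python
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    action: Callable[[], T], attempts: int = 3, base_delay: float = 1.0
) -> T:
    """Run `action`, retrying transient failures with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except urllib.error.HTTPError as error:
            # Most 4xx responses (bad key, invalid prompt) will not succeed on retry.
            retryable = error.code == 429 or error.code >= 500
            if not retryable or attempt == attempts:
                raise
        except urllib.error.URLError:
            # Connection-level failure: retry unless this was the last attempt.
            if attempt == attempts:
                raise
        # Wait base_delay, then 2x, then 4x, and so on, before the next attempt.
        time.sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

Because the helper takes any zero-argument callable, it stays separate from the request code, which is the kind of modularity that makes later changes cheap.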

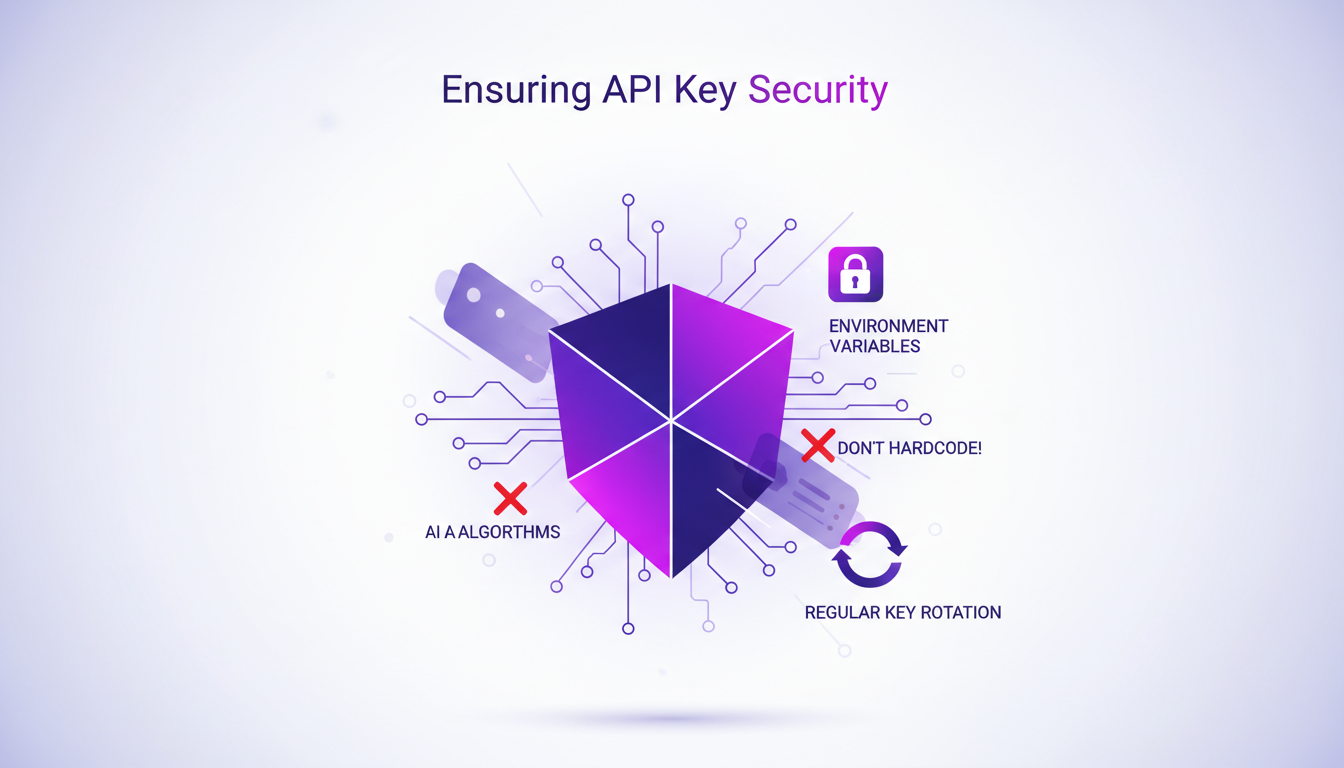

Ensuring API Key Security

To protect my API key, I used environment variables to hide it from prying eyes. It's tempting to hardcode these keys, but don't fall into that trap. Regularly rotating API keys adds an extra layer of security. Consider using a secrets manager for additional peace of mind.

I've seen too many developers underestimate the importance of securing their API keys, often with disastrous consequences. Don't be the one who learns this lesson the hard way.
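For rotation, even a tiny check helps you remember when a key is due. This sketch assumes you record the date the key was issued somewhere yourself; the 90-day default is an arbitrary example, not a recommendation from the API provider.

```python
from datetime import date, timedelta


def key_needs_rotation(issued_on: date, today: date, max_age_days: int = 90) -> bool:
    """Return True once the key is at least `max_age_days` old.

    The 90-day default is an illustrative value; pick a period that fits your policy.
    """
    return today - issued_on >= timedelta(days=max_age_days)
```

You could run a check like this at application startup and log a warning when it returns True.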

Generating and Downloading Videos

I generated a video at 480p and learned how much resolution settings matter. The API supports up to 15 seconds per video, a limit to keep in mind. Downloading comes down to handling file streams efficiently. Sometimes it's faster to use a cloud service for storage and retrieval.
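Here's one way the streaming download could look in Python, writing the file in chunks instead of loading the whole video into memory. I'm assuming the API hands back a direct URL to the finished video; the function names and chunk size are mine.

```python
import shutil
import urllib.request
from pathlib import Path
from typing import BinaryIO

CHUNK_SIZE = 64 * 1024  # 64 KiB per read, an arbitrary but reasonable size


def save_stream(
    source: BinaryIO, destination: Path, chunk_size: int = CHUNK_SIZE
) -> int:
    """Copy a binary stream to disk in chunks and return the file size in bytes."""
    destination.parent.mkdir(parents=True, exist_ok=True)
    with destination.open("wb") as output:
        shutil.copyfileobj(source, output, chunk_size)
    return destination.stat().st_size


def download_video(video_url: str, destination: Path, timeout: float = 120.0) -> int:
    """Stream the video at `video_url` (assumed to be a direct link) to `destination`."""
    with urllib.request.urlopen(video_url, timeout=timeout) as response:
        return save_stream(response, destination)
```

Keeping `save_stream` separate from the network call means the same function works for any file-like source, including a cloud storage client.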

In conclusion, with this setup, you can build software and applications on the Gro Imagine API. Take advantage of this opportunity to create innovative multimedia content.

Navigating the Gro Imagine API isn't just about writing code. It's about seeing the whole workflow and anticipating pitfalls. First, lock down your API keys. Then follow the simple three-step process and, bam, you're turning text into video. But watch out: we're capped at 15 seconds per clip. No worries, Python and JavaScript snippets have your back for easy API interaction. And always think about security. It's crucial.

Now, envision the potential: transforming text ideas into videos in mere seconds. It's almost magic. But keep your excitement in check, because there are limits to manage.

Ready to dive in? Secure your API key, follow the steps, and start generating those videos. Share your experiences and any tips you uncover. And for a deeper dive, check out the full video here: YouTube link. It's worth the watch.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

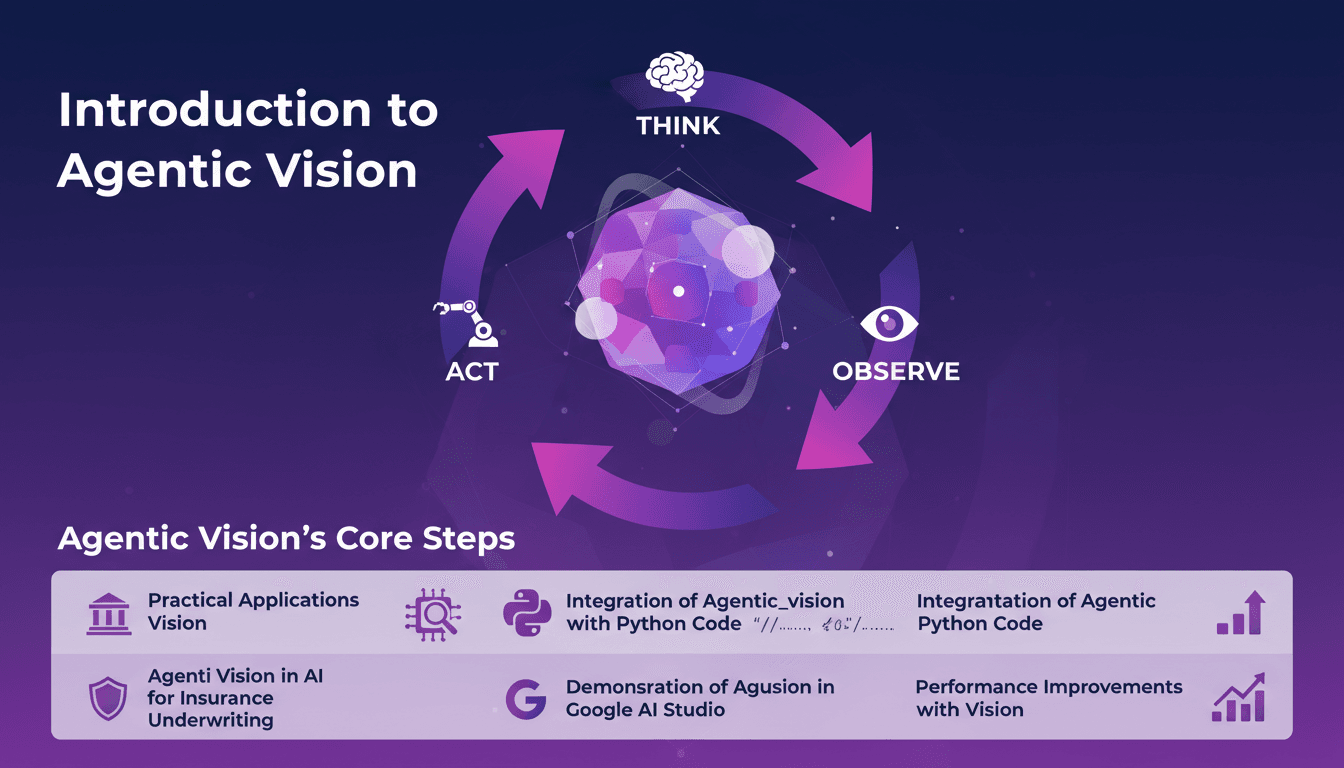

Agentic Vision: Boost AI with Python Integration

I remember the first time I stumbled upon Agentic Vision. It was like a light bulb moment, realizing how the Think, Act, Observe framework could revolutionize my AI projects. I integrated this approach into my workflows, especially for insurance underwriting, and the performance leaps were remarkable. Agentic Vision isn't just another AI buzzword. It's a practical framework that can truly boost your AI models, especially when paired with Python. Whether you're in insurance or any other field, understanding this can save you time and increase efficiency. In this video, I'll walk you through how I applied Agentic Vision with Python, and the performance improvements I witnessed, especially in Google AI Studio.

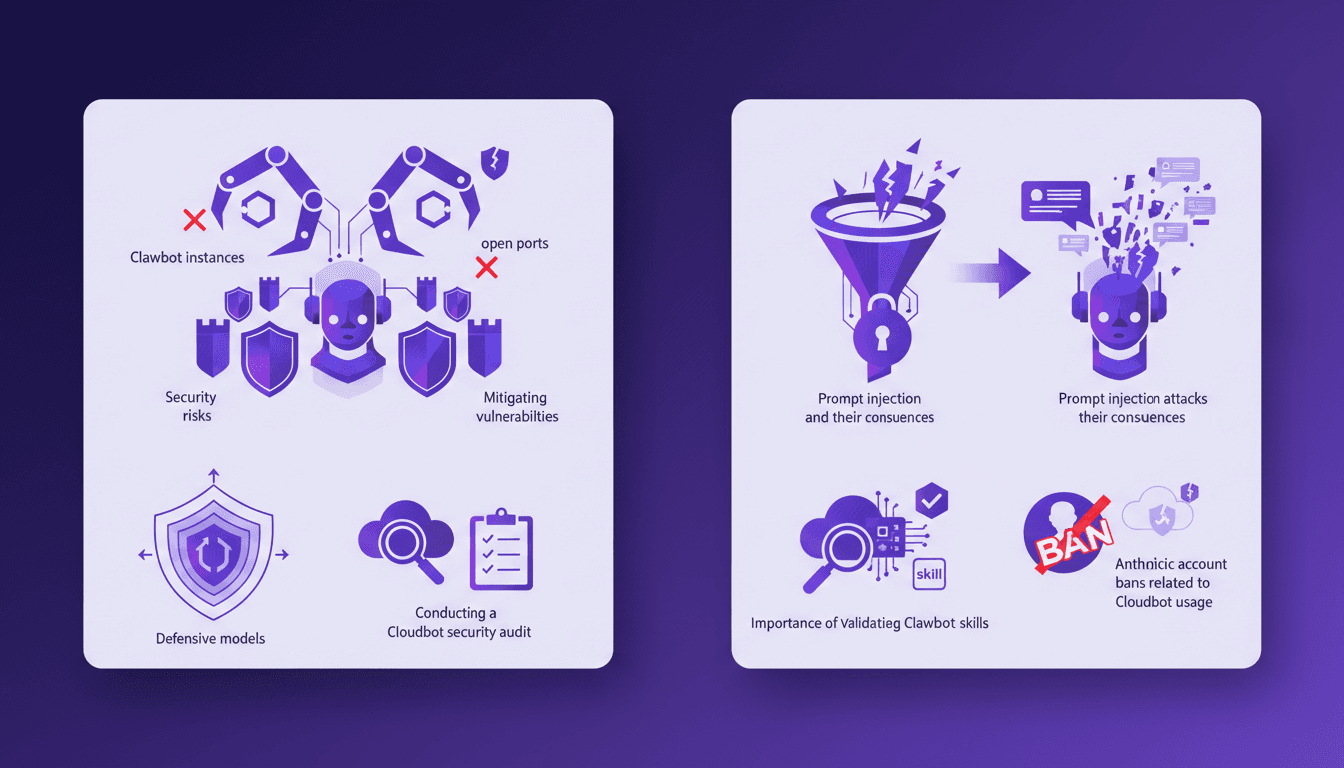

Securing Clawbot: Protect Your Open Ports

I've been there—staring at a Clawbot instance with ports wide open, feeling the chill of potential security breaches. So, how did I secure my setup and avoid costly mistakes? Let's dive into Clawbot vulnerabilities, from prompt injections to weak skill validations. First, I shut those darn open ports. Then, I beef up skill validations to prevent Clawbot from doing things it shouldn't. But watch out for prompt injection attacks—they're sneaky and Anthropic isn't shy about banning accounts. I share my steps to audit your Clawbot system's security and protect your digital assets efficiently.

Kimmy K2.5: Mastering the Agent Swarm

I remember the first time I dove into the Kimmy K2.5 model. It was like stepping into a new AI era, where the Agent Swarm feature promised to revolutionize parallel task handling. I've spent countless hours tweaking, testing, and pushing this model to its limits. Let me tell you, if you know how to leverage it, it's a game-changer. With 15 trillion tokens and the ability to manage 500 coordinated steps, it's an undisputed champion. But watch out, there are pitfalls. Let me walk you through harnessing this powerful tool, its applications, and future implications.

Ollama Launch: Tackling Mac Challenges

I remember the first time I fired up Ollama Launch on my Mac. It was like opening a new toolbox, gleaming with tools I was eager to try out. But the real question is how these models actually perform. In this article, we'll dive into Ollama Launch features, put the GLM 4.7 flash model through its paces, and see how Claude Code stacks up. We'll also tackle the challenges of running these models locally on a Mac and discuss potential improvements. If you've ever tried running a 30-billion parameter model with a 64K context length, you know what I'm talking about. So, ready to tackle the challenge?

Clone Any Voice for Free: Qwen TTS Revolutionizes

I remember the first time I cloned a voice with Qwen TTS—it was like stepping into the future. Imagine having such a powerful tool, and it's open source, right at your fingertips. This isn't just theory; it's about real-world application today. Last June, Qwen announced their TTS models, and by September, the Quen 3 TTS Flash with multilingual support was ready. For anyone interested in voice cloning and multilingual speech generation, this is a true game changer. With models ranging from 0.6 billion to 1.7 billion parameters, the possibilities are vast. But watch out, there are technical limits to be mindful of. In this article, I'll guide you through multilingual capabilities, open-source release, and emotion synthesis. Get ready to explore how you can leverage this tech today.