Minimax M2.5: Unpacking Strengths and Limits

I've been knee-deep in the Minimax M2.5, and as soon as I hooked it into my workflow, I knew it wasn't just another model on the shelf. At just $120 for 50 tokens per second, its affordability made me rethink my entire setup. But it's not just about the price tag: what's under the hood is just as impressive. In a crowded AI model landscape, the M2.5 stands out for its technical specs and efficiency. So let's look at what makes this model tick: its reinforcement learning strategies, its technical specifications, how it measures up against the competition, and where it falls short. I'll also share insights from OpenHands' benchmarking, discuss the challenges of training and deploying it, and cover potential use cases. Stick with me, because this model might just change how you approach your work.

Affordability and Pricing of Minimax M2.5

When I first came across the Minimax M2.5, the price tag grabbed my attention: a model at $120 offering 50 tokens per second. For someone who's dealt with costly AI models, this was a steal. Then there's the Lightning model at $240, which doubles the throughput to 100 tokens per second. Compared with the $15-$20/hour charged for frontier models, it's almost a giveaway.

With operational costs as low as $1/hour, the M2.5 is well suited to budget-conscious projects. In my agency, where every dollar counts, those savings are crucial. The price-performance ratio makes it accessible even to startups with limited funds.

- $120 model with 50 tokens/sec

- $240 Lightning model doubling throughput to 100 tokens/sec

- $1/hour operational cost

- Ideal for budget-conscious projects
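To put these figures side by side, here is a minimal sketch of the per-token arithmetic. It assumes the $1/hour operational figure corresponds to continuous output at 50 tokens per second, and that the $120 and $240 price points are directly comparable; the article doesn't state the billing units, so treat the numbers as illustrative.

```python
SECONDS_PER_HOUR = 3600


def tokens_per_hour(tokens_per_second: float) -> float:
    """Tokens generated in one hour of continuous output."""
    return tokens_per_second * SECONDS_PER_HOUR


def cost_per_million_tokens(hourly_cost: float, tokens_per_second: float) -> float:
    """Dollar cost of one million tokens at a given hourly rate and throughput."""
    return hourly_cost / tokens_per_hour(tokens_per_second) * 1_000_000


# Assumption: $1/hour buys continuous generation at 50 tokens/sec.
standard = cost_per_million_tokens(hourly_cost=1.0, tokens_per_second=50)

# Under the article's figures, doubling both price ($120 -> $240) and throughput
# (50 -> 100 tokens/sec) leaves the per-token cost unchanged.
price_ratio = 240 / 120
throughput_ratio = 100 / 50
per_token_ratio = price_ratio / throughput_ratio

print(f"{tokens_per_hour(50):,.0f} tokens/hour")
print(f"${standard:.2f} per million tokens")
print(f"Lightning vs standard cost per token: {per_token_ratio:.1f}x")
```

Under these assumptions, the Lightning tier buys speed rather than a lower price per token.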

Comparing Minimax M2.5 with Competitors

To get a sense of where the M2.5 stands, I compared its performance with models like Claude Opus and Google's offerings. The M2.5 shines on cost efficiency: while those competitors can cost $15-$20/hour, the M2.5 offers similar performance for a fraction of the price.

Its speed, cost, and token throughput are its strong points. But be aware that the lower price comes with trade-offs in specific feature sets. Endorsements from OpenHands and Carnegie Mellon add credibility, but every project has its own needs.

- Comparison with Claude Opus and Google's models

- Cost efficiency without sacrificing speed

- Endorsements by OpenHands and Carnegie Mellon
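The cost gap compounds quickly for long-running workloads. The sketch below compares monthly spend using the article's hourly figures ($1/hour for the M2.5 versus $15-$20/hour for competing models); the 200-hour monthly workload is a hypothetical example, not a measured figure.

```python
def monthly_cost(hourly_rate: float, hours_per_month: float) -> float:
    """Total spend for a month of usage at a flat hourly rate."""
    return hourly_rate * hours_per_month


HOURS = 200  # hypothetical monthly workload

m25 = monthly_cost(1.0, HOURS)
competitor_low = monthly_cost(15.0, HOURS)
competitor_high = monthly_cost(20.0, HOURS)

print(f"M2.5: ${m25:,.0f}")
print(f"Competitors: ${competitor_low:,.0f} to ${competitor_high:,.0f}")
print(f"Cost multiple: {competitor_low / m25:.0f}x to {competitor_high / m25:.0f}x")
```

Because both sides scale linearly with hours, the 15x to 20x multiple holds at any workload size. What changes with volume is the absolute dollar gap.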

Reinforcement Learning Strategies and Technical Specs

Let's dive into reinforcement learning (RL). The M2.5 uses both on-policy and off-policy learning strategies to optimize its performance. I've experimented with asynchronous scheduling strategies to squeeze out more performance, and the Mixture of Experts (MoE) architecture balances model capacity against computational efficiency.
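To make the on-policy/off-policy distinction concrete: on-policy updates use samples drawn from the current policy, while off-policy updates reuse samples generated by an older policy and correct for the mismatch with an importance ratio. The sketch below applies a generic PPO-style clipped ratio to a toy three-action softmax policy. It illustrates the general technique and is not a description of how MiniMax actually trains the M2.5; the logits and the `eps=0.2` clip range are illustrative assumptions.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw logits into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def clipped_surrogate(new_probs, old_probs, action, advantage, eps=0.2):
    """PPO-style objective for one sampled action.

    ratio = pi_new(a) / pi_old(a). A fresh on-policy sample gives ratio 1.0;
    a stale off-policy sample gives ratio != 1.0, and clipping bounds its influence.
    """
    ratio = new_probs[action] / old_probs[action]
    clipped = max(min(ratio, 1 + eps), 1 - eps)
    return min(ratio * advantage, clipped * advantage)


old_policy = softmax([1.0, 0.5, -0.5])  # policy that generated the sample
new_policy = softmax([1.8, 0.2, -0.5])  # policy after some updates

# On-policy: the sample comes from the policy being updated, so the ratio is 1.
on_policy = clipped_surrogate(old_policy, old_policy, action=0, advantage=1.0)
# Off-policy: the sample is stale, so it is reweighted and then clipped at 1 + eps.
off_policy = clipped_surrogate(new_policy, old_policy, action=0, advantage=1.0)

print(on_policy)
print(off_policy)
```

Here the raw ratio for the stale sample is about 1.4, and clipping caps its contribution at 1.2, which keeps a single outdated trajectory from dragging the policy too far.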

The technical specs show impressive token throughput and robust processing power. However, watch out for potential pitfalls in training. I’ve often seen poorly optimized setups lead to mediocre performance.
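One common asynchronous scheduling pattern, and a frequent source of the training pitfalls mentioned above, is letting rollouts of different lengths finish out of order while the trainer keeps updating the policy. Samples that arrive too late were generated by an outdated policy. The sketch below simulates this with Python's `asyncio`. The rollout lengths, the policy-version counter, and the `max_staleness` cutoff are illustrative assumptions, not details of the M2.5 training setup.

```python
import asyncio


async def rollout(rollout_id: int, steps: int, policy_version: int) -> tuple[int, int]:
    """Simulate one trajectory; more steps means a longer wall-clock time."""
    await asyncio.sleep(steps * 0.001)
    return rollout_id, policy_version


async def train(num_rollouts: int = 6, max_staleness: int = 2) -> tuple[list[int], list[int]]:
    policy_version = 0
    accepted, dropped = [], []
    # All rollouts start from policy version 0 but have different lengths,
    # so they finish out of order (shortest first).
    tasks = [
        asyncio.create_task(rollout(i, steps=10 * (num_rollouts - i), policy_version=policy_version))
        for i in range(num_rollouts)
    ]
    # Consume each trajectory as soon as it finishes instead of waiting for the whole batch.
    for finished in asyncio.as_completed(tasks):
        rollout_id, version = await finished
        staleness = policy_version - version
        if staleness > max_staleness:
            dropped.append(rollout_id)  # generated by a policy too far behind the current one
        else:
            accepted.append(rollout_id)
            policy_version += 1  # one simulated gradient update per accepted rollout
    return accepted, dropped


accepted, dropped = asyncio.run(train())
print("accepted:", accepted)
print("dropped:", dropped)
```

Dropping stale samples is only one option. Reweighting them with an importance ratio, as in the on-policy/off-policy sketch, is another, and a poorly chosen staleness budget is exactly the kind of setup detail that quietly degrades performance.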

- Reinforcement learning (on-policy vs off-policy)

- Asynchronous scheduling strategies

- Mixture of Experts model

- Token throughput and processing power
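The efficiency argument behind a Mixture of Experts comes from sparse routing: a gating network scores every expert, but only the top-k experts actually run for each token. The sketch below shows top-2 gating over four toy "experts" (plain scalar functions). The expert count, `k`, and the gate logits are illustrative assumptions, since the article doesn't give the M2.5's routing configuration.

```python
import math
from typing import Callable


def top_k_gating(gate_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their softmax weights."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - m) for i in top}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in top]


def moe_forward(
    x: float,
    experts: list[Callable[[float], float]],
    gate_logits: list[float],
    k: int = 2,
) -> tuple[float, int]:
    """Combine outputs of only the selected experts; return (output, experts_run)."""
    routes = top_k_gating(gate_logits, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, len(routes)


# Four toy experts; in a real model each would be a feed-forward block.
experts = [lambda x: x * 2, lambda x: x + 1, lambda x: -x, lambda x: x ** 2]
gate_logits = [2.0, 2.0, -1.0, 0.5]  # hypothetical router scores for one token

out, active = moe_forward(3.0, experts, gate_logits, k=2)
print(f"output={out:.2f}, experts run={active} of {len(experts)}")
```

Only two of the four experts execute for this token, so compute per token scales with `k` rather than with the total number of experts. That is how MoE models keep large capacity without paying for all of it on every token.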

OpenHands' Benchmarking and Use Cases

OpenHands' benchmarking offers valuable insight into real-world performance. The M2.5 shines across varied use cases, from AI research to commercial applications, and these results, together with the endorsements, highlight both its practical impact and its limitations.

It excels in certain scenarios but may fall short in others. Across varied environments, I've found it crucial to account for the specifics of each deployment.

- OpenHands benchmarking

- Varied use cases

- Practical impacts and limitations
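Since benchmark numbers don't always transfer to your own deployment, it's worth measuring throughput yourself. Below is a minimal sketch that times any token stream and reports tokens per second. `fake_stream` is a hypothetical stand-in for a real streaming client; you would swap in your own API call.

```python
import time
from typing import Iterable, Iterator


def measure_throughput(stream: Iterable[str]) -> tuple[int, float]:
    """Count tokens from a stream and return (token_count, tokens_per_second)."""
    start = time.perf_counter()
    count = 0
    for _token in stream:
        count += 1
    elapsed = time.perf_counter() - start
    return count, (count / elapsed if elapsed > 0 else float("inf"))


def fake_stream(n_tokens: int, delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a model's streaming response."""
    for i in range(n_tokens):
        time.sleep(delay_s)
        yield f"tok{i}"


count, tps = measure_throughput(fake_stream(n_tokens=50, delay_s=0.02))
print(f"{count} tokens at ~{tps:.0f} tokens/sec")
```

Run it against your own prompts and network path: measured throughput will reflect queueing, prompt length, and latency that headline figures leave out.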

Release, Availability, and Deployment Challenges

The M2.5 is available now, but how do you actually put it into production? The main deployment challenge is integrating it into existing systems. The learning curve can be steep, but with the right resources, the long-term savings justify the initial investment.

Keep an eye out for updates and support from Minimax and its partners: I've often seen projects fail for lack of ongoing maintenance.

- Availability and acquisition of the M2.5

- Integration and deployment challenges

- Balancing initial costs and long-term savings
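To check whether the long-term savings justify the initial investment in your case, a simple break-even calculation helps. The one-time integration cost and monthly usage below are hypothetical placeholders. The hourly rates are the article's $1/hour for the M2.5 and the low end of the $15-$20/hour competitor range.

```python
import math


def break_even_months(
    integration_cost: float,
    old_hourly: float,
    new_hourly: float,
    hours_per_month: float,
) -> int:
    """Months of savings needed to recover a one-time migration cost (rounded up)."""
    monthly_savings = (old_hourly - new_hourly) * hours_per_month
    if monthly_savings <= 0:
        raise ValueError("the new setup must be cheaper per hour to ever break even")
    return math.ceil(integration_cost / monthly_savings)


# Hypothetical: $10,000 of integration work and 100 hours of usage per month.
months = break_even_months(
    integration_cost=10_000, old_hourly=15.0, new_hourly=1.0, hours_per_month=100
)
print(f"Break-even after {months} months")
```

If the break-even point lands beyond your planning horizon, or if ongoing maintenance costs eat into the monthly savings, the migration may not pay off.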

The Minimax M2.5 isn't just about saving money; it's a true powerhouse. I've put its efficient reinforcement learning strategies to the test, and for just $120, you won't find anything better. Take the 50 tokens per second model: it delivers impressive processing speed for its price. And if you're looking for even more punch, the $240 model with 100 tokens per second is a real game changer.

- Unbeatable value for money: At $120, it's a great entry point for AI projects without breaking the bank.

- Adjustable performance: The 50 and 100 tokens per second versions let you choose based on your needs.

- Efficient learning strategies: Reinforcement learning methods are optimized for daily use.
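To choose between the 50 and 100 tokens per second versions, it helps to translate throughput into wait time for the responses you actually generate. This sketch assumes steady generation at the stated rates and ignores time-to-first-token and network overhead. The response lengths are illustrative.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Approximate wall-clock time to stream num_tokens at a steady rate."""
    return num_tokens / tokens_per_second


for length in (500, 2_000, 8_000):
    standard = generation_seconds(length, 50)
    lightning = generation_seconds(length, 100)
    print(f"{length:>5} tokens: {standard:6.1f}s at 50 tok/s, {lightning:6.1f}s at 100 tok/s")
```

For short chat-style replies the difference is a few seconds. For long agentic outputs it can exceed a minute per response, which is where the faster tier earns its price.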

Honestly, now's the time to bring it into your workflow. Ready to see how it can transform your AI projects? Dive in, but as always, test it in your own context to see how it performs. For a deeper dive, check out the original video.

Thibault Le Balier

Co-founder & CTO

Coming from the tech startup ecosystem, Thibault has developed expertise in AI solution architecture that he now puts at the service of large companies (Atos, BNP Paribas, beta.gouv). He works on two axes: mastering AI deployments (local LLMs, MCP security) and optimizing inference costs (offloading, compression, token management).

Related Articles

Discover more articles on similar topics

WebM MCP: Use Cases and Future Prospects

When I first heard about WebM MCP, I was skeptical. But after diving in, wrapping my head around its APIs, and seeing the potential, I realized it's a game changer for AI agent deployment. Developed by Google and Microsoft, WebM MCP offers a new way to handle media processing with AI agents. In this article, I share my hands-on experience, pitfalls to avoid, and how I integrated this tool into my daily workflow. Imagine managing thousands of tokens for each processed image, with just two APIs to master. I'll guide you through the benefits, use cases, and future prospects of this powerful tool.

LLMs Optimization: RLVR and OpenAI's API

I've been knee-deep in fine-tuning large language models (LLMs) using Reinforcement Learning via Verifiable Rewards (RLVR). This isn't just theory; it's a game of efficiency and cost, with OpenAI’s RFT API as my main tool. In this tutorial, I'll walk you through how I make it work. We're diving into the training process, tackling imbalanced data, and comparing fine-tuning methods, all while keeping a close eye on costs. This is our third episode on reinforcement learning with LLMs, and we'll also discuss OpenAI's RFT API alternatives. Quick heads up: at $100 per hour, it escalates fast!

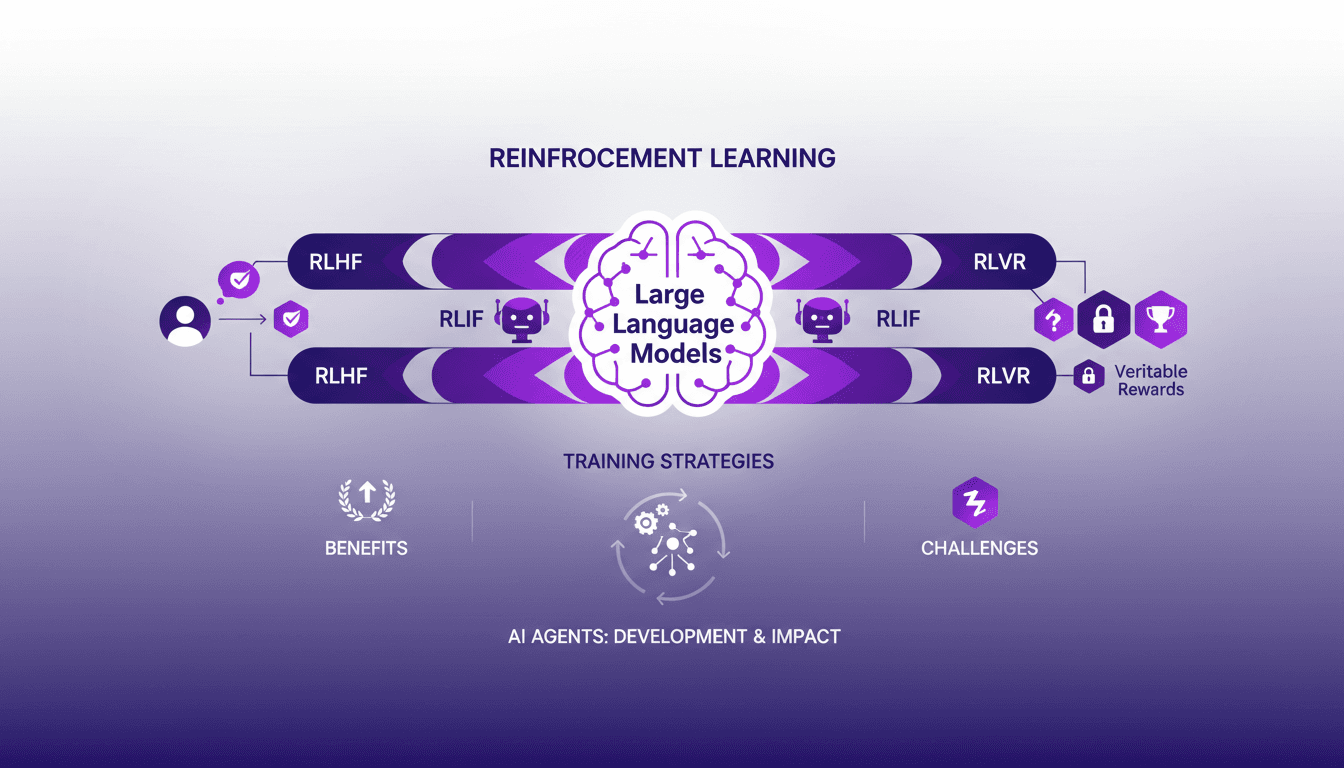

Reinforcement Learning for LLMs: New AI Agents

I remember the first time I integrated reinforcement learning into training large language models (LLMs). It was 2022, and with the development of ChatGPT fresh in my mind, I realized this was a real game-changer for AI agents. But be careful—there are trade-offs to consider. Reinforcement learning is revolutionizing how we train LLMs, offering new ways to enhance AI agents. In this article, I'll take you through my journey with RL in LLMs, sharing practical insights and lessons learned. I'm diving into reinforcement learning with human feedback (RLHF), AI feedback (RLIF), and verifiable rewards (RLVR). Get ready to explore how these approaches are transforming the way we design and train AI agents.

Seedance AI 2.0: Revolutionizing Video Creation

I dove into Seedance AI 2.0 expecting just another AI tool, but what I found was a game changer. This isn't just tech hype—it's a real shift in video creation. With Seedance AI 2.0, we're witnessing a revolution in leveraging AI for video content. It's not just about flashy features; it's about tangible impacts on production workflows. Compared with Cling 3.0 and other models, Seedance AI 2.0 stands out with its technical capabilities and market impact. Chinese companies saw their stocks rise by 10 to 20% in a single trading day. And that native 2048 x 1080 resolution, it's a game changer! I'm sharing how I've integrated it into my workflow and the financial implications to consider. Get ready to see how this technology might redefine the future of video content creation.

OpenClaw: Local Execution vs Cloud Solutions

I remember the first time I ran OpenClaw on my local machine. It felt like unleashing a beast capable of doing everything from device control to data discovery. What really blew my mind was how it stacked up against cloud solutions. In this post, I'll dive into how OpenClaw is shaking up the tech landscape with its local execution. We'll explore its device control capabilities, data discovery potential, and user integration. Trust me, OpenClaw is a game changer, but watch out for the pitfalls and contexts where it might surprise you.